Deciding what rhythm FPS to make

BPM: Bullets Per Minute is a rhythm-action FPS, where you fire, reload, jump, and dodge - all on the beat.

When we sat down to make it, we had several options on how to approach its design:

1. Note charts (e.g. Guitar Hero, Rock Band, Frets on Fire). Every track has metadata that defines “attack”, “dodge”, and “reload” beats. This metadata informs enemy behavior. The note charts tell the AI what to do.

Pros: Great curated experiences, the potential for high-quality user-generated content.

Cons: Very expensive to produce per minute of game. Low replayability.

2. Procedural music (e.g. PaRappa the Rapper or Wii Music). The music is made of track-segments. The track-segments are dynamic and an algorithm selects the next track-segment to play. The AI then attacks on specific markers for each track segment.

Imagine a drum roll segment played as the enemies attack quickly on sixteenth notes, followed by a backbeat where the enemies attack on the snare hits.

Pros: Dynamic, replayable.

Cons: Potentially low-quality music. Music is defined by the player, rather than a song structure, making for a poor musical journey.

3. Just the beat (e.g. Crypt of the NecroDancer). The AI sticks to the beat of the song, but its actions aren’t dependent on the track's state.

Pros: Dynamic, replayable, music can have its own musical journey, easier to make more content, game design isn’t dependent on music deliverables.

Cons: Music isn’t tailored to your experience.

As a small team, we wanted to make a pretty big game that we could play for many hours, so we decided to go with the “just the beat” approach. We also wanted to enjoy our game while we were developing it, so we chose a roguelike structure. A roguelike’s random level generation meant that we could enjoy the game's development, as it would always be fresh for us to play. Since a roguelike requires high replayability, we knew the “note charts” approach wouldn’t give us the 30 hours (at the high end) of gameplay we needed to have in BPM.

We did decide to have a few instances of procedural music in BPM, mainly as stingers around finishing bosses. They play as a player-controlled crescendo when you have defeated a boss.

Coordinating gameplay

BPM’s gameplay is essentially a step sequencer running in 4/4 at 88 beats per minute. An enemy can request to play an “attack” in a few beats’ time. They do this by querying the step sequencer to see if attacking on that beat would be overly cacophonous.

Enemies tell the step sequencer, “I wish to attack the player in two beats, forcing them to dodge”. The step sequencer responds “yes” or “no”. The step sequencer's response is based on whether too many attacks are happening on that beat or if another enemy has “exclusive” access to that beat. This way, the whole of BPM’s gameplay is informed by this step sequencer.
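To make the request-and-response idea concrete, here is a minimal sketch in Python. It is not BPM's actual code: the class name `StepSequencer`, the `max_attacks_per_beat` limit, and the method names are all hypothetical, chosen only to illustrate an arbiter that refuses a beat when it is full or exclusively owned.

```python
from collections import defaultdict


class StepSequencer:
    """Hypothetical arbiter that grants or refuses attack requests per beat."""

    def __init__(self, max_attacks_per_beat=2):
        # max_attacks_per_beat is an assumed tuning value, not BPM's real limit.
        self.max_attacks_per_beat = max_attacks_per_beat
        self.current_beat = 0
        self._attackers = defaultdict(list)  # beat index -> enemy ids
        self._exclusive = {}                 # beat index -> owning enemy id

    def request_attack(self, enemy_id, beats_ahead, exclusive=False):
        """Return True if enemy_id may attack beats_ahead beats from now."""
        beat = self.current_beat + beats_ahead
        booked = self._attackers[beat]
        if beat in self._exclusive:
            return False  # another enemy owns this beat outright
        if exclusive and booked:
            return False  # cannot claim exclusivity on an occupied beat
        if len(booked) >= self.max_attacks_per_beat:
            return False  # too many attacks would land at once
        booked.append(enemy_id)
        if exclusive:
            self._exclusive[beat] = enemy_id
        return True

    def advance(self):
        """Move to the next beat and return the enemies that attack on it."""
        self.current_beat += 1
        self._exclusive.pop(self.current_beat, None)
        return self._attackers.pop(self.current_beat, [])
```

An enemy whose request is refused would simply ask again for a later beat, which is what keeps the combined attack pattern from turning into noise.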

This sequencer also governs the player's gameplay. The player's beat-locked actions can only be performed on a half-beat. For instance, the player can fire, dodge, or cast magic every half-beat. But on each one of these half-beats, they can perform only one action: reload, dodge, fire, or cast. (They can jump at any time, however, because it sucked when we made it so they had to jump on the beat.)
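The "one action per half-beat" rule can be sketched as a small gate. This is an illustration under assumptions, not the game's implementation: `PlayerActionGate` and `try_action` are hypothetical names, and the sketch only enforces the slot rule, leaving the question of whether an input was close enough to the half-beat to a separate timing check.

```python
BPM = 88
HALF_BEAT_SECONDS = 60.0 / BPM / 2  # about 0.341 s at 88 BPM


class PlayerActionGate:
    """Hypothetical gate allowing one beat-locked action per half-beat slot."""

    BEAT_LOCKED = {"fire", "reload", "dodge", "cast"}

    def __init__(self):
        self._last_used_slot = None

    def try_action(self, action, track_time_seconds):
        """Return True if the action is allowed at this point in the track."""
        if action not in self.BEAT_LOCKED:
            return True  # e.g. "jump" is allowed at any time
        # Snap the input to the nearest half-beat slot.
        slot = round(track_time_seconds / HALF_BEAT_SECONDS)
        if slot == self._last_used_slot:
            return False  # this half-beat already hosted an action
        self._last_used_slot = slot
        return True
```

For example, a `"fire"` at 0.0 s followed by a `"reload"` at 0.05 s would be refused, while the same `"reload"` at 0.34 s lands on the next half-beat and goes through.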

So, the major problem from a technical perspective for us was making sure we knew when “beats” were happening in the track. We experimented with solutions inside Unreal Engine, but at the time we weren’t able to synchronize the audio clock and game clock. We also wanted to transition between tracks without missing samples causing pops.

We chose to use Wwise as it solved three problems for us:

1. Having tracks that transition well.

2. Being able to mix a game that requires the music to slap and the sound effects to always be audible.

3. Syncing the beat.

Transitions and effects

Josh Sullivan: I was in charge of all the sound for BPM. My process for creating/mixing sound is quite a strange one. I have more than a decade of experience in video production, so when it came to using a program to mix and mash the sounds that would go into BPM, I used Sony Vegas (video editing software) and not an actual audio editing suite. I’ve always seen Vegas as Sound Forge (Sony’s audio editing suite) with just a video preview window anyway.

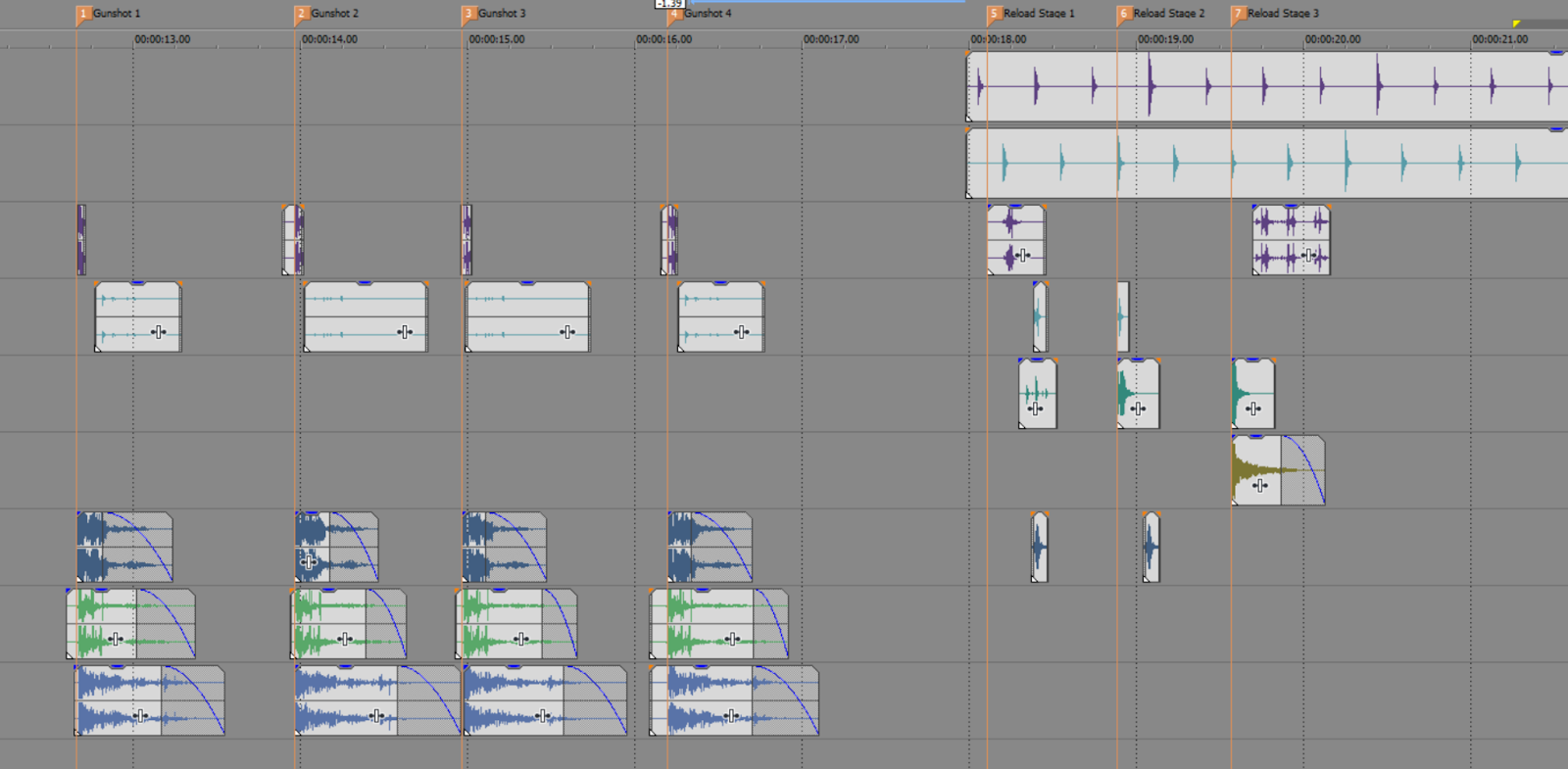

Fortunately for me, BPM’s soundtrack is 88 beats per minute. Because of this consistent tempo throughout the whole game, I could easily cut all of the audio sequences that had to be in time to a click track at 88 bpm. For example, a weapon’s reload stages in BPM are all tight to the beat (giving that Baby Driver feeling). I achieved this by putting an actual click track into the Vegas timeline and then cutting each sound to be in time with this physical click track. Each sound gets bounced to that single tempo. However, it’s worth noting that this method wouldn’t have worked if we had more than one tempo.

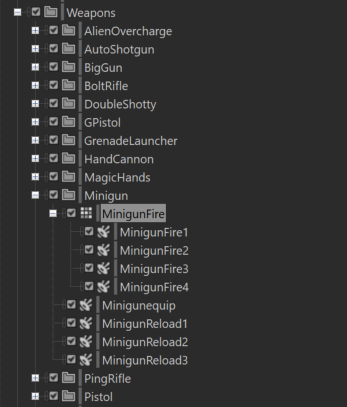

Once the sounds are ready, I simply import them into Wwise and give them positioning data (if it's needed). Then I limit the number of sound instances if required. An example where this is definitely required is the minigun, which fires every quarter note. Because of that rate of fire, the mix can get muddy, so it's best to limit it to one minigun shot sound at a time. Next I fiddle with the General Settings (voice, pitch, etc.). Finally, I set the sound to its respective Output Bus. It's worth noting that, for things like gunshots, I have at least four unique sounds for each gun. I put them all in a Random Container, so the gunfire doesn't start to feel repetitive to the player, even subconsciously.

After this is done, all I need to do is create an Event in Wwise for the corresponding audio. I didn’t get too complicated with Events. One thing I used them for was stopping previous sounds before starting new ones. An example of this is when enemies are making their chatter noises (grunting and growling to the beat). When the enemy’s pain Wwise Event is triggered, it not only plays the “ouch” Random Container, but also stops the enemy’s chatter.

When it comes to the music, using Music Switch Containers and Music Playlist Containers in Wwise made it relatively simple for me to transition one piece of music into another, all synced in time to the beat thanks to the time settings.

There is one instance in the game where some of the music isn’t supposed to be in time to the beat, and that's the boss finishers. When you defeat a boss, you are able to finish him off with no tempo playing; each note shot is in your own time, and each note has to loop infinitely until you fire the next shot. Even though they are technically “music”, I treated them as “sounds” in Wwise, as they were easier to implement as Wwise Events.

Mixing for a rhythm-action game

Sam Houghton: My primary goal when mixing BPM was to make the player feel like they were fronting a hellish rock orchestra. The weapons are the lead instrument in the mix, with the aim being exceptional levels of satisfaction when you get into the flow of the game and start making huge streaks. Conversely, when you fail to time a shot or a reload or an ability to the beat and no sound plays, it sounds empty and disappointing.

Because I also composed the music (alongside Joe Collinson), space had been left in the arrangements for these sounds. Consequently, there are plenty of comments online about the OST sounding great, but sounding even better in the game when you mix in the gunshots. These were really exciting to read, knowing that players had picked up on this intention. And after all, that’s exactly what a game soundtrack is for, to support the gameplay. It just does so more overtly in BPM than in many other genres of game.

Early on, I did play around with ducking the music channel when a player did an “on-beat interaction”, but the slight ducking really did nothing to enhance the satisfaction, and if anything just took away some of the energy of the game. So all I’m doing in Wwise is a few level tweaks here and there to make sure everything is clear and punchy in relation to the music. Josh Sullivan did a great job creating all of the sounds in the game, which made life very easy when it came to mixing.

Syncing the beat

David Jones: When syncing the game’s clock to the beat, I thought the best implementation would be the Borderlands solution, i.e., modifications to Wwise to hijack the MIDI calls to interrupt the game logic. At the end of every beat, we could request the state of input at that exact moment.

The perfect solution would be that every time the beat Event is called in Wwise, the controller inputs are polled almost immediately: an almost sample-based approach. Input and audio would be locked very closely together in the implementation, so that we could judge with the maximum possible precision whether the player’s inputs are “late” or “early” and by how many milliseconds, and then reject those inputs that are too out of time with the music. This solution, however, was untenable for a team of our size. This is the sort of job that would require a full-time audio programmer, as it would interfere with the whole input system of Unreal Engine and require modifications to Wwise. So we decided to go in a different direction.

Cheeky Hacks

Our solution is very simple and lightweight. We register a callback on our music Event and look for EAkCallbackType::MusicSyncBeat. Each time we receive one of these callbacks from the Music Switch Container, we find the difference between our game’s clock and the implicit progress we’ve made through the track. If this difference is greater than 10ms, we recalibrate our game’s clock and call an Event to all relevant actors.

We chose this simple calibration trick because our gameplay does not require anything more fancy. This keeps our enemies nicely in time with a ~10ms error, much smaller than our rhythm test window.
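The calibration trick can be sketched roughly as follows. This is an engine-agnostic illustration in Python rather than the Unreal/Wwise code: `BeatClock`, `tick`, and `on_music_sync_beat` are hypothetical names, the last one standing in for whatever handler receives the MusicSyncBeat callback. The sketch also assumes a fixed 88 BPM, that the first callback arrives one beat into the track, and that listeners are only notified when a recalibration happens.

```python
class BeatClock:
    """Game-side track clock, nudged back in line on each audio beat callback."""

    def __init__(self, bpm=88.0, tolerance_seconds=0.010):
        self.beat_seconds = 60.0 / bpm
        self.tolerance_seconds = tolerance_seconds  # the 10 ms threshold
        self.track_time = 0.0   # the game clock's idea of track position
        self.beats_heard = 0
        self.listeners = []     # callables taking the corrected drift in seconds

    def tick(self, delta_seconds):
        """Advance the game clock by one frame's delta time."""
        self.track_time += delta_seconds

    def on_music_sync_beat(self):
        """Handle one beat callback; return True if the clock was recalibrated."""
        self.beats_heard += 1
        # Progress through the track implied by the number of beats heard so far.
        implied = self.beats_heard * self.beat_seconds
        drift = self.track_time - implied
        if abs(drift) <= self.tolerance_seconds:
            return False
        self.track_time = implied  # snap the game clock to the audio clock
        for listener in self.listeners:
            listener(drift)
        return True
```

Because the clock is only corrected when drift exceeds the tolerance, actors that read `track_time` see at most roughly that much error between corrections.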

However, there are other errors you have to consider for the player’s inputs. The player error window is:

10ms from clock calibration, +[frametime]ms from frametime, +[frametime]ms extra from using the deferred renderer, +?ms from input device, +?ms from console processing, +?ms from TV/audio output processing.
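To get a feel for the size of the terms we can actually compute, here is a small worked example. The function name and the example frame rates are illustrative assumptions; the unknown device, console, and TV terms are deliberately left out, so the result is only a lower bound.

```python
def known_error_window_ms(fps, calibration_ms=10.0):
    """Sum the error terms that depend only on calibration and frame rate."""
    frametime_ms = 1000.0 / fps
    deferred_ms = frametime_ms  # one extra frame from the deferred renderer
    return calibration_ms + frametime_ms + deferred_ms


for fps in (30, 60, 144):
    print(f"{fps} fps: at least {known_error_window_ms(fps):.1f} ms")
```

At 60 frames per second this comes to about 43 ms before any hardware latency is added, and at 30 frames per second it is closer to 77 ms.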

This gives you a potentially huge error on some systems. We knew this error was highly variable per user. However, there is a much worse issue here, which is that the rhythm FPS has a “Cursed Problem”.

During his 2019 GDC talk, Riot Games' Alex Jaffe defined a Cursed Problem as an “unsolvable design problem, rooted in a conflict between core player promises”. In BPM, our player promises are: “you shoot on the beat” and “bullets are fired when you pull the trigger”—these two things conflict. The only way to fix this problem is to “win by giving up”.

In a beat-matching game like Guitar Hero, your inputs are offset by the latency that you set by calibrating your controller. Because your notes do not have any causal effects, they can be tested with the latency taken into account. The game compensates for your video latency by testing whether you achieved a rhythm input in the past. The game knows when the note should have been hit, when the note was hit, and what your TV latency is. So the game looks into the past to see if you were inside a window at: current time - latency time. This can be difficult to wrap your head around. It is for this reason you can see notes disappear below the line in Guitar Hero and appear to be successfully “strummed” despite passing the line. Your TV has latency and you are seeing this latency in real time. This however is not viable in an FPS, as your aim is defined at every moment in time.
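The "look into the past" test can be written in a couple of lines. This is a generic sketch of latency-compensated hit detection, not code from Guitar Hero or BPM; the function name and the 50 ms window are assumptions made for illustration.

```python
def note_was_hit(note_time, input_time, latency, window=0.05):
    """Judge an input against the moment the player actually perceived.

    All values are in seconds. `latency` is the calibrated display/controller
    delay; `window` is an assumed tolerance on either side of the note.
    """
    perceived_time = input_time - latency  # current time - latency time
    return abs(perceived_time - note_time) <= window


# A strum that arrives 90 ms after the note, on a setup with 80 ms of latency,
# is treated as only 10 ms late and counts as a hit.
print(note_was_hit(note_time=10.0, input_time=10.09, latency=0.08))
# Without compensation the same strum falls outside the window.
print(note_was_hit(note_time=10.0, input_time=10.09, latency=0.0))
```

This works because a note has no effect on the world until it is judged, so judging it late costs nothing. A bullet does not have that luxury.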

In BPM, we assume the user has less than 80ms of latency. If the user has more latency than that, we detect that during the startup calibration sequence and enable auto-rhythm. Auto-rhythm holds the player’s shots when they input until the next beat occurs. For users who have less than 80ms of latency, we delay their early shots so that they occur on the next beat and let their late shots fire immediately.
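The policy described above can be sketched like this. It is a simplified illustration, not the shipped logic: the function names are hypothetical, shots are quantized to whole beats at 88 BPM for simplicity, and the rhythm test window that rejects badly mistimed inputs is ignored.

```python
import math

BEAT_SECONDS = 60.0 / 88
LATENCY_LIMIT = 0.080  # the 80 ms threshold


def use_auto_rhythm(measured_latency):
    """Decide from the startup calibration result whether to hold every shot."""
    return measured_latency > LATENCY_LIMIT


def shot_fire_time(input_time, auto_rhythm, beat_seconds=BEAT_SECONDS):
    """Return the track time at which a shot pressed at input_time is fired."""
    next_beat = math.ceil(input_time / beat_seconds) * beat_seconds
    nearest_beat = round(input_time / beat_seconds) * beat_seconds
    if auto_rhythm:
        return next_beat       # hold the shot until the next beat
    if input_time < nearest_beat:
        return nearest_beat    # early: delay the shot onto the beat
    return input_time          # late (or dead on): fire immediately
```

The asymmetry is the "giving up" part: an early shot can be made to land on the beat, while a late shot can only fire as soon as possible, because the game cannot fire a bullet in the past.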

BPM also has a bunch of sorcery that forgives players for certain poorly timed actions in favor of player feel. There’s a lot of logic that defines what is forgivable timing and what is unforgivable. Most of this is to enable all input configurations to play without trouble. We went for the experience of being in an epic rock opera shooter over being a tightly defined rhythm game.

In the end, BPM was a success due to its solid integration of music and gameplay, and Wwise helped us achieve BPM with speed and control.

David Jones | Programming |

Josh Sullivan | Sound |

Sam Houghton | Music |
