At GameSoundCon last October, I was having lunch with Damian at the fancy sandwich place around the corner from the hotel, and of course we're talking tech, and I say, "So apparently, you can now run MetaSounds INTO Wwise as a sound source -- now that’s freakin' useful" ...

Just then someone says "Thank you!", so I look up, and there's Aaron McLeran, the Director of Unreal Audio and MetaSound architect, hand on the doorknob, walking into the restaurant! The timing was so perfect that, had you seen it in a movie, you'd have thought it contrived…

There have been significant improvements to Unreal audio systems in recent versions. Many of the new features and workflows are designed to facilitate the production of soundtracks that have more than simple "push a button, make a noise" functionality. And yet, if I'm trying to evoke emotional responses to gameplay actions for a large, complex console game, I'm going to want to use Wwise every time.

Enter AudioLink, “a tool that allows Unreal Audio Engine to be used alongside middleware.” And more specifically, alongside Wwise. Traditionally, that's always been a "one or the other" proposition, but AudioLink seems to promise a more collaborative "both at the same time" paradigm. To test that theory, I wanted to create some MetaSounds and route them into a few rooms in Unreal via Wwise. Thus I could use the power of a Blueprint-controlled modular synthesizer, and place the results in a spatialized environment. As an added bonus, I could then monitor the MetaSound output using the Wwise Profiler.

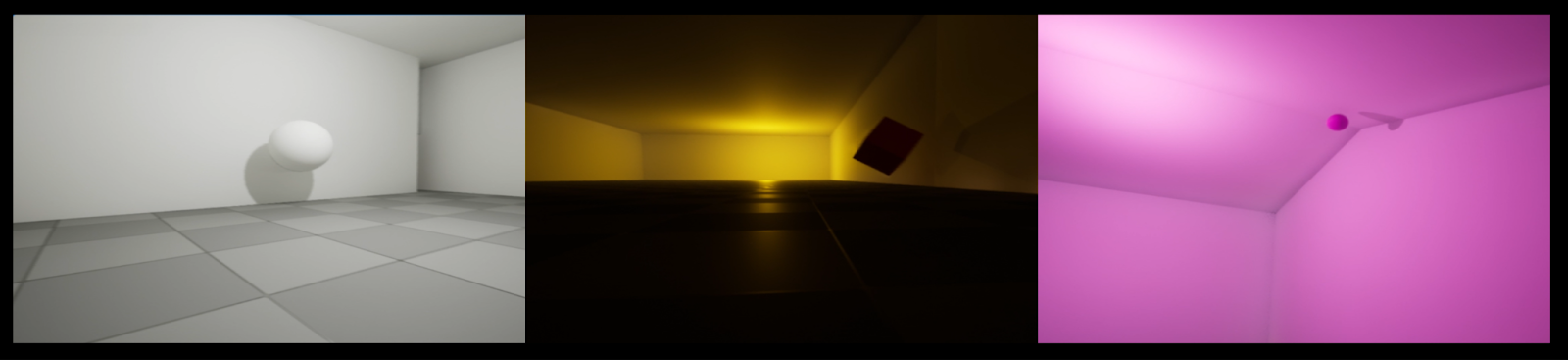

White, Brown, and Fractal Music

I upgraded to Unreal 5.1 and created three MetaSound implementations, using algorithms derived from an article titled "White, Brown, and Fractal Music" from Martin Gardner's Mathematical Games column in Scientific American magazine, circa 1978. While the piece contains some truly fascinating discussions about mathematics and the nature of perception, its main focus is on algorithmically generated melodies. Gardner describes three methods:

1. White Music is basically chaos: completely random, unpredictable, with zero correlation between successive notes.

2. Brown Music is based on Brownian motion, the random movement of particles suspended in a fluid. When plotted on a graph, a particle traces a path known mathematically as a "random walk" (or "the drunkard's walk"): a meandering trail whose next step is unpredictable. Applied to melody, this creates a random series of notes that are nonetheless highly correlated, because each note is the previous one plus a small random step.

3. Fractal Music (aka 1/f or pink noise) is generated by rolling six dice in a binary-based pattern (each die is rerolled only when its corresponding digit changes as you count upward in binary), producing sums between 6 and 36. Assign those numbers to an array of notes on a keyboard, and the algorithm will produce melodies with remarkably more musicality than the other methods.
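For the curious, here's a minimal Python sketch of the three generators as I read Gardner's descriptions. It is not the MetaSound graph from the project: the function names, the 0 to 12 note range, and the one-step brown walk are my own illustrative assumptions. The fractal version follows the dice scheme Gardner describes (credited to physicist Richard Voss): die k is rerolled only when bit k of a binary counter flips, so low-order dice change often and high-order dice rarely, which is what gives the melody its 1/f character.

```python
import random

def white_melody(n, low=0, high=12, rng=random):
    """White music: every note is independent and uniformly random."""
    return [rng.randint(low, high) for _ in range(n)]

def brown_melody(n, start=6, low=0, high=12, max_step=1, rng=random):
    """Brown music: a random walk where each note is the previous note
    plus a small random step, clamped to the [low, high] range."""
    if n <= 0:
        return []
    notes = [start]
    for _ in range(n - 1):
        step = rng.randint(-max_step, max_step)
        notes.append(min(high, max(low, notes[-1] + step)))
    return notes

def fractal_melody(n, num_dice=6, rng=random):
    """1/f ("fractal") music via a Voss-style dice scheme: die k is rerolled
    only when bit k of a binary counter flips, so low-order dice change on
    most notes and high-order dice only rarely.
    With six dice, every note is a sum between 6 and 36."""
    if n <= 0:
        return []
    dice = [rng.randint(1, 6) for _ in range(num_dice)]
    notes = [sum(dice)]
    for count in range(1, n):
        flipped = count ^ (count - 1)  # bits that changed on this increment
        for k in range(num_dice):
            if flipped & (1 << k):
                dice[k] = rng.randint(1, 6)
        notes.append(sum(dice))
    return notes

rng = random.Random(1978)  # seeded so the sketch is repeatable
print(white_melody(8, rng=rng))
print(brown_melody(8, rng=rng))
print(fractal_melody(8, rng=rng))
```

The raw numbers still need mapping onto an actual note array (for instance, sums of 6 to 36 onto 31 consecutive keys) before they sound like anything.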

Having Fun With MetaSounds

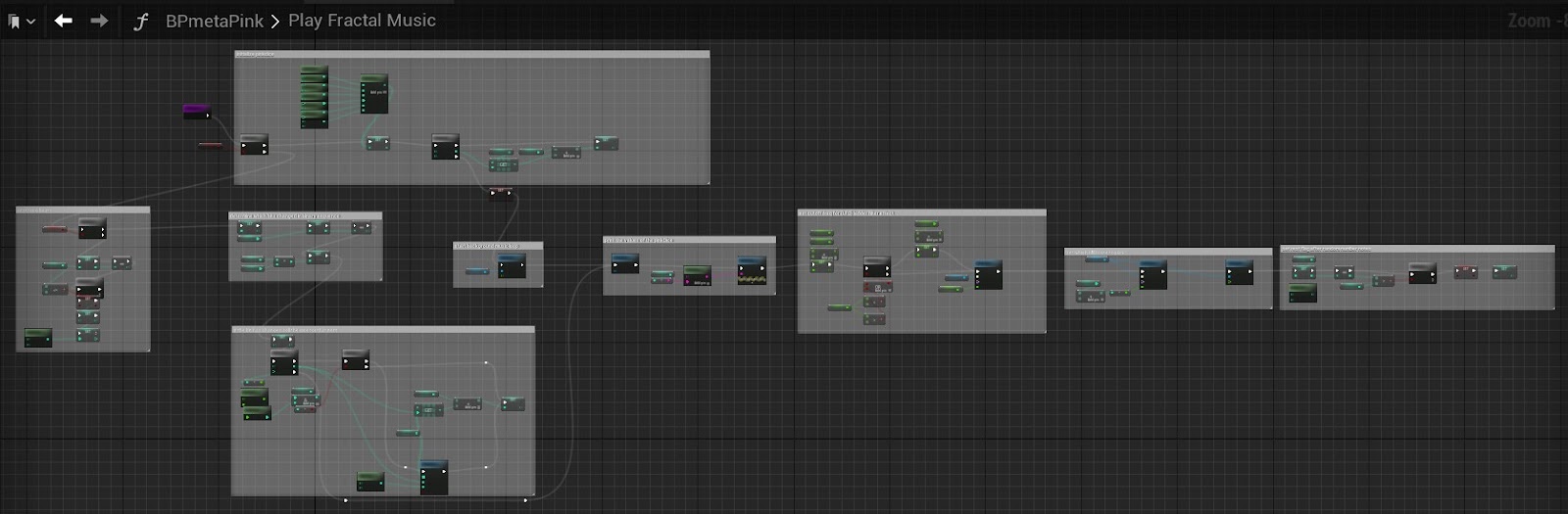

I had previously produced an Unreal/Wwise Blueprint project to demonstrate the three algorithms, so it was fairly easy to convert them to MetaSounds of three flavors: straight synth, wavetable, and "melody over background loop".

MetaSound supports a nifty "Scale to Note Array" feature that lets you quantize MIDI notes to a wide range of scales and tunings. When set to Pentatonic, even the random algorithm produces euphonious results. But I also had great fun playing around with the extensive set of tools and effects the MetaSound synthesizer provides (and you know my motto: "if you ain't having fun, you ain't doin' it right!")
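To illustrate the general idea behind scale quantization, here's a small sketch that expands a scale into an array of MIDI notes and snaps any incoming note to the nearest entry. This is an assumption about the technique in general, not Epic's implementation of the Scale to Note Array node; the major pentatonic intervals, the starting note (MIDI 60), and the "ties go down" rule are my own choices.

```python
MAJOR_PENTATONIC = [0, 2, 4, 7, 9]  # semitone offsets within one octave

def scale_to_note_array(root, intervals, octaves):
    """Expand a scale into an ascending list of MIDI notes, starting at root."""
    return [root + 12 * octave + step
            for octave in range(octaves)
            for step in intervals]

def quantize(note, note_array):
    """Snap a MIDI note to the nearest entry in note_array.
    Ties go to the lower note."""
    return min(note_array, key=lambda candidate: (abs(candidate - note), candidate))

notes = scale_to_note_array(60, MAJOR_PENTATONIC, 2)  # two octaves up from MIDI 60
print(notes)                                           # [60, 62, 64, 67, 69, 72, 74, 76, 79, 81]
print([quantize(n, notes) for n in (61, 65, 66, 70)])  # [60, 64, 67, 69]
```

Because every output lands on a pentatonic degree, even fully random input can't produce the half-step clashes that make white music sound so aimless.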

In Unreal, I created a structure containing three rooms of increasing size, connected by doorways. Each room contains an object that bounces around the room, and each object emits one of the generated-melody implementations, with volume and position controlled by the Attenuation settings of the MetaSound. So far, so good.
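For reference, here's a generic sketch of the simplest kind of distance attenuation: full volume inside an inner radius, then a linear fade to silence over a falloff distance. The parameter names echo the sort of settings found in an Unreal Attenuation asset, but this is an illustrative model under those assumptions, not Unreal's actual code, and real attenuation settings offer other curve shapes as well.

```python
import math

def linear_attenuation(distance, inner_radius, falloff_distance):
    """Gain in [0, 1]: full volume within inner_radius, then a straight-line
    fade that reaches silence falloff_distance units beyond it."""
    if distance <= inner_radius:
        return 1.0
    if falloff_distance <= 0:
        return 0.0
    t = (distance - inner_radius) / falloff_distance
    return max(0.0, 1.0 - t)

def gain_at(listener_pos, emitter_pos, inner_radius, falloff_distance):
    """Attenuation for a (possibly moving) emitter, from 3D positions."""
    distance = math.dist(listener_pos, emitter_pos)
    return linear_attenuation(distance, inner_radius, falloff_distance)

# A bouncing emitter 500 units away, 100-unit inner radius, 800-unit falloff:
print(gain_at((0, 0, 0), (300, 400, 0), 100, 800))  # 0.5
```

Re-evaluating this every frame as the object bounces is what makes the melody swell and fade as it moves around the room.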

Setting Up Wwise

Then it was time to integrate Wwise ... and therein lay my first stumbling block (see note). The latest version, the one with the “experimental” AudioLink updates, required users to install the Wwise Unreal Integration "by hand". This meant downloading the "engine plugin" files, and putting them where the "game plugin" files would usually go. To be honest, I'm still surprised that even works, but by carefully following the instructions posted at the Wwise Q&A (https://www.audiokinetic.com/qa/11062/5-1-support-wwise-roadmap/), I was able to get Wwise up and running on Unreal 5.1.

Note: You, gentle reader, will not have to do this. Wwise v.2022.1.2 is now available and supports the standard installation method.

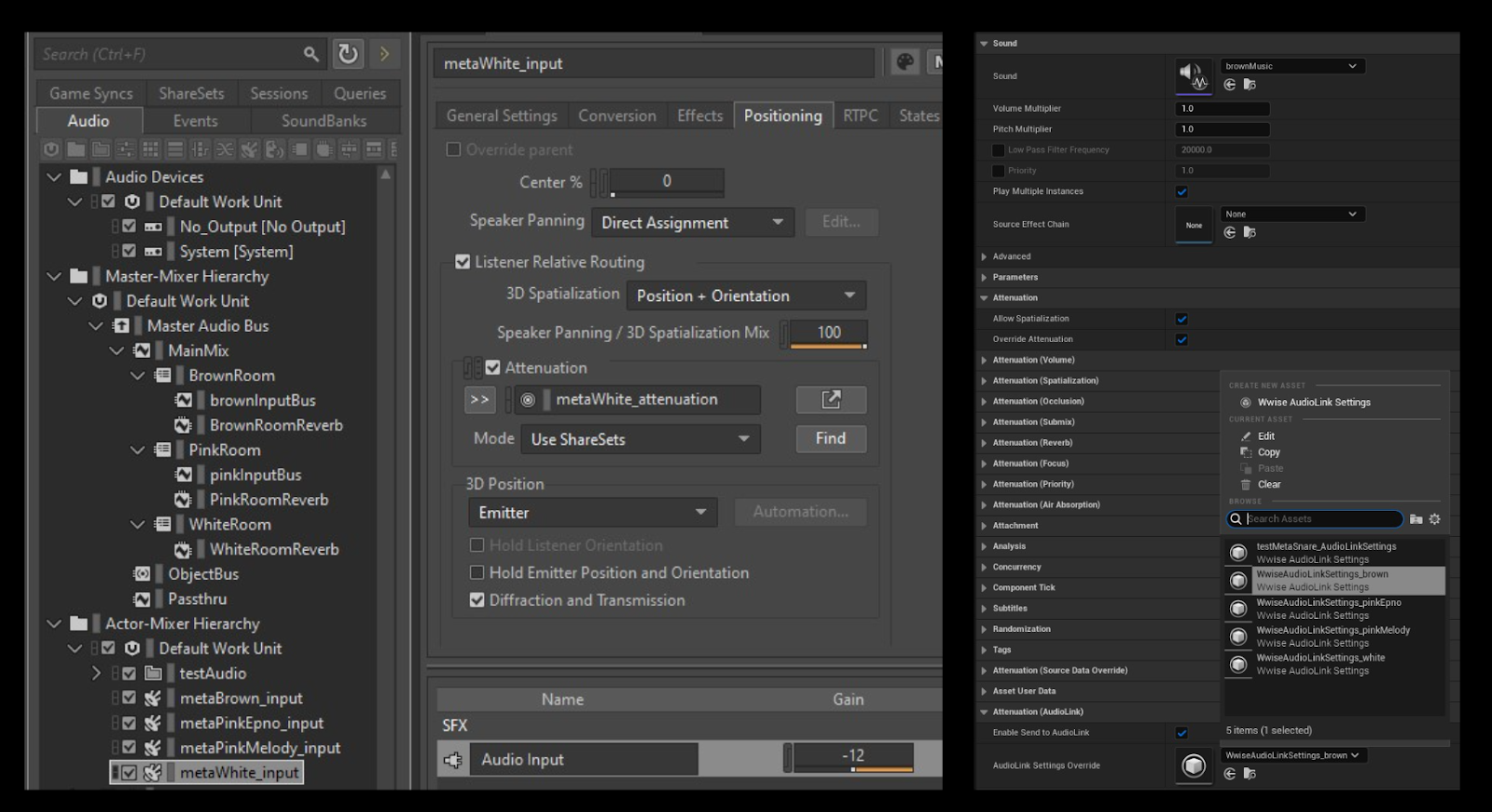

Now I was ready to acoustify the environment. I added AkSpatialAudioVolumes to each room, and AkPortals to each doorway. Each room also got its own aux bus for reverb, so I could specify "tiled bathroom", "medium hall", and "cathedral" settings for the RoomVerb effect on each of the increasingly sized rooms. As a control, I also added looping test pings as AKAmbient sounds in each room to verify the setup was working as expected … it was.

Connecting Unreal to Wwise via AudioLink

Next, I created three Wwise Events, each playing a SoundSFX with an "Audio Input" source. This Source Plug-in option allows audio content generated outside the Wwise project to be processed by the Wwise engine. While it is predominantly used for in-game voice chat (taking sound data from a microphone), it can also be used as an inter-app audio-data conduit from the Unreal MetaSound output into the Wwise project.

I have just described what AudioLink is designed to do.
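Conceptually, a conduit like this boils down to a first-in, first-out buffer: one side renders samples and pushes them in, and the other side pulls a fixed number of samples on every audio callback. The sketch below is a hypothetical, single-channel model (the class and method names are mine, not Wwise's or Epic's). It also shows what happens when the consumer asks for more samples than the producer has delivered: it gets padded silence and records a "starvation".

```python
from collections import deque

class AudioConduit:
    """Hypothetical single-channel FIFO between a producer (e.g. a synth
    renderer) and a consumer that pulls fixed-size frames (e.g. an
    audio input source callback)."""

    def __init__(self):
        self._samples = deque()
        self.starved_count = 0

    def push(self, block):
        """Producer side: append freshly rendered samples."""
        self._samples.extend(block)

    def pull(self, needed):
        """Consumer side: return exactly `needed` samples, padding with
        silence (0.0) and counting a starvation if the producer has not
        delivered enough yet."""
        available = min(needed, len(self._samples))
        out = [self._samples.popleft() for _ in range(available)]
        if available < needed:
            self.starved_count += 1
            out.extend([0.0] * (needed - available))
        return out

conduit = AudioConduit()
conduit.push([0.25] * 256)    # producer delivers only 256 samples...
frame = conduit.pull(1024)    # ...but the consumer needs 1024
print(len(frame), conduit.starved_count)  # 1024 1
```

In this model, the fix for starvation is on the producer side: it has to keep at least one full frame queued ahead of each pull.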

That's when I ran into my second stumbling block. My understanding was that a Wwise Audio Input Event could be specified in the AudioLink section of the MetaSound's Attenuation, located near the bottom of a long list of override options. But knowing I could do that did not mean I knew how to, and the available choices seemed opaque and confusing.

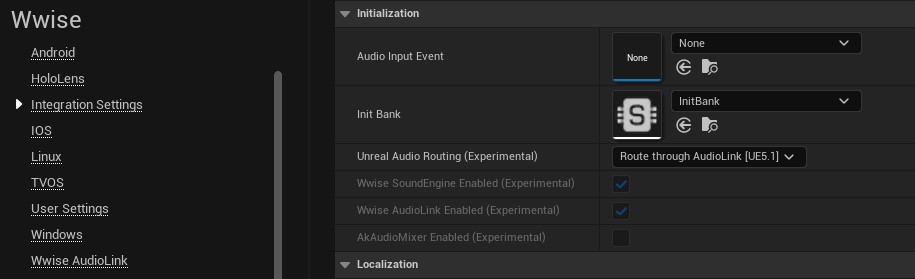

I spun my wheels for far too long before realizing (in typical "oh, ya gotta push THIS button" fashion) that Unreal Project Settings => Wwise Integration Settings => Unreal Audio Routing (Experimental) had to be set to "Route through AudioLink [UE5.1]". Only THEN did the Create New Asset button for custom "Wwise AudioLink Settings" appear in the Attenuation (AudioLink) dropdown. From there, the asset could be pointed at the appropriate Wwise Audio Input Event.

Et Voilà!

It works! The MetaSound and associated music systems were audible in the project, attached to the bouncing objects, and their outputs could be monitored in the Wwise Profiler. Woohoo!

I could also:

- Control volume levels

- Change bus routings

- Set attenuations

- Add effects

- Use RTPCs

- Modify and audition the Unreal audio on the fly

…all while working with the familiar Wwise tools. Like I said at the start, useful!

But I wanted more...

Spatializationable Objects

I wanted to place the MetaSounds into the acoustic environments of the three different rooms, and provide smooth transitions between them. I wanted to mix the MetaSounds with the reverb specified for each room, bounce the music off the walls using Wwise Reflect, change the EQ based on location and material, and really sell the idea of the audio-emitting objects being IN the room, all while using the spiffy spatialization features Wwise provides (which Unreal native audio would be hard pressed to match).

That's when I ran into my third and final stumbling block. The "Audio Input" source Plug-in acts more like a “feed” from a mixer than an object that can be spatialized by Wwise (like a SoundSFX container). Even though the MetaSound input events were routed the same as the AKAmbient test pings, they profiled with "100% Transmission Loss" (meaning silent) when "diffraction/transmission" (necessary for spatialization) was used. When diff/trans was turned off, the MetaSound was audible, but not mixed with the Reverb, and could be heard through the walls.

The solution would be to route the Audio Input to a Wwise component that CAN be spatialized, but in this early version of the AudioLink implementation, there seems to be no way to do that from the MetaSound Attenuation overrides. Our friends at Epic assure me this functionality exists but isn't currently hooked up ... and that it will be in future releases.

Connect Via Component

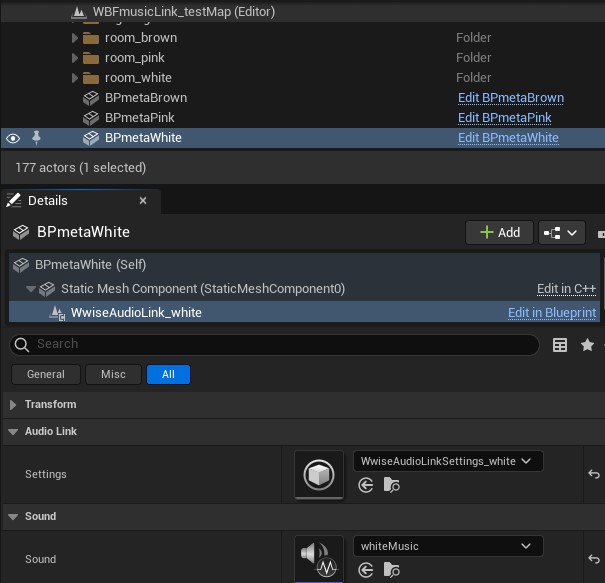

But wait! I like to say "there's always 6 different ways to do anything in Wwise", and indeed, that's one of its greatest strengths: the flexibility to solve game audio problems in whatever way works best for your implementation. Turns out there's another method for routing the MetaSound into Wwise -- the WwiseAudioLink component.

As part of the integration package, you can drop this component on an object, specify the AudioLink settings and MetaSound there, call PlayLink on BeginPlay in the level event graph ... and bing bang boom, the MetaSound plays *as a spatialized object*. The sound stays in the room, the portals fade in/out based on player position, and the music plays with the reverb. Success!!

But as before, the current "experimental" iteration doesn't quite catch all the use cases. I could only get the component to work with the whiteMusic MetaSound, which is a single instance of a looping patch. The brownMusic uses a "wavetable" approach, calling the MetaSound repeatedly while passing in the name of the file to play, which seemed to confuse the component code (or at least, it confused me!)
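Here's roughly what a "wavetable"-style player does, sketched in Python under my own assumptions: every note becomes a separate, short playback told which sample file to play, rather than one continuous looping patch. The file-naming scheme and the tempo handling are hypothetical, not taken from the project.

```python
def schedule_notes(notes, tempo_bpm, beats_per_note=1.0,
                   name_format="note_{:03d}.wav"):
    """Turn a note sequence into (start_time_seconds, file_name) triggers:
    one short, separate playback per note, each told which sample to play.
    name_format is a hypothetical file-naming convention."""
    seconds_per_note = 60.0 / tempo_bpm * beats_per_note
    return [(index * seconds_per_note, name_format.format(note))
            for index, note in enumerate(notes)]

for start, file_name in schedule_notes([60, 62, 64], tempo_bpm=120):
    print(f"{start:.2f}s -> play {file_name}")
```

The contrast with the whiteMusic patch is the point: a looping patch is one long-lived sound, while this approach spawns a new, short-lived playback for every note.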

Close Enough For GDC

SO, not to be dissuaded from my quest, I put together a hybrid approach:

- The whiteMusic uses the WwiseAudioLink component, and is spatialized with reverb in the White Room.

- The brown and pink music algorithms use the AudioLink settings in the MetaSound Attenuation overrides. The music plays in the Wwise project, but is not spatialized, so I came up with a workaround kludge using 3D emitter busses and trigger volumes to simulate a spatial reverb implementation in the Brown and Pink Rooms.
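The trigger-volume half of that kludge boils down to "which room is the listener in, and which reverb should get the send?" Here's a minimal sketch of that logic, assuming axis-aligned box volumes; the room extents and aux bus names are hypothetical, and this is plain Python rather than the Blueprint from the project.

```python
from dataclasses import dataclass

@dataclass
class Room:
    name: str           # hypothetical room label
    aux_bus: str        # hypothetical reverb aux bus name
    min_corner: tuple   # (x, y, z) of the box's lower corner
    max_corner: tuple   # (x, y, z) of the box's upper corner

    def contains(self, pos):
        """True if pos lies inside (or on the edge of) this box."""
        return all(lo <= p <= hi
                   for lo, p, hi in zip(self.min_corner, pos, self.max_corner))

def aux_send_levels(rooms, listener_pos, wet=1.0):
    """Map each room's aux bus to a send level: `wet` for the first room
    containing the listener, 0.0 for every other room."""
    levels = {room.aux_bus: 0.0 for room in rooms}
    for room in rooms:
        if room.contains(listener_pos):
            levels[room.aux_bus] = wet
            break
    return levels

rooms = [
    Room("Brown Room", "Reverb_MediumHall", (0, 0, 0), (20, 20, 6)),
    Room("Pink Room", "Reverb_Cathedral", (20, 0, 0), (60, 40, 20)),
]
print(aux_send_levels(rooms, (10, 5, 1)))
# {'Reverb_MediumHall': 1.0, 'Reverb_Cathedral': 0.0}
```

A hard switch like this is the crude version; crossfading the send levels near the doorways is what makes it sound less like a light switch.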

The results sound pretty good. Here's a link to a video flythrough of the three rooms:

You can download the full Unreal/Wwise project here:

https://media.gowwise.com/blogfiles/WBFmusicLinkAKh.zip (4.3 GB)

The project demonstrates two techniques for using AudioLink; which method you use will depend on your particular needs. For instance, if your game music is a stereo MetaSound, spatialization likely isn't a priority, so connect via the MetaSound's Attenuation settings. But if you're synthesizing a sound to be played by an object in an acoustic environment, then the WwiseAudioLink component would be the way to go.

While you can do your own code-fu to implement additional AudioLink functionality if necessary, I'd guess increased feature support from both Wwise and Unreal will be forthcoming. In subsequent versions, I'll want to have fun playing MetaSounds with Reflect, acoustic textures, and other cool aspects of Wwise Spatial Audio.

Collaborative Development

- A frequent dilemma when making a game is: "Do we want to use the native Unreal audio, or do we want to go with the full Wwise implementation?" The myriad cost/benefit factors make that a perennial question, but it's not uncommon to use Unreal audio for prototyping, then install Wwise when the funding comes through.

Usually, that means recreating everything you've done in the prototype in Wwise ... but not any more! Now you don't have to throw out all that sound design work; you can just route it to an input event via AudioLink and Bob's Yer Uncle.

- There's a HUGE market for modular synthesizers, and enthusiasts are frequently pictured grinning wildly in front of complex arrays of knobs, switches, and colored cables. While you can record the audio output of these pulsating contraptions and use them in your game, you can't hook up an RTPC to outboard hardware.

And now you don't have to. MetaSound lets you do all the knob-twiddling and patch-cord-routing you want ... but then you can connect the knobs and switches to variables that modify the sound based on gameplay (interactive audio, what a concept). One might even use Wwise RTPCs to, say, change the mix, or modify EQ settings, on multiple MetaSound layers via AudioLink.

- As an audio engineer, I run the Wwise Profiler all day, every day when developing a game soundtrack. It tells me everything I could possibly want to know about what's playing when, how loud, on which bus, with which reverb/effects, CPU/voice usage, and on and on. It is the single most valuable tool in the Wwise feature set for monitoring, debugging, and optimizing soundtracks ... but only soundtracks that use the Wwise engine.

But now Unreal audio can join the fray! The Wwise Profiler captures processing from the Input Events, allowing them to be monitored in the various Profiler views. By routing through AudioLink, you can gauge voice usage, track positions, check bus routing, effects, mix values, and other properties at runtime.

- [your brilliant MetaSound => AudioLink => Wwise idea goes here]
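Picking up the RTPC idea from the modular-synth item in the list above: an RTPC is essentially a curve that maps a game parameter to a sound property. As a rough illustration, here's a piecewise-linear version in Python. Wwise curves support other interpolation shapes too, and the example points (a hypothetical 0 to 100 "intensity" mapped to a volume in dB) are made up.

```python
from bisect import bisect_right

def rtpc_curve(points, x):
    """Piecewise-linear map from a game parameter x to a property value,
    in the spirit of an RTPC curve. `points` is a non-empty list of (x, y)
    pairs sorted by x; inputs outside the range clamp to the end points."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)           # first point strictly to the right of x
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

# Hypothetical curve: quiet at low intensity, full volume at the top.
intensity_to_volume_db = [(0, -96.0), (50, -6.0), (100, 0.0)]
for intensity in (0, 25, 75, 120):
    print(intensity, rtpc_curve(intensity_to_volume_db, intensity))
```

Driving several MetaSound layers from one game parameter through different curves like this is where the "knobs wired to gameplay" idea really pays off.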

Building Bridges

Game audio is all about creating emotional experiences in an interactive gameplay environment. Extraordinarily powerful tools and techniques have been developed to facilitate that goal; indeed, to make those experiences possible at all. Many current game soundtracks literally could not exist without the sophisticated audio systems created by both Epic and Audiokinetic.

But there's always been something of a "pick a side" dichotomy between the native and the middleware, mostly for technical reasons. AudioLink provides a technical solution to that dilemma, and can let sound designers, musicians, and engineers use whichever tech works best for them to achieve the desired results.

It's a bridge between technologies, and when you want to get from here to there, ya know what bridges are? USEFUL!

- pdx

Shoutout to Damian, Michel, Benoit @ Audiokinetic; Jimmy, Dan, Grace, and Aaron @ Epic, for their support on this project.


Comments

Mike Patterson

June 23, 2023 at 06:05 pm

All I'm getting from this is the following error: `FWwiseAudioLinkInputClient: Starving input object, PlayID=73, Needed=1024, Read=256, StarvedCount=10, This=0x00000812BD083B70` I've tried increasing the max number of memory pools, the max number of positioning paths, the number of samples per frame, max system audio objects, and of course the Monitor Queue Pool Size. Nothing works for the WwiseAudioLink component. I've followed these instructions to a T: added the WwiseAudioLinkSettings to the WwiseAudioLink component, added my working MetaSoundSource to the Sound property of the WwiseAudioLink component, and added my AudioInput AkEvent to the AkAudioEvent property of the WwiseAudioLink component. NOTHING WORKS. So frustrating dealing with this audio input voice starvation error and the absolute silence and missing info in this blog post. WwiseAudioLink->PlayLink just immediately results in voice starvation problems with default Wwise settings. Ugh.

pdx drescher

August 03, 2023 at 02:28 pm

[AUTHOR] Sounds like you're running into the same/similar problem I had when trying to use the WwiseAudioLink component with a wavetable approach to the brown/pink music (i.e. triggering the MetaSound repeatedly from the blueprint), as described in the last paragraph of the "Connect Via Component" section above. Instead, try using the "Wwise AudioLink Settings" from the Unreal MetaSound's "Attenuation (AudioLink)" dropdown (as shown in the pic in the "Connecting Unreal to Wwise via AudioLink" section above). That might work for you, particularly if you don't need to spatialize the output ...

Doug Prior

October 05, 2023 at 10:48 pm

Since trying AudioLink on our project, our game cannot be packaged in Unreal. It errors out with "Ensure Condition Failed: Factory [File:C:\Workspaces\Plugins\Wwise\Source\WwiseAudioLinkRuntime\Private\Wwise\Audiolink\WwiseAudioLinkComponent.cpp] [Line: 30]". I reverted all of the AudioLink components we used, and it still won't build a package; it errors out with the same message. Have you experienced anything like this before?

Doug Prior

October 09, 2023 at 01:07 pm

We have solved the above problem, and I am really loving the Wwise Audio Link feature. Thanks so much.

Andrew Brewer

February 08, 2024 at 05:28 pm

Hi Doug! I know it's been a while since you had this error, but it just showed up for us, and it would be pretty impractical to revert to where our project could last build. Could you describe what you did to fix this error? Thank you so much!

marcel enderle

October 25, 2023 at 11:28 am

Is it possible to use AudioLink to get Wwise audio to use Unreal's box-shaped attenuation? This is a must for my sound game project. Thanks for the blog, really good!

Aaron McLeran

November 27, 2023 at 07:11 pm

You would play UE Audio sources like normal and use the box attenuation as you would with UE Audio. Then render the audio from UE Audio into Wwise using AudioLink. You can't use the box attenuation on Wwise sources.