Hardspace: Shipbreaker is a first-person, zero-g, spaceship salvaging game, recently released on PlayStation 5, Xbox Series X|S and PC. It is a satire about work, debt and capitalism. It takes the form of an immersive simulation in which players experience the daily grind of working for a massive future megacorporation. The player floats in orbit above Earth, tasked with carrying out the recycling of spaceships large and small, while trying to avoid being blown up by mishandled fuel systems or nuclear reactors. It’s hard, dangerous work. It’s also weirdly fun.

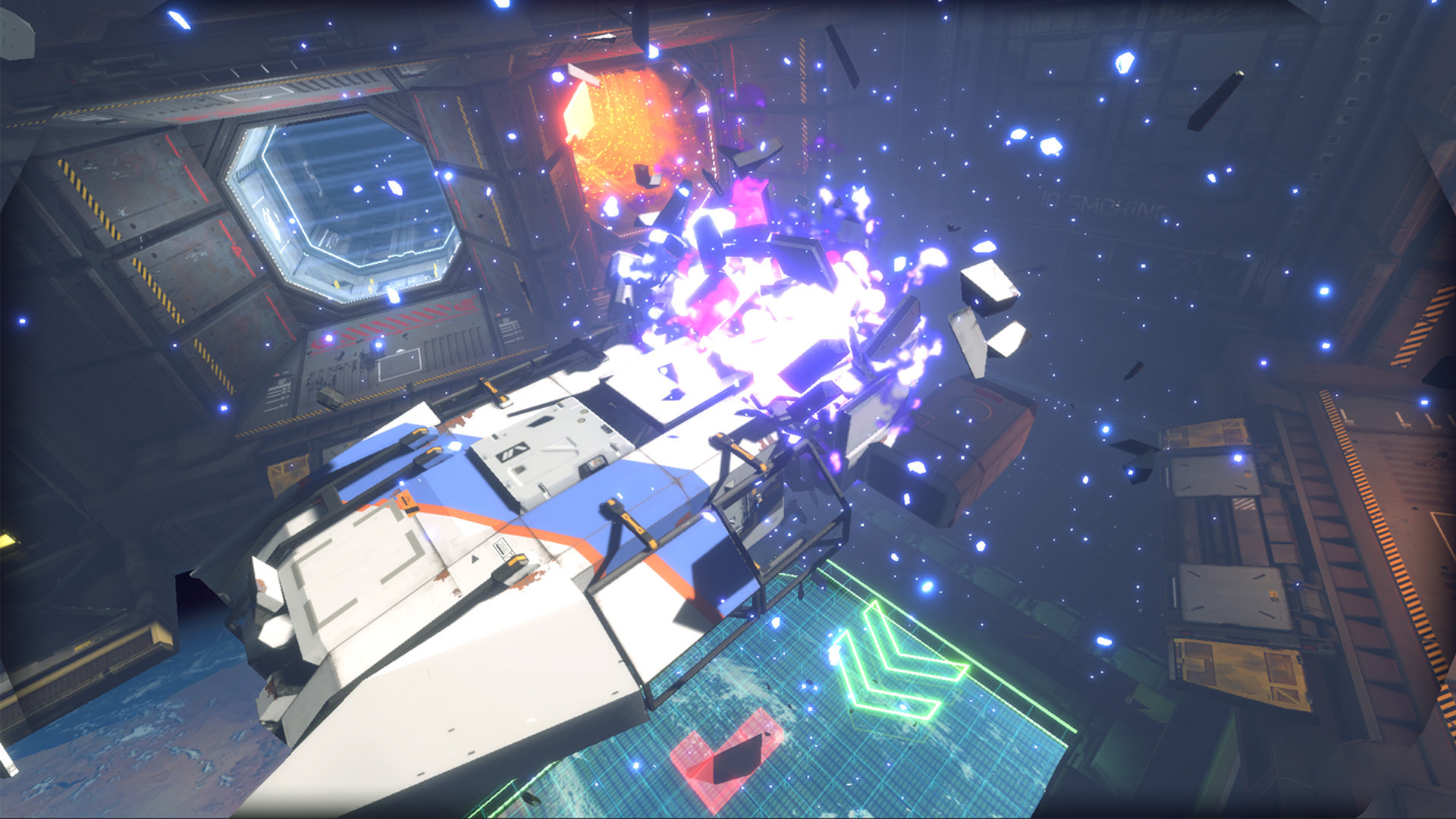

The view from the salvage bay in Hardspace: Shipbreaker

When I first became involved in the project back in 2017, it was a much smaller and simpler game than it is now, but even then, its core pillars were already established:

- Simulation first. Simulate a fictional future job; players should feel like they are doing the job of a spaceship salvager.

- Physics gameplay. Generate the majority of the gameplay through a satisfying physics simulation that invites playful interaction and experimentation.

- Cutting gameplay. Add depth to the gameplay by way of a complex and satisfying cutting mechanic. Objects can be sliced up into tiny pieces by using the "cutting tool".

- Blue-collar vibe. Lean heavily on a blue-collar aesthetic; equipment is hand-me-down and sometimes malfunctions. Nothing works as well as it once did. Everything should feel well-worn and industrial.

The team and I wanted the game’s audio to truly inhabit those pillars, to permeate them in as meaningful a way as possible. Audio needed to be deeply connected to the simulation, the physics, and the aesthetic, responding in an entirely believable way, in lockstep with the player’s actions and in harmony with the setting. With the game having simulation at its core, we wanted the audio to obey its simulated rules. For example, if the player is out in the vacuum of space, there shouldn't be any airborne sound. Airborne sound should only be present if the player is inside a ship in a pressurized environment.

This desire to "follow the sim" immediately presented some interesting challenges:

- How do we deliver a great-sounding experience while spending most of the time in the vacuum of space?

- If areas can be pressurized and depressurized by the player, how do we emphasize the transition without just switching from "lots of sound" to "no sound at all"?

- How do we make physics interactions sound good when any object in the game can be cut into tiny pieces?

- How do we make equipment feel worn-out and on the verge of malfunctioning while also feeling satisfying and entertaining to use?

After a lot of deliberation and prototyping, we developed a suite of systems that helped us answer those questions and deliver a soundscape that's detailed, unique, engaging, and supports the simulation at the heart of the game. In this blog, I'll talk about a few of those systems and how we used Wwise's features to bring them to life.

Oops…

Resynthesis

First up, our "resynthesis" system. We wanted players to be able to hear the sounds of hazards in the environment because getting too close to a sparking electrical cable or a flaming fuel line could prove to be… unprofitable. This immediately made us bump into our first question above: how can players hear these hazards if we're simulating the behavior of sound in a vacuum? In short, they can't.

Working with the game's designers, we created a piece of in-game technology called the Resynthesizer. This is a fictional piece of equipment that can be attached to the player's salvage suit. It detects hazards in the environment and plays back a simulated sound for the hazard in question. This tech is offered to the player as an optional upgrade at a cost of in-game currency. It is also upgradable, allowing resynthesis to operate over an increasingly large range as the player upgrades it.

Resynthesis - The Implementation

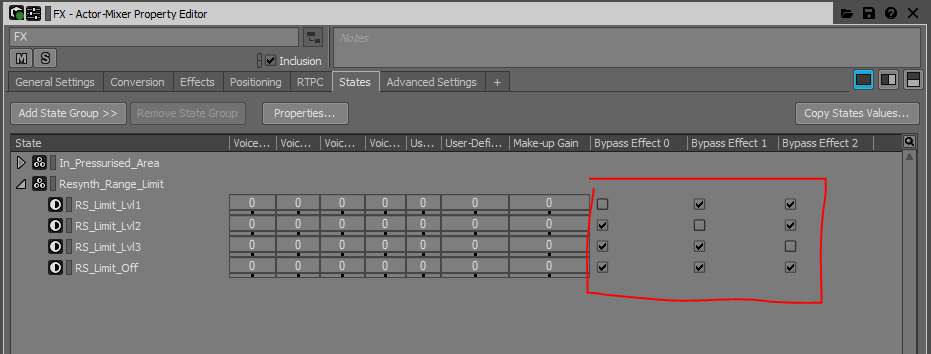

We handled the range upgrade by enabling and bypassing Wwise Gain Effects on the hazard fx actor-mixer in Wwise. With an RTPC bound to Distance, we were able to use these Wwise Gain Effects to shut off the sound when the player was more than a certain distance away. This allowed us to set up standard attenuations for our hazard sounds so they'd attenuate normally in pressurized spaces, but would only be audible over the current range of the player's resynthesizer when in unpressurized spaces.
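To make the gating behavior concrete, here is a minimal Python sketch of the logic described above. It is not the Wwise setup itself: the linear roll-off curve, the `-96 dB` silence floor, the 50-unit maximum distance, and all function names are assumptions chosen purely for illustration.

```python
SILENCE_DB = -96.0  # assumption: level treated as inaudible


def attenuation_db(distance, max_distance=50.0):
    """Hypothetical linear roll-off from 0 dB down to SILENCE_DB at max_distance."""
    clamped = min(max(distance, 0.0), max_distance)
    return SILENCE_DB * clamped / max_distance


def hazard_gain_db(distance, pressurized, resynth_range):
    """Gain applied to a hazard sound.

    Pressurized space: the standard attenuation curve applies.
    Vacuum: the same curve applies, but the sound is shut off entirely
    once the hazard is beyond the resynthesizer's current range.
    """
    gain = attenuation_db(distance)
    if not pressurized and distance > resynth_range:
        gain = SILENCE_DB
    return gain
```

In this sketch, upgrading the resynthesizer simply means calling `hazard_gain_db` with a larger `resynth_range`; the attenuation curve itself never changes.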

Wwise Gain Effects on the hazard actor-mixer

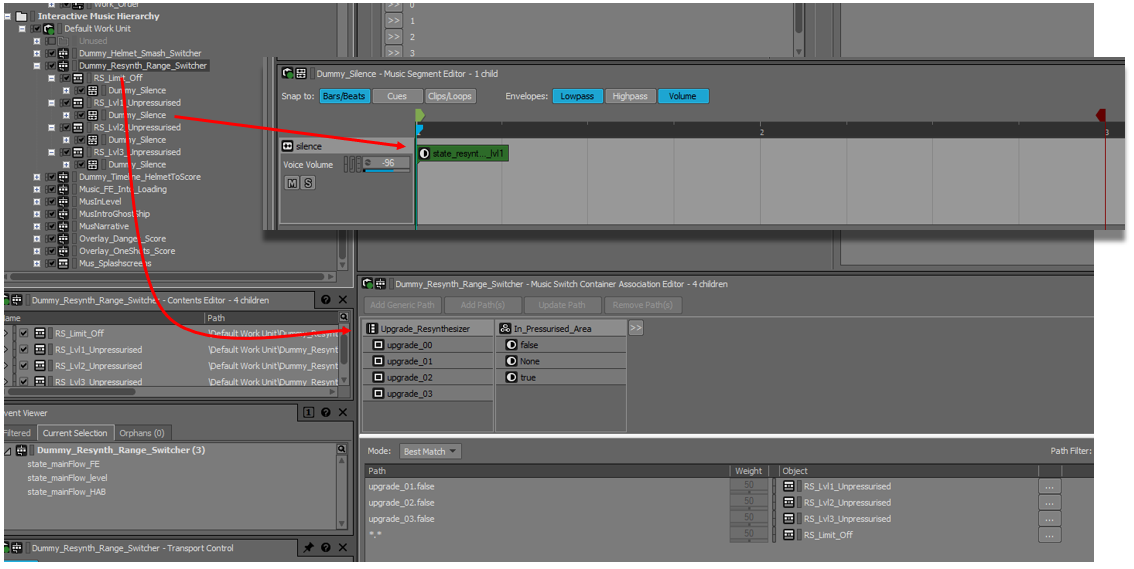

We used States to bypass and enable the Effects, but the way that we triggered the state changes was via an unconventional use of Wwise's music system. In the music system, it's possible to query a variable—for example, a State or a Switch—and trigger an Event based on that variable's value. In other words, you can query the value of one State Group and use the result to set the value of another State Group.

The player's current resynthesizer upgrade level was being sent to Wwise as an RTPC, not as a State. It wasn't possible to use that RTPC directly to enable the Wwise Gain Effects because the RTPC was being set globally, and the hazard objects were using their local RTPC values. We needed to receive the global RTPC value, and convert it into a State value that could be referenced by all the hazard objects to bypass/enable their Wwise Gain Effects. Thankfully, Wwise's music system allows you to do exactly that.

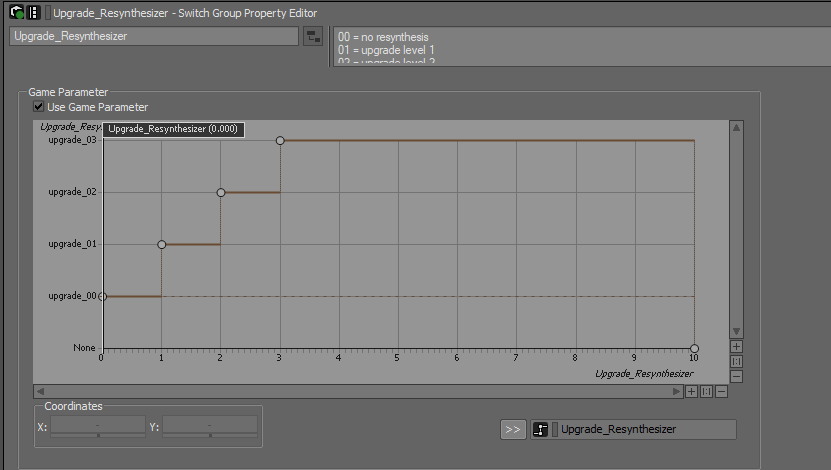

First, we created a Switch that selected a target value from the global RTPC:

Then, in the music system, we had a container looping every 1 second, querying the value of the Upgrade_Resynthesizer Switch. Based on the value of that Switch, a silent Music Segment would set the value of a State via a Music Event Cue.

That state would then be used to enable and bypass the Wwise Gain Effects on the hazard sounds.
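For readers who find the chain hard to picture, the following Python sketch models the same data flow: a global RTPC value selects a Switch, and a once-per-loop poll copies that Switch into a State. The range boundaries, the `Level_N` names, and the `StatePoller` class are hypothetical; in the game all of this lived inside Wwise's music system rather than in code.

```python
from bisect import bisect_right

# Hypothetical Switch ranges on the global upgrade RTPC
# (each entry is the lower bound of one Switch value's range).
SWITCH_LOWER_BOUNDS = [0.0, 1.0, 2.0, 3.0]
SWITCH_VALUES = ["Level_0", "Level_1", "Level_2", "Level_3"]


def switch_for_rtpc(rtpc_value):
    """Pick the Switch value whose range contains the RTPC value."""
    index = bisect_right(SWITCH_LOWER_BOUNDS, rtpc_value) - 1
    index = max(0, min(index, len(SWITCH_VALUES) - 1))  # clamp out-of-range input
    return SWITCH_VALUES[index]


class StatePoller:
    """Stands in for the looping music container and its silent segments."""

    def __init__(self):
        self.state = None
        self.state_changes = []  # record of every State actually set

    def tick(self, global_rtpc_value):
        """One pass of the loop: read the Switch, set the State if it differs."""
        new_state = switch_for_rtpc(global_rtpc_value)
        if new_state != self.state:
            self.state = new_state
            self.state_changes.append(new_state)
        return self.state
```

Calling `tick` once per second with the current upgrade RTPC mirrors the one-second loop; every hazard object can then read the single shared `state` instead of its own local RTPC value.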

Now, this is of course a fairly arcane implementation, and I don't necessarily recommend it! But it's interesting to see how far Wwise's systems can be pushed in the event that code support is simply not available and there's a feature that needs to be implemented in a hurry.

The player-facing view of resynthesis in the upgrade tree

Resynthesis - The Sound

The next challenge we faced in making the resynthesis feature was getting it to sound good, in a way that didn't cause conflict with our blue-collar aesthetic. Although we wanted the player to hear hazards in a vacuum, the resynthesized sounds couldn't be too perfect, or sound too much like their airborne equivalents. We'd embraced the fiction of the resynthesizer being a piece of in-game equipment, and we'd already established that the player's equipment needed to sound worn-out and on the verge of malfunction. If the resynthesizer was to be believable, it would have to glitch, bleep and stutter.

To achieve this, initially we turned to an offline process based around a plugin called Fracture by Glitchmachines. We hosted this plugin inside Plogue Bidule, which we used to generate pseudo-random sample-and-hold values and pass them to Fracture to manipulate some of its parameters.

Hazard sounds were offline-processed through this chain, and we were happy with the glitchy results.

However, it only worked when one sound played at any one time. When two or more sounds played together, the stepping of their sample-and-hold changes was not synchronized and the end result was a mess; a mess that only became worse as more sound sources were added. It was clear that an offline process was not going to be effective. If we wanted to use this type of DSP for our resynthesized sounds, we'd have to use a real-time effect, applied to a submix of all our resynthesized sounds together.

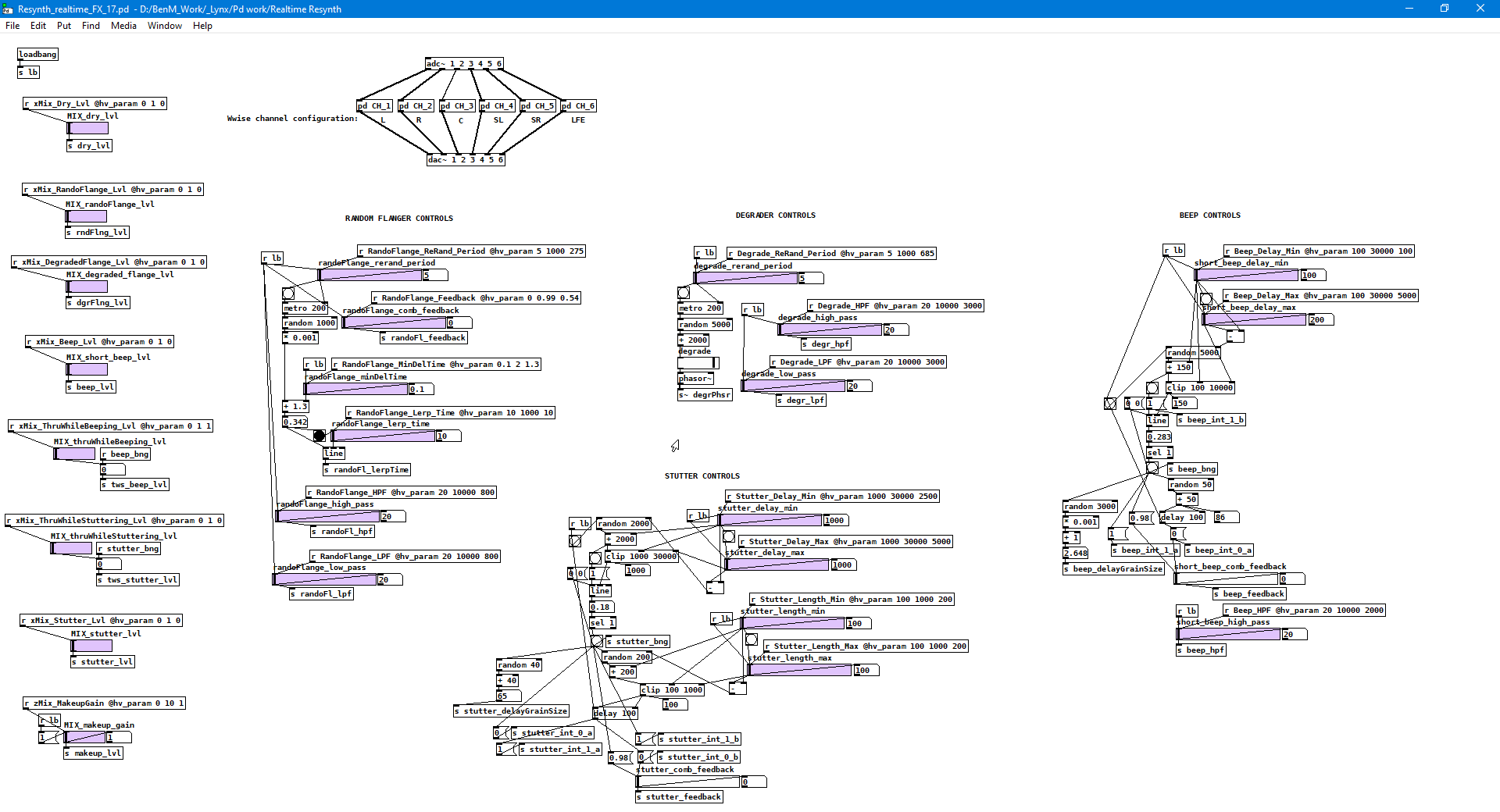

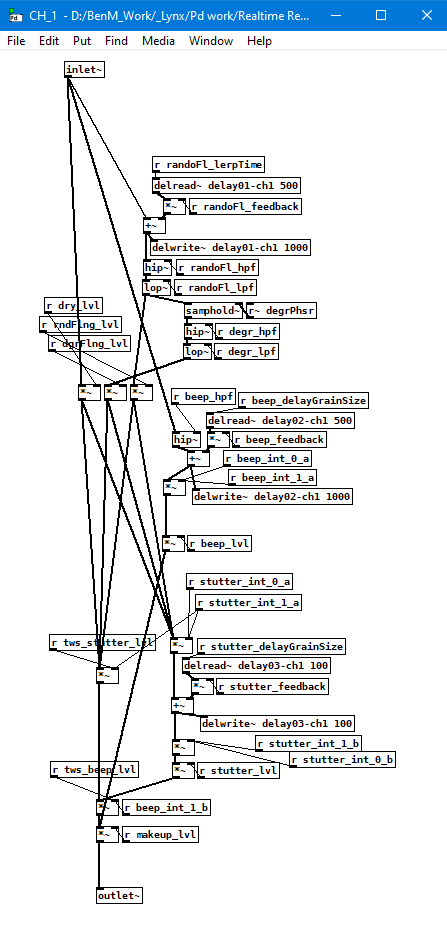

This led us to begin experimenting with the creation of custom Wwise plugins, using Pure Data and converting the Pd patches into plugins using the Heavy compiler. In Pd we created an approximation of the process that we'd configured in Fracture; a multi-effect comprising a sample degrader, a step flanger, a micro-stutter and a beep-generator.

The Resynthesizer Pd patch - top level

We exposed the required parameters as "@hv_param" nodes so we could configure the parameters in Wwise. We were able to assign RTPCs to those parameters to make the glitching & degrading effects reduce in severity as the player upgraded their in-game resynthesizer.

The processing is then carried out in subpatches, with identical processing for every channel. The subpatches can be seen in the image above between the adc and dac nodes.

The channel processing subpatch

After conversion via Heavy, the resulting plugin could then be instanced on the mixer bus receiving all our resynthesizer sounds, and all the sample-and-hold stepping would be synchronized. We had a single DSP running across the entire resynthesizer submix, and CPU usage was minimal. It was exactly what we needed.
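The difference between per-sound processing and a single effect on the bus can be shown with a toy Python sketch. It is a simplification, not the Pd patch: it applies a sample-and-hold directly to sample values, and the hold length, offsets, and ramp signals are arbitrary assumptions. The point is only that independent holds step at unrelated times, while one hold on the mix steps on a single grid.

```python
def sample_and_hold(signal, hold_length, offset=0):
    """Capture a new value every hold_length samples (starting at offset)
    and repeat it until the next capture."""
    held = 0.0
    out = []
    for i, sample in enumerate(signal):
        if (i - offset) % hold_length == 0:
            held = sample
        out.append(held)
    return out


def step_positions(signal):
    """Indices at which the signal changes value."""
    return [i for i in range(1, len(signal)) if signal[i] != signal[i - 1]]


# Two simple ramps stand in for two hazard sounds.
source_a = [float(i) for i in range(16)]
source_b = [float(2 * i) for i in range(16)]

# Offline-style: each sound is processed separately, with unrelated phase.
per_source = [
    a + b
    for a, b in zip(
        sample_and_hold(source_a, 4, offset=0),
        sample_and_hold(source_b, 4, offset=2),
    )
]

# Bus-style: mix first, then run one effect over the submix.
on_bus = sample_and_hold([a + b for a, b in zip(source_a, source_b)], 4)

print(step_positions(per_source))  # [2, 4, 6, 8, 10, 12, 14]
print(step_positions(on_bus))      # [4, 8, 12]
```

With two sources the separately processed mix already steps twice as often as intended, and each extra source adds another unrelated grid, which matches the mess described earlier.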

Here's the plugin running in Wwise, processing a flame plume loop:

And here's the effect running in-game:

Heavy Gotchas

At this stage I should mention a couple of gotchas that we ran into when working with Heavy-generated plugins.

Firstly, out of the box, the Heavy library does not support more than 2 channels. We wanted to instance the effect post-panning on a surround bus, which meant we needed to modify the compiler to support 6 channels. This was done in hvcc's c2wwise.py file and was a quick and easy change. However, we found that the bus hosting the effect had to be limited to 5.1 in the Channel Configuration dropdown in Wwise to avoid serious stability issues, manifesting as crashes when using output devices with more than 6 channels.

Secondly, while it was easy to generate plugin DLLs for use in the authoring tool and the PC version of the game, generating plugins for PlayStation and Xbox was not such an easy process. I was lucky in that I could turn to some of the coders on the team at Blackbird, who managed to configure the targets in Visual Studio and generate the files required for the console platforms. Be aware that if you don't have code support, you'll have a bit of work to do to get your effects working on consoles.

Touch-Transferred Sound

Next up, the system that we called "Touch Transfer". This is the system that makes sounds audible when the sound source is in contact with the player, allowing sounds to be heard even in unpressurized environments. This was an effect inspired by the movie Gravity, which was released a few years before development on Shipbreaker began, but was still a major influence.

The Touch Transfer system was born out of one of those "wouldn't it be cool if…" conversations that spin up constantly during game development. We had our 2D ambient audio and our 3D environmental emitters producing a rich soundscape when inside a pressurized ship, then getting muted when the area became depressurized. This was a nice effect in its own right, but we couldn't help but ask the question "wouldn't it be cool if you could hear those sounds through your suit when you touch the objects emitting them?"

This idea crystallized into three complementary design objectives:

1. To heighten immersion by making the objects in the ship feel like real, vibrating objects full of systems and machinery rather than just empty polygonal boxes.

2. To invite players to touch and feel their way around the ships and create a very tactile experience.

3. To communicate dangers in the environment; for example, noisy fuel pipes and humming electrical hazards.

The sounds we identified as candidates for touch-transfer treatment were:

- 2D ambiences. When touching the structure of the ship itself, the 2D ambiences would be audible through touch.

- 3D environmental emitters. When touching an object, for example, a computer or fuel pipe, the audio emitted from the object would be audible while touching it.

- Physics collisions. When touching a colliding object or a collided surface, the sound of any physics impact would be audible through touch.

There are a couple of other special-case sounds that are set up for Touch Transfer, but the vast majority belong to one of the categories above.

Touch-transferred sound in action in the game

Touch-Transferred Sound Setup

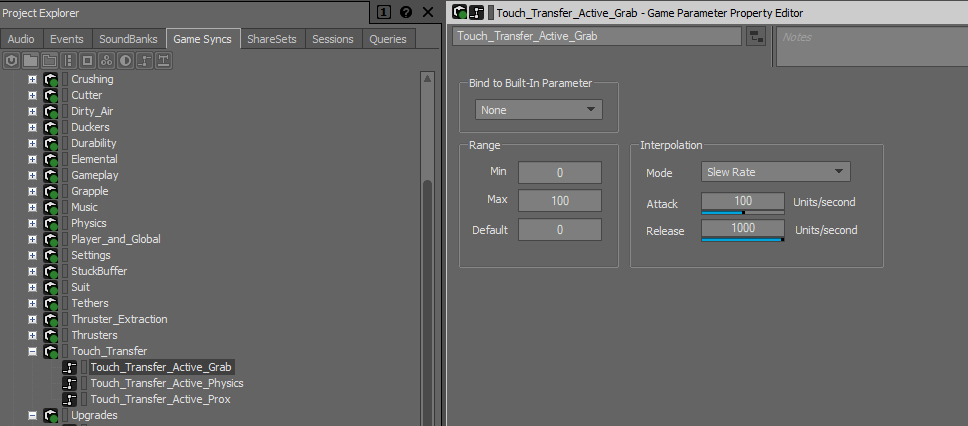

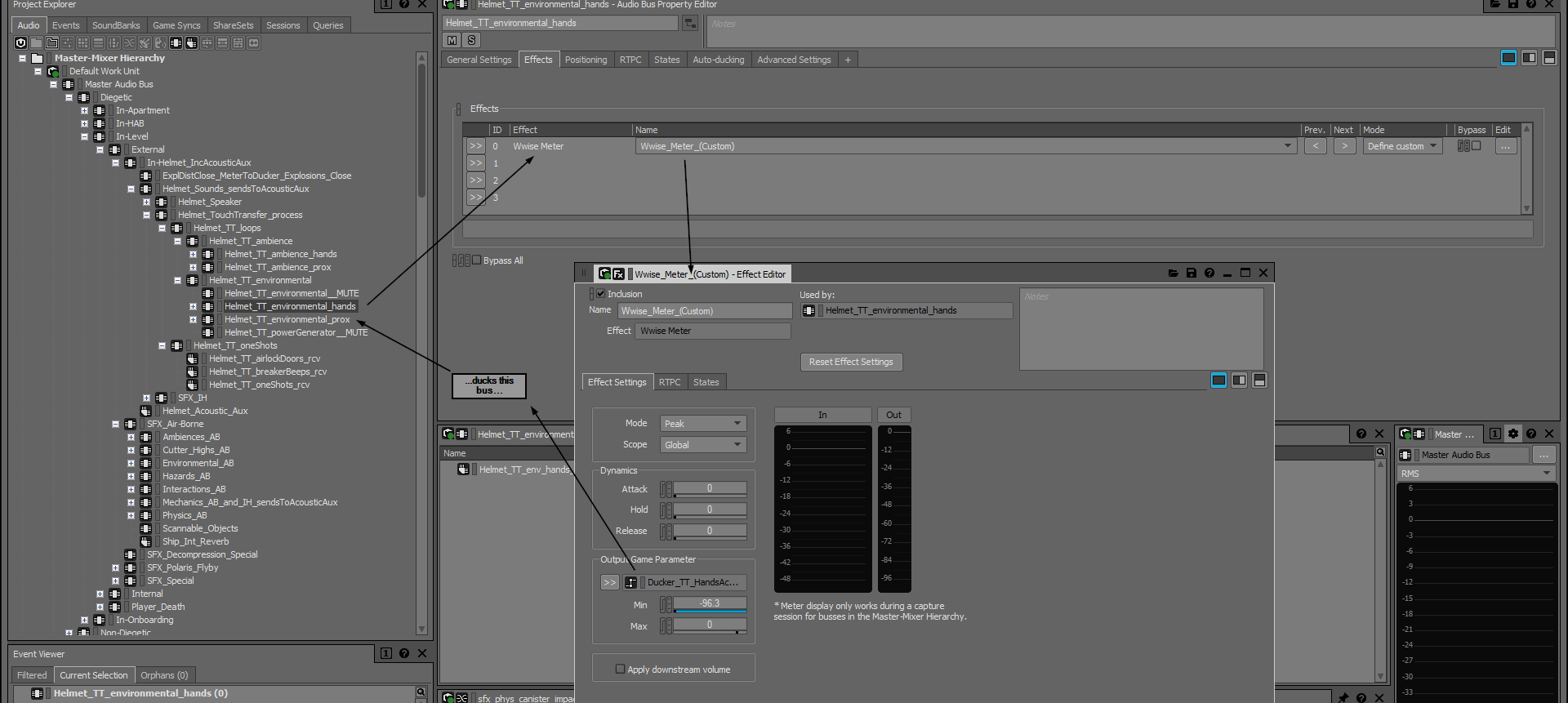

In Wwise, we had three RTPCs that represented the three different ways the player could generate a touch-transferred sound. One RTPC for touching something with the hands, one for touching something with a part of the body (i.e., coming to rest against an object), and one for touching something involved in a physics collision. These were respectively labeled "Grab", "Prox" and "Physics" in Wwise.

The three touch-transfer RTPCs

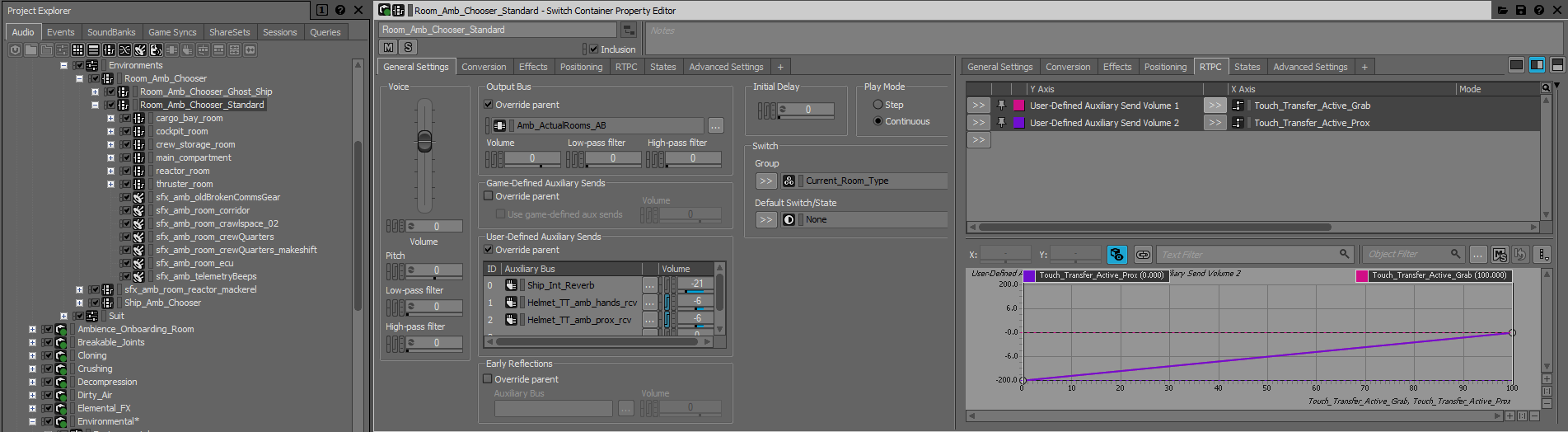

For 2D ambiences, the RTPCs were implemented on a parent actor-mixer node that contained all the 2D ambiences. When a wall or a part of the ship hull was touched, we'd raise the relevant RTPC to 100 and send the sound to the Touch Transfer bus.

2D ambience node with its Touch Transfer RTPCs

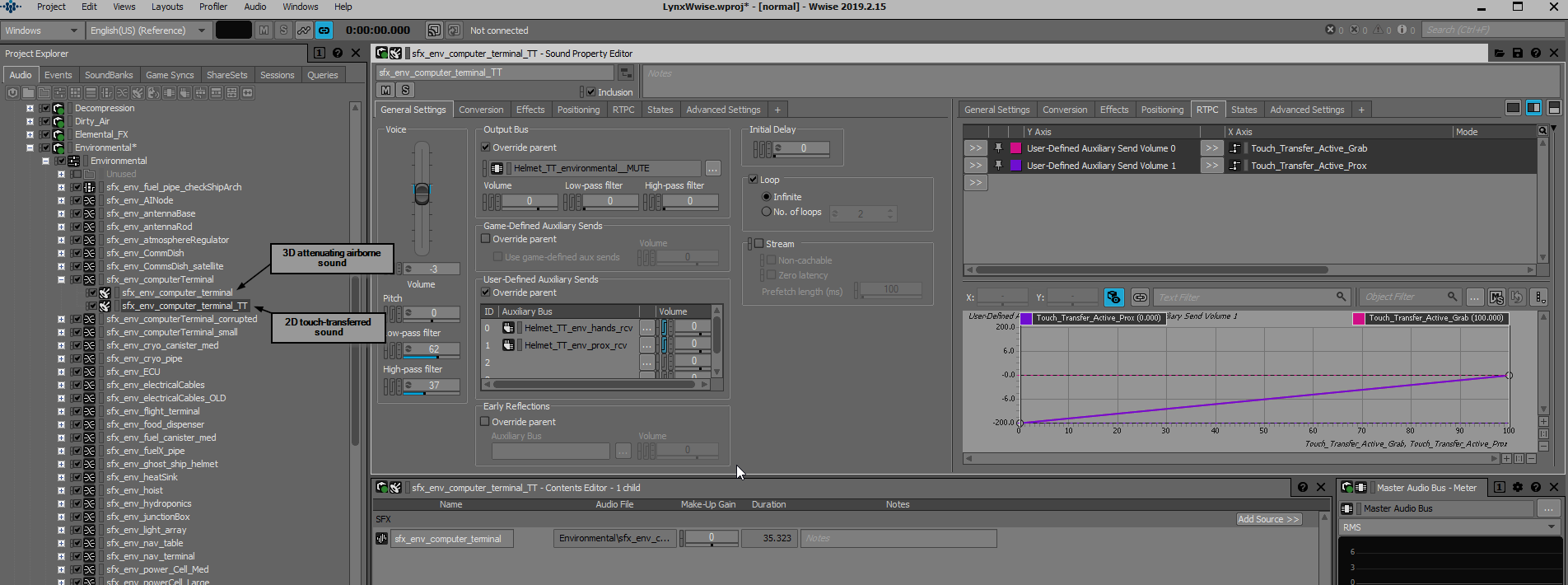

For 3D emitters, it was slightly more complicated because the airborne version of the sound was 3D with an attenuation, but when we sent the sound to the Touch Transfer bus we wanted it to become 2D without any attenuation. For these sounds we played two instances, one 3D attenuating version for pressurized areas, and another 2D version that was inaudible until the Touch Transfer RTPC got raised.

3D emitters configured for Touch Transfer

Physics sounds were handled the same way as 2D ambiences, with the RTPC on a parent node containing all physics sounds.

Separating touch-transferred sounds into "Grab", "Prox" and "Physics" categories allowed us to set up a mixer structure where the sounds get prioritized. Sounds transferred through the hands duck sounds transferred by the player resting against an object. This is because the use of the hands is a positive, deliberate action, whereas coming to rest against an object is passive. One-shot touch-transferred physics sounds are set up to duck both other types of touch-transferred sound. With this configuration, no matter what kind of change in touch-transferred sound occurs, it's always something that can be felt by the player.
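The priority rules can be summarized in a few lines of Python. This is only a model of the ducking relationships described above; the `-12 dB` duck amount and the function name are assumptions, and the real behavior was configured in the Wwise mixer rather than written as code.

```python
DUCK_DB = -12.0  # hypothetical duck amount


def touch_bus_gains(grab_active, prox_active, physics_active):
    """Return the ducking offset (dB) applied to each touch-transfer category.

    Rules modeled from the text:
    - Grab ducks Prox (deliberate action beats passive contact).
    - Physics one-shots duck both Grab and Prox.
    prox_active is accepted for symmetry; Prox ducks nothing.
    """
    gains = {"Grab": 0.0, "Prox": 0.0, "Physics": 0.0}
    if grab_active:
        gains["Prox"] += DUCK_DB
    if physics_active:
        gains["Grab"] += DUCK_DB
        gains["Prox"] += DUCK_DB
    return gains
```

For example, `touch_bus_gains(True, True, False)` leaves Grab untouched and pulls Prox down by the duck amount, so a deliberate grab always reads clearly over passive contact.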

We're happy with the results of this system, particularly when it comes to its use as a gameplay feedback mechanism. There are many dangerous objects in the game into which the player can accidentally cut, causing lethal fireballs or electrical arcs. These objects can be made safe by disconnecting fuel or electrical supplies, so we tied the audio into these systems, stopping the emitter's audio when the relevant resource gets disconnected. This allows the player to check if objects are safe just by touching them.

The following video is an example of touch-transferred sound used as gameplay feedback:

- There are two fuel pipe systems running down either side of the ship.

- The pipes are full of fuel, making them dangerous to cut. The rush of the fuel inside the pipes is audible.

- The fuel flush valves are at the opposite end of the ship from the pipe section that the player wants to cut.

- After depressurizing the ship, the lack of air means it's no longer possible to hear the fuel in the pipes.

- However, the player can touch the pipes to hear which one contains fuel and which one has been flushed.

- After flushing both fuel systems, the player touches both pipes and finds them safe to cut.

Example of using touch-transferred sound to check that a fuel system is safe

Touch Transfer - Under The Hood

The system to keep track of which objects were being touched is fairly involved. Here's our Technical Director, Richard Harrison, with an overview of the code that manages the object tracking:

There are three tiers of how objects are categorized in our ships: First, "Parts": single pieces of the ship. Second, "Groups": small collections of Parts that, in effect, represent a single object (e.g., a mounting plate and an antenna are both Parts of the Group that make up a satellite dish). Parts and Groups can both be 3D SFX emitters. Finally, Hierarchies are collections of all Parts and Groups that are physically connected to one another; if you cut off a chunk of the ship, all Parts or Groups that were cut off from the source, but are still connected to each other, are then assigned their own new Hierarchy.

"Touches" are made by either Grabs (left/right hand) or Proximity, which are tracked separately as they each affect different RTPC values. Grabs are fairly self-explanatory, and proximity detection is handled with a spherical overlap check within a certain radius around the player. If an object falls within that sphere, it's considered "touched" by proximity.

At its core, the Touch Transfer system is a glorified reference counter, where every time we start touching a Part, Group, or Hierarchy, we add 1 to the count for that particular item, and every time we stop touching it, we subtract 1. As long as the number of touches for any Part, Group, or Hierarchy is greater than zero, we can be sure that we are "touching" it. For Parts and Groups that have their count increased above zero, we increase a corresponding Grab or Proximity RTPC on that item. If that Part or Group was emitting 3D SFX, the RTPC now makes that audible in some manner. When the number of touches returns to zero, the corresponding RTPC is reset to zero.
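A minimal Python sketch of such a reference counter is shown below. It is an illustration rather than Shipbreaker's actual code (the game runs in Unity): the `TouchTracker` class, the `set_rtpc` callback, and the choice of 100 as the "touched" RTPC value are assumptions, and the splitting logic is deliberately left out.

```python
from collections import Counter


class TouchTracker:
    """Counts touches per (item, kind) and drives an RTPC on 0 <-> 1 transitions."""

    def __init__(self, set_rtpc):
        # set_rtpc(item, kind, value) is a hypothetical hook into the audio engine.
        self._set_rtpc = set_rtpc
        self._counts = Counter()

    def begin_touch(self, item, kind):
        key = (item, kind)
        self._counts[key] += 1
        if self._counts[key] == 1:  # first touch: make the sound audible
            self._set_rtpc(item, kind, 100.0)

    def end_touch(self, item, kind):
        key = (item, kind)
        if self._counts[key] == 0:  # ignore unmatched releases
            return
        self._counts[key] -= 1
        if self._counts[key] == 0:  # last touch released: reset the RTPC
            del self._counts[key]
            self._set_rtpc(item, kind, 0.0)

    def is_touched(self, item):
        return any(key[0] == item for key in self._counts)
```

Because the RTPC is only written when the count crosses zero, grabbing the same Part with both hands and then releasing one hand leaves the sound audible, which is the behavior a simple on/off flag would get wrong.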

Hierarchies are tracked the same as Parts and Groups, but used differently; Hierarchy RTPCs are only set if the Hierarchy contains the ship's Reactor, which is taken to be the source of the 2D ship ambience. Otherwise the tracking is used to inform the collision system whether we're touching a Hierarchy that has been impacted for transferred physics SFX.

The final complication comes from the splitting and destruction of objects. Our reference counter needs to be smart enough to understand when a Part, Group, or Hierarchy is split, to remove touches from the original item, and reallocate those touches to the appropriate newly created item that is still being touched. The details of how this works rely heavily on Shipbreaker's cutting logic, and are beyond the scope of this article.

Physics Head-Scratcher

Finally, a quick look at Shipbreaker's physics audio system.

On previous projects, physics systems have been based around objects having a "physics audio tag" of some description, which determines what sound should play when the object impacts surfaces. There might be a lookup table to determine what sound should play when two tagged objects impact each other, or there might be child debris objects that spawn when the original object breaks apart, with their own physics tags too. On impact, the collision intensity is passed through as an RTPC, and the combination of the physics tag and the intensity value determines what sound should play. However, on Shipbreaker, we had a unique problem that wouldn't be solved using that traditional approach.

In Shipbreaker, players can pick up large objects like computer terminals and throw them around in a standard "rigid body physics object" way. However, the player can also use the cutting tool to slice the computer in half. Then we have two halves of a computer terminal, which can then be thrown around. So far, not so insurmountable: just use an RTPC to represent the object's mass, and use that RTPC in Wwise to change the "size" of the computer terminal sound. However, the player can use the cutting tool to cut off a wafer-thin slice of the computer terminal, producing a sliver of sheet metal. At this point it shouldn't sound like a computer terminal at all; it should sound like a piece of sheet metal as it bounces around. In other words, at some point that computer terminal should switch from playing one physics sound to playing a completely different one.

Computer terminals inside a ship

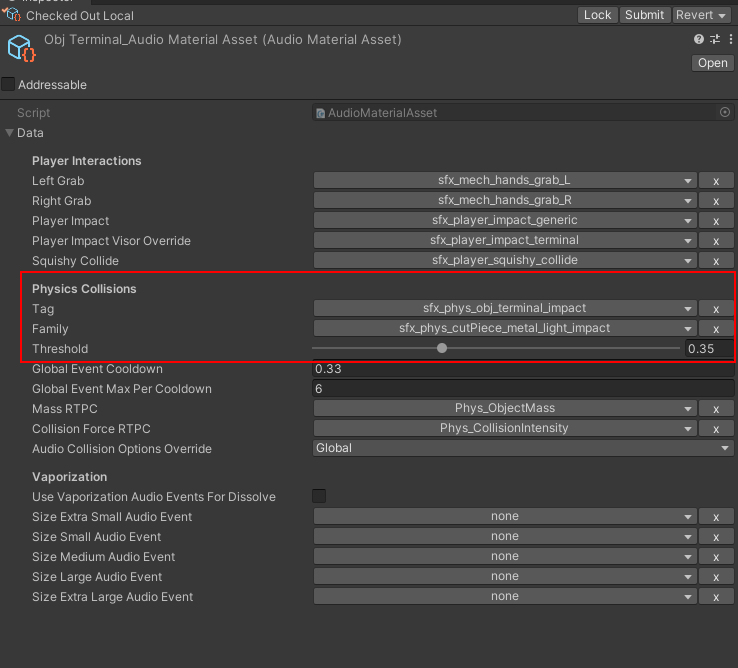

This problem stumped us for a while. How could we make a computer terminal know that it needed to become sheet metal when sliced thin enough? The solution we ended up using was extremely simple. Objects just needed to have two physics tags and be configured with a mass value below which they switch from the first tag to the second.

At this point, I'll copy/paste from the physics audio design spec:

Objects like computer terminals will have an "AudioMaterialData" component. This component will have three fields:

- Tag: The physics audio identifier for this object. A computer terminal might have a value of "object_large_metal_electronic_heavy".

- Family: The generic material group to which the object belongs. The computer terminal might have a value of "metal_light".

- Threshold: A numeric value that represents the percentage mass of the original object below which the object will use its Family value instead of its Tag value.

The Tag can effectively be seen as an override for the Family, as long as the object is above the mass percentage specified in Threshold.

On collision, the absolute value for the object's mass is passed through to Wwise as an RTPC, which is then used by the sound designer to affect the perceived 'size' of the sound. This RTPC is updated and passed through regardless of whether the object is above or below its Threshold value.

The Family and Tag fields are optional:

- If no Tag is present when an object collides, the system should use the Family value, regardless of the Threshold value.

- If no value is present for Family, the object uses its value for Tag until it falls below the Threshold value, after which it produces no sound when it collides. (This may be desirable in some cases.)

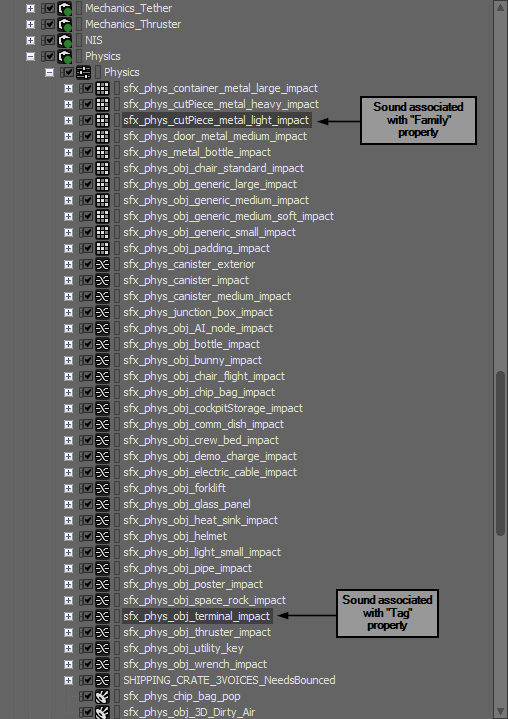

The different values for Tag and Family are assigned different Wwise events; the physics audio system will fire the correct event based on whether the Tag or Family is used.
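The selection rules in the spec can be expressed as a short Python sketch. The field names follow the spec, but the `select_physics_id` function, its signature, and the example threshold of 20 percent are assumptions made for illustration; the shipped system lives in Unity and fires Wwise events rather than returning strings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AudioMaterialData:
    tag: Optional[str] = None      # specific physics audio identifier
    family: Optional[str] = None   # generic material group
    threshold: float = 0.0         # percentage of original mass


def select_physics_id(material, current_mass, original_mass):
    """Return the identifier whose event should fire, or None for silence.

    Assumes original_mass is greater than zero.
    """
    if material.tag is None:
        # No Tag: always use the Family, regardless of Threshold.
        return material.family
    percent_of_original = 100.0 * current_mass / original_mass
    if percent_of_original >= material.threshold:
        return material.tag
    # Below Threshold: fall back to Family (None means no sound).
    return material.family


terminal = AudioMaterialData(
    tag="object_large_metal_electronic_heavy",
    family="metal_light",
    threshold=20.0,  # hypothetical value
)
```

With this data, an intact terminal resolves to its Tag, while a sliver at 5 percent of the original mass resolves to `metal_light`. The absolute mass would still be sent as an RTPC in both cases, as the spec describes.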

Physics audio setup in Unity

Once we'd hit upon this idea, it was plain sailing from there. In Wwise, we set up physics sounds as normal, and in Unity, objects would know when to use one sound and when to use another based on the combination of their Tag/Family values and their mass compared to the Threshold value.

Here's the system functioning in the game:

Hopefully, this has been an interesting look at some of our systems. Shipbreaker is a very systems-intensive game. It includes many more systems we could talk about that didn't make it into this blog post. Perhaps we can go into those in another post at a later date. Thanks for reading!
