Hello. I’m Eddy Liu, the audio designer of the recently released Immortal Legacy: The Jade Cipher. I was responsible for creating the sound effects and interactive music, and for setting up the Wwise project for the game. Immortal Legacy is a PSVR action-adventure/horror shooter featuring a lot of puzzle elements. Because of the particular demands of the VR platform, the audio production differed somewhat from that of conventional games. In this two-part blog series, I’d like to share some ideas on the overall audio design for this project.

Immortal Legacy: The Jade Cipher official trailer

Ambisonics: From Concept to Usage

The ambisonics format was developed in the 1970s under the auspices of the British National Research Development Corporation. However, it was not widely used or commercialized until VR came along. The core idea behind ambisonics is to introduce the third dimension (i.e. height), giving listeners a better perception of the spatial position of sound sources. Conventionally, when sounds are made for stereo or surround systems, panning situates the audio on a horizontal plane. Once we introduce height, the audio is no longer flat, and positioning feels more spatialized. Spatial perception is something the audio industry has long been working towards, and it is an area where Dolby Atmos and DTS:X technologies have been focusing efforts in recent years.

Although ambisonics has been around for some time, it is not widely used in the traditional film and television industry. On one hand, although it provides accurate spatial audio positioning, accurate playback is a real challenge: it places higher requirements on the playback devices and systems installed in theaters, which makes it difficult to commercialize. On the other hand, in traditional films and games, since the screen is usually fixed directly in front of the audience, listeners tend to pay more attention to sound coming from the direction they are facing. In most cases, point sources do not appear frequently to the left, right or rear of the viewer. Meanwhile, human hearing is less sensitive to the vertical direction of a sound source than to the horizontal direction. Even if we add a speaker array overhead, as in the Dolby Atmos system, moving sound objects through the overhead array is still regarded as optional in production. So, in these settings, an omnidirectional sound system like ambisonics has no obvious advantage.

For VR products, ambisonics has natural advantages. Thanks to the panoramic features of VR, we can use ambisonics to create a three-dimensional sphere space, and place the listener in it. Its height control allows the listener to better estimate sound positions in a 3D space. Unlike with traditional panning, ambisonic sounds are played back through one or more speakers in a sphere-like structure. This creates a sound radiation surface, and makes the movement of sounds in different speakers and directions feel smoother and more natural.

However, as mentioned earlier, ambisonics has certain requirements when it comes to playback devices. Ambisonics itself is not limited to a certain speaker array. You can play ambisonic sounds through Stereo, 5.1, 7.1 or other devices. But since most users don’t have a multi-speaker system with 360° or even 720° panning capability, and are still using traditional stereo systems, it will lead to unsatisfactory playback results. So, for the moment, ambisonic sounds in games are mostly played back in the form of HRTF binaural signals for headphones, which are converted from multichannel signals. That's why most VR games recommend that players wear their headphones at the beginning in order to get the best listening experience.

While VR cinepanoramic films can make great use of ambisonics, with VR games, due to unique game interaction and uncertainty on the spatial orientation of emitters, we cannot rely solely on ambisonics live recording assets. So far, commercial audio assets recorded in ambisonics format are mostly static ambiences. For point sources in a 3D space, we need to use the Wwise ambisonics pipeline to re-render sounds in ambisonics format according to their real-time positions, and then feed them to the listener in binaural or other ways.

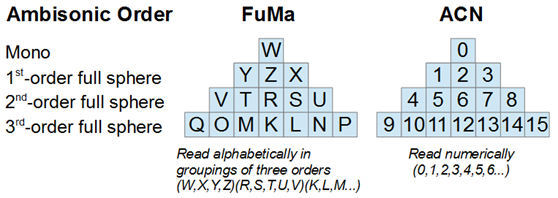

In terms of orders, ambisonics can be divided into 1st order (4 channels), 2nd order (9 channels), and 3rd order (16 channels).

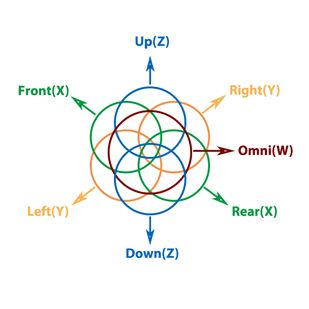
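The channel counts quoted for each order follow from the fact that a full-sphere ambisonic signal of order N has (N + 1)² channels. A minimal sketch of that relationship (the function name is only illustrative):

```python
def ambisonic_channel_count(order: int) -> int:
    """Number of channels in a full-sphere ambisonic signal of the given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


for order in (1, 2, 3):
    # 1st order -> 4 channels, 2nd order -> 9, 3rd order -> 16
    print(order, ambisonic_channel_count(order))
```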

That means that, as the number of channels increases, you can create a more realistic and detailed soundscape and perceive sound positions more accurately. Note that we are talking about the number of ambisonics channels, which has nothing to do with the number of playback channels. Even on a stereo playback device, you will have a much better listening experience with 3rd order ambisonics than with 1st order ambisonics.

In terms of format, ambisonics can be divided into A-Format and B-Format. In short, A-Format refers to the unencoded original tracks recorded with an ambisonics microphone. However, when talking about ambisonics in general, we usually mean B-Format. After encoding an A-Format audio file to 1st order ambisonics, you will get a B-Format file with four tracks (W, X, Y & Z).

- W corresponds to an omnidirectional microphone that records sounds from all directions equally

- X corresponds to a figure-8 microphone that records front and rear sounds only

- Y corresponds to a figure-8 microphone that records left and right sounds only

- Z corresponds to a figure-8 microphone that records up and down sounds only

Ambisonics B-Format Figure

These four tracks (W, X, Y & Z) are not the same as 5.1 or 7.1 channels, but rather they represent recordings of all 3D spatial audio information. Only through B-Format can we get the correct spatial position of the sounds, and then perform processing such as mixing and editing.
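As a rough illustration of what the four tracks carry, a mono sample arriving from a given direction can be panned into first-order B-Format with the standard encoding gains. This is a minimal sketch, not what Wwise does internally. The function name and the angle convention (azimuth measured counterclockwise from the front, elevation measured upwards) are assumptions made for the example, and W is kept at unity gain (FuMa would scale W by 1/√2).

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg):
    """Pan one mono sample into first-order B-Format, returned as (W, X, Y, Z).

    Assumed convention: azimuth 0 is straight ahead and 90 is to the left;
    elevation 90 is straight up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)  # front/back figure-8
    y = sample * math.sin(az) * math.cos(el)  # left/right figure-8
    z = sample * math.sin(el)                 # up/down figure-8
    return w, x, y, z


# A source straight ahead only excites W and X;
# one directly to the left excites W and Y instead.
front = encode_first_order(1.0, 0.0, 0.0)
left = encode_first_order(1.0, 90.0, 0.0)
```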

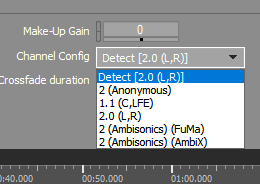

Depending on channel ordering and normalization (the weighting applied to each channel), B-Format can be further divided into several sub-formats. Two are commonly used: Furse-Malham and AmbiX (ACN ordering with SN3D normalization). The former, FuMa for short, is the traditional B-Format and has been widely used. The latter is a newer format that has become popular in recent years, mostly in software and games, and it has better scalability than FuMa. Note that the two differ in channel ordering. Whether the sounds are created through live recording or post-synthesis, you need to confirm whether the B-Format files you are using are in FuMa or AmbiX format so that you can use a compatible decoder to restore them. Otherwise, the sounds' positions will be reproduced incorrectly. When you import a B-Format file into Wwise, remember to manually select the correct format.

Ambisonic Format Select in Wwise
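To see why the wrong format setting scrambles positions, it helps to look at how the two layouts differ at first order: FuMa stores the channels as W, X, Y, Z with W attenuated by 1/√2, while AmbiX stores them in ACN order (W, Y, Z, X) with SN3D normalization. The sketch below converts a single first-order frame. It is an illustration only (the function name is made up), and higher orders need additional per-channel factors that are not shown here.

```python
import math


def fuma_to_ambix_first_order(w, x, y, z):
    """Convert one first-order FuMa frame (W, X, Y, Z) to AmbiX (W, Y, Z, X).

    At first order only W needs rescaling: FuMa attenuates W by 1/sqrt(2),
    whereas SN3D keeps it at unity gain. X, Y and Z are just reordered.
    """
    return (w * math.sqrt(2.0), y, z, x)


# Reading this FuMa frame as if it were AmbiX would swap the axes:
# its X channel (front/back) would be interpreted as Y (left/right).
fuma_frame = (0.5, 0.1, 0.2, 0.3)
ambix_frame = fuma_to_ambix_first_order(*fuma_frame)
```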

Now that we have an understanding of ambisonics, let’s talk about how it is used in VR games. Basically, this can be grouped into two situations:

- Importing existing B-Format audio files, then decoding and playing them in the game

- Importing audio files in any format, rendering them through the Wwise ambisonics pipeline, then playing them

The former is mostly for ambience assets recorded in ambisonics format, while the latter is normally for importing regular audio assets and playing them back in the game after converting them to ambisonics.

Ambience Design

VR games have a significant advantage when it comes to immersion because of the visual impact that the panoramic screen brings to the player. With Wwise, we can import B-Format ambience assets directly and provide a more immersive experience. Today, there are plenty of commercial ambisonics ambience libraries on the market, most of them live recordings of natural environments. If we want a wider range of ambisonics ambiences, we can synthesize them in DAWs (such as Nuendo or Reaper) with suitable B-Format codec plug-ins (such as ReaJS ATK, Matthias AmbiX, Waves ambisonics Tools, and Noise Maker Ambi Head). But these synthesized B-Format sounds do not deliver as good a listening experience as directly recorded ambisonics assets, and they increase production hours and costs. Therefore, in addition to ambisonics ambiences in some game scenes, we also used lots of Quad ambiences and some stereo ambiences. For Quad ambiences, we can easily downmix existing ambience assets from 5.1 to Quad, which is more convenient than processing ambisonics assets. In our tests, we found Quad ambiences good enough to deliver an enjoyable and immersive player experience.

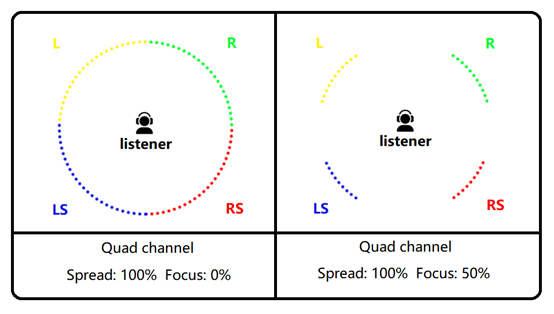
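The 5.1-to-Quad downmix mentioned above can be sketched as follows. This shows one common convention rather than the exact settings used on the project: the channel order (L, R, C, LFE, Ls, Rs), the choice to fold the center into the front pair at -3 dB, and the choice to drop the LFE channel are all assumptions made for the example.

```python
import math

CENTER_GAIN = 1.0 / math.sqrt(2.0)  # about -3 dB, an assumed fold-down gain


def downmix_51_to_quad(frame):
    """Fold one 5.1 frame (L, R, C, LFE, Ls, Rs) down to Quad (FL, FR, RL, RR)."""
    left, right, center, _lfe, left_surround, right_surround = frame
    front_left = left + CENTER_GAIN * center
    front_right = right + CENTER_GAIN * center
    # The LFE channel is discarded; the surrounds pass straight through.
    return (front_left, front_right, left_surround, right_surround)


quad_frame = downmix_51_to_quad((0.1, 0.2, 0.0, 0.9, 0.3, 0.4))
```

In practice the summed fronts may need extra headroom or a limiter to avoid clipping when the center channel is loud.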

In the game, we attached ambiences directly to the player’s camera, so the player hears the ambiences change when turning their head. By adjusting Spread and Focus, we were able to control how smoothly ambiences transition between channels as the head turns. For example, with Quad ambiences, setting 100% Spread and 0% Focus makes the transitions between directions as smooth and subtle as possible. If you want to deliberately highlight ambience changes in different directions, you can increase Focus or even decrease Spread at the same time.

Spread and Focus Demonstration

It’s important to know that even if various formats of ambiences are used in a single project, we can still mix and match these sounds while maintaining the game audio’s overall spatiality and directionality. Let’s say we play a stereo ambience and an ambisonic ambience concurrently. When the player turns their head in the game, they would feel ambience changes in different directions, while the Stereo ambience would provide more sound elements. Stereo also allows us to design audio more easily.

In the game, we designed around 40 ambiences. These ambiences are different in format (Ambisonics, Quad or Stereo), usage (Country Yard, Storm, Heavy Wind, Cave Dripping, etc.) and style (Eerie, Tension, Ancient, Mechanic, etc.). Each of them can be considered a layer. In the game, each scene actually consists of three layers of ambiences which are added layer upon layer as needed. The good thing is that all scenes will be filled with different kinds of ambiences instead of just one. By arranging and blending, we can get many more ambiences from the imported assets. Even if we use the same ambience assets in different scenes, we still get highly unique ambiences by setting Volume for each layer. In the game, ambience events are attached to the player’s head with Attenuation settings disabled.

Immortal Legacy is fairly linear in terms of its audio, so we intentionally grouped ambiences by area. This allowed us to further divide areas into scenes and avoid constantly repeating the same ambiences. We also chose to blend layers. For instance, scene A consists of ambiences 1, 2 and 3. Ambience 2 is the primary one with the highest volume; 1 and 3 are auxiliary ambiences with lower volume. When the player enters the adjacent scene B, the mix changes to ambiences 2, 3 and 4. Ambience 3 becomes the primary ambience, while 2 and 4 become auxiliary. As such, every two adjacent scenes share some of the same ambiences. As long as we split them properly and set an appropriate Cross Fade Time, the ambience transitions between scenes are smooth and pleasing, and the player barely notices any gaps or boundaries.
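The scene A to scene B example can be modeled as a table of per-layer gains plus a crossfade between two rows of that table. The sketch below is plain Python, not Wwise configuration: the layer names, the linear gain values and the linear crossfade curve are all hypothetical, chosen only to show how shared layers keep playing while the primary layer changes.

```python
# Hypothetical linear gains per ambience layer (1.0 = primary, 0.4 = auxiliary).
SCENES = {
    "A": {"amb_1": 0.4, "amb_2": 1.0, "amb_3": 0.4},
    "B": {"amb_2": 0.4, "amb_3": 1.0, "amb_4": 0.4},
}


def blend_scenes(from_scene, to_scene, progress):
    """Return layer gains partway through a linear crossfade (progress in 0..1)."""
    progress = min(max(progress, 0.0), 1.0)
    source = SCENES[from_scene]
    target = SCENES[to_scene]
    gains = {}
    for layer in sorted(set(source) | set(target)):
        start = source.get(layer, 0.0)  # layers absent from a scene are silent
        end = target.get(layer, 0.0)
        gains[layer] = (1.0 - progress) * start + progress * end
    return gains


halfway = blend_scenes("A", "B", 0.5)
```

Because ambiences 2 and 3 exist in both scenes, they never drop to silence during the transition; only ambience 1 fades out and ambience 4 fades in.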

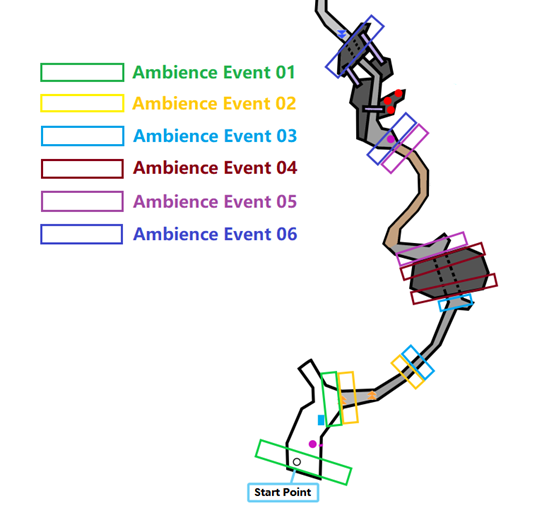

Ambience Layout

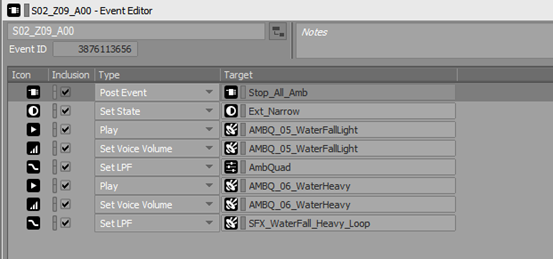

As shown in the above screenshot, each area within a scene consists of two ambience Events. When the player is about to enter the next area, the ambience Event in the current area will be triggered once again before the ambience Event in the next area is triggered. This is to ensure that the correct Event can be called when the player goes back. Let’s take a look at one of the ambience Events as an example:

Ambience Event Demonstration

All ambience Events start with posting a Stop_All_Amb Event:

Stop All AMB Event

This Event is used to stop all ambiences and ensure that they won’t be overlaid when the player constantly triggers the same ambience Events. In other words, the Event will reset these ambiences. So, each time an ambience Event is triggered, it will stop all currently playing ambiences first, then start playing triggered ambiences in the current scene. All Stop and Play actions have a relatively long Fade Time, so that the ambience transitions are more seamless and the player won’t feel any pauses or gaps.
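The stop-then-play pattern can be illustrated with a small model of the Event's action list. This is a toy simulation in plain Python, not a Wwise API: the class, the method names and the fade time are all hypothetical. It only demonstrates that retriggering the same ambience Event never stacks duplicate layers.

```python
class AmbiencePlayer:
    """Toy model of the Stop_All_Amb-then-Play pattern (not a Wwise API)."""

    def __init__(self, fade_seconds=3.0):
        self.fade_seconds = fade_seconds  # hypothetical "relatively long" fade
        self.playing = set()
        self.log = []  # (action, layer, fade) tuples, in the order issued

    def stop_all(self):
        """Fade out every ambience that is currently playing."""
        for name in sorted(self.playing):
            self.log.append(("stop", name, self.fade_seconds))
        self.playing.clear()

    def post_ambience_event(self, layers):
        """Reset all ambiences, then fade in the layers for the current area."""
        self.stop_all()
        for name in layers:
            self.log.append(("play", name, self.fade_seconds))
            self.playing.add(name)


player = AmbiencePlayer()
player.post_ambience_event(["amb_1", "amb_2"])
player.post_ambience_event(["amb_1", "amb_2"])  # retrigger: still two layers
```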

The Benefit of 3D Position Automation

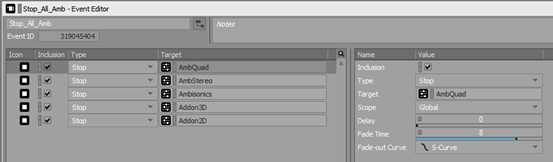

For an entire soundscape, the ambiences mentioned above are only bed elements, which can be thought of as pads. To create a realistic and immersive soundscape, we need more than just ambiences. We have to decorate the environment with more sound elements, such as a ticking clock in the bedroom, crickets in the grass, birds flying by, etc. These sound elements appear to be point sources, but they are also part of the environment. Even when there are no specific game objects to attach these sounds to, we must find a way to emulate them wherever game scenes need them.

For example, there is a dark, moist and spooky catacomb in Immortal Legacy. In this case, a relatively quiet environment can reinforce the mood, but this doesn’t mean that there should be no other sounds at all. Here, we included wood creaking sounds, metal stressing sounds and bug crawling sounds in addition to the ambience sounds. Some of them needed to be attached to game objects in the scene, such as wooden beams and iron frames. Others had to be emulated with Wwise.

Let’s say the producer wants bugs to be heard occasionally crawling past the player in a certain area, and the area is very dark, so it’s difficult for the player to actually see the bugs. Because there won’t be any actual bugs in the game, we don’t have appropriate game objects to attach these sounds to. At this point, we turned to the Wwise 3D Position Automation feature, and it helped us a lot. First, we made a group of bug crawling sounds and created a Random Container. Then, we set this container to Emitter with Automation and manually defined the movement path of these sounds. Next, we specified some random values to ensure that the path is different each time the bugs move.

Path Simulation

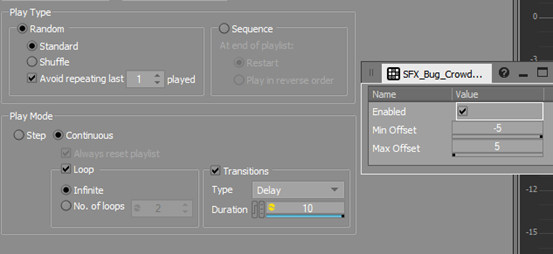

Finally, we set this Container as a looping Event with random intervals and attached it to the player. It is triggered by ambience Events in a specific area with a Post Event.

Loop Randomization

In the end, when players enter that area, they occasionally hear bugs crawling nearby, with a different movement path every time. If we also enable “Hold Emitter Position and Orientation”, then once these sounds are triggered, the players’ own movement changes their position relative to the sounds. This makes the players feel that there are actual bugs.
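The two kinds of randomization described here, a jittered path and a random wait between triggers, can be sketched in a few lines. This is a generic illustration and not how Wwise implements path randomization internally: the base path, the offset range and the interval bounds are all made-up values.

```python
import random

# Hypothetical path points (x, y, z) in metres, relative to the player.
BASE_PATH = [(-2.0, 0.0, 1.0), (0.0, 0.0, 1.5), (2.0, 0.0, 1.0)]


def randomized_path(base_path, max_offset, rng):
    """Return a copy of the path with every coordinate nudged by up to max_offset."""
    return [
        tuple(coord + rng.uniform(-max_offset, max_offset) for coord in point)
        for point in base_path
    ]


def next_trigger_delay(min_seconds, max_seconds, rng):
    """Pick how long to wait before the bug sounds play again."""
    return rng.uniform(min_seconds, max_seconds)


rng = random.Random(0)  # seeded only so that the example is repeatable
path = randomized_path(BASE_PATH, 0.5, rng)
delay = next_trigger_delay(5.0, 15.0, rng)
```

Each call produces a slightly different path around the same base shape, so the bugs always pass near the player but never along exactly the same route.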

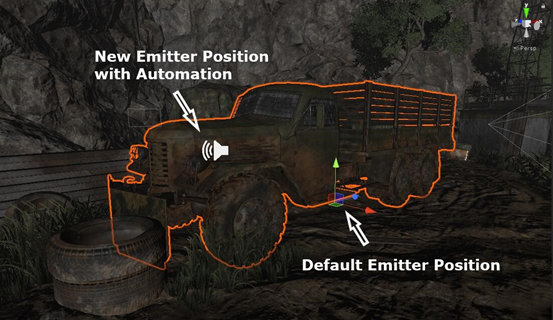

The 3D Position Automation feature can also solve many other problems effectively. Suppose there is a truck idling in neutral. Since the center point of the truck model is not at the front of the truck, the engine sound actually comes from the lower middle part of the model if we attach the sound to it. In this case, we can use Emitter with Automation to manually move the emitting position to the front of the truck. We don’t have to ask our programmers or artists to solve the problem, which saves us a lot of time.

Engine Sound Position

In the video below, there is a huge statue in the scene. When the mechanism is triggered, we can hear unlocking sounds in different directions. These emulated sounds are all centered around the statue. They can be used to simulate the interior of the wall or a game object in the distance which has no physical model.

Unlock Sound by 3D Position Automation

Cut-scene Design

Game cut-scenes can be either pre-rendered or rendered in real time. Likewise, we can implement cut-scene sounds either with pre-made tracks or by calling Events in real time from the game code. The former method is similar to the production of traditional films and TV series: we only have to create an audio file that matches the animation content. In this case, all mixing work is done in advance, and sound properties like content, proportions and directions cannot be changed in game. Setting aside game size and memory usage, this method has two disadvantages:

a) We would have to rework audio assets if the animation content or edit points were adjusted many times.

b) During longer playback, there could be unexpected hitches (stutters) depending on the machine's performance, which would throw the visuals and sounds out of sync.

We can avoid these issues by triggering cut-scene sounds through real-time game engine calls. However, mixing becomes a big challenge when cut-scene sounds are called in real time. And, with complex cut-scenes, productivity drops if we have to chop post-production tracks into individual sound Events.

For Immortal Legacy, there are more complicated issues. Since the cut-scenes in the game are displayed in real time on a panoramic screen, players may turn their heads or even move their bodies while the animation is playing. This means that the direction and distance of all sounds have to be adjusted according to the players’ movement. In the end, we combined the two methods for the cut-scene design. Based on each character’s conversation and movement, we created dialogue and animation tracks, attached them to the characters, and played them in sync with the animation. As such, we were able to ensure that all cut-scene sounds were still 3D in the game. Other, less significant sounds in the animation (such as a door closing or an item falling) and sounds that need to be triggered frequently (such as footsteps and gunshots) can still be called in real time through the game engine. This reduced our production hours and simplified mixing. After testing, we found that there was almost no hitching on PS4 when we used the Stream - Zero Latency feature provided in Wwise. This also relieves the memory pressure caused by long pre-rendered audio files.
