Overview

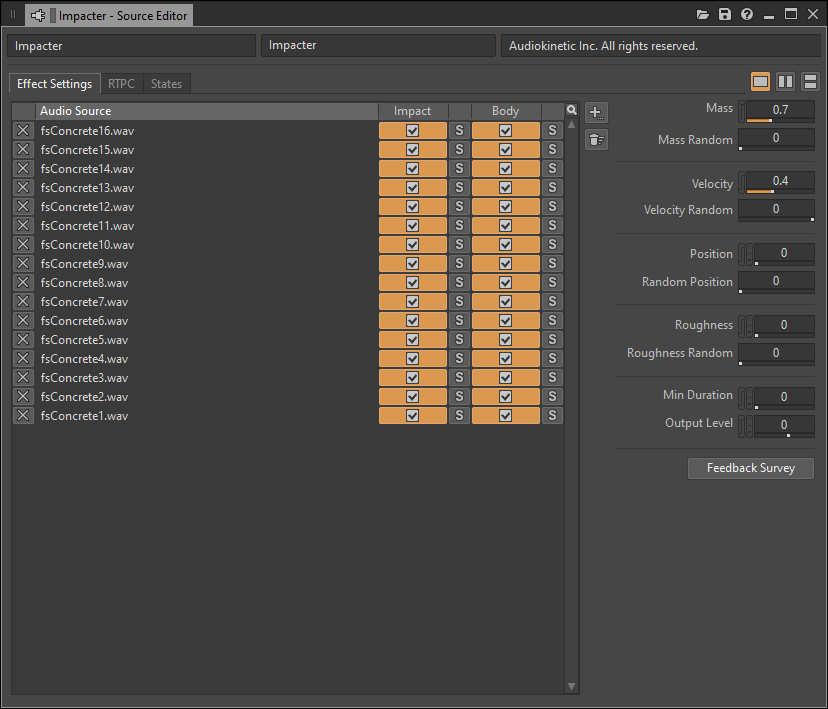

Impacter is a new source plug-in inspired by the spirit of the original SoundSeed Impact plug-in. It allows designers to load “impact” sound files into the authoring tool, where they are analyzed and saved as a synthesis model. The runtime plug-in can then reconstruct the original sound or, more interestingly, generate sound variations controlled by intuitive, physically informed parameters and cross synthesis.

The mass, velocity, and position parameters model, respectively, the size of the impacted object making the sound, the force of the impact, and the acoustic response at the point where the object is struck. Each parameter can also be randomized.

To manage cross synthesis, multiple sound files can be loaded, and the plug-in will randomly combine “Impact” and “Body” components of different sounds (more on this below). It is possible to individually include or exclude each file’s impact or body from the random selection should there be specific combinations of sounds the designer wants to use.

While the workflow is similar to the first Impact Plug-in, we’ve made a few quality of life improvements. The plug-in no longer requires an external analysis tool, as the Wwise authoring tool handles audio analysis in the background. Cross synthesis combinations were possible in the original Impact, but there was some risk of sound instability and huge changes in gain, whereas Impacter is stable across any cross synthesis combination. The UI has also been made more conducive to working with groups of sounds, even leveraging random playback selection to function like an extended random container in some cases. The parameters were also carefully designed to be more physically meaningful and intuitive with respect to the sounds they are treating.

Motivations

The development of Impacter grew out of research exploring methods for applying creative sound transformations on compressed audio. Creating an audio representation that was broadly and generally useful for manipulation proved to be quite difficult, as more flexibility often compromised the potential for rich transformations.

Focusing on a specific domain of sounds, however, allowed for the potential to deliver more expressive sound transformations. Impact-type sounds became an inspiring first choice, as they could be controlled with physically intuitive parameters. Complementing our work in spatial audio, where the goal is to provide the sound designer with creative control over the behaviour of acoustics (physics of sound propagation), we imagined a synthesizer that allowed the sound designer to work similarly with the sound produced by physical events such as collisions.

We hope novelty arises in allowing the designer to work with existing sound material as opposed to synthesizing from scratch. This way they can apply game physics type transformations to their favourite foley recordings! We are also well aware that most great sample libraries and recordings do not provide impact sounds in groups of one, and it was important to develop a workflow that would leverage working on groups of footsteps, bullets, collisions etc. Cross synthesis is a useful way to allow the sound designer to work with collections of these impact sounds, and explore the space of variations between them.

Definitions

Before we talk about Impacter in greater detail, it’s useful to establish some definitions and concepts related to how the underlying algorithms of the plug-in function:

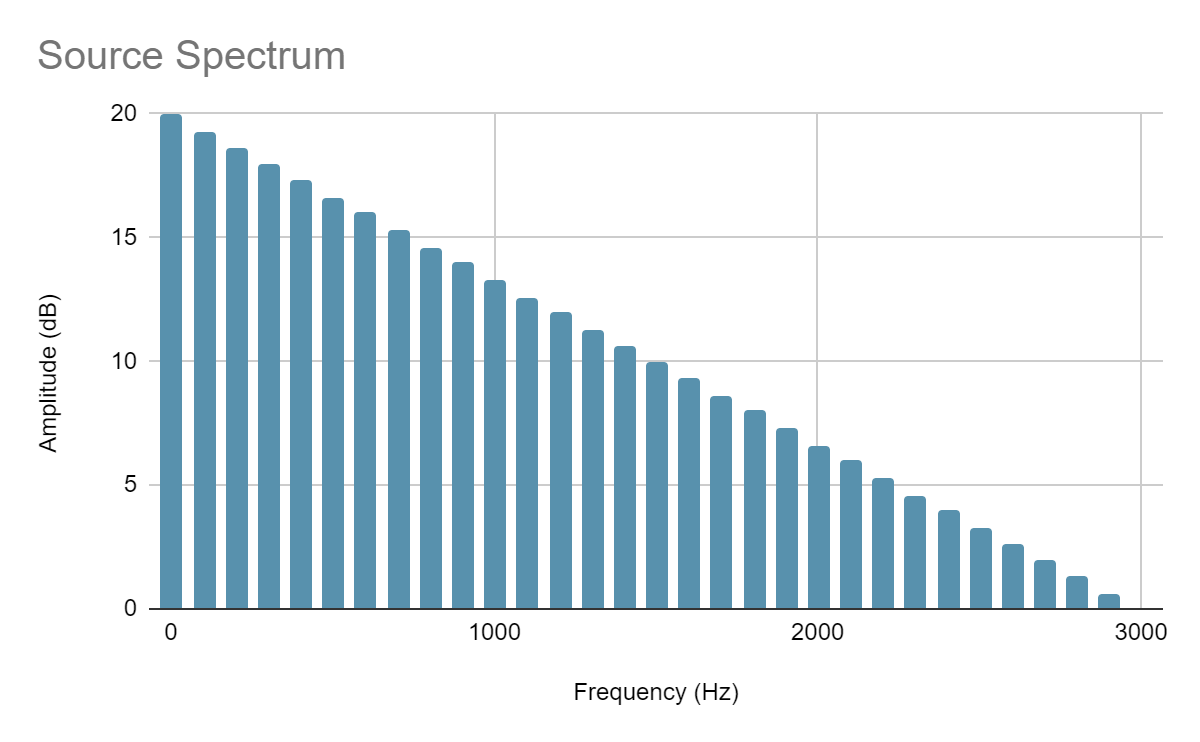

A source-filter model is a sound synthesis model based around an excitation source signal being passed through a filter. Speech or voice is commonly modeled using a source signal and a filter, where a glottal pulse excitation (air passing through the vocal cords) is shaped into vowel sounds using a filter (the vocal tract). There are classic analysis methods, such as Linear Predictive Coding, that deconstruct an input signal into a filter and a residual excitation signal.

Figure 1. An example of a source spectrum and the filter function that shapes it. The source spectrum corresponds to the glottal pulse excitation and the shape of the filter function corresponds to the shape of the vocal tract. (Open image in new tab for full size.)
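To make the idea of deconstructing a signal into a filter and a residual excitation more concrete, here is a minimal Linear Predictive Coding sketch in plain Python. It uses the textbook autocorrelation method with the Levinson-Durbin recursion; the function names (`lpc`, `residual`, `synthesize`) and the toy two-pole "body" are assumptions made for illustration and are not taken from Impacter.

```python
def lpc(signal, order):
    """Estimate an all-pole filter A(z) = 1 + a1*z^-1 + ... with the
    autocorrelation method and the Levinson-Durbin recursion."""
    n = len(signal)
    r = [sum(signal[i] * signal[i + lag] for i in range(n - lag))
         for lag in range(order + 1)]
    a = [1.0] + [0.0] * order
    err = r[0]
    for m in range(1, order + 1):
        acc = r[m] + sum(a[j] * r[m - j] for j in range(1, m))
        k = -acc / err                      # reflection coefficient
        prev = a[:]
        for j in range(1, m):
            a[j] = prev[j] + k * prev[m - j]
        a[m] = k
        err *= 1.0 - k * k                  # remaining prediction error
    return a


def residual(signal, a):
    """Inverse-filter the signal with A(z); what is left is the excitation."""
    return [sum(a[j] * signal[i - j] for j in range(len(a)) if i - j >= 0)
            for i in range(len(signal))]


def synthesize(excitation, a):
    """Run an excitation back through the all-pole filter 1/A(z)."""
    out = []
    for i, e in enumerate(excitation):
        feedback = sum(a[j] * out[i - j] for j in range(1, len(a)) if i - j >= 0)
        out.append(e - feedback)
    return out


# Toy "body" (hypothetical): a two-pole resonator ringing after a single click.
click = [1.0] + [0.0] * 199
ringing = synthesize(click, [1.0, -1.3, 0.8])

coeffs = lpc(ringing, order=2)            # the filter
excitation = residual(ringing, coeffs)    # the residual excitation
rebuilt = synthesize(excitation, coeffs)  # source passed through the filter
```

Passing the residual back through the estimated filter rebuilds the input, which is the same source-plus-filter split described above, here at a very small scale.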


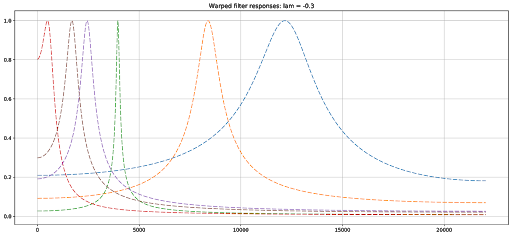

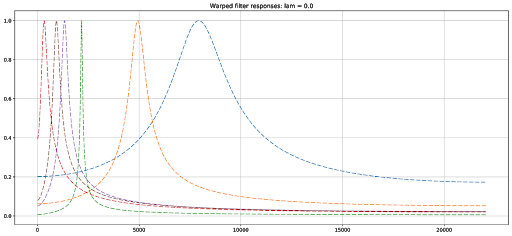

Frequency warping is a non-uniform scaling in the frequency domain (spectrum). It takes frequencies on the linear scale and redistributes them along some other scale, often compressing the lower portion of the spectrum towards 0 and stretching the higher portion across to fill the remaining space, or vice versa. Warping can be applied directly to the frequencies of a collection of sinusoids, or to the coefficients of a filter which correspond to a curve in the frequency domain.

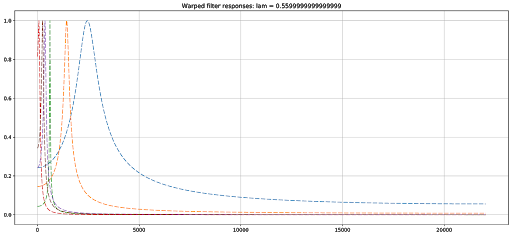

Figure 2. An example of typical filter responses used in Impacter being shaped by warping. (Open image in new tab for full size.)
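As a rough illustration of warping applied to the frequencies of a collection of sinusoids, the sketch below uses the phase response of a first-order allpass filter, a common textbook warping curve. Whether Impacter uses this particular curve is not stated here, so treat the function `warp_frequency` and its `lam` parameter as assumptions.

```python
import math


def warp_frequency(freq_hz, sample_rate, lam):
    """Map a frequency through a first-order allpass warping curve.

    lam in (-1, 1): negative values compress the low end towards 0 Hz,
    positive values stretch it upwards. 0 Hz and Nyquist stay fixed.
    """
    if not -1.0 < lam < 1.0:
        raise ValueError("lam must lie strictly between -1 and 1")
    omega = 2.0 * math.pi * freq_hz / sample_rate
    warped = omega + 2.0 * math.atan2(lam * math.sin(omega),
                                      1.0 - lam * math.cos(omega))
    return warped * sample_rate / (2.0 * math.pi)


# Hypothetical partials of an impact sound, warped towards the low end.
partials_hz = [220.0, 440.0, 880.0, 1760.0]
lowered_hz = [warp_frequency(f, 48000, -0.3) for f in partials_hz]
```

Because the scaling is non-uniform, the warped partials no longer keep their original frequency ratios, which is what distinguishes warping from a plain pitch shift.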


For simplicity's sake, it can be understood that the activation of different acoustic modes on a physical material corresponds to the presence of energy in different frequencies. In the case of a synthesis algorithm like Impacter, the position and gain of individual filter bands and sinusoids in the synthesis algorithm are affected. Borrowing from physics, the intuition about acoustic modes is that materials which are being excited (struck by an impact) will have modes that are activated depending on where the impact occurs on their surface. An impact at the center of a membrane, for example, will activate the fewest available acoustic modes, while an impact near the edge will activate more modes. When it comes to parametric control of this behaviour, as we’ll see below, it is often enough to just model the distance from the center of a surface. In a simplified model, any point on a circle around the center (that is, any point at the same distance from it) will have approximately the same acoustic behaviour. This will be useful for understanding how the position parameter works in Impacter.

Furthermore, the size of an object is correlated to how many acoustic modes it may contain. A larger object will contain more and lower frequency acoustic modes, which can produce more spectral density if excited at different positions. Generally, we perceive an increase in size as a lowering in pitch due to new lower frequency modes changing the fundamental frequency of the impact sound. Research [1] has shown that warping provides a useful approximation to changing the size of an object by reproducing the increasing density of modes in the low end of the spectrum, but for a fixed number of modes generated by a synthesis algorithm. This turns out to be a useful way to model the effect of changing size without requiring the algorithm to do more work to synthesize more modes.
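The relationship between radial distance and the number of active modes can be sketched with a deliberately simple rule. Everything here is hypothetical: the function `active_modes`, the linear mapping from position to mode count, and the choice to keep the strongest modes first are assumptions for illustration, not a description of how Impacter selects modes.

```python
def active_modes(mode_gains, position, min_active=1):
    """Return the indices of the modes left switched on.

    position: 0.0 is the center of the surface, 1.0 is the edge.
    Assumed rule: the center keeps only `min_active` modes and the count
    grows linearly with distance until every mode is on at the edge.
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0 and 1")
    total = len(mode_gains)
    if total == 0:
        return []
    floor = min(min_active, total)
    count = floor + round(position * (total - floor))
    # Assumption: the strongest modes are the last ones to be switched off.
    strongest_first = sorted(range(total), key=lambda i: mode_gains[i],
                             reverse=True)
    return sorted(strongest_first[:count])


gains = [0.9, 0.2, 0.6, 0.1, 0.4]   # made-up gains for five modes
center = active_modes(gains, 0.0)
halfway = active_modes(gains, 0.5)
edge = active_modes(gains, 1.0)
```

Only a single scalar is needed because, in the simplified model, every point at the same distance from the center behaves alike.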

Synthesis & Analysis

Impacter’s synthesis algorithm is based around combining a sinusoidal model and a source-filter model. Put another way, any original input sound is reconstructed at runtime using a combination of sinusoids and an excitation signal running through a bank of filters. Conceptually, we relate the excitation signal from the source-filter model to the “impact” component of Impacter, and the source-filter model’s filter bank together with the sinusoids to the “body”.

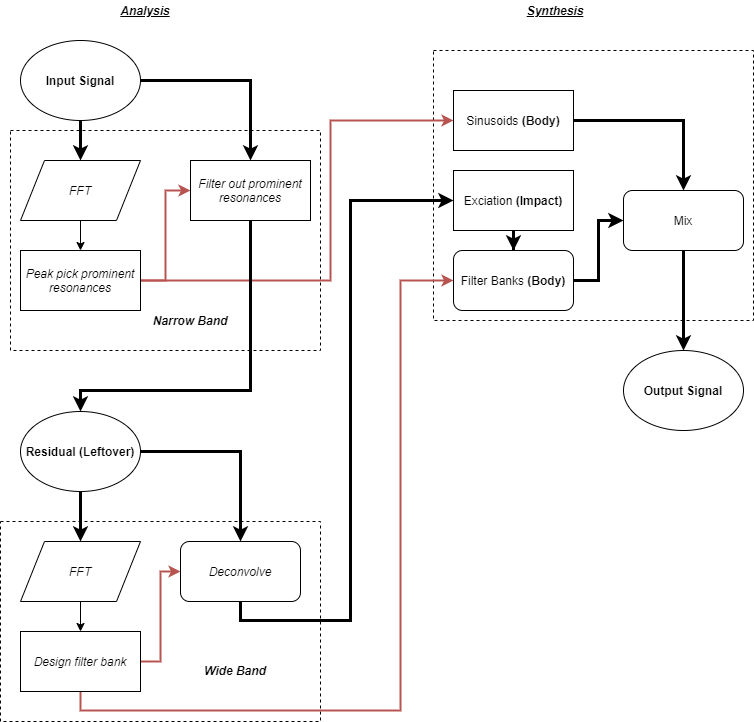

The analysis under the hood of Impacter’s authoring plug-in is based around a two-stage algorithm, which first extracts the frequency and envelope information for the sinusoidal synthesis model, then extracts a bank of filters and a leftover excitation to use in the source-filter synthesis model.

The first stage, “narrow band” analysis, performs peak picking on the input sound spectrum to find the most resonant frequencies of the impact. As these resonant peaks are filtered from the sound to produce a first residual, their frequencies and envelope information are stored as parameters for the sinusoidal synthesis model. Next, the narrow band residual is passed to the “wide band” stage, where the residual spectrum is used to design a bank of filters for the source-filter model. The final excitation signal is produced by deconvolving the residual with the filter bank.
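The peak-picking step of the narrow band stage can be illustrated with a small sketch. It only covers locating the strongest spectral peaks; filtering them out, tracking their envelopes, and the wide band stage are not shown. The naive DFT, the helper names, and the synthetic test signal are all assumptions made for the example.

```python
import cmath
import math


def magnitude_spectrum(signal):
    """Naive DFT magnitudes for bins 0..N/2 (fine for a short example)."""
    n = len(signal)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(signal)))
            for k in range(n // 2 + 1)]


def pick_peaks(magnitudes, count):
    """Return the bin indices of the `count` strongest local maxima."""
    peaks = [k for k in range(1, len(magnitudes) - 1)
             if magnitudes[k] > magnitudes[k - 1]
             and magnitudes[k] >= magnitudes[k + 1]]
    peaks.sort(key=lambda k: magnitudes[k], reverse=True)
    return sorted(peaks[:count])


# Synthetic "impact": two decaying resonances at 500 Hz and 1500 Hz.
SAMPLE_RATE = 8000
N = 256
signal = [math.exp(-20.0 * i / SAMPLE_RATE)
          * (1.0 * math.sin(2 * math.pi * 500 * i / SAMPLE_RATE)
             + 0.6 * math.sin(2 * math.pi * 1500 * i / SAMPLE_RATE))
          for i in range(N)]

peak_bins = pick_peaks(magnitude_spectrum(signal), count=2)
peak_hz = [k * SAMPLE_RATE / N for k in peak_bins]
```

In this toy case the two detected peaks land on the two resonances that were put into the signal; those frequencies are the kind of data that would be stored for the sinusoidal model.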

Figure 3. A system diagram of the underlying analysis and synthesis processes used in the Impacter.

Resynthesis

All the work the analysis algorithm does in the authoring tool leaves very little work for the synthesis algorithm to perform during game runtime: mixing a handful of sinusoids with the excitation signal passed through a biquad filter bank - no FFTs required! And rest assured, the reconstruction of the original input sound is perfect, so no part of the input sound is lost.
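The runtime mix described above can be sketched in a few lines of plain Python. This is not Impacter's code: the band-pass design follows the widely used Audio EQ Cookbook formulas, and summing the filters in parallel, the exponential envelopes, and every parameter value are assumptions chosen for illustration.

```python
import math


def bandpass_coeffs(center_hz, q, sample_rate):
    """Band-pass biquad (constant 0 dB peak gain), normalized so a0 = 1."""
    w0 = 2.0 * math.pi * center_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha
    return (alpha / a0, 0.0, -alpha / a0,
            -2.0 * math.cos(w0) / a0, (1.0 - alpha) / a0)


def biquad(signal, coeffs):
    """Direct form I biquad."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def resynthesize(excitation, bands, partials, sample_rate):
    """Mix the filtered excitation with a handful of decaying sinusoids.

    bands:    list of (center_hz, q), summed in parallel (an assumption)
    partials: list of (freq_hz, amplitude, decay_per_second)
    """
    n = len(excitation)
    out = [0.0] * n
    for center_hz, q in bands:
        coeffs = bandpass_coeffs(center_hz, q, sample_rate)
        for i, y in enumerate(biquad(excitation, coeffs)):
            out[i] += y
    for freq_hz, amp, decay in partials:
        for i in range(n):
            t = i / sample_rate
            out[i] += amp * math.exp(-decay * t) * math.sin(2.0 * math.pi * freq_hz * t)
    return out


# Hypothetical model: a click excitation, two filter bands, two partials.
click = [1.0] + [0.0] * 479
sound = resynthesize(click,
                     bands=[(800.0, 4.0), (2500.0, 6.0)],
                     partials=[(440.0, 0.5, 30.0), (1320.0, 0.3, 45.0)],
                     sample_rate=48000)
```

Everything here runs in the time domain with a few multiply-adds per sample and per band, which is why no FFT is needed at runtime.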

Transformations

This hybrid synthesis model provides many different transformations to shape the timbre of the sound during synthesis, independently or together.

1. The coefficients in the filter bank and the frequencies of each sinusoid can be warped to non-uniformly affect the timbre and perceived pitch of the impact.

2. The excitation signal can be resampled (pitched up or down) independent of the spectral shape of the filter bank or positions of the sinusoids.

3. The envelopes of each sinusoid can be stretched or dampened to match the length of the resampled excitation, or to further decouple the resonances for the impact sound.

4. Specific sinusoids and filter bands can be selectively turned on or off, providing intuitive control over level of detail, or even a mapping that models the excitation of acoustic modes with respect to position.

5. FM (Frequency Modulation) can be applied to each of the sinusoids. Research has shown mappings of FM parameters that can be used to control the perceptual effect of roughness in impact sounds. [2]
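To give a feel for resampling the excitation and then matching a sinusoid's envelope to the new length, here is a small sketch. Linear interpolation and the simple "scale the decay rate by the same ratio" rule are assumptions for illustration; the function names are hypothetical.

```python
def resample_linear(signal, ratio):
    """Resample by linear interpolation.

    ratio > 1 reads through the signal faster (higher pitch, shorter sound),
    ratio < 1 reads through it slower (lower pitch, longer sound).
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    if not signal:
        return []
    out_len = max(1, int(len(signal) / ratio))
    out = []
    for i in range(out_len):
        pos = i * ratio
        i0 = int(pos)
        frac = pos - i0
        i1 = min(i0 + 1, len(signal) - 1)
        out.append((1.0 - frac) * signal[i0] + frac * signal[i1])
    return out


def match_decay(decay_per_second, ratio):
    """Assumed rule: an excitation shortened by `ratio` pairs with an
    envelope that decays `ratio` times faster."""
    return decay_per_second * ratio


excitation = [0, 1, 2, 3, 4, 5, 6, 7]          # stand-in sample values
pitched_up = resample_linear(excitation, 2.0)   # half as long
pitched_down = resample_linear(excitation, 0.5) # twice as long
faster_decay = match_decay(30.0, 2.0)
```

Because only the excitation is resampled, the filter bank and sinusoid frequencies stay where they are, which is what keeps this transformation independent of the spectral shape.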

Sound Examples

Transformations 1, 2, and 3 are all controlled by the mass parameter in Impacter.

Mass:

Transformation 4 is used in different ways to provide the velocity and position controls.

Velocity:

In the case of position, the parameter ranges from 0 to 1 and behaves like the radial distance from the center of the impacted surface.

Position:

Lastly, transformation 5 was the basis for the roughness parameter.

Roughness:

Cross Synthesis

The Impacter plug-in supports the loading and synthesis of multiple sound file sources, and the hybrid sinusoidal-source-filter model can cross synthesize different model components from each sound source. While there are many different ways to combine the components of the synthesis model, the most successful cross synthesis result was found to be the excitation of one file - the 'impact' - combined with the filter bank and sinusoids - the 'body' - of another. Thus Impacter’s UI allows for such a selection of impact and body.

Wwisdom

A warning: combining incongruent sounds will often result in sinusoidal ringing that sounds disjoint from the impact, either because the narrow band peaks have little overlap or relation with the impact spectrum, or because the impact does not contain spectral energy that is emphasized by the filter bank. Cross synthesis seems to work best when permuting sounds from a similar group. A set of n footsteps from a foley session can quickly be extended to n² microvariations before parameter changes.
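The n² count comes from pairing every impact with every body, including a file's impact with its own body. The sketch below enumerates those pairs and honours per-file include or exclude switches; the function name, the dictionary-based switches, and the file names are hypothetical and do not reflect the plug-in's actual data model.

```python
import itertools
import random


def cross_synthesis_pairs(files, impact_enabled=None, body_enabled=None):
    """List every (impact, body) pair allowed by the include/exclude flags.

    Files missing from a flag dictionary are treated as enabled.
    """
    impact_enabled = impact_enabled or {}
    body_enabled = body_enabled or {}
    impacts = [f for f in files if impact_enabled.get(f, True)]
    bodies = [f for f in files if body_enabled.get(f, True)]
    return list(itertools.product(impacts, bodies))


footsteps = ["step_01", "step_02", "step_03"]

all_pairs = cross_synthesis_pairs(footsteps)            # 3 x 3 pairs
fewer_pairs = cross_synthesis_pairs(
    footsteps, body_enabled={"step_03": False})         # 3 x 2 pairs

# One random pick per playback, as a random container would do.
impact, body = random.choice(all_pairs)
```

Excluding a single body removes a whole column of combinations at once, which is a quick way to rule out pairings that produce the disjoint ringing mentioned above.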

Conclusion and Uses

Impacter was built with the goal of providing new experimental ways to design sounds for game audio. Focusing on a specific class of sounds (impacts) allowed us to build a plug-in that can be used in multiple ways. For a group of sounds where variations are needed, the random selection of permutations thanks to cross synthesis, on top of Impacter’s internal parameter randomization, allows for an extended random container-like behaviour. If the designer has access to RTPCs and events affected by in-game physics (collisions, velocity, size), they can intuitively map them to physically-informed transformations on their Foley sounds.

While we are offering Impacter to our users as an experiment or a prototype, we are absolutely confident in its engineering and QA. Impacter should fit seamlessly into your authoring workflow without hiccups or crashes, and its game runtime is a lightweight and stable synthesis algorithm that won’t break your memory or CPU budget.
