If we imagine that a theater set is comparable to a virtual game world, couldn’t Wwise bring a stage to life? In fact, it did so beautifully on Le Léthé. Although Audiokinetic’s Wwise middleware was initially designed for games, it may be as powerful for theater sound designers as it is for game audio designers.

Prior to working on Le Léthé, I had experience working with Wwise for games, but none at all in theater. This project was an opportunity to confirm my belief that a game audio mindset and workflow (i.e. a granular, event-based approach) can be applied effectively to a theater play. Beyond the technical side of things, theater and games share some similarities in where and how action and narration unfold, whether in a game or on stage, so the approach seemed to make sense.

I hope that theater sound designers with no middleware experience will find this article helpful. From my experience on this project, I believe game audio middleware can enable them to build adaptive soundscapes, music, and playback systems that are rock-solid and incredibly easy to use during shows.

The project

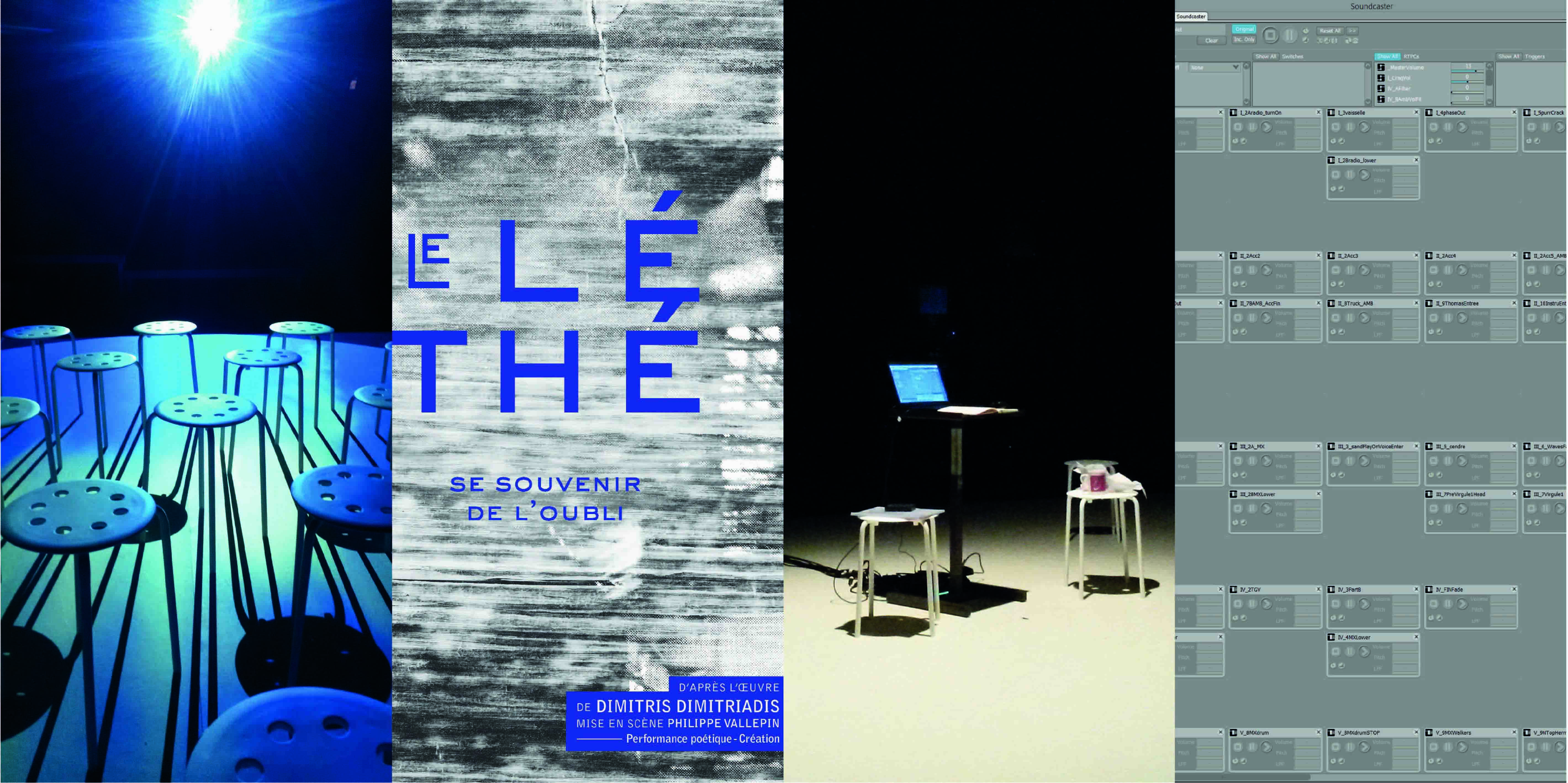

Le Léthé is a play about memory and forgetfulness, directed by Philippe Vallepin and adapted from a 2000 book by Dimitris Dimitriadis.

From the beginning of production, the director knew that audio would play an important role, as it would help pace and structure the text (which contains many inversions and repetitions of words).

I was initially brought onto this project to design “sound textures,” but as we progressed, I was given almost complete freedom over the content, how much sound there would be, and how it would play back.

Production Work Method

I worked in Reaper with video recordings of rehearsals, and from there I spotted where Wwise Events would be triggered. I then designed and composed a short “static” version of the soundtrack and mixed the stems in 7.1 with Ircam’s Spat (each act happens in a different corner of the auditorium). The sound design and music compositions were then broken down and exported as smaller segments and layers. Finally, the real-time designs were built in Wwise and played back with a Soundcaster session.

I would say that one third of the time was spent gathering field recordings, working with musicians, and recording foley; another third composing drafts in Reaper; and the last third in Wwise, building the playback system.

Approach

The main objective was to make the soundtrack inseparable from the actors’ performance. We also wanted to support the actors without making them worry about synchronization with a complex soundtrack. The soundtrack had to follow the actors’ own pacing during rehearsals while acting as a coating: structuring, pacing, creating ruptures and smooth transitions, and changing densities.

I made no distinction between foley, music, and field recordings when composing. I treated them as a whole, and every recorded sound was a potential component. For example, some winds in Le Léthé were designed by manipulating silk clothing and creaking bow hair on a double bass, part of the rain comes from manipulating peas in a bowl, and some of the internal body sounds were made with parquet floor creaks, nylon bags, an accordion, unusual microphone placements, and detuned instruments.

Space & Time Continuum

All scenes in Le Léthé’s original text take place in unspecified spaces (except one, which is set at a bus stop). Another ambiguous creative element is that it is unclear whether the twelve characters in the play are indeed twelve characters or just one person.

Considering the abstract nature of the text and its characters, I thought that the soundtrack could serve as an ‘anchor’ that both actors and viewers could hold on to throughout the show. Audio would act as a thirteenth character, embracing them all, sometimes acting as a physical extension of their bodies (the question of the body is central in the play), and sometimes working as a modular scenographic set.

I wanted to avoid the artificiality of having the soundtrack play during the first minute of every scene and then fade out when actors speak. Hence, there is sound throughout the play (about 1 h 15 min). It ranges from a barely audible sound support, working as a kind of ‘perfume’ that maintains the sensation of space, to competing with shouting actors at times. This lets viewers tune out the soundtrack naturally, yet if one of them wandered off into an inner reverie, the soundtrack was there to help bring them right back into the play.

So I needed a playback system that could drive smooth, complex transitions, adapt quickly during rehearsals, and keep track of dozens of simultaneous sounds, each with automatic and independent actions such as fade-ins and fade-outs, delayed triggers, filtering, volume changes, and limited or unlimited looping.

Basically, the Wwise Event system helped me systematize and encapsulate recurring audio behaviors (e.g. at this point in the text, this set of sounds stops, these start in 10 seconds with a 20-second fade-in, those others start right away, etc.) and trigger them all with a single button (an Event). This let me concentrate on more critical elements, like syncing with the actors and dynamic sound objects (RTPCs, see the second extract below).
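The idea of one button firing many independent, delayed, faded actions can be sketched as a small data model. This is a hypothetical illustration in plain Python, not the Wwise SDK or WAAPI: the `Action` and `Event` classes, the `schedule` function, and the sound and Event names are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """One step of an Event. Hypothetical model, not the Wwise SDK or WAAPI."""
    kind: str              # "play" or "stop"
    target: str            # hypothetical sound/layer name
    delay_s: float = 0.0   # wait after the button press before the action starts
    fade_s: float = 0.0    # fade-in (play) or fade-out (stop) duration


@dataclass(frozen=True)
class Event:
    name: str
    actions: tuple[Action, ...]


def schedule(event: Event, pressed_at_s: float) -> list[tuple[float, float, str, str]]:
    """Expand one button press into (start, end, kind, target) entries, sorted by time."""
    timeline = []
    for action in event.actions:
        start = pressed_at_s + action.delay_s
        timeline.append((start, start + action.fade_s, action.kind, action.target))
    return sorted(timeline)


# "At this point in the text, this set of sounds stops, these start in 10 seconds
# with a 20-second fade-in, those others start right away."
scene_change = Event(
    name="ActIII_SceneChange",  # hypothetical Event name
    actions=(
        Action("stop", "rain", fade_s=5.0),
        Action("play", "wind", delay_s=10.0, fade_s=20.0),
        Action("play", "body_low"),
    ),
)

timeline = schedule(scene_change, pressed_at_s=600.0)
for start, end, kind, target in timeline:
    print(f"{start:6.1f}s -> {end:6.1f}s  {kind:4s} {target}")
```

The operator only decides *when* to press; every delay and fade length is baked into the Event beforehand, which is what lets a single press stay reliable night after night.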

This is all very basic game audio design, but it works wonders in a theater play!

Detailed extracts of playback designs in Le Léthé

ACT V - ‘Shouts and Racket’ sequence

In this extract, each layer of sound has its own fade curve and Action delay linked to Events. Each Event corresponds to a specific section in the text.

This simple extract alone performs 32 Actions. Some play voices (with infinite looping, limited looping, or as one-shots), while others stop voices or modulate filters, each with its own delay, fade-in/out time, and curve type.

Yet during the show, there were only a few buttons to play with (seven to trigger Events, one to tweak an RTPC). If actors forgot their lines, improvised for a minute, or jumped to the next page, I was able to follow them with no audio drops. Working with Events and their hidden, associated audio logic changes everything. I am not manipulating audio; Wwise does that. I am simply following the action on stage and triggering Events.

Fade curves were defined in Wwise; the original stems contained no fades. This had at least two advantages for me. First, each stem could easily be tuned and locked in Wwise during rehearsals, so that it played back exactly the same way every time. Second, I didn’t have to worry about how fast I moved a group fader on a mixing console; I just pressed a button in Soundcaster that could have independent consequences on many sounds at once.
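To make concrete why a fade defined once in software is more repeatable than a hand-moved fader, here is a sketch of how a fade curve turns elapsed time into gain. The curve shapes are generic stand-ins assumed for illustration; Wwise offers its own named curve types, whose exact formulas may differ.

```python
import math


def curve(shape: str, x: float) -> float:
    """Map fade progress x (0 = start, 1 = end) to a gain between 0 and 1.

    Generic shapes chosen for illustration; they are not Wwise's exact curve formulas.
    """
    x = min(max(x, 0.0), 1.0)  # clamp: before the fade starts / after it ends
    if shape == "linear":
        return x
    if shape == "fast":   # rises quickly, then settles (log-like)
        return 1.0 - (1.0 - x) ** 2
    if shape == "slow":   # starts gently, then rises (exp-like)
        return x ** 2
    if shape == "s":      # gentle at both ends
        return 0.5 - 0.5 * math.cos(math.pi * x)
    raise ValueError(f"unknown curve shape: {shape!r}")


def fade_in_gain(t: float, start: float, duration: float, shape: str = "linear") -> float:
    """Gain at time t of a layer fading in from `start` over `duration` seconds."""
    if duration <= 0:
        return 1.0 if t >= start else 0.0
    return curve(shape, (t - start) / duration)


def fade_out_gain(t: float, start: float, duration: float, shape: str = "linear") -> float:
    """Gain at time t of a layer fading out from `start` over `duration` seconds."""
    return 1.0 - fade_in_gain(t, start, duration, shape)


# A 20-second fade-in that starts 10 s after a button pressed at t = 600 s.
for shape in ("linear", "fast", "slow", "s"):
    print(shape, round(fade_in_gain(620.0, 610.0, 20.0, shape), 3))
```

Because the gain depends only on the time since the button press, the same press always produces the same fade, regardless of the operator's hand.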

ACT V - Use of Real-Time Parameter Control - ‘Body’ sequence

In this sequence, actors go through varying intensities and moods. I followed them with an audio design built on a Blend Track and controlled with a MIDI knob. It allowed me to stay close to the actors’ performance and create sudden silences, crescendos, and so on.

It is simply a crossfade between different layers of sound, each with independent modulations that depend not on time but on the value of the parameter I tweak.
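A parameter-driven crossfade like this can be sketched as a set of gain curves, one per layer, evaluated at the current knob position. In Wwise these curves are drawn graphically; the layer names, the 0-100 parameter range, the breakpoints, and the MIDI CC scaling below are all assumptions chosen for illustration.

```python
from bisect import bisect_right

# Hypothetical layers of the 'Body' sequence, each with a gain curve over a 0-100
# parameter, written as (parameter value, gain) breakpoints. Adjacent curves overlap,
# so turning the knob crossfades from one layer to the next.
LAYERS = {
    "breath":    [(0.0, 1.0), (30.0, 1.0), (50.0, 0.0)],
    "heartbeat": [(30.0, 0.0), (50.0, 1.0), (70.0, 1.0), (90.0, 0.0)],
    "roar":      [(70.0, 0.0), (90.0, 1.0), (100.0, 1.0)],
}


def interp(points: list[tuple[float, float]], x: float) -> float:
    """Piecewise-linear lookup in sorted (x, y) breakpoints, held flat past either end."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)  # xs[i - 1] <= x < xs[i]
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def midi_to_param(cc_value: int) -> float:
    """Scale a 7-bit MIDI CC value (0-127) to the assumed 0-100 parameter range."""
    return cc_value / 127 * 100


def layer_gains(param: float) -> dict[str, float]:
    """Gain of every layer for one knob position; elapsed time plays no part in it."""
    return {name: interp(points, param) for name, points in LAYERS.items()}


print(layer_gains(40.0))               # halfway between breath and heartbeat
print(layer_gains(midi_to_param(0)))   # knob fully down
```

The same lookup could drive a filter cutoff instead of a gain, which is one way to read "riding a simple volume fader or filters" with an RTPC: only the curve's output units change.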

Other examples of the use of RTPCs in Le Léthé include managing foley textures in a love scene and playing with the intensity of a fire. I used RTPCs to drive complex behavior as well as to ride a simple volume fader or filters on certain individual sounds or groups.

ACT III - Ambiances

Le Léthé contains several sequences with long looping ambiances. For the shorter sequences, each layer has a single simple loop. For the longer ones, I used Random Containers.

I wanted to minimize the sensation of repetition and audio patterns for the audience. To that end, I designed non-repetitive ambiances in Wwise by breaking each layer of the ambiance into smaller segments, which Wwise then picked at random. Again, this is classic game audio practice, and it works flawlessly for theater.
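The benefit of random segment selection comes from never letting the same piece come back too soon. Wwise’s Random Container exposes options along these lines; the sketch below is a generic illustration of the idea, not its actual algorithm, and the segment names and the `avoid_last` window are assumptions.

```python
import random
from collections import deque
from typing import Optional


class SegmentPicker:
    """Pick ambience segments at random, never reusing one of the last `avoid_last` picks.

    A generic sketch of the idea; not the Random Container's actual algorithm.
    """

    def __init__(self, segments: list[str], avoid_last: int = 2, seed: Optional[int] = None):
        if len(set(segments)) != len(segments):
            raise ValueError("segment names must be unique")
        if not 0 <= avoid_last < len(segments):
            raise ValueError("avoid_last must be smaller than the number of segments")
        self.segments = list(segments)
        self.recent = deque(maxlen=avoid_last)  # most recent picks, oldest dropped first
        self.rng = random.Random(seed)

    def pick(self) -> str:
        """Choose the next segment among those not played recently."""
        candidates = [s for s in self.segments if s not in self.recent]
        choice = self.rng.choice(candidates)
        self.recent.append(choice)
        return choice


# Hypothetical wind layer cut into five segments.
wind = SegmentPicker(["wind_a", "wind_b", "wind_c", "wind_d", "wind_e"], avoid_last=2, seed=7)
sequence = [wind.pick() for _ in range(12)]
print(sequence)
```

A larger `avoid_last` makes the sequence feel less repetitive but also more predictable; with five segments and a window of two, three candidates remain at every step.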

The command post: Soundcaster

During the show, I only worked with the Soundcaster Session interface. Its simplicity really helped me concentrate on the action on stage. Soundcaster is so user-friendly that it makes for a stress-free performance for whoever operates it. Someone with little to no Wwise experience can take care of the audio during a show; it is unlikely to overwhelm them, and there are few wrong keys to hit.

As a result, I can craft a complex soundtrack without necessarily touring with the theater company, since operating it can easily be handed over to someone else. The handover can range from a simple list of sync points in the text with their associated Events to greater creative freedom with RTPCs to tweak. It is up to the sound designer to decide how much room for interpretation, tweakability, and adaptability they want during performances.
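The simplest form of that handover, a list of sync points with their associated Events, can be sketched as a cue sheet that also lets the operator resync when actors jump ahead. The `Cue` and `CueSheet` classes and the Event names are hypothetical; in practice the trigger itself would be a button press in the Soundcaster session, which this sketch only records.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Cue:
    number: int
    sync_point: str   # the moment in the text the operator listens for
    event: str        # Event to trigger (hypothetical names)


@dataclass
class CueSheet:
    cues: list[Cue]
    position: int = 0
    fired: list[str] = field(default_factory=list)  # log of triggered Events

    def go(self) -> Optional[str]:
        """Fire the next cue's Event and advance; None once the sheet is finished.

        Only the Event name is recorded here; in a real setup this is where the
        operator would trigger the Event in the Soundcaster session.
        """
        if self.position >= len(self.cues):
            return None
        cue = self.cues[self.position]
        self.fired.append(cue.event)
        self.position += 1
        return cue.event

    def jump_to(self, number: int) -> None:
        """Resync when actors skip ahead: the next go() fires cue `number`."""
        for i, cue in enumerate(self.cues):
            if cue.number == number:
                self.position = i
                return
        raise KeyError(f"no cue numbered {number}")


sheet = CueSheet([
    Cue(1, "[script line for cue 1]", "ActV_Shouts_01"),
    Cue(2, "[script line for cue 2]", "ActV_Shouts_02"),
    Cue(3, "[script line for cue 3]", "ActV_Shouts_03"),
])

first = sheet.go()
sheet.jump_to(3)      # the actors skipped the passage for cue 2
third = sheet.go()
done = sheet.go()
print(first, third, done, sheet.fired)
```

Because each Event carries its own fades and delays, skipping a cue does not leave sounds hanging in a half-finished state the way skipping a manual fader move might.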

Conclusion

While the use of Wwise requires a significant amount of time to design the playback system and to make sure it is bulletproof and flawless during shows, its environment and tools (Soundcaster sessions, Random Containers, Event system, RTPCs, States, Switches, music hierarchy...) have enabled me to craft a soundtrack that the actors felt confident in. They knew it was reliable and would adapt to their pacing, and not the other way around.

For example, during rehearsals some actors took twice as long to complete a scene as I had expected, simply because they knew the audio system would follow them no matter how fast or slow they spoke.

I believe that tools like Wwise could change and simplify the way we approach live theater sound through interactive workflows. They enable sound designers to create adaptive soundscapes and free actors from rigid synchronization.
