Read the next blog in this series: Reviewing the immersive potential of classic reverberation methods

This is an introductory article on immersive reverberation. We are preparing a series of articles on the subject for the coming months. Today, we would like to briefly introduce the topic and extend an invitation to a conference talk we will be presenting at the Montreal International Game Summit on November 15, 2016.

The challenges of immersive reverberation in VR

Artificial reverberation is one of the most commonly used audio effects today. It was originally developed to give sound designers creative control over the aesthetic sense of space during sound reproduction. Since the 1930s, many different reverberation techniques have been created. These range from echo chambers, which use loudspeakers and microphones to play back and record sounds in physical spaces, to more portable approaches such as electronic devices that can efficiently copy a signal to create a high density of repetitions. More recently, new methods have emerged that can approximate the physical properties of sound propagation and provide more realistic effects.

Over the years, reverberation techniques have continually evolved to adapt to the specific needs of the various forms of media they were used in. Movies usually use subtle effects that favor speech intelligibility and blend well with on-set recordings, while music productions use a large variety of creative options ranging from a single tap delay to dense ethereal reverberation. Until recently, video games have mostly borrowed reverberation techniques from classical linear media. However, complex spatial panning algorithms such as binaural and ambisonics are now at the forefront of a push towards greater immersion in a way that has not been encountered in other media forms. Therefore, the time has come to rethink the existing reverberation algorithms and create new ones specific to the interactive and immersive needs of these new media forms.

Reverberation in real life

When a sound vibration is emitted in a room, the energy gets propagated in all directions through different mediums, such as air and objects. The energy gets reflected on surrounding materials and eventually fades away as it keeps traveling, bouncing, and scattering. Throughout this cycle, the energy will find its way back to a listener and create a succession of echoes we call reverberation. The types of materials in a room will influence the frequency response of the reverberation. The shape of the room, including the walls and furniture, along with their textures, will determine the direction of those echoes through reflections, diffraction, and scattering. Together, they create amazingly rich and complex phenomena that can inform a listener about the shape and nature of an acoustic space.

Classic artificial reverberation

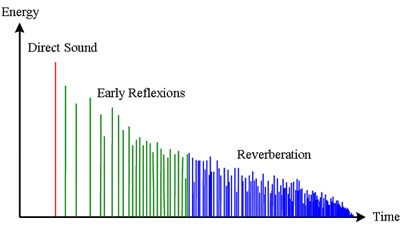

Because real reverberation is so complex, it has traditionally been simulated by greatly simplifying it. In a classic artificial reverberation algorithm, sound propagation is recreated by repeating the original signal at an exponentially increasing rate while altering the frequency response over time. The spatial aspect of the propagation is entirely lost in this process. Since the output of a reverberator is static given a fixed set of parameters, we can illustrate it through an impulse response (IR). An IR is a way to store, visualize, and reproduce the echo density pattern and amplitude. Over time, the density of impulses increases exponentially while the amplitude decays (see the accompanying graphic).
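To make the idea of a static output concrete, here is a minimal sketch of how an IR can be applied to a dry signal through convolution. The `convolve` helper and the `TOY_IR` values are made up for illustration and do not come from any particular reverberator; the toy IR simply has echoes that get closer together while getting quieter.

```python
def convolve(dry, impulse_response):
    """Direct-form convolution: every input sample triggers a scaled copy of the IR."""
    wet = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, sample in enumerate(dry):
        for j, tap in enumerate(impulse_response):
            wet[i + j] += sample * tap
    return wet


# Hypothetical toy IR (made-up values): the gaps between echoes shrink
# while their amplitude decays.
TOY_IR = [1.0, 0.0, 0.0, 0.0, 0.6, 0.0, 0.0, 0.4, 0.0, 0.3, 0.25, 0.2]

# Feeding a single impulse through the process returns the IR itself.
print(convolve([1.0], TOY_IR) == TOY_IR)

# The same dry signal always produces the same wet signal, wherever the
# sound is supposed to be located in the room.
dry = [1.0, 0.0, 0.5]
print(convolve(dry, TOY_IR) == convolve(dry, TOY_IR))
```

Nothing in this process knows where the emitter or the listener is, which is why the result carries no positional information.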

On multi-channel systems, each output channel will have its own impulse response. In this case, the goal is not to provide accurate spatial cues, but rather to produce signals that will not interfere with each other when they come out of different speakers. In fact, most reverberation algorithms treat spaces as spatially diffuse. This means echoes are expected to come from all directions fairly equally. This simplification dates back to the early days of research on the topic, when the main goal was to reproduce concert hall acoustics on mono or stereo speaker systems. Classical music concert halls are usually built with the objective of maximizing the spatial diffusion of reverberating sounds. Although results vary, the aim is a good listening experience from every seat.

http://acoustics.org/pressroom/httpdocs/152nd/behler.html

The importance of early reflections

As previously stated, most reverberators have a fixed output behavior: routing the same sound through them twice will produce the same output. In modern applications, they can be tuned and tweaked with parameters such as pre-delay, but not in a way that scales to account for each individual sound coming from a different position. Imagine having to set up a separate instance of an insert effect for each sound and using real-time parameter control to change its parameters; the number of reverb instances would quickly become prohibitive for the CPU. In practice, therefore, the effect remains the same no matter where the sound is emitted in the room. In real life, however, a sound emitted from the center of a room will be perceived differently from a sound emitted close to a corner. This is due to the time it takes sound to travel different distances. The perceived proximity between a sound and its first few reflections, called early reflections, is an important cue that our brain unconsciously uses to interpret our surroundings. Considering that even the time difference between a sound reaching one ear and then the other is a crucial cue in binaural panning, dynamic delay times should be an essential component of any spatial reverberation.

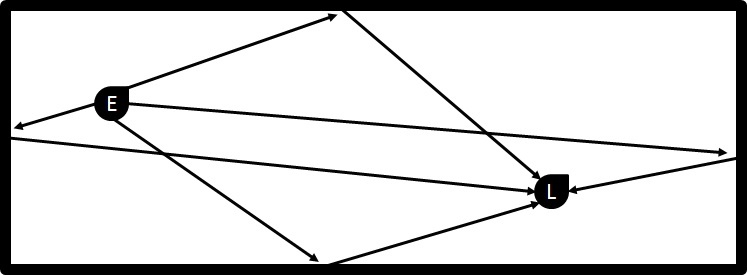

The accompanying graphic illustrates the early reflection pattern of a simple two-dimensional room. Any movement of either the emitter or the listener will change the early reflection paths and, therefore, their respective lengths. For a sense of scale, in a small 5 by 10 meter room (16' x 32'), a sound will take approximately 32.8 milliseconds to travel between the two furthest corners, but only 14.7 milliseconds to travel between the two closest ones. Ideally, the reverberation should be able to dynamically interpolate between early reflection patterns for each moving source and listener.

Two-dimensional early reflection pattern from emitter (E) to listener (L) at every wall.
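As a rough illustration of how these path lengths could be computed, the sketch below mirrors the emitter across each wall of a rectangular room (a standard image-source construction, used here as an assumption rather than as the method behind the figure). The function names, the wall labels, the room axes, and the speed of sound of 340 m/s are all choices made for this example; 340 m/s was picked because it roughly matches the travel times quoted above.

```python
import math

SPEED_OF_SOUND = 340.0  # m/s, assumed value for this example


def travel_time_ms(distance_m):
    """Time in milliseconds for sound to cover a distance in meters."""
    return distance_m / SPEED_OF_SOUND * 1000.0


def first_order_path_lengths(emitter, listener, width, depth):
    """Path length of the single-bounce reflection off each wall.

    The room spans x in [0, width] and y in [0, depth]. Mirroring the
    emitter across a wall gives an image whose straight-line distance to
    the listener equals the length of the reflected path.
    """
    ex, ey = emitter
    lx, ly = listener
    images = {
        "left": (-ex, ey),
        "right": (2 * width - ex, ey),
        "bottom": (ex, -ey),
        "top": (ex, 2 * depth - ey),
    }
    return {wall: math.hypot(lx - ix, ly - iy) for wall, (ix, iy) in images.items()}


# Corner-to-corner travel times in a 10 m by 5 m room.
print(round(travel_time_ms(math.hypot(10.0, 5.0)), 1))
print(round(travel_time_ms(5.0), 1))

# Reflection delays for one hypothetical emitter/listener placement.
paths = first_order_path_lengths((2.0, 2.5), (8.0, 2.5), width=10.0, depth=5.0)
for wall, length in paths.items():
    print(wall, round(travel_time_ms(length), 1), "ms")
```

With these assumptions the two corner-to-corner times come out at about 32.9 ms and 14.7 ms. Calling `first_order_path_lengths` again after moving either position yields a different set of delays, which is the behavior a fixed reverberator cannot follow.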

The spatial limitations of artificial reverberation

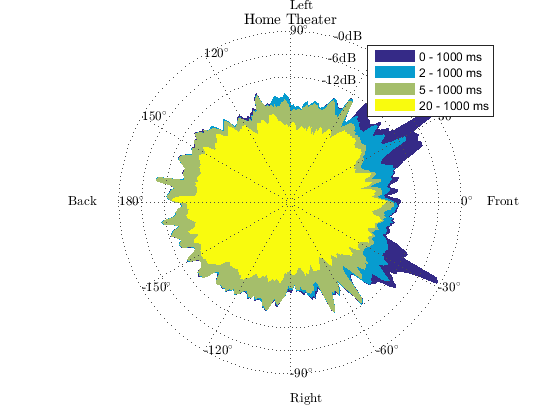

The accompanying graphic shows the spatial properties of real-life acoustics. It was recorded in a simple living room, and we can see that the sound field is not fully diffuse. The representation was produced with the Spatial Decomposition Method, a technique developed at Aalto University that shows the spatial behavior of reverberation in a space. The distance from the center represents the amplitude of sound arriving from a specific angle. The colors show the progression of the sound energy over time. Although reasonably simple in this example, the spatial pattern quickly becomes much more complex in a typical video game setting. For instance, standing at the corner of a long corridor, we would likely see some energy arriving from the opposite side at a later time. Evidently, the reverberation is not well diffused in many of the scenarios we encounter every day while developing virtual worlds.

https://se.mathworks.com/matlabcentral/fileexchange/56663-sdm-toolbox

Positioning reflections in virtual reality

While classic reverberation was mostly created for mono or stereo output and ignores the actual spatial properties of sound propagation, new panning algorithms convey much more complex spatial depth. In binaural panning, the angle between a sound and the listener’s ears determines which head-related transfer function (HRTF) filter is used to simulate how the shape of the listener’s head influences both the time of arrival and the frequency response. Consequently, reverberation should also convey a sense of directionality that can adapt to more complex geometry and vary with the source and listener locations.
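To give a sense of what directionality means in practice, the sketch below computes the angle at which a sound appears to arrive relative to the direction the listener is facing. It is only an illustration: the function name, the two-dimensional top-down coordinates, and the convention that positive angles are counterclockwise (to the listener's left) are assumptions, and the step of choosing an actual HRTF filter from that angle is left out. For a wall reflection, the apparent source is assumed to be the emitter mirrored across that wall.

```python
import math


def arrival_azimuth_deg(listener, heading_deg, apparent_source):
    """Angle of arrival relative to the listener's heading, in [-180, 180).

    0 means straight ahead. Positive values are counterclockwise when the
    scene is viewed from above, which is the listener's left.
    """
    lx, ly = listener
    sx, sy = apparent_source
    world_angle = math.degrees(math.atan2(sy - ly, sx - lx))
    relative = world_angle - heading_deg
    return (relative + 180.0) % 360.0 - 180.0


listener = (8.0, 2.5)
heading = 180.0  # facing the negative x direction, towards the emitter

# Direct sound from an emitter at (2.0, 2.5): straight ahead.
print(round(arrival_azimuth_deg(listener, heading, (2.0, 2.5)), 1))

# Reflection off a wall at y = 5.0: the emitter mirrored to (2.0, 7.5)
# appears off to the listener's right (negative angle).
print(round(arrival_azimuth_deg(listener, heading, (2.0, 7.5)), 1))
```

Turning the listener or moving either position changes these angles, so a reverberation that conveys directionality would need to update them continuously.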

Conclusion

Until recently, there hasn’t been much motivation to evolve reverberation algorithms into spatial and immersive tools. A tunable but static output is largely sufficient for regular linear media. Now that we are entering a new media era with virtual and mixed reality platforms, we need to rethink these algorithms. The two main challenges for immersive reverberation are supporting dynamic reflection times and conveying spatial cues, so that the effect can adapt to changing positions and room geometry. During the upcoming conference talk and in the coming articles, we will dig deeper into emerging reverberation algorithms to see how these challenges are being tackled and what it means for future spatial sound design.


Photography credits: Bernard Rodrigue - 'Audiokinetic spatial audio team' image

Comments

Terry Shultz

November 08, 2016 at 03:56 pm

Good presentation, would love to hear more write-ups.

Tom Todia

November 13, 2016 at 11:49 am

This is a really important topic, thank you for writing about it! We are currently working on a few VR titles with WWise and AURO. How to use real time Reverb is right now one of my biggest questions. There is a "Reverb" setting in the AURO headphone plugin, but how do you handle Reverb as you move from one environment to another? Right now I am running most scenarios as "Dry" and using the AURO Reverb to create a more natural and neutral space in the Binaural decoding, but not in the way I would normally implement Reverb presets. Has anyone been able to go deeper than that with this pipeline so far?