Introduction

Impacter is a new impact modeling plug-in prototype for Wwise (read this article for a first look). In what follows, I cover how Impacter was used to implement and integrate sounds in a demo game environment created with Unreal Engine. I begin with a brief outline of Impacter's key features before going into detail about how it can be used in the context of Unreal, focusing mainly on how the physics system was used to drive Impacter's mass and velocity parameters. Other game engines are available, and the takeaways here apply regardless of your main tools.

Impacter

Impacter is a source plug-in that works by cross-synthesizing components of different sounds and applying physically-informed transformations to these sounds. For further details on Impacter, you can read this blog written by Ryan Done or take a look at the documentation, but here's a quick rundown of the main features:

Cross Synthesis

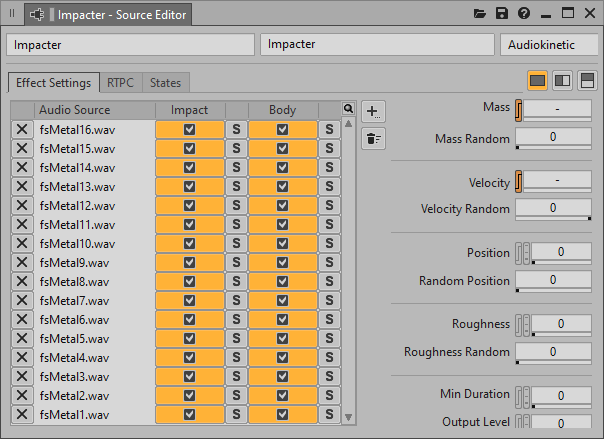

- Multiple sounds can be loaded into Impacter. These are analyzed internally and split into two components: the 'impact' and the 'body'. The impact refers to the initial collision when an object is struck. The body refers to the resonance of the object after the initial strike.

- Impact and body components can be mixed and matched. Impacter randomly chooses among the available impact and body components on each playback.

Physically-Informed Parameters

- Mass refers to the size of the impacted object.

- Velocity refers to the force with which an object is impacted.

- Position refers to the position at which the object is struck. This parameter is inspired by the phenomenon of acoustic modes. Varying the position will subtly vary the resonance of the sound.

- Roughness refers to aural roughness and is implemented by adding frequency modulation (FM) to the resonance of the sound.

The Impacter Unreal Demo

The Impacter Unreal Demo was developed as a way to test out and demonstrate different uses of Impacter in a (simple) game environment. In the following sections, I outline some of these use cases and discuss how Impacter can be effectively driven by the game physics.

Hitting the Ground Running (Footsteps)

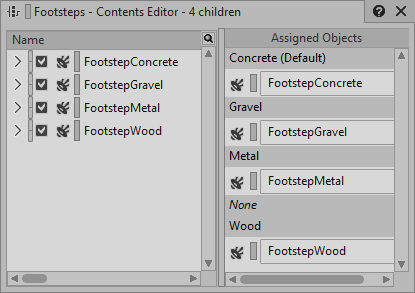

The setup for footsteps in the demo is fairly standard. In Wwise there is a switch container containing footstep sounds for the various ground materials, as well as a State Group ('FootstepMaterial') that controls this switch container. The ground surfaces in the game set the FootstepMaterial state in Wwise.

Since each footstep SFX contains an instance of Impacter, we are afforded further bang for our buck - that is, further variation from our audio samples - through the use of cross synthesis. Nonetheless, so far it's a typical footsteps scenario.

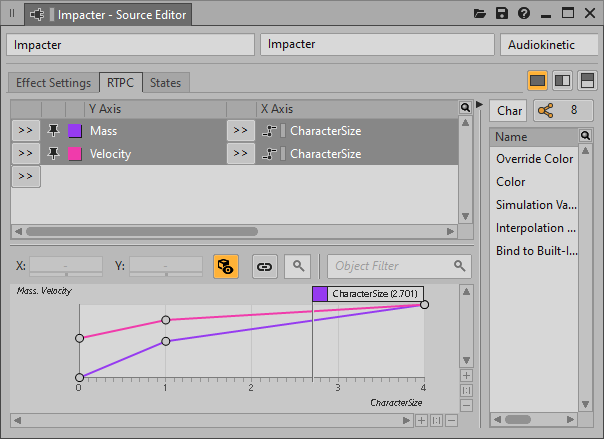

Things get more interesting when we consider the use of the mass and velocity parameters. These can be used to make our footstep sounds adapt to different character sizes. There is an additional RTPC - CharacterSize - that is used to drive both the velocity and the mass of each of the footstep sounds. In Unreal, the CharacterSize RTPC is set using the size of the character object. The size of the character object also inversely affects the character speed (and run animation). This simulates the effect of transitioning from a huge, lumbering character to a tiny, nimble character. By mapping the CharacterSize RTPC to velocity and mass in Impacter, we can easily reflect this in the sound of the footsteps.
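The relationship between character size, speed, and the CharacterSize RTPC can be sketched as follows. This is a minimal Python illustration, not the demo's Blueprint logic: the function name, the base speed, the size range, and the 0 to 100 RTPC range are all hypothetical, and the curves that map the RTPC to mass and velocity are authored in Wwise.

```python
def character_params(size, base_speed=600.0, min_size=0.25, max_size=4.0):
    """Return (move_speed, character_size_rtpc) for a given character scale.

    All constants are illustrative assumptions, not values from the demo.
    """
    # Clamp the scale to the supported range.
    size = max(min_size, min(max_size, size))
    # Speed is inversely related to size: bigger characters move more slowly.
    move_speed = base_speed / size
    # Normalise size to 0..100 for a hypothetical CharacterSize RTPC range.
    rtpc = 100.0 * (size - min_size) / (max_size - min_size)
    return move_speed, rtpc
```

A single value then drives both the gameplay (speed, animation rate) and the sound (mass and velocity via the RTPC), which keeps the two in step as the character changes size.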

When world objects collide

Each of the objects and surfaces in the environment has a corresponding Wwise event that triggers one or more instances of Impacter. Each instance of Impacter has velocity and mass hooked up to RTPCs ('ImpactVelocity' and 'ImpactMass'), which are driven by the game when collisions occur.

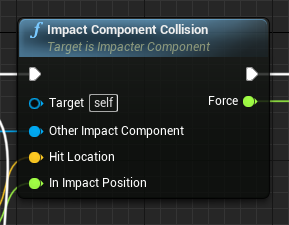

While creating the Unreal demo and integrating Impacter, one of the most important tasks was querying the Unreal physics system during collisions to provide sensible values for the mass and velocity parameters, such that the Impacter sounds respond naturally. This is implemented in ImpacterComponent, a custom SceneComponent Blueprint. Each Actor Blueprint class in the game has an ImpacterComponent (some have multiple). When a collision occurs between two objects, their ImpacterComponents call ImpactComponentCollision. This function calculates the ImpactVelocity and ImpactMass RTPC values and sends them to Wwise before the Impacter events are triggered on each object.

It is useful at this point to define some terminology. A static object is an object that never moves, as opposed to a dynamic object that can move around the world. When two objects collide, two collision events are triggered in Unreal - one for each object's ImpacterComponent.

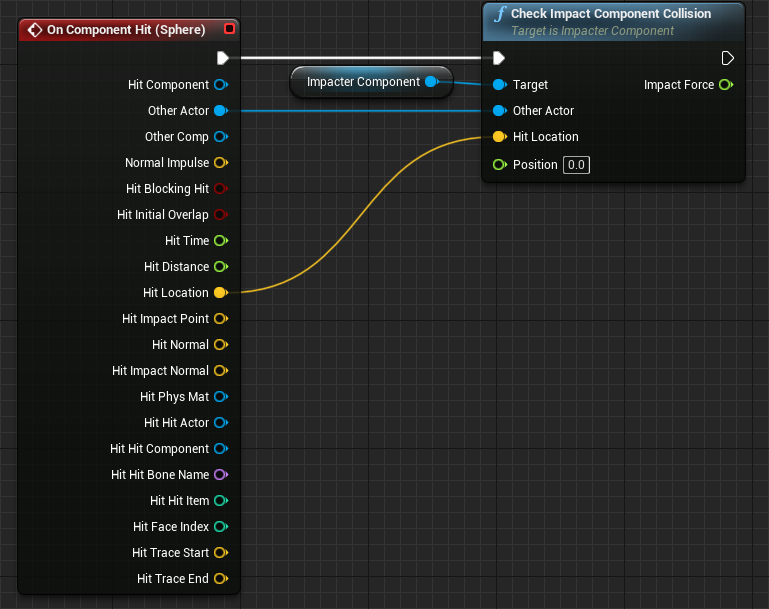

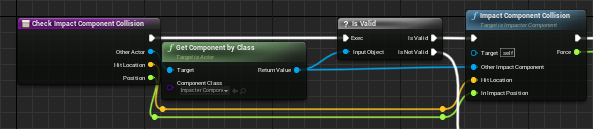

Each object handles collisions in this way. The On Component Hit event calls CheckImpactComponentCollision on the ImpactComponent. CheckImpactComponentCollision checks whether the 'Other Actor' has an ImpactComponent. If one is found, ImpactComponentCollision is called.
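The call chain described above can be sketched in Python. The demo implements this in Blueprints, so the classes below are hypothetical stand-ins that only mirror the order of calls: hit event, check for a component on the other actor, then the collision handler.

```python
class ImpactComponent:
    """Hypothetical stand-in for the Blueprint component."""

    def __init__(self, owner_name):
        self.owner_name = owner_name
        self.collisions = []  # names of the 'other' objects we reacted to

    def impact_component_collision(self, other_component):
        # In the demo, this is where the RTPCs are computed and the
        # Wwise event is posted. Here we only record the collision.
        self.collisions.append(other_component.owner_name)

    def check_impact_component_collision(self, other_actor):
        # Only react if the other actor also carries an ImpactComponent.
        other = getattr(other_actor, "impact_component", None)
        if other is None:
            return False
        self.impact_component_collision(other)
        return True


class Actor:
    """Hypothetical actor that may or may not own an ImpactComponent."""

    def __init__(self, name, has_component=True):
        self.name = name
        self.impact_component = ImpactComponent(name) if has_component else None

    def on_component_hit(self, other_actor):
        # Mirrors the On Component Hit event forwarding to the component.
        if self.impact_component is None:
            return False
        return self.impact_component.check_impact_component_collision(other_actor)
```

Because the engine raises one hit event per object, both `projectile.on_component_hit(wall)` and `wall.on_component_hit(projectile)` run for a single collision, each from its own point of view.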

To clarify this point, let's take the specific example of a projectile colliding with a wall. From the perspective of the wall, the impacting object (the 'other' object) is the projectile. From the perspective of the projectile, the impacting object (the 'other' object) is the wall. It may seem counter-intuitive to think of the wall impacting the projectile, as well as the projectile impacting the wall, but it is useful to keep these two contexts in mind during the remainder of this section. The key idea is that each object triggers its corresponding Wwise event, and it uses both its own physical characteristics as well as the characteristics of the other object when calculating the ImpactVelocity and ImpactMass RTPCs.

Since ImpacterComponent is used across all objects in the game, the goal here is to implement physically plausible and generalisable mappings from collision physics to the RTPC values. This way, the physical characteristics of the objects will be sufficient to drive the sounds, without any further manual tweaking required on the part of the sound designer. Level designers can design, place, and alter objects with assurance that the sounds will respond appropriately, so long as the sound designer has adjusted the velocity and mass curves sensibly.

With that in mind, let's look at how ImpactComponent calculates the RTPC values during collision events, using the ImpactComponentCollision function.

Mass and Velocity

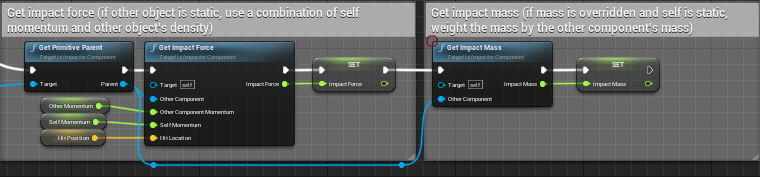

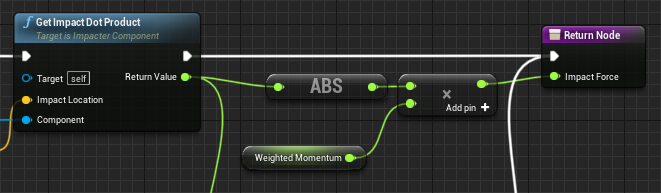

The ImpactMass and ImpactVelocity RTPC values are calculated in GetImpactMass and GetImpactForce, respectively. These are both called from ImpactComponentCollision.

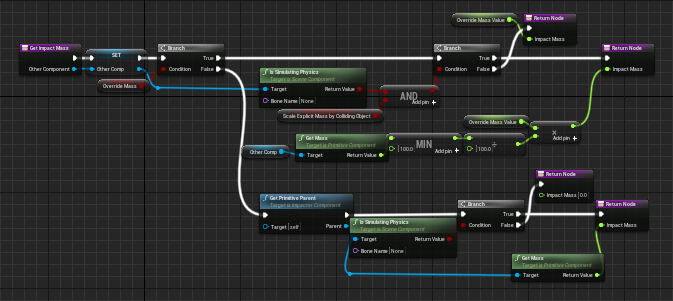

Mass (GetImpactMass)

Mass is taken directly from the Unreal physics system for dynamic objects. (Note: we need to set up Physical Materials for our objects with appropriate density values so that they have appropriate mass.) Static objects do not simulate physics, so they have no mass value; they are instead given an explicit one. For walls and floors, this explicit mass is weighted by the mass of the impacting object when the mass RTPC value is set during a collision.
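A rough Python sketch of this logic follows. The article does not spell out how the wall and floor mass is weighted, so the linear weight against a `reference_mass` below is purely an assumption for illustration, as are the function signature and parameter names.

```python
def get_impact_mass(simulates_physics, physics_mass=0.0, explicit_mass=0.0,
                    other_mass=None, reference_mass=100.0):
    """Return the value to send to the ImpactMass RTPC.

    The weighting formula is an assumption; the demo's exact
    weighting is not described in the text.
    """
    if simulates_physics:
        # Dynamic object: use the mass reported by the physics system.
        return physics_mass
    if other_mass is None:
        # Static object with no weighting: use its explicit mass as-is.
        return explicit_mass
    # Wall or floor: scale the explicit mass by how heavy the impacting
    # object is, relative to a hypothetical reference mass (clamped to 0..1).
    weight = min(1.0, max(0.0, other_mass / reference_mass))
    return explicit_mass * weight
```

With this kind of weighting, a pebble hitting a wall excites far less of the wall's 'mass' than a crate does, even though the wall itself is the same object.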

Velocity (GetImpactForce)

We could map the velocity of an object directly to the velocity parameter in Impacter. However, this would cause unnaturally loud impacts for small, light objects. For example, a small soft ball impacting a metal surface with sufficient speed could cause that surface to trigger a loud impact sound. For this reason, it is better to map the force of the impact to the Impacter velocity parameter. We should therefore use the momentum (velocity x mass), rather than just the velocity. This way, lighter objects will produce lower ImpactVelocity RTPC values, and therefore 'softer' impact sounds. We also need to consider the momentum of both objects when calculating the ImpactVelocity RTPC value for each. Finally, we can also introduce the density directly into our mapping such that softer objects produce softer impacts.

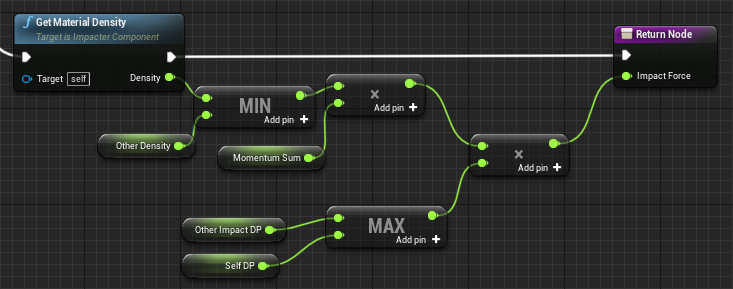

As is the case for mass, the calculation of velocity differs slightly depending on whether the impacting object is dynamic or static. When the impacting object is static, the ImpactedByStaticObject function is used to calculate the force. When the impacting object is dynamic, ImpactedByDynamicObject is used.

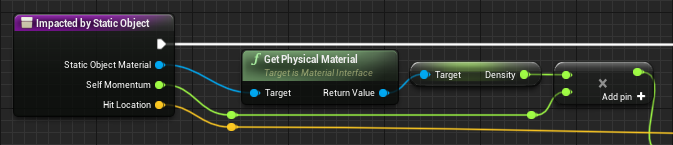

ImpactedByStaticObject

Here we take the momentum of the impacted object and multiply it by the density of the impacting object to get the ImpactVelocity RTPC value. Remember, the impacting object will be a wall or a floor in this case.
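As a minimal sketch of that calculation in Python (the demo uses a Blueprint function; the parameter names and the use of scalar speed are assumptions):

```python
def impacted_by_static_object(impacted_mass, impacted_speed, impacting_density):
    """Force value for a dynamic object hitting a static wall or floor.

    impacted_speed is the magnitude of the impacted object's velocity.
    """
    # Momentum of the moving (impacted) object.
    momentum = impacted_mass * impacted_speed
    # Weight by the density of the static surface it hit.
    return momentum * impacting_density
```

The raw result would still need to be scaled or clamped into whatever range the ImpactVelocity RTPC uses in the Wwise project.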

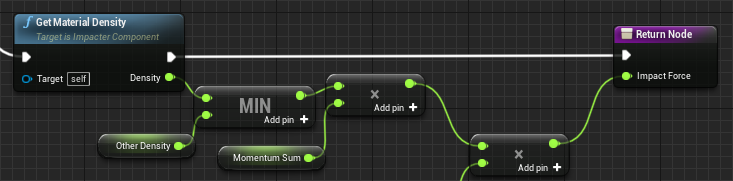

ImpactedByDynamicObject

Here we use the sum of the two objects' momenta, weighted by the smaller of their densities. Note that in this situation the impacted object could be static, in which case its momentum will be 0 and the momentum sum will be equal to the impacting object's momentum.
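A minimal Python sketch of this calculation, assuming scalar speeds and hypothetical parameter names (the demo implements it as a Blueprint function):

```python
def impacted_by_dynamic_object(impacted_mass, impacted_speed, impacted_density,
                               impacting_mass, impacting_speed, impacting_density):
    """Force value when the impacting object is dynamic.

    Speeds are velocity magnitudes; a static impacted object has speed 0.
    """
    # Sum the momentum of both objects.
    momentum_sum = (impacted_mass * impacted_speed
                    + impacting_mass * impacting_speed)
    # Weight by the softer (less dense) of the two objects.
    return momentum_sum * min(impacted_density, impacting_density)
```

Using the minimum density means a soft ball produces a soft impact no matter how dense the surface it strikes, which addresses the loud soft-ball case described earlier.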

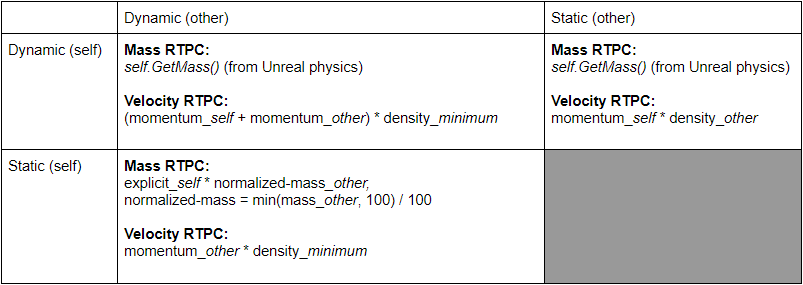

At this point it is useful to lay out the various circumstances in a collision table. Figure 1 shows how the Velocity and Mass RTPCs are calculated for different collision scenarios. Note that two static objects can never collide.

Figure 1: RTPC calculation matrix

Testing & Refining

With these mappings set up, we can fire a projectile onto a surface and test how it sounds.

It sounds awful! This is because the Unreal physics system is continuously firing collision events as the ball rolls along the floor. Since the ball rolling does not exactly contain distinct impact events, we could just disable impacts for the ground surfaces and be done with it (or maybe use a different plug-in such as SoundSeed Grain to implement rolling sounds). However, it turns out we can use the continuous collisions to our advantage and simulate the sound of something rolling as lots of small impacts - 'hit the ground rolling', as it were. To start with, we can restrict the rate at which Wwise events can be triggered by the ImpacterComponent.
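One simple way to restrict the trigger rate is a minimum period between events. The sketch below is a Python illustration with hypothetical names; the demo does this with a timer in the Blueprint.

```python
class EventRateLimiter:
    """Allows at most one trigger per `min_period` seconds."""

    def __init__(self, min_period):
        self.min_period = min_period
        self._next_allowed = 0.0  # earliest time the next event may fire

    def try_trigger(self, now):
        """Return True if an event may be posted at time `now` (seconds)."""
        if now < self._next_allowed:
            return False  # still inside the cool-down window; drop this hit
        self._next_allowed = now + self.min_period
        return True
```

Each collision callback would call `try_trigger` with the current game time and only post the Wwise event when it returns True.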

This sounds less awful, but it is quite metronomic, which can sound unnatural. To mitigate this, we can add some randomness to the period between Wwise events by adding a random offset to the period every time the timer is reset.
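A sketch of the randomised period in Python is shown here. The class name, the uniform distribution, and the parameter values are assumptions; the text only says that a random offset is added whenever the timer is reset.

```python
import random


class JitteredRateLimiter:
    """Rate limiter whose period gets a fresh random offset on each reset."""

    def __init__(self, base_period, max_offset, rng=None):
        self.base_period = base_period
        self.max_offset = max_offset
        # An injectable random source makes the behaviour reproducible in tests.
        self._rng = rng or random.Random()
        self._next_allowed = 0.0

    def try_trigger(self, now):
        """Return True if an event may be posted at time `now` (seconds)."""
        if now < self._next_allowed:
            return False
        # Reset the timer with the base period plus a random offset,
        # so consecutive events are not evenly spaced.
        offset = self._rng.uniform(0.0, self.max_offset)
        self._next_allowed = now + self.base_period + offset
        return True
```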

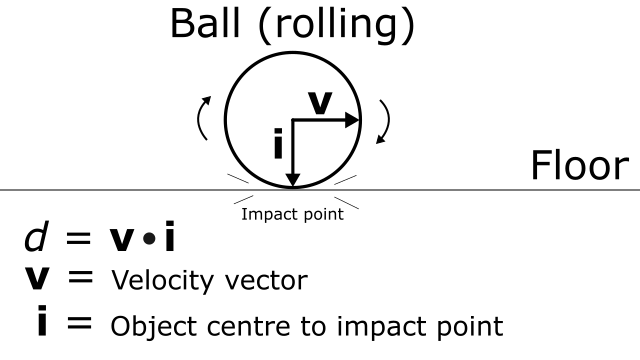

It's starting to sound better, but the repeated impacts as the ball rolls are too strong for a rolling motion. It sounds more like someone continuously hitting the floor. To get around this we can take the direction of motion into account. Specifically, we can use the dot product between the object's velocity vector and the vector from the object centre to the impact point on its surface. That's a rather wordy sentence, so I'll illustrate it with this diagram.

d = v · i, where v is the object's velocity vector and i = (hit point on surface) - (object centre).

We use d to weight the ImpactVelocity RTPC value.

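If the object is travelling directly towards the point where it impacted, d = 1 (taking v and i as normalised, i.e. unit-length, vectors). When the ball is rolling on the floor, its velocity is nearly perpendicular to i, so d is low and the RTPC value is reduced.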
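The d factor can be computed as in the Python sketch below. Normalising both vectors is what makes a head-on impact give d = 1; clamping negative values to 0 and returning 0 for zero-length vectors are assumptions of this sketch, not something stated about the demo.

```python
import math


def direction_weight(velocity, centre, hit_point):
    """Return d, the normalised dot product of the velocity and the
    vector from the object's centre to the hit point."""
    to_hit = tuple(h - c for h, c in zip(hit_point, centre))
    v_len = math.sqrt(sum(x * x for x in velocity))
    i_len = math.sqrt(sum(x * x for x in to_hit))
    if v_len == 0.0 or i_len == 0.0:
        return 0.0  # assumption: no motion or degenerate hit means no weight
    d = sum(v * i for v, i in zip(velocity, to_hit)) / (v_len * i_len)
    # Assumption: moving away from the hit point contributes nothing.
    return max(0.0, d)
```

For a ball at height 1 falling straight down onto the floor, `direction_weight((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), (0.0, 0.0, 0.0))` gives 1.0, whereas the same ball rolling along the floor with velocity `(3.0, 0.0, 0.0)` gives 0.0.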

This improves our sound integration further. We can clearly hear the difference between the ball initially bouncing on the floor and then subsequently rolling along the floor.

Note that the d factor improves our RTPC calculation in other contexts besides rolling along the floor. For example, it nicely accounts for the case when a fast-moving object just glances off the side of another object rather than hitting it head-on. For dynamic impacts (when both of the objects are moving), each object has a d value. I take the maximum of these values and use it to weight both velocity RTPC values. For static objects (floors and walls), d = 1 (maximum), so the dynamic object's d will always be used when the impacting object is static and the impacted object is dynamic.

Break Stuff

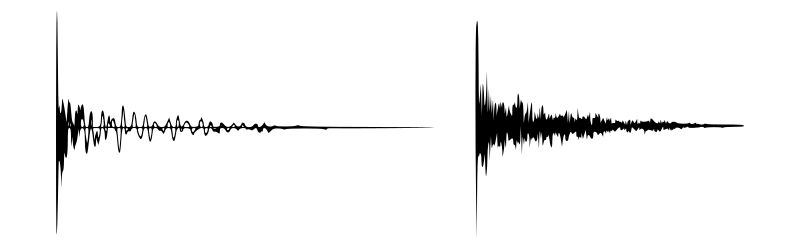

Impacter has been designed for specific types of sounds. The algorithm assumes that the amplitude envelope decays exponentially and that there is one main transient region. In other words, sounds that 'look' something like this:

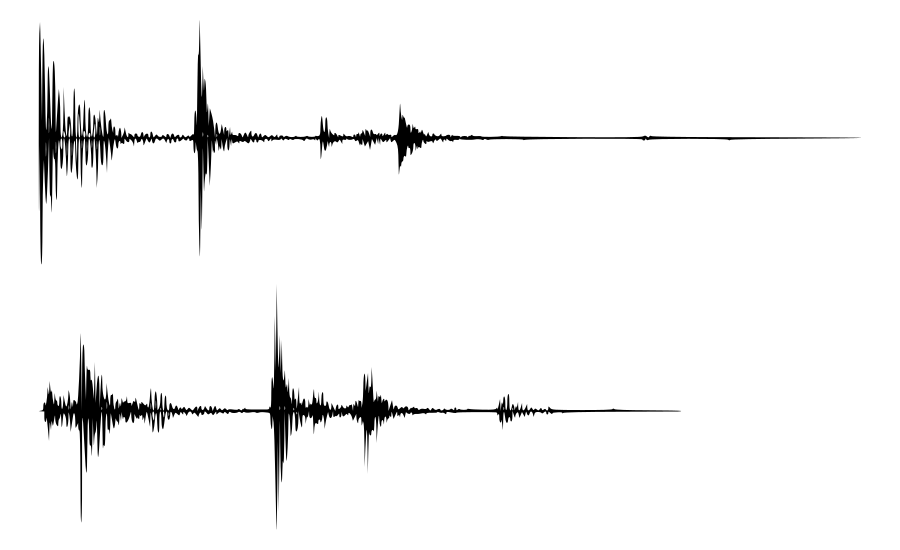

That said, it is possible to explore what happens with other types of sounds. In the Impacter Unreal demo, I made use of some 'breaking' and 'smashing' sounds that break the assumptions of the algorithm in that they contain more than one transient.

The issue here is that the amplitude envelopes vary quite noticeably from sound to sound, which opens the possibility of unnatural 'ringing' in the body component (the resonant part). The oscillator and filterbank amplitudes follow the amplitude envelope of the original sound. If they are combined with the impact component of a sound that has a clearly distinct amplitude envelope, they will sound artificial.

The inclusion checkboxes in the Impacter UI are designed for situations like this. They allow you to exclude any problematic impact/model combinations that arise when exploring cross synthesis for a set of sounds.

In Unreal, I made use of the destructible meshes to create blocks that can smash when impacted with enough force. There are Impacter instances for break sounds and, similarly to impacts, these break sounds have mass and velocity hooked up to RTPCs.

Another approach you can take when implementing these kinds of destruction sounds is to have the individual pieces trigger Wwise Impacter sounds as they break apart and impact with the environment (and each other). This way, the multiple individual impact sounds will combine together and a 'break/smash' sound will emerge as a direct result of the physics interactions that happen in the game. The breakable boxes in the Unreal demo are examples of this. Initially, I used a 'break' sound that triggered when the boxes broke.

This break sound was problematic as it had a very distinct amplitude envelope with multiple transients. As you can hear in the clip, the large box breaks and the multiple transients are heard with no corresponding physical interaction. I then decided to attach ImpacterComponents to each of the individual pieces of the box. These ImpacterComponents are initially inactive. When the box breaks, the ImpacterComponents on the individual pieces become active and start responding to collisions, triggering Wwise events. Therefore, when the box breaks, the individual impacts from the pieces naturally create the breaking/smashing sound.
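The activation pattern for the box pieces can be sketched as follows. This is a Python illustration with hypothetical class names; in the demo the pieces carry ImpacterComponents and the activation happens in Blueprints when the destructible mesh breaks.

```python
class PieceImpactComponent:
    """Hypothetical per-piece component that ignores collisions until activated."""

    def __init__(self):
        self.active = False
        self.events_posted = 0

    def on_collision(self):
        if not self.active:
            return False  # box is still intact: pieces stay silent
        # In the demo, this is where the piece would post its Wwise event.
        self.events_posted += 1
        return True


class BreakableBox:
    """Hypothetical box made of pieces whose components start inactive."""

    def __init__(self, piece_count):
        self.pieces = [PieceImpactComponent() for _ in range(piece_count)]
        self.broken = False

    def break_apart(self):
        # Activate every piece so that its collisions start producing sound.
        self.broken = True
        for piece in self.pieces:
            piece.active = True
```

The overall break sound is then just the sum of whatever piece collisions the physics simulation produces, so sound and visuals stay in sync.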

Mapping Position

For the most part, the position parameter is randomised to add further variation to the sounds throughout the Unreal demo. The position parameter varies the resonance by reducing the gain of certain filters and oscillators. Non-linear frequency-dependent gain ramps are applied to the body component of the sound. The position parameter controls the position of these internal gain ramps. In other words, varying the position will produce subtle changes to the resonance of the sound. When the position is set to 0, the sound has 'full' resonance. That is, no gain reduction is applied. The internal gain ramps gradually reduce and increase the gains of the peak frequencies as the position goes from 0 to 1.

In the Impacter demo, the large glass walls map the distance between the impact location and the wall's centre to the ImpactPosition RTPC. This is mapped inversely, such that the corners and edges will be 'most resonant' and different peak frequencies will be dampened as the impacts move towards the centre.
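The inverse mapping for the glass walls might look like the Python sketch below. The 0 to 1 RTPC range, the linear mapping, and the `max_distance` parameter (for example, the centre-to-corner distance) are assumptions made for illustration.

```python
import math


def impact_position_rtpc(hit_point, wall_centre, max_distance):
    """Map the hit's distance from the wall centre to an ImpactPosition value.

    Returns 0.0 at or beyond max_distance (edges and corners, where position 0
    gives full resonance) and 1.0 at the centre.
    """
    distance = math.dist(hit_point, wall_centre)
    normalised = min(1.0, distance / max_distance)
    # Inverse mapping: the further from the centre, the lower the position.
    return 1.0 - normalised
```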

Takeaways

When enough consideration is given to the mappings from game physics to RTPCs, Impacter can be a very useful tool for integrating impact sounds. Here are some general principles to keep in mind when integrating sounds with Impacter:

Design for scale

- Test your sounds at both ends of the Mass and Velocity parameters to ensure full expressivity when integrated with the physics of the game.

Use the force

- The Velocity parameter in Impacter should be driven by the force of the collision. This will depend on various factors including the density and momentum of both objects.

Keep changing position

- If you want to maximize variation, it's usually a good idea to randomize the Position parameter. This will cause subtle variations in the resonance of the sound, which will be especially useful for situations like bullet impacts or footsteps.

Break the rules, but keep it short

- Although designed for 'impact' sounds, it's always interesting to see what results one can get from different kinds of input. Just beware when adding particularly lengthy samples. The analysis stage is fairly quick for simple impacts, but it might take a while when analyzing longer sounds.

Stay tuned for a third blog on variations produced by cross synthesis!
