I. Introduction

The GameObject is a fundamental concept in audio design with Wwise. It is involved in the entire pipeline, from basic Wwise features (especially Profiling) to development with the Wwise SDK. In my daily work, I’ve encountered many problems caused by poor audio GameObject management, so I set out to understand how audio GameObjects should be registered and managed.

First, let's take a look at the basic concepts.

1. What are GameObjects?

I’d like to clarify the concept first.

To avoid confusion with the GameObjects in the game engine as well as the Object-Based Mixing concept, here we will use the definition of GameObject in the Wwise documentation:

To keep things consistent with the Wwise UI and documentation, the term "GameObject" in Wwise will be used throughout this article.

2. Why should we manage audio objects?

According to the definition above, all audio management in Wwise is based on the GameObject concept.

While using Wwise, no sound in-game can be played without a GameObject (whether the sound is 2D or 3D).

Poor audio GameObject management can lead to a variety of problems, from errors or failures within Wwise to issues on the game engine side during development.

3. Is audio management via GameObject unique to Wwise? What's so special about it?

Actually, audio management via GameObject (Object-based) is not unique to Wwise. There are other audio engines (or audio manager implementations) that support this approach.

- There are audio manager implementations that are purely Event-based. In this case, audio designers can only control the audio with Events.

- With Wwise, designers may control the audio with both GameObjects and Events (although the Events are not absolutely independent of the GameObjects). This brings more flexibility and expressiveness to our sound design.

Wwise Events, GameObjects & UE Components

To better understand the importance of GameObjects and how they work, let's take a simple Blueprint interface implementation as an example, and see how GameObjects are created and referenced at the code level.

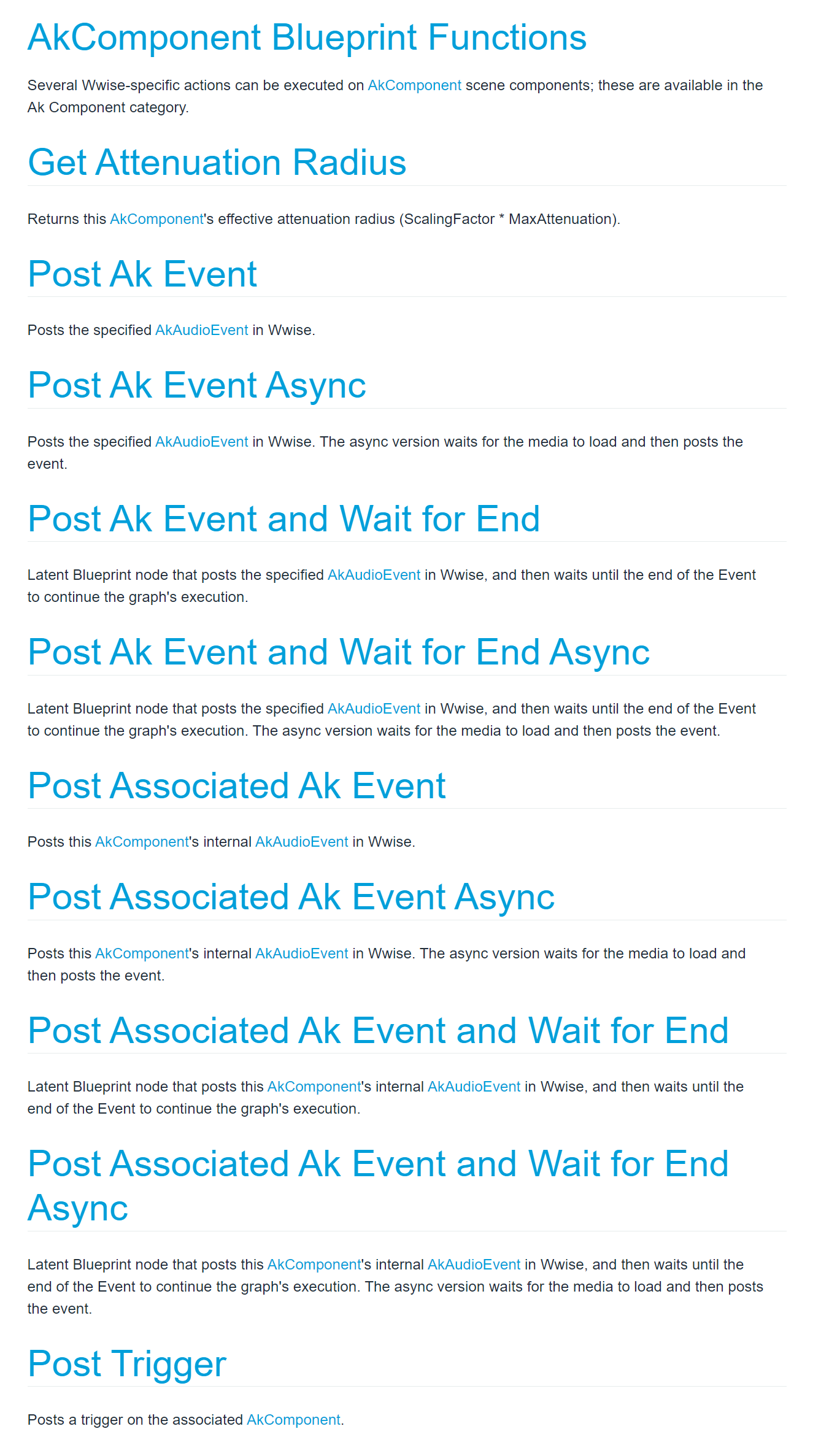

Since Audiokinetic provides a feature-rich Unreal Engine Integration set, and the usage of Wwise SDK in the Unreal Engine Integration is quite typical, here I will use the "PostEvent" Blueprint node as an example.

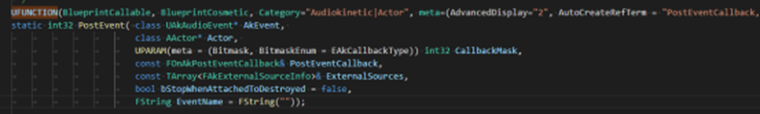

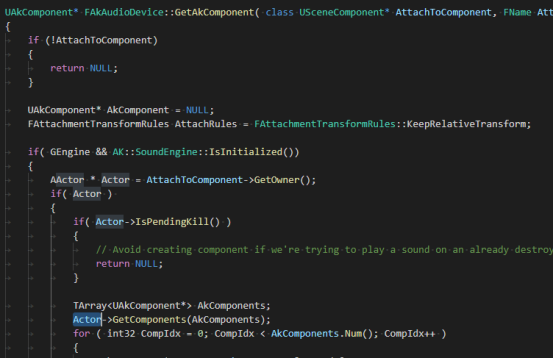

The following screenshot shows the C++ code for the "PostEvent" Blueprint node in Unreal:

The definition of the "PostEvent" Blueprint node in Unreal:

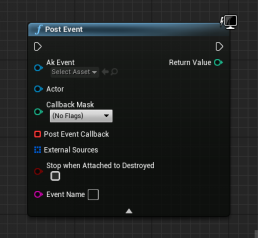

The code corresponds to the following Blueprint graph:

The "AkEvent" and "Actor" input pins on this Blueprint node must be linked to variables or specific values, otherwise an error will be reported at compilation time.

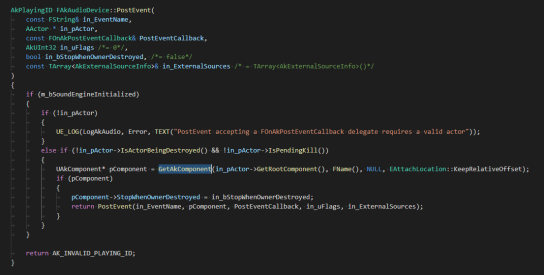

In short, here is the code logic: running the PostEvent node attaches an AkComponent to the RootComponent of the specified Actor.

The process of attaching an AkComponent starts by iterating through the descendants of the current Actor:

If there is an AkComponent, the attachment process will start immediately.

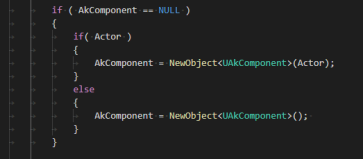

If there isn't, a new AkComponent will be created:

The AkComponent will then be attached to a GameObject within Wwise based on the attachment transform rules.

So, an AkComponent must exist each time an Actor plays sounds created with Wwise.
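The "find an existing component, otherwise create one and attach it to the root" logic described above can be sketched as follows. This is a minimal, self-contained illustration and not the actual Unreal Integration code: `Component`, `AudioComponent`, `Actor`, `GetOrCreateAudioComponent`, and `PostEventOnActor` are hypothetical stand-ins for the engine types and functions.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins for engine types; not the real Unreal or Wwise classes.
struct Component {
    virtual ~Component() = default;
    Component* parent = nullptr;  // component this one is attached to
};

struct AudioComponent : Component {
    std::vector<std::string> postedEvents;  // events targeted at this component
};

struct Actor {
    Component root;                                    // plays the role of the RootComponent
    std::vector<std::unique_ptr<Component>> children;  // components owned by the actor
};

// Return the actor's existing audio component, or create one and attach it to the root.
AudioComponent* GetOrCreateAudioComponent(Actor& actor) {
    for (auto& child : actor.children) {
        if (auto* audio = dynamic_cast<AudioComponent*>(child.get())) {
            return audio;  // reuse: one emitter carries every event of this actor
        }
    }
    auto created = std::make_unique<AudioComponent>();
    created->parent = &actor.root;
    AudioComponent* raw = created.get();
    actor.children.push_back(std::move(created));
    return raw;
}

// Post an event on an actor: the target is always the actor's audio component.
AudioComponent* PostEventOnActor(Actor& actor, const std::string& eventName) {
    assert(!eventName.empty());
    AudioComponent* audio = GetOrCreateAudioComponent(actor);
    audio->postedEvents.push_back(eventName);
    return audio;
}
```

Posting two different events on the same actor in this sketch returns the same component both times, which is the property the rest of this article relies on: all sounds of one in-game object share one emitter.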

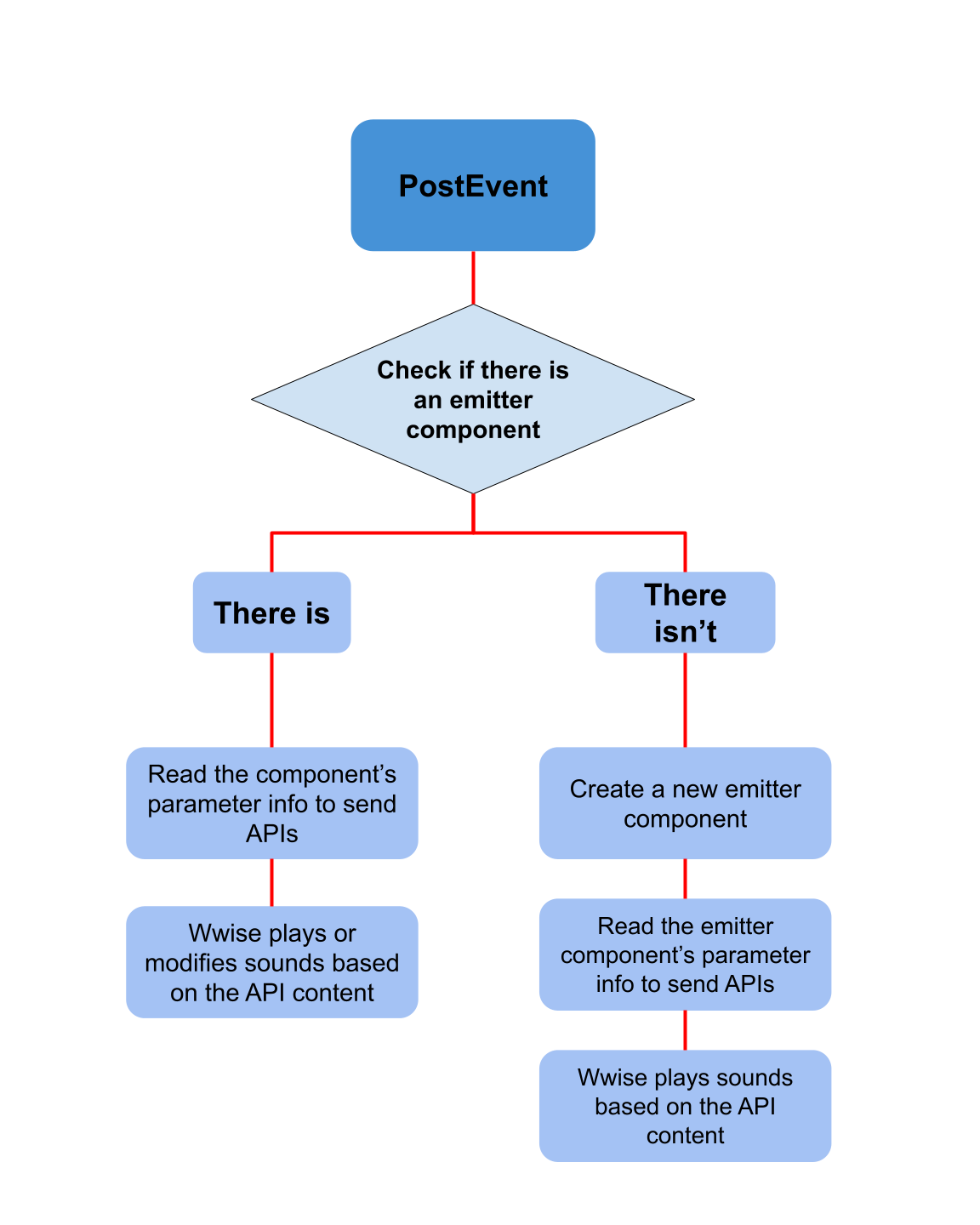

Here is how the AkComponent works:

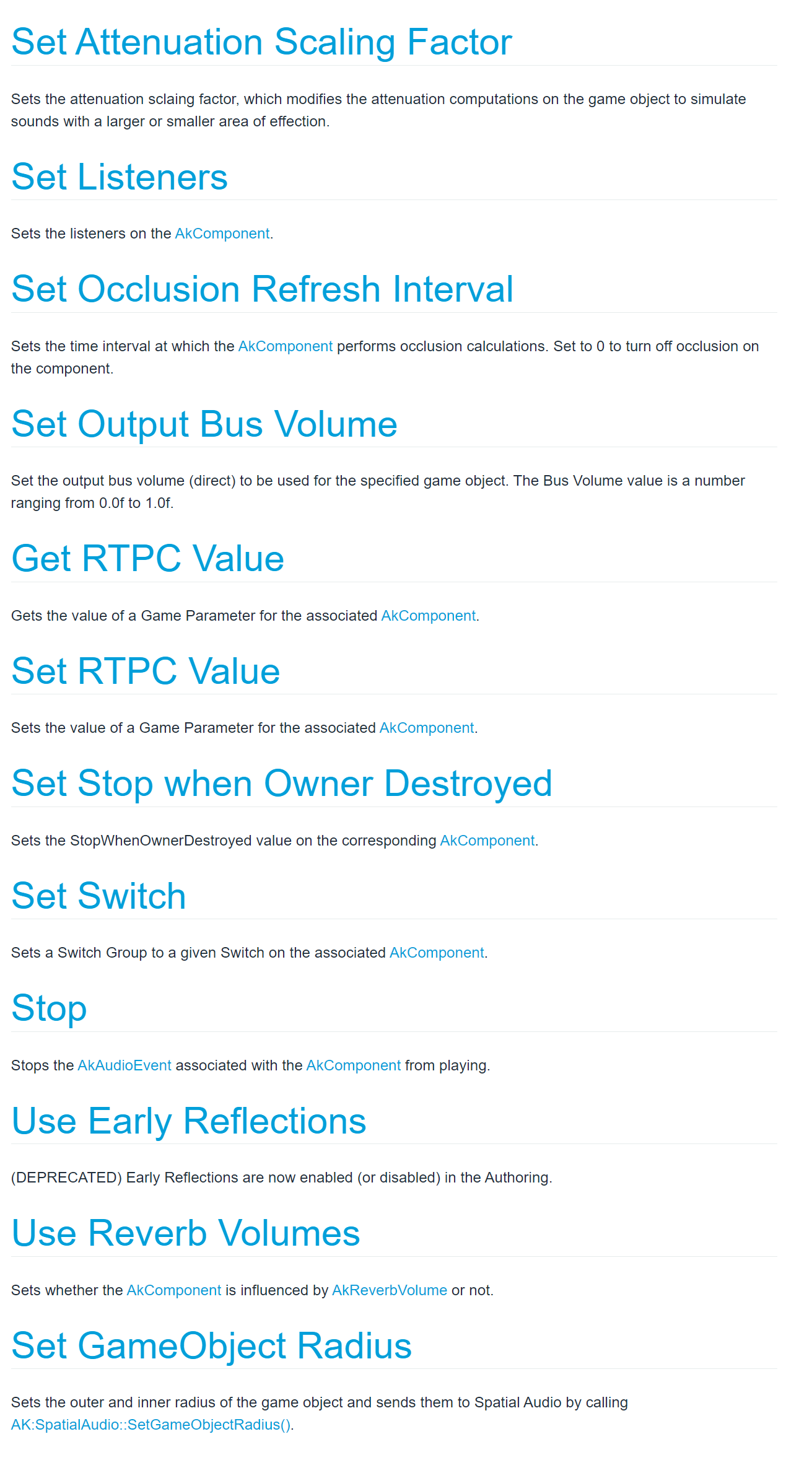

The AkComponent is a Wwise-specific GameObject container that exists in UE, which can make calls to the following functions:

For those who have no code knowledge, it may be difficult to understand this by just looking at the code.

Let's put it another way. Here is how the game engine uses the audio middleware to play sounds:

Summary (Section I)

The data source for GameObjects in Wwise is the AkComponent in the game engine, and this source is indispensable. (The data may include information on the orientation, steering, GameSyncs, etc.)

Each sound playback points to a target object, the AkComponent.

Wwise will register a corresponding GameObject within Wwise for the target object to carry the sound, with all of its basic properties inherited from the AkComponent.

All posted Events with an AkComponent as the target object are targeted to that GameObject within Wwise.

Or, we can put it another way:

The GameObject within Wwise is the AkComponent's counterpart in the sound world, which carries all Events that point to the AkComponent.

Also, the GameObject within Wwise can be registered and unregistered depending on the AkComponent.
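To make the "registered and unregistered depending on the AkComponent" idea concrete, here is a small sketch in which the GameObject's lifetime is tied to the lifetime of an engine-side emitter. `MockSoundEngine` and `AudioEmitter` are hypothetical names; the mock merely stands in for the registration calls of the Wwise SDK (such as `AK::SoundEngine::RegisterGameObj` and `AK::SoundEngine::UnregisterGameObj`) and does not reproduce their signatures or behavior.

```cpp
#include <cassert>
#include <cstdint>
#include <set>

using GameObjectId = std::uint64_t;

// Hypothetical mock of the sound engine's game object registry.
class MockSoundEngine {
public:
    void Register(GameObjectId id) { registered_.insert(id); }
    void Unregister(GameObjectId id) { registered_.erase(id); }
    bool IsRegistered(GameObjectId id) const { return registered_.count(id) != 0; }

    // In this mock, posting only succeeds on a registered game object.
    bool PostEvent(const char* /*eventName*/, GameObjectId id) const {
        return IsRegistered(id);
    }

private:
    std::set<GameObjectId> registered_;
};

// Ties the game object's lifetime to the lifetime of its engine-side emitter.
class AudioEmitter {
public:
    AudioEmitter(MockSoundEngine& engine, GameObjectId id) : engine_(engine), id_(id) {
        assert(!engine_.IsRegistered(id_));  // an id must not be registered twice
        engine_.Register(id_);
    }
    ~AudioEmitter() { engine_.Unregister(id_); }

    AudioEmitter(const AudioEmitter&) = delete;
    AudioEmitter& operator=(const AudioEmitter&) = delete;

    bool Post(const char* eventName) const { return engine_.PostEvent(eventName, id_); }
    GameObjectId Id() const { return id_; }

private:
    MockSoundEngine& engine_;
    GameObjectId id_;
};
```

While an `AudioEmitter` is alive, every event posted through it lands on the same id. Once it is destroyed, the id is no longer registered and posting to it fails in this mock.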

With all this understood, what can we do?

II. Potential conflicts between Object-based and Event-based audio management

1. What is Event-based audio management?

Event-based audio management was widely used in early game audio middleware and in audio manager implementations for small games. Nowadays, however, almost every sound in-game is an independent object.

Occasionally, audio developers may still choose to manage and control audio playback in oversimplified ways:

- Register a GameObject for each sound playback, and destroy it when the sound playback completes.

- Create several game objects for the sound playback, and assign all the sounds that will be played to these objects.

I will refer to these as Event-based audio management throughout this article.

Both approaches trigger sounds without considering whether, or how, those sounds will need to be controlled after they have been triggered.

While there is certain validity to the above object creation and management approaches, they certainly do not meet all our development needs.

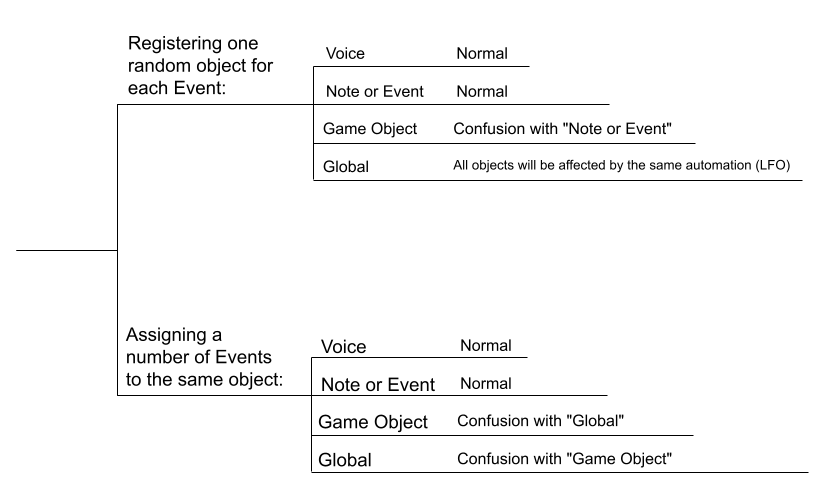

Let's look at the first approach (registering one random object for each Event):

Anyone with programming skills knows that creating and destroying objects in-game consumes system resources. This might be fine for low-frequency Events, but for high-frequency Events a large number of objects will be created and destroyed in a short period of time. The resulting system cost is unnecessary, and it is a potential performance pitfall of the Event-based approach.

Of course, this can sometimes be optimized with object pools, but not every sound can sensibly be assigned to an arbitrary object.

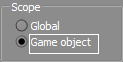

If you have a game with multiple objects triggering the same sound at the same time, the Event-based approach will have a hard time managing these sounds precisely, because all the Events are independent of each other and the audio engine cannot tell which Event belongs to which in-game object. As a result, audio management such as limiting the maximum number of simultaneous voices can only be done globally, that is, using the Global mode in Wwise.

Now let's look at the second approach (assigning a number of Events to the same object):

Usually, when the game demands rich sounds, designers themselves have to take performance control into account.

Assigning all sounds to the same game object makes finer concurrency control based on the actual in-game objects impossible (the same issue occurs with the first approach).

If an upper limit (Global) on the number of simultaneous voices is set for this shared audio object, voices may be killed regardless of which in-game object they belong to, resulting in an implausible listening experience.

To solve the problem of voices being killed by mistake, it’s necessary to remove the restriction on the number of simultaneous voices. However, this results in a significant performance cost.
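The effect of sharing one game object under a voice limit can be illustrated with a toy limiter. This is only a sketch under stated assumptions: `PerObjectLimiter` is a hypothetical class, and the "kill the oldest voice" policy is chosen here for simplicity, not taken from Wwise.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <map>

using GameObjectId = std::uint64_t;

// Hypothetical voice limiter: at most `limit` voices per game object.
// When the limit is reached, the oldest voice on that object is killed (assumed policy).
class PerObjectLimiter {
public:
    explicit PerObjectLimiter(std::size_t limit) : limit_(limit) { assert(limit > 0); }

    // Start a voice for `sourceActor` on game object `id`.
    // Returns the actor whose voice was killed to make room, or -1 if none was killed.
    int Play(GameObjectId id, int sourceActor) {
        std::deque<int>& voices = voices_[id];
        int killed = -1;
        if (voices.size() == limit_) {
            killed = voices.front();
            voices.pop_front();
        }
        voices.push_back(sourceActor);
        return killed;
    }

    std::size_t ActiveVoices(GameObjectId id) const {
        auto it = voices_.find(id);
        return it == voices_.end() ? 0 : it->second.size();
    }

private:
    std::size_t limit_;
    std::map<GameObjectId, std::deque<int>> voices_;
};
```

With a limit of 2, three actors posting to one shared id causes the third sound to cut off the first actor's voice. If each actor posts to its own id, the same three sounds coexist and the limit only ever applies within a single actor.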

Summary (Section II)

Event-based audio management treats the GameObject as an attachment to the Event and assigns a GameObject to each sound Event.

Object-based audio management, on the other hand, treats the Event as an attachment to the GameObject. It registers a GameObject within Wwise that corresponds to the in-game object, and the Event works as a child that inherits the GameObject’s information.

III. Possible issues if GameObjects are not managed properly

To make it easier to understand, here I will use some cases that you may come across in your work for analysis.

Assume that the game you are developing triggers and manages sounds with the Event-based approach instead of the Object-based approach. In that case, Wwise will not receive GameObject information that corresponds to the in-game objects. Instead, a GameObject will be generated arbitrarily for each audio Event.

Then we may encounter the following issues while using Wwise:

1. All GameObject-related features in Wwise will fail or behave abnormally

- All Random and Sequence Containers with the "Game object" Scope selected will not refresh their lists when being triggered to play. It’s especially noteworthy that if a new GameObject is registered each time it is played, the Sequence Container being played will always play only its first child item.

In such a situation, designers will spend a lot of time debugging unless they double-check the target object of each sound in the Profiler.

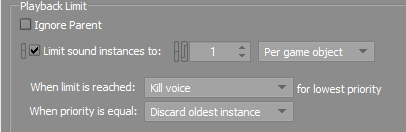

- The "Per game object" Playback Limit will fail, and there will be no way to limit the actual number of voices in-game. The voice management feature for concurrent control will have to be re-implemented at the engine level. However, this will be up to the programmers. They may not be happy to do so.

As we mentioned in Section II, concurrent control of sounds based on game objects will not be possible. Whether registering one random object for each Event or assigning a number of Events to the same object, designers cannot control the performance at the authoring tool level. This means that the programmers have to take back the work that has already been handed over.

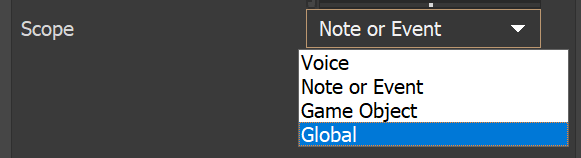

- The behavior of different automation (LFO) scopes for the plug-in parameters will be confused.

For details on how confusing it can be, see the following chart:

- When registering one random object for each Event, Event Actions such as Stop and Break cannot target the Game Objects that actually exist in the game (these behaviors will have to be managed by the program). Only global features such as "Stop all" can be used.

This is the same as the aforementioned Playback Limit issue. It means that the programmers have to take back the work that has already been handed over.

- You cannot use the Game Object Profiler in older versions of Wwise because Game Objects are generated sequentially, and it's hard to know what the Game Object ID is for the sound that's about to be played, unless what you want to monitor is a continuously looping sound.

This can make testing and debugging more difficult for audio designers.
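The Sequence Container symptom from the first item in this list (only the first child ever plays) follows directly from keeping the playlist position per game object. A toy model shows this. `SequenceContainer`, `PlayOn`, and `Forget` are hypothetical names, and the sketch makes no claim about how Wwise stores this state internally.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>
#include <utility>
#include <vector>

using GameObjectId = std::uint64_t;

// Hypothetical model of a sequence container whose playlist position
// is stored per game object (the "Game object" scope).
class SequenceContainer {
public:
    explicit SequenceContainer(std::vector<std::string> items) : items_(std::move(items)) {
        assert(!items_.empty());
    }

    // Return the next item for this game object and advance its position.
    const std::string& PlayOn(GameObjectId id) {
        std::size_t& position = positions_[id];  // unknown ids start at position 0
        const std::string& item = items_[position];
        position = (position + 1) % items_.size();
        return item;
    }

    // Unregistering the game object discards its playlist position.
    void Forget(GameObjectId id) { positions_.erase(id); }

private:
    std::vector<std::string> items_;
    std::map<GameObjectId, std::size_t> positions_;
};
```

Playing three times on one resident id steps through the playlist in order, whereas playing on a fresh id each time (or forgetting the id after every playback) always yields the first item.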

2. Unnecessary system costs

- When there are high-frequency One-Shot sounds, this can lead to repeated creation and destruction of sound objects. This situation, as we mentioned earlier, generates unnecessary system costs;

- Since each sound is registered with its own GameObject, each one will also independently calculate its orientation and steering, and read its own GameSyncs. This will result in more pointless system costs.

Take the player's footsteps as an example:

- If there is a resident Game Object on the player, Wwise will only receive a call to the SetSwitch API when the ground material under the player's feet changes, then it will play the correct audio content;

- Otherwise, each time the player takes a step, it will detect the ground material and register a Game Object with a random ID. Then, it will call the SetSwitch API for this Game Object to ensure that the correct content is played, then play the audio content. After the audio content is played, the Game Object will be unregistered. This process will be repeated for each footstep.
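The difference between the two footstep flows can be made visible by simply counting the calls each one issues. The sketch below does only that: `CallCounter`, `ResidentFootsteps`, and `PerStepFootsteps` are hypothetical helpers, and no real sound engine is involved.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical tally of the calls a footstep system would send to the sound engine.
struct CallCounter {
    int registerCalls = 0;
    int setSwitchCalls = 0;
    int postEventCalls = 0;
    int unregisterCalls = 0;
    int Total() const {
        return registerCalls + setSwitchCalls + postEventCalls + unregisterCalls;
    }
};

// Resident game object: registered once; the switch is set only when the surface changes.
CallCounter ResidentFootsteps(const std::vector<std::string>& surfaces) {
    CallCounter calls;
    calls.registerCalls = 1;
    std::string current;  // empty means "no switch set yet"
    for (const std::string& surface : surfaces) {
        assert(!surface.empty());  // an empty name would be mistaken for "not set"
        if (surface != current) {
            ++calls.setSwitchCalls;
            current = surface;
        }
        ++calls.postEventCalls;
    }
    calls.unregisterCalls = 1;
    return calls;
}

// One temporary game object per step: register, set switch, post, unregister, every time.
CallCounter PerStepFootsteps(const std::vector<std::string>& surfaces) {
    CallCounter calls;
    for (std::size_t i = 0; i < surfaces.size(); ++i) {
        ++calls.registerCalls;
        ++calls.setSwitchCalls;
        ++calls.postEventCalls;
        ++calls.unregisterCalls;
    }
    return calls;
}
```

For six steps over two surfaces (four on grass, then two on stone), the resident variant issues 10 calls in total and the per-step variant issues 24. The gap widens with every additional step.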

Take the vehicle as another example:

If the vehicle's engine, tire noise, and wind noise are triggered by independent Events, they all need to read the vehicle's speed.

- If there is a resident Game Object on the vehicle, the vehicle speed parameter for the three Events corresponding to that Game Object can be controlled by the same RTPC (calling SetRTPCValue once);

- On the contrary, if you register one random object for each Event, three Game Objects will be generated. Then, you have to pass the vehicle speed RTPC for each Game Object (calling SetRTPCValue three times), even if the RTPC values needed by these Game Objects are the same.

This makes the memory/CPU cost for calculating the vehicle speed RTPC three times the reasonable cost.

This cost may not be significant, but it’s surely unreasonable.
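The vehicle case can be sketched the same way. `MockRtpcStore` and `UpdateVehicleSpeed` are hypothetical. The mock only records one value per game object and counts how often it is set, standing in for per-object calls such as `SetRTPCValue`.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

using GameObjectId = std::uint64_t;

// Hypothetical mock that stores one speed value per game object and counts the calls.
class MockRtpcStore {
public:
    void SetSpeed(GameObjectId id, float value) {
        values_[id] = value;
        ++setCalls_;
    }

    float SpeedFor(GameObjectId id) const {
        auto it = values_.find(id);
        assert(it != values_.end());  // the value must have been set for this object
        return it->second;
    }

    int SetCalls() const { return setCalls_; }

private:
    std::map<GameObjectId, float> values_;
    int setCalls_ = 0;
};

// Push one speed update to every game object that carries a vehicle sound.
void UpdateVehicleSpeed(MockRtpcStore& store,
                        const std::vector<GameObjectId>& emitters,
                        float speed) {
    for (GameObjectId id : emitters) {
        store.SetSpeed(id, speed);
    }
}
```

With one resident game object for the vehicle, each speed update is a single call whose value is shared by the engine, tire, and wind Events. With one game object per Event, the same update has to be repeated for three ids.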

3. Repeated instantiation of plug-ins on the GameObject

As we mentioned earlier, each sound is an independent object, so each sound instantiates a new plug-in. Each plug-in has its own runtime parameters, instead of having the same parameters for plug-ins on the same GameObject.

4. Limits the use of virtual instance technology

GameObjects cannot be registered and destroyed independently. There is also no way to reduce the system cost by instantiating only one of several emitters that make the same sound, so all emitters will be physically instantiated.

Summary (Section III)

If you encounter a situation similar to the ones described above in your work, check whether audio GameObjects are being registered and unregistered in-game as intended. This could help you determine the cause of the problem.

IV. Practical GameObject management ideas

1. At the early stage of game development, an audio object pool should be created, and the sound Events that may be triggered repeatedly at high frequency should be managed via that pool. It is probably worth managing all audio objects this way.

2. During game development, in addition to the sound triggering and stopping mechanism, attention should also be paid to the creation and destruction (reference and dereference) timing of the GameObject to which the sound is attached.

3. While developing the audio interface for the game engine, care should be taken to keep the parameters as consistent as possible with the official Wwise SDK. If necessary, a separate audio interface for Wwise should be developed.

4. While replacing or upgrading audio middleware, attention should be paid to the compatibility between the old and new middleware SDKs. If the compatibility is low, you may need to develop a new audio interface and modify the sound hookup logic during the upgrade process.
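As an illustration of the first idea, here is a minimal sketch of an audio object pool. `GameObjectPool` and its methods are hypothetical, and the ids are plain integers. A real implementation would register each id with the sound engine when the pool is created and unregister it when the pool is destroyed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using GameObjectId = std::uint64_t;

// Hypothetical pool that prepares a fixed set of game objects up front
// and hands them out for short one-shot sounds.
class GameObjectPool {
public:
    GameObjectPool(GameObjectId firstId, std::size_t size) {
        assert(size > 0);
        for (std::size_t i = 0; i < size; ++i) {
            free_.push_back(firstId + i);  // a real pool would register the id here
            ++registerCalls_;
        }
    }

    // Borrow an id; returns false when the pool is exhausted.
    bool Acquire(GameObjectId& outId) {
        if (free_.empty()) {
            return false;
        }
        outId = free_.back();
        free_.pop_back();
        return true;
    }

    // Return an id once its sound has finished playing.
    void Release(GameObjectId id) { free_.push_back(id); }

    int RegisterCalls() const { return registerCalls_; }
    std::size_t Available() const { return free_.size(); }

private:
    std::vector<GameObjectId> free_;
    int registerCalls_ = 0;
};
```

The number of registrations stays fixed at the pool size no matter how many one-shots are played. Note that pooled ids carry no identity of their own, so sounds that need per-object features (scopes, playback limits, GameSyncs) are still better served by a resident GameObject on the in-game object.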

Conclusion & Acknowledgement

The above is my research on audio GameObject management as of now. There may be careless omissions, and I will keep improving.

Finally, I’d like to thank Zhengyuyang Zhang (张正昱阳) and Zheqi Wen (温哲奇) who assisted me in writing this article.

Also, I’d like to thank Chenzhong Hou, Product Expert for Greater China at Audiokinetic, for providing a wealth of helpful information and valuable advice during the revision process of the article, which I personally benefited from a lot.
