Blade Runner: Revelations, an interactive mobile VR game based on the iconic Blade Runner franchise, was recently released by Seismic Games and Alcon Media Group. Our friends at Hexany Audio were behind the audio work for this highly anticipated project in collaboration with Google Daydream. Richard Ludlow, Audio Director & Hexany Audio Owner; Nick Tomassetti, Technical Sound Designer; and Jason Walsh, Composer & Sound Designer, share their experience.

What was your creative approach to composing and designing audio for such an iconic auditory world, and how did you reflect on or bridge inspiration from the old and new Blade Runner movies? You seem to have favored an analog approach for the general aesthetic of your sound design over something more digital. Was this decided based on the audio stems you received from the original movie?

JASON: Early on we decided to lean toward the sounds and aesthetics of the original Blade Runner movie because of where this game takes place in the timeline. It was important to provide the game with a soundscape that fits the existing universe Blade Runner fans are familiar with. That being said, we had a lot of freedom to experiment with sounds that fit somewhere between the original film and Blade Runner 2049.

RICHARD: This was definitely a discussion we had with the developer Seismic, deciding where this game fit into the sonic palette of the Blade Runner universe. Part of this was a reflection of where Revelations fits into the timeline and part a vision of the creative directors. Ultimately I think the analog aesthetic was absolutely the right choice for Revelations. But, as Jason mentioned, we also were given stems from Blade Runner 2049 and were able to reference those to blend the two universes together.

How much content from the original stems ended up in the game versus the new stuff you had to create?

JASON: Everything you hear in the game is original content! The stems we received were an awesome reference. I think it’s important to study the materials of a franchise like this because it really helps establish an appropriate sonic palette.

The player navigates the game by jumping to different nodes (i.e. fixed positions in the environment). How did you technically approach the mix with this “node-based” mechanism?

NICK: Blade Runner Revelations’ node-based navigation system offered some peace of mind knowing that the player is set to a fixed position at a fixed height, and the only variable that really comes into play is which way they are looking. In some cases, we were able to set up a per-node mix using RTPCs, States, and “unique to that node” Wwise Events.

The first level the player sets foot in is a busy, rainy Chinatown alley in which we used RTPCs to control the level of the rain and shift the focus from one major sonic focal point to another. As the player walks towards the center of the alley they reach a node at which they can hear the chant of the Hare Krishna monks, the cooking chef, and the subtle music of the club. The moment they choose to head towards one of these points of interest, we adjust the mix to center around that section of the level, leaving room for the smaller ambient sounds to shine.
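As an editorial illustration of the per-node mix idea, here is a minimal Python sketch, not the team's actual implementation. The node names, RTPC names, and values are all hypothetical; the only thing taken from the interview is that each node can carry its own set of RTPC values. In a real project, each returned value would be handed to the audio engine's RTPC-setting call when the player arrives at the node.

```python
# Hypothetical RTPC names and 0-100 values, invented for illustration only.
DEFAULT_MIX = {
    "rain_level": 70.0,
    "monks_focus": 50.0,
    "chef_focus": 50.0,
    "club_focus": 50.0,
}

# Per-node overrides: only the values that differ from the default mix.
NODE_MIX = {
    "alley_center": {},  # every point of interest equally present
    "monks": {"rain_level": 55.0, "monks_focus": 100.0, "chef_focus": 20.0, "club_focus": 20.0},
    "chef": {"rain_level": 55.0, "monks_focus": 20.0, "chef_focus": 100.0, "club_focus": 20.0},
}


def rtpc_values_for_node(node_id: str) -> dict:
    """Return the full set of RTPC values to apply when the player lands on a node."""
    mix = dict(DEFAULT_MIX)                # start from the default mix
    mix.update(NODE_MIX.get(node_id, {}))  # apply that node's overrides, if any
    return mix
```

Because the player can only ever stand on a known node, a lookup like this is enough to describe the whole mix for a level; no distance-based interpolation is needed.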

Can you elaborate on how you leveraged object-based audio versus ambisonics to create a cohesive spatial mix for the experience?

RICHARD: For this game we didn’t actually use any ambisonic recordings. Wwise has some very neat features for working with ambisonic mixes but for a number of reasons we often stick with mono, stereo, and quad files in VR. Especially given this was for a mobile platform like Daydream, we had technical constraints we had to keep in mind.

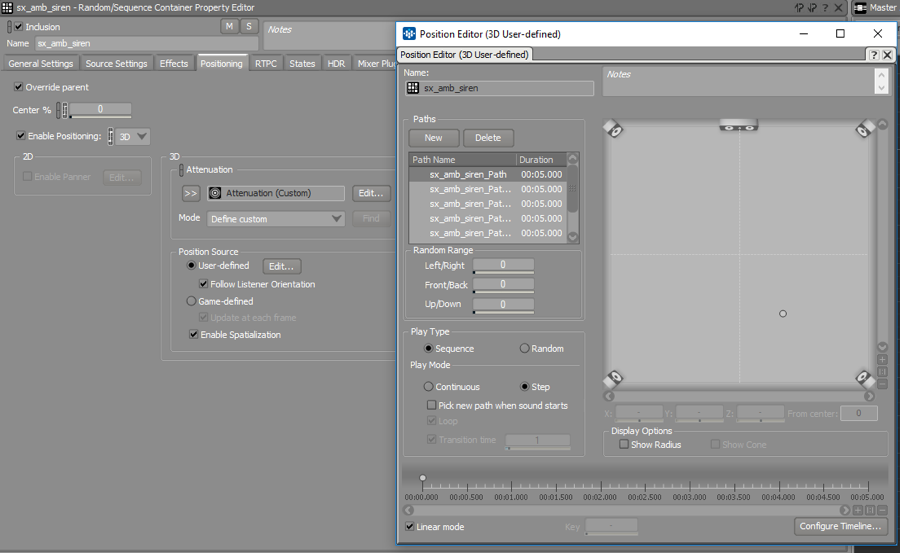

But we were able to make a very compelling mix with spatialized audio sources in the environments. And in addition to anchoring mono and stereo sources as ambient emitters in the world, we’ll frequently use Wwise’s user-defined positioning, which allows us to build spatialized ambiences without the need to place every individual element on objects in a Unity scene.

So this mix had many, many spatialized elements, but we were able to achieve this without actually using ambisonic recordings.

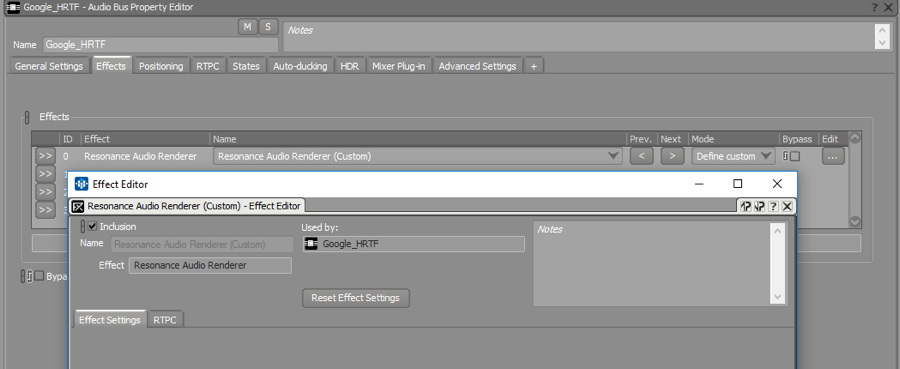

You worked with Google Daydream and Resonance. What was it like working with Wwise for the mobile VR experience? Any considerations or compromises you had to make during development to fit under certain technical constraints?

RICHARD: Wwise is actually really great for mobile experiences because if you’re going cross-platform it’s easy to do platform-specific optimizations. This was less of a concern on a Daydream title since the range of devices that support it is more limited, but we still certainly had the technical constraints of a mobile platform to work within.

As far as spatial HRTF plug-ins go, Google Resonance is certainly one of the most performant and CPU-efficient, but it’s also more limited in feature set and sound quality. We were therefore more judicious with its usage, utilizing it on gameplay elements that had a clear need for players to be keenly aware of their location in the world. In Revelations this frequently meant enemy footsteps or gunshots that would help a player quickly localize where enemy fire was coming from and be able to respond accordingly.

As a whole, spatial HRTF plug-ins add a depth of dimension to a game by making localization of sound assets clearer. However, they often have a somewhat negative effect on the actual quality of the sound due to the filtering necessary to make them more spatialized. The results are sometimes less crisp and clear, so we are always very aware of this when designing sounds. Frequently in our VR titles we will use a technique we refer to as multi-dimensional spatialization to help negate this.

For this we’ll anchor multiple layers of a sound on the same game object in the world and put only one of the layers into the HRTF plug-in. This allows it to draw a player’s attention to the right location in space without needing the entire sound to be filtered and be affected by the sometimes unpleasant effects of a spatializer plug-in.

So if an NPC is shooting at a player off to the left with a weapon, we might break that gunshot into 3 layers: an HRTF component, a regular mono 3D spatialized layer not in the HRTF plug-in, and a 2D stereo or Quad component with low frequency non-directional energy to act as an enhancement. This way you get a compelling spatialized sound that uses the HRTF plug-in but doesn’t take away from the quality of the sound.
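To make the layering concrete, here is a small editorial sketch in Python of how the three gunshot layers might be described as data. It is not Hexany's actual setup: the layer names and the `Routing` categories are hypothetical, and in practice this routing would be configured inside Wwise rather than in code.

```python
from dataclasses import dataclass
from enum import Enum


class Routing(Enum):
    HRTF = "hrtf"                      # sent through the HRTF spatializer plug-in
    MONO_3D = "mono_3d"                # regular 3D positioning, no HRTF filtering
    NON_POSITIONAL = "non_positional"  # 2D stereo or quad bed, not directional


@dataclass(frozen=True)
class Layer:
    name: str          # hypothetical asset name
    routing: Routing


# All three layers are anchored to the same game object (the NPC's weapon).
GUNSHOT_LAYERS = [
    Layer("gunshot_transient", Routing.HRTF),
    Layer("gunshot_body", Routing.MONO_3D),
    Layer("gunshot_low_end", Routing.NON_POSITIONAL),
]


def layers_routed_to(layers, routing):
    """List the names of the layers that use a given routing."""
    return [layer.name for layer in layers if layer.routing is routing]
```

Only one layer passes through the HRTF filter, so it carries the localization cue while the other two preserve the tone and low-frequency weight of the sound.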

What Wwise features, if any, were especially helpful in allowing you to realize your creative vision?

NICK: User-defined positioning was absolutely the champion of this project. Since navigation was limited to nodes we could essentially design entire ambiences using user-defined positioning in Wwise. This allowed us to spend more time in Wwise and less time in the Unity editor.

Were there any particular technical challenges you hit?

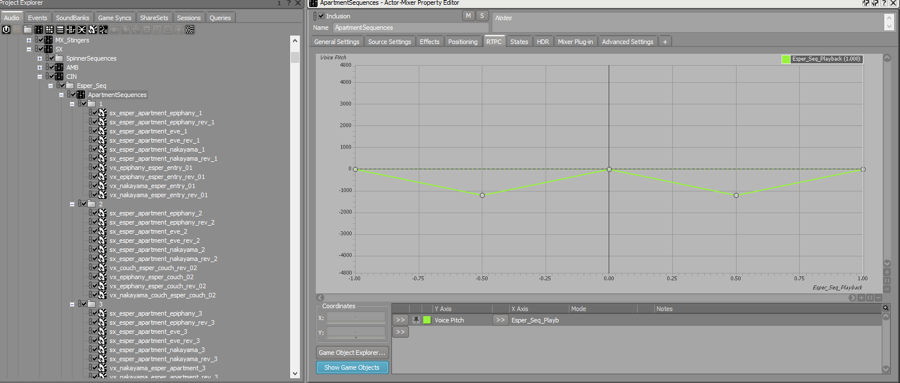

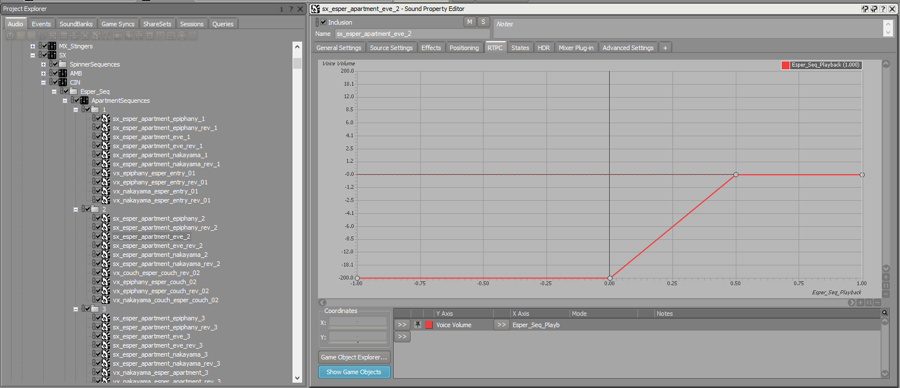

NICK: The most technical aspect of the sound was the Esper sequences, where a player must investigate a crime scene using their Esper tools to peer into the past. When the player clicks on a sequence, a hologram immediately begins reenacting the events that took place. After the sequence finishes, the player can use their controller to rewind, slow down, and play back the sequence as they choose, and of course all of this needs positional sound to accompany it.

Our approach to solving this problem was to have a normal and a reversed version of each SFX, both playing at the same time, with only one or the other audible based on the playback RTPC. If the player changed playback direction, the script in Unity would check the playback position, do some math, and seek to the correct position in the reversed file. The result is a seamless playback system.
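The interview does not spell out the "math", but one plausible reading can be sketched in Python (the original script ran in Unity). This assumes the reversed file is an exact time-reversal of the forward file with the same duration, so the matching position is simply mirrored around the file length. The function names and the sign convention for the playback rate are hypothetical.

```python
def mirrored_seek_ms(position_ms: float, duration_ms: float) -> float:
    """Map a position in one file to the same moment in its time-reversed twin.

    Assumes both files have the same duration and that one is an exact
    reversal of the other, so a point t in one corresponds to (duration - t)
    in the other.
    """
    clamped = min(max(position_ms, 0.0), duration_ms)  # keep the position inside the file
    return duration_ms - clamped


def layer_gains(playback_rate: float) -> tuple:
    """Return (forward_gain, reverse_gain) so that only one layer is audible.

    A non-negative rate means forward playback; a negative rate means rewind.
    """
    if playback_rate >= 0:
        return (1.0, 0.0)
    return (0.0, 1.0)
```

For example, if the player starts rewinding 1.5 seconds into a 4-second sequence, the reversed file would be sought to 2.5 seconds and its layer made audible while the forward layer is muted. Applying the mapping twice returns the original position, which is what lets the player flip direction repeatedly without drift.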

What are you most proud of when it comes to this project?

JASON: Blade Runner is an awesome franchise for how much focus the sound and music gets. Revelations is no exception. It was a pleasure to design sound that plays a huge role in the storytelling.

RICHARD: I think we were able to do quite a bit working with the limitations of VR on a mobile platform. Sometimes this meant making sacrifices but we were really able to pack a lot of stuff into the game, and I think it turned out quite well. That and Nick coming up with the awesome Esper audio tool playback system that allows you to scrub through the audio in some sequences in real time with some very cool effects.

What was the most fun part of working on this project?

JASON: Cyberpunk is one of my favorite genres and it was an amazing opportunity getting to immerse myself in Blade Runner’s universe.

RICHARD: Definitely getting to work with such an iconic IP and reimagine it from the ground up, but also stay true to the existing sonic universe. We’ve had the opportunity to work with several older franchises over the years, and it’s always fun getting to crack open some original content and assets and see how they were made and then reimagine it for our projects.

Can you tell us about your relationship with the stakeholders for this game? How did you collaborate?

RICHARD: We were brought on by Seismic Games for this project, and they were a blast to work with. Alcon was of course involved as well, but our role was primarily with the developer. We frequently work in a distributed development model where we’re set up with the game engine / project through source control so we can collaborate as part of the team. For this project, it was also very helpful being just a short drive from the Seismic offices, so we were able to pop over when necessary, and they were able to come to our studio to do a mix when needed.

We also had a great mixing setup for Daydream, so the developers could come over for mix review sessions. For these we’d have one person sitting in our VR room in the Daydream headset and we’d Chromecast the gameplay to a TV, so everyone in the room could watch together. We’d then wirelessly connect Wwise to the live game build running on the device, so we could make mix changes in real-time. Finally, we’d use a 1/8” audio out cable from a Pixel into a headphone amp / splitter so that four to five people could all listen to the game simultaneously and watch it on the TV. It made real-time mixing sessions with a group of people quite simple!

Richard Ludlow: Audio Director, Owner

Jason Walsh: Composer, Sound Designer

Nick Tomassetti: Technical Sound Designer
