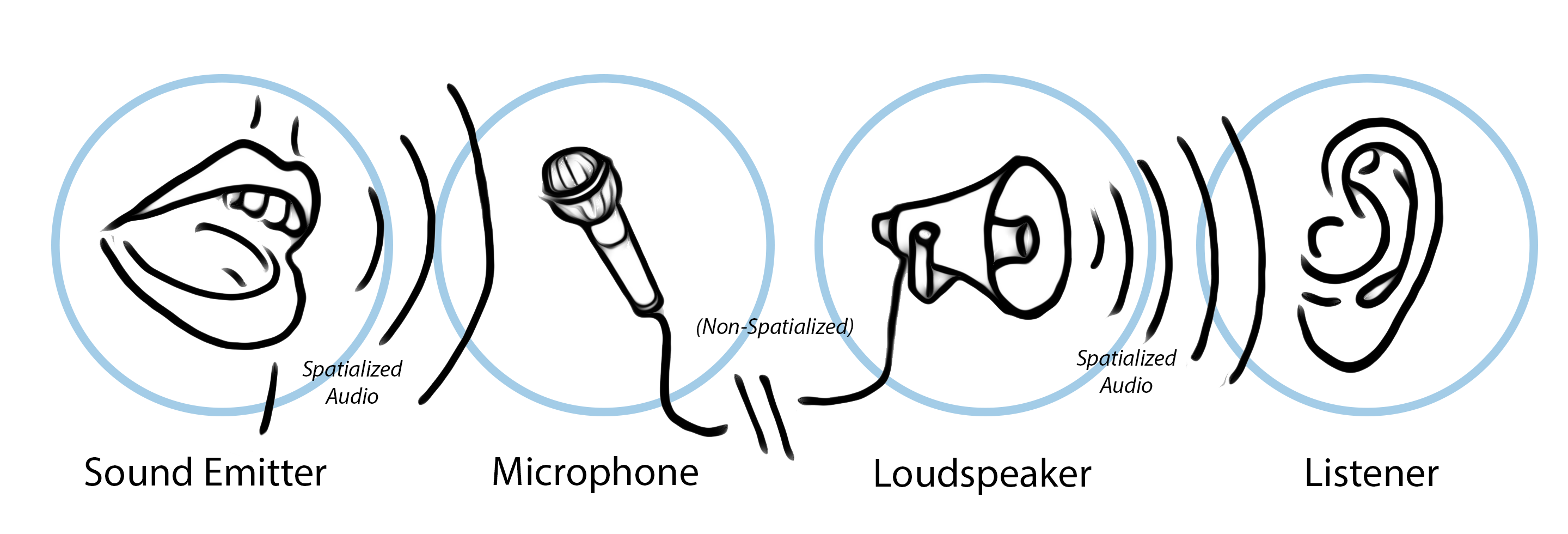

Imagine you are developing a “covert espionage” game that features an audio surveillance system – a gameplay system that allows you to plant a hidden microphone in the world. The audio is captured at the location of the “bug” or virtual microphone and then transmitted wirelessly to a surveillance van. Players in the van listen to what is going on in the bugged room, all in real time. The audio that is captured by the microphone is played through a “virtual loudspeaker” located in the van. The loudspeaker itself is a spatialized sound emitter in the virtual world – the source of the audio just happens to be a mix of sounds that are occurring at another part of the level. We will even apply a futz effect to the mix to mimic the lo-fi transmission of the signal and distortion through the loudspeaker.

Wwise 2017.1 introduces the ability to perform 3D spatialization on an audio signal after mixing together input from any number of 3D sources. We call this "spatialized sub-mix" a 3D bus. This article will demonstrate how to leverage 3D busses in Wwise 2017.1 to simulate the described behavior in a game. This scenario was chosen because it highlights a number of new features and changes within Wwise 2017.1, both in the authoring tool and the sound engine. After reading, you will be able to apply the concepts learned to other audio routing scenarios, including the ones suggested at the end of the article.

Game Objects

In our example, we will register a minimum of 4 game objects. In Wwise 2017.1, listeners are also game objects, and game objects can be both listeners and emitters.

- The player listener: This game object represents the ears of the character listening to the audio in the game. In our example, this is the character in the van listening to the real-time surveillance.

- The environmental sound emitter: An object in the game that emits sound. Perhaps it is an enemy character, or some other sound emitting object of interest to our covert surveillance team. Realistically, your game would have many objects like these to compose a rich environment, but we will have only one for the sake of simplicity in our example.

- The microphone: This object represents the placement of the hidden microphone, the “bug”. The placement of this object is important because only sound emitting game objects that are in range, as defined by their attenuation curves in the Actor-Mixer Hierarchy, will be “picked up” and audible to the microphone.

- The loudspeaker: The sound emitter game object that emits a spatialized mix of audio captured by the microphone. The position of the loudspeaker represents the point of retransmission of the sound. In our example the loudspeaker is in a surveillance van far away from the original source. If it were, however, close enough to the original source, the player listener would actually be able to hear a mix of the loudspeaker and the original source at the same time.

Listener-Emitter Associations

The listener-emitter associations are defined in code by use of the SetListeners and SetGameObjectAuxSendValues APIs. They define the relationship between game objects; that is, which game objects listen to which other game objects. In Wwise, the only thing that distinguishes an emitter from a listener game object is the listener-emitter associations that have been defined between them using the API.

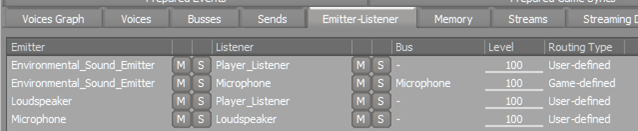

In our example, we define the following associations:

- The environmental sound emitter and the loudspeaker have the player listener as a listener.

- The microphone has the loudspeaker as a listener.

- The environmental sound emitter has the microphone as a listener, and has been assigned as such using the SetGameObjectAuxSendValues API. We set it as an ‘aux send’ listener so that we can use a specific, unique bus from the Wwise project to model the behavior of the microphone in the Wwise Authoring tool.
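The associations above can be sketched as a small data model. This is a plain Python illustration, not Wwise SDK code: `GameObject`, `set_listeners`, and `set_aux_send` are hypothetical stand-ins for the roles that the SetListeners and SetGameObjectAuxSendValues APIs play in the text, and the send level of 1.0 is an assumed value.

```python
from dataclasses import dataclass, field


@dataclass
class GameObject:
    """Hypothetical model of a game object and the objects that listen to it."""
    name: str
    listeners: list = field(default_factory=list)   # listeners reached through the output bus
    aux_sends: list = field(default_factory=list)   # (listener name, aux bus name, send level)


def set_listeners(obj, listener_names):
    # Stand-in for the role SetListeners plays in the article.
    obj.listeners = list(listener_names)


def set_aux_send(obj, listener_name, aux_bus, level=1.0):
    # Stand-in for the role SetGameObjectAuxSendValues plays in the article.
    obj.aux_sends.append((listener_name, aux_bus, level))


def listeners_of(obj):
    """All game objects that listen to obj, by either kind of association."""
    return set(obj.listeners) | {name for name, _, _ in obj.aux_sends}


names = ("Player_Listener", "Environmental_Sound_Emitter", "Microphone", "Loudspeaker")
objects = {name: GameObject(name) for name in names}

# The environmental sound emitter and the loudspeaker are heard by the player listener.
set_listeners(objects["Environmental_Sound_Emitter"], ["Player_Listener"])
set_listeners(objects["Loudspeaker"], ["Player_Listener"])

# The microphone is heard by the loudspeaker.
set_listeners(objects["Microphone"], ["Loudspeaker"])

# The environmental sound emitter is also heard by the microphone, through the Microphone aux bus.
set_aux_send(objects["Environmental_Sound_Emitter"], "Microphone", "Microphone")
```

In this model, a game object becomes a "listener" only because some other object names it in an association, which mirrors the point made earlier about emitters and listeners being the same kind of object.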

You can verify that your emitter-listener associations have been set up correctly by examining the Emitter-Listener tab in the Advanced Profiler. Notice that the entry for Environmental_Sound_Emitter that has Microphone as its listener also indicates that it is sending to the Microphone bus and is of routing type “game-defined”. The routing type “game-defined” indicates that the emitter-listener association and output bus have been established using the game-defined sends API. Routing type “user-defined”, on the other hand, indicates that the output bus is defined by the Wwise project (in the Master-Mixer Hierarchy, the output bus is the parent bus, and in the Actor-Mixer Hierarchy, the output bus is in the General Settings tab). If the routing type is “user-defined”, the Bus column will be empty because a given game object may have any number of voices and/or buses, each with their own unique output bus as defined by the project (in other words, the user). The listener game object in the associations with routing type “user-defined” is established by the SetListeners API; there is no way to establish emitter-listener associations in the Wwise project.
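To make the two routing types concrete, here is a minimal Python sketch that builds the kind of rows described above for our four game objects. It is an illustration only: the function name, the tuple layout, and the input dictionaries are assumptions, and the real profiler view may be organized differently.

```python
def emitter_listener_rows(direct_listeners, aux_sends):
    """Return (emitter, listener, bus, routing type) tuples for every association."""
    rows = []
    # Associations whose output bus comes from the project: the Bus column stays empty.
    for emitter, listeners in direct_listeners.items():
        for listener in listeners:
            rows.append((emitter, listener, "", "user-defined"))
    # Associations made with game-defined sends: the aux bus is known per association.
    for emitter, sends in aux_sends.items():
        for listener, aux_bus in sends:
            rows.append((emitter, listener, aux_bus, "game-defined"))
    return rows


direct_listeners = {
    "Environmental_Sound_Emitter": ["Player_Listener"],
    "Loudspeaker": ["Player_Listener"],
    "Microphone": ["Loudspeaker"],
}
aux_sends = {
    "Environmental_Sound_Emitter": [("Microphone", "Microphone")],
}

rows = emitter_listener_rows(direct_listeners, aux_sends)
for row in rows:
    print(row)
```

Only the row that pairs Environmental_Sound_Emitter with Microphone carries a bus name, because it is the only association in the example that was made with a game-defined send.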

Setting Up the Wwise Project

The Actor-Mixer Hierarchy and the Master-Mixer Hierarchy in the Wwise project act as a template for the voice pipeline that is created at run-time. In Wwise 2017.1, a bus instance in the sound engine is always associated with a game object; however, the routing defined in the project is still respected. We pay special attention to the Enable Positioning check box—this determines if the parent or output bus instance will be spawned on the same game object or on a different game object, as determined by the assigned listeners.

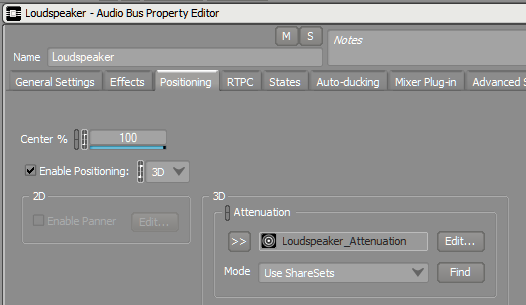

- First, we create a bus for the loudspeaker, and set it to Enable Positioning. This will allow this instance of the Loudspeaker bus to have a distinct position in 3D space, defined by the position of the loudspeaker game object. Furthermore, checking Enable Positioning tells the sound engine to connect the bus to a unique instance of the parent bus for each associated listener. In our case, the loudspeaker game object has a single listener, the player listener, and the parent bus of the Loudspeaker bus is the Master Audio Bus. During run-time, an instance of the Loudspeaker bus, when spawned on the loudspeaker game object, will connect to an instance of the Master Audio Bus on the player listener game object.

- On the Loudspeaker bus, we set the positioning type to 3D. This tells Wwise to spatialize the output of the Loudspeaker bus, relative to the downstream game object (the player listener). We create and assign a ‘loudspeaker’ attenuation to define how far the loudspeaker transmits sound. The positioning tab on the Loudspeaker bus should end up looking something like this:

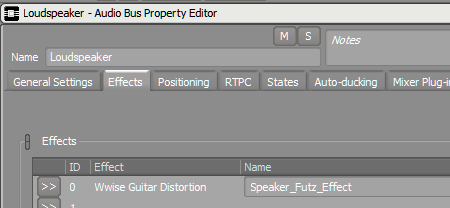

- For extra street cred, we can add a Wwise Guitar Distortion Effect on the Loudspeaker bus to simulate the transmission loss and distortion introduced by the microphone and the loudspeaker. The specific settings of the Effect are left as an exercise to the reader, or perhaps, a talented sound designer friend of the reader.

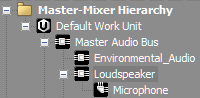

- To simulate the microphone, we create an Auxiliary Bus that is a child of the Loudspeaker bus. We use an aux bus so that we can assign it as the target of a game-defined send on all game objects that will be picked up by the microphone, including the previously mentioned environmental sound emitter. The bus hierarchy looks something like this:

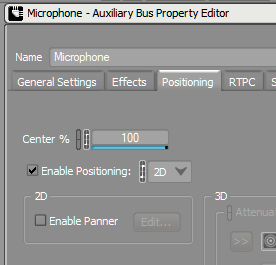

- For the Microphone aux bus, we must also check the Enable Positioning check box; however, we set the positioning to 2D. We check Enable Positioning because we want the Microphone bus instance to be spawned on a game object (also called microphone) with a position in 3D space that is distinct from the downstream game object, the loudspeaker. This little Enable Positioning check box is very important! It tells Wwise that the downstream bus, the Loudspeaker, should be spawned on a different game object (also called loudspeaker).

- We set positioning type to 2D because we do not want the output of the Microphone bus to be spatialized relative to the loudspeaker. In this case, we are simulating an electric signal between the microphone and the loudspeaker, rather than physical vibrations—we don’t want the physical distance between and relative positions of the microphone and loudspeaker to affect the panning or attenuation of the sound. This bus does not use an attenuation.

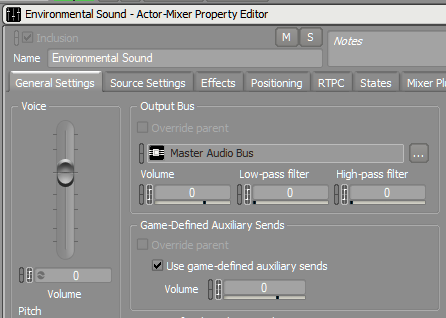

- In the Actor-Mixer Hierarchy, we need at least one sound to play that will be picked up by the microphone, and retransmitted by the loudspeaker. We create an Actor-Mixer object so that all of its children can share the same properties:

- The only thing to note here is that we must check the ‘Use game-defined auxiliary sends’ box. This allows us to define routing to the microphone game object and aux bus in game code using the auxiliary sends API.

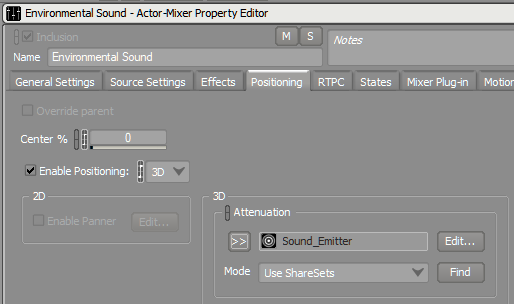

- In the positioning tab for the ‘environmental sound’ object, we make sure that Enable Positioning is checked and that positioning type is set to 3D. This will place the sound in the world using the game object’s position, and ensure that the output is spatialized relative to the listener’s position. In our example, the Event will be posted on the environmental sound emitter game object, whose listeners are both the microphone via an aux send and the player listener via the output bus.

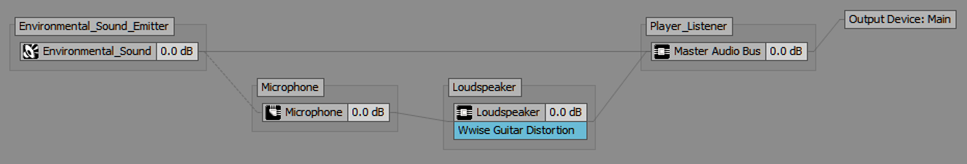
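The effect of the Enable Positioning check box on where bus instances are spawned can be sketched with a short Python model. This is our reading of the rule described above, not the sound engine's actual implementation: `spawn_instances`, the dictionaries, and the recursion are all hypothetical, and the model ignores everything except the bus hierarchy, the check box, and the listener associations.

```python
# Bus hierarchy from the example project: child bus -> parent (output) bus.
PARENT_BUS = {
    "Microphone": "Loudspeaker",
    "Loudspeaker": "Master Audio Bus",
    "Master Audio Bus": None,
}

# Listener associations between game objects, as defined in game code.
LISTENERS = {
    "Microphone": ["Loudspeaker"],
    "Loudspeaker": ["Player_Listener"],
}


def spawn_instances(bus, game_object, enable_positioning, instances=None):
    """Collect the (bus, game object) instances reached from a starting bus instance."""
    if instances is None:
        instances = set()
    instances.add((bus, game_object))
    parent = PARENT_BUS.get(bus)
    if parent is None:
        return instances
    if enable_positioning.get(bus, False):
        # Positioning enabled: the parent bus is spawned on each listener of this game object.
        targets = LISTENERS.get(game_object, [])
    else:
        # Positioning disabled: the parent bus stays on the same game object.
        targets = [game_object]
    for target in targets:
        spawn_instances(parent, target, enable_positioning, instances)
    return instances


# Settings used in the article: both buses have Enable Positioning checked.
article_setup = {"Microphone": True, "Loudspeaker": True}
chain = spawn_instances("Microphone", "Microphone", article_setup)

# What the model predicts if the check box on the Microphone bus is left unchecked.
unchecked = {"Microphone": False, "Loudspeaker": True}
broken_chain = spawn_instances("Microphone", "Microphone", unchecked)
```

With the article's settings, the model yields one bus instance per game object: Microphone on the microphone, Loudspeaker on the loudspeaker, and Master Audio Bus on the player listener. With the check box unchecked on the Microphone bus, the Loudspeaker bus ends up on the microphone game object instead, which is why that check box matters so much.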

After setting up Events, building our SoundBanks and posting an Event in the game, we can confirm that our routing is correct by examining the Voice Graph:

In Wwise 2017.1, the Voice Graph is a powerful tool for troubleshooting routing issues. To reinforce associations, game objects are now represented by encompassing boxes that group sound and bus objects together. We can see in this example that we have a sound ‘Environmental_Sound’ playing on the “Environmental_Sound_Emitter” game object. We have an aux bus “Microphone” which is playing on a game object by the same name and a bus “Loudspeaker”, also playing on a game object by the same name. At the end of the chain, we have the “Player_Listener” game object that has a single instance of the Master Audio Bus.

The resulting audio in the game depends on the position of the 4 game objects and the attenuations that have been defined for sounds playing or being mixed on these game objects. And indeed, the result matches our intuition for the scenario—if the player listener is close to the loudspeaker, they will hear the sound that has been mixed into the microphone; if the player listener is close to the environmental sound emitter, they will hear the sound directly; and finally, the microphone must be close enough to the environmental sound emitter to be able to pick it up.
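As a rough worked example of this intuition, the Python sketch below estimates how loudly the player hears the environmental sound through each path. It rests on simplifying assumptions that are not part of the Wwise project: attenuation is a straight line from full volume at the source to silence at a maximum distance, the Microphone bus passes the signal on unchanged (it is 2D and uses no attenuation), and all positions and ranges are made-up values.

```python
import math


def linear_attenuation(distance, max_distance):
    """Assumed curve: gain 1.0 at the source, falling linearly to 0.0 at max_distance."""
    if max_distance <= 0:
        return 0.0
    return max(0.0, 1.0 - distance / max_distance)


def heard_by_player(emitter, microphone, loudspeaker, player, sound_range, loudspeaker_range):
    """Return (direct gain, gain through the bug) for the player listener."""
    # Direct path: the sound is attenuated over the emitter-to-player distance.
    direct = linear_attenuation(math.dist(emitter, player), sound_range)
    # Bugged path, step 1: the microphone picks up the sound over the emitter-to-microphone distance.
    picked_up = linear_attenuation(math.dist(emitter, microphone), sound_range)
    # Step 2: microphone to loudspeaker is an electrical signal, so distance has no effect.
    # Step 3: the loudspeaker output is attenuated over the loudspeaker-to-player distance.
    via_bug = picked_up * linear_attenuation(math.dist(loudspeaker, player), loudspeaker_range)
    return direct, via_bug


# Hypothetical layout: the bugged room is near the origin, the van is 1000 units away.
emitter = (0.0, 0.0, 0.0)
microphone = (5.0, 0.0, 0.0)
loudspeaker = (1000.0, 0.0, 0.0)
player_in_van = (1002.0, 0.0, 0.0)

direct, via_bug = heard_by_player(
    emitter, microphone, loudspeaker, player_in_van,
    sound_range=20.0, loudspeaker_range=8.0,
)
print(f"direct gain: {direct}, gain through the bug: {via_bug}")
```

In this layout the direct path is silent because the van is far outside the sound's range, while the path through the bug is audible as long as the microphone is within range of the emitter and the player is within range of the loudspeaker.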

To conclude, I am going to leave you with a few more possible uses for the new 3D bus architecture in Wwise 2017.1. I challenge you to apply what you have learned in this article to create these scenarios in your game.

Acoustic Portals

You are making a virtual reality game with a heavy emphasis on spatial audio. It is of utmost importance to simulate acoustic phenomena realistically and convincingly to maintain the illusion. You would like the listener to be able to hear the reverberated sound coming from an adjacent room. All sounds in the room will be mixed together, fed into a reverb unit, and then the output of the effect will be spatialized and positioned as if it were coming through a doorway. The new 3D Bus architecture in Wwise makes this possible.

Early reflections and other effects

Sometimes, applying an Effect per voice is too heavy, but applying it as a send Effect on a shared bus is too broad to be useful. What you would really like to do is create an instance of a bus for each sound emitter game object on which you can apply your Effect. Spatialization may take place downstream of the sub-mix, or not, if perhaps your plug-in takes care of it. Wwise Reflect employs this strategy and leverages the 3D bus architecture in Wwise to generate a unique set of early reflections for each sound emitter. The early reflections are spatialized inside of the Reflect plug-in, relative to the listener’s position.

Clustering sounds to reduce overhead

You have a vehicle sound that is made up of a significant number of component sounds that are driven by a complex RTPC system. The components originate from different physical locations around the vehicle, so you use individual game objects for sound emitters. However, it is costly to apply Effects and spatialization per individual emitter, so you want to do this only when the listener is suitably close by. If the listener is far enough away that they won’t notice subtle changes in angle, you want to mix all the sounds together first, before applying processing and spatialization to the entire group of sounds. The 3D bus architecture in Wwise enables this flexibility.
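One way to decide when the listener is "far enough away" is to measure how wide the component emitters appear from the listener's position. The Python sketch below is only an illustration of that idea: the function names, the 5-degree threshold, and the emitter positions are assumptions, and the decision logic would live in your game code rather than in Wwise.

```python
import math
from itertools import combinations


def angular_spread_deg(listener, emitters):
    """Largest angle, in degrees, between any two emitters as seen from the listener."""
    directions = []
    for emitter in emitters:
        offset = [e - l for e, l in zip(emitter, listener)]
        length = math.sqrt(sum(c * c for c in offset))
        if length == 0.0:
            # The listener sits on an emitter: treat the spread as maximal.
            return 180.0
        directions.append([c / length for c in offset])
    spread = 0.0
    for a, b in combinations(directions, 2):
        # Clamp the dot product to guard acos against rounding error.
        dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
        spread = max(spread, math.degrees(math.acos(dot)))
    return spread


def should_cluster(listener, emitters, threshold_deg=5.0):
    """True when the emitters are close enough in angle to be mixed before spatialization."""
    return angular_spread_deg(listener, emitters) < threshold_deg


# Hypothetical vehicle with two component emitters, two units apart.
vehicle_emitters = [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]

far_listener = (0.0, 0.0, 100.0)
near_listener = (0.0, 0.0, 2.0)

cluster_when_far = should_cluster(far_listener, vehicle_emitters)
cluster_when_near = should_cluster(near_listener, vehicle_emitters)
```

When the function returns true, the game could route the component sounds to a shared 3D bus and spatialize the mix once; otherwise it would keep per-emitter spatialization.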

Comments

Bradley Gurwin

February 01, 2021 at 04:17 pm

Hi Nathan! Just discovering the concept of 3D Buses in Wwise from this article, and I'm finding it super interesting. I have to admit that I'm struggling to re-engineer your example, and was wondering if you'd be willing to go more in depth on how you used the API SetListener and SetGameObjectAuxSendValues. Some code examples in C# would be awesome. I'm trying to build this in Unity, and I've struggled with figuring out game-defined aux sends on previous projects. Thank you!

Richard Westbrook

January 30, 2023 at 10:13 am

Hi, I have a que, "The microphone has the loudspeaker as a listener." Why is that?