We had wanted to build a general-purpose granular synthesizer for Wwise for a very long time, because of the wide range of sounds it can produce and the many ways it can be used in games.

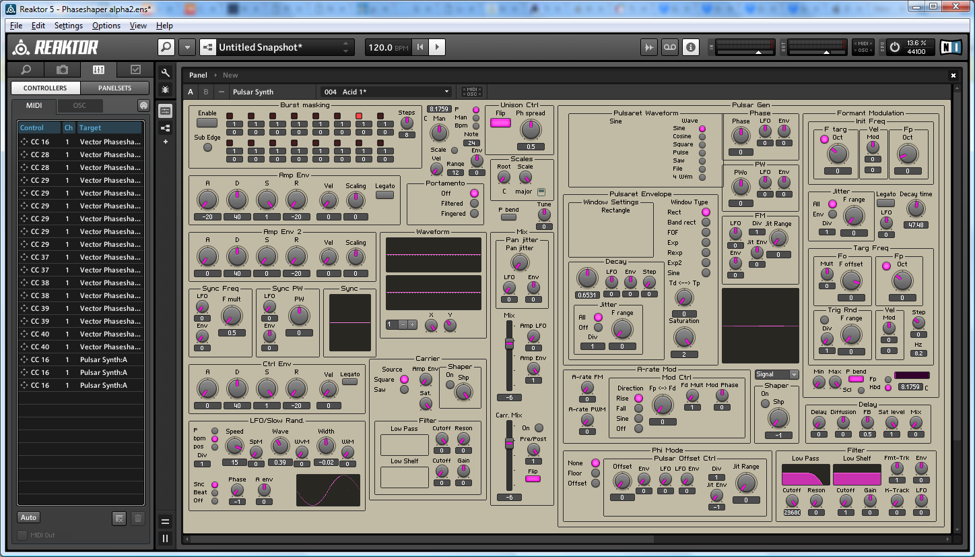

Several years ago, I made a granular-synthesis-like patch for myself in Native Instruments’ Reaktor. I used it a little, found that it could not do exactly what I wanted, and added new features. Then I added even more features. And again. It ended up like this...

A horrible wall of buttons (not Reaktor’s fault: Reaktor is cool, my design wasn’t)

Because granular synthesis can produce such a wide variety of sounds, I think it is very easy to design a granular synthesizer organically, piling each new feature on top of the others until the result is a giant mess.

Focus versus Flexibility

It is commonly accepted that an audio plug-in is more successful when it does one specific thing very well. For example, Crankcase Rev uses granular synthesis along with sophisticated signal processing to emulate the sound of combustion engines, and its UX and choice of user controls are designed to make these kinds of sounds easy to create. Obviously, we did not want to make a plug-in that specializes in combustion engines, since Rev already does that very well.

Although it breaks the rule of focus stated above, we wanted to create a plug-in that would cover most applications that require granular synthesis, without being specialized in any of them. We hoped that a critical mass of users would be able to tweak it to work for their own applications. So, we started with a simple question: what do people really expect from granular synthesis in their game audio?

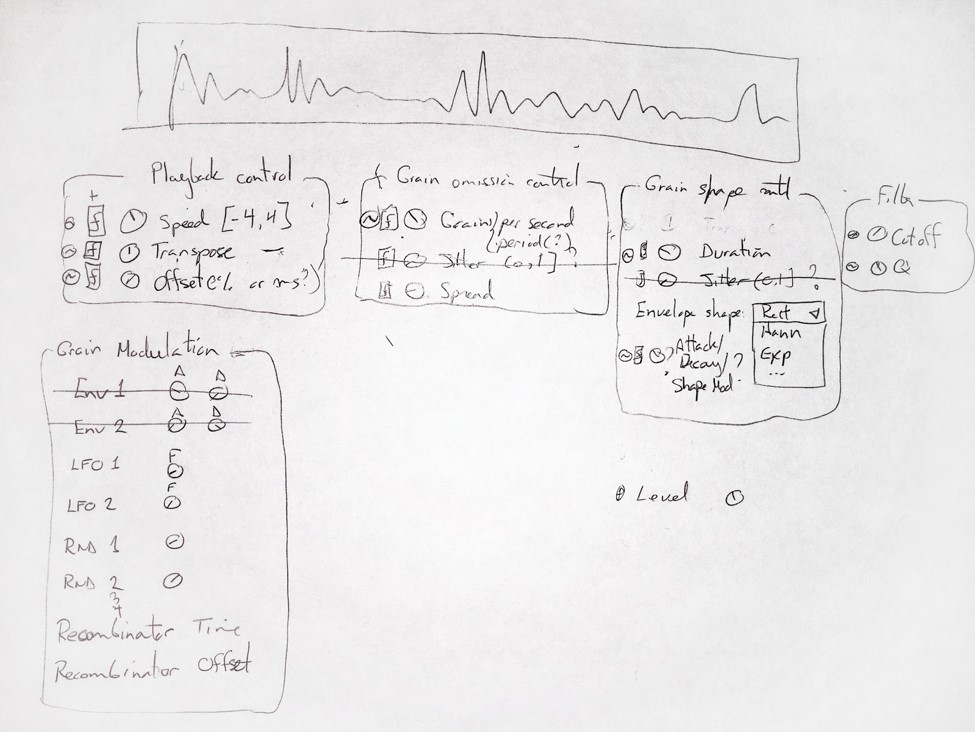

Mock-up of the simplest granular synth

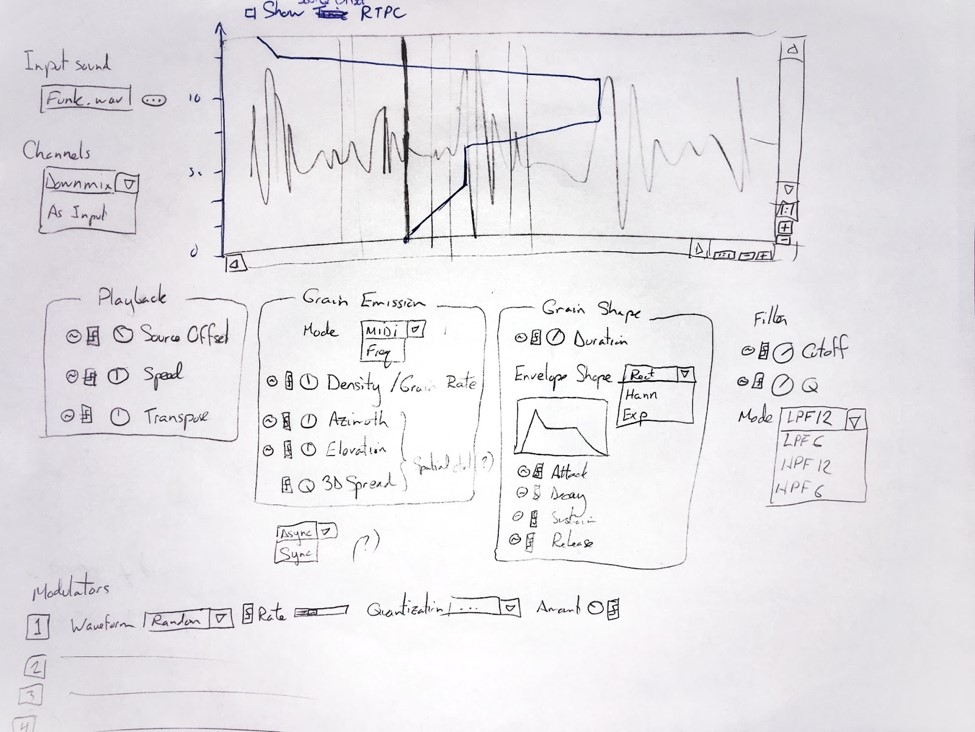

A later mock-up.

User Research

As we attempted to design a synthesizer that could do everything without specializing in anything, it became obvious that we needed to involve our community of Wwise users.

We asked the question: “What would you use a (traditional) granular synthesizer for (in your work)?” Here’s a sample of answers we received:

- “car engines, textured ambiences, rolling sounds, rain, fire”
- “explosions, destruction, oneshot, crumbling sounds”
- “for evolving ambience bed, for sci-fi or ‘semi-natural’”
- “weird, unnatural sound”
- “wavetable synth caps for making music...”
- “implementing run-time / interactive ‘effects’ with lots of control. For example: ‘make the monster hit sound more grainy / scarce as the monster scales are ripped off its skin.’”
- “generating some cool granular sounds that I'd want to bounce back into a DAW and edit”

Many said that they “did not know yet”, and one person even said that they couldn’t find any use for it!

Clearly, the use cases span a wide range. So our outreach to the community did not stop at gathering use cases:

- Before even starting the design, we gathered the written conversations about granular synthesis that we had already had with designers.
- We carried out interviews in-house.
- We built a quick prototype and brought it to GDC, where we hoped to find people interested in trying it.
- We made a second prototype, shipped it to users, and carried out follow-up interviews in which they shared their thoughts and ideas with us.
- We shipped an alpha to get more feedback.

It was a long process of learning, testing, and validating, and we gained valuable knowledge at every step. The questions users answered and the topics of our interviews centred around:

- Use cases;
- Workflow and pain points;
- Specific questions about how features were presented;
- Missing features.

Conversations would often go way beyond that!

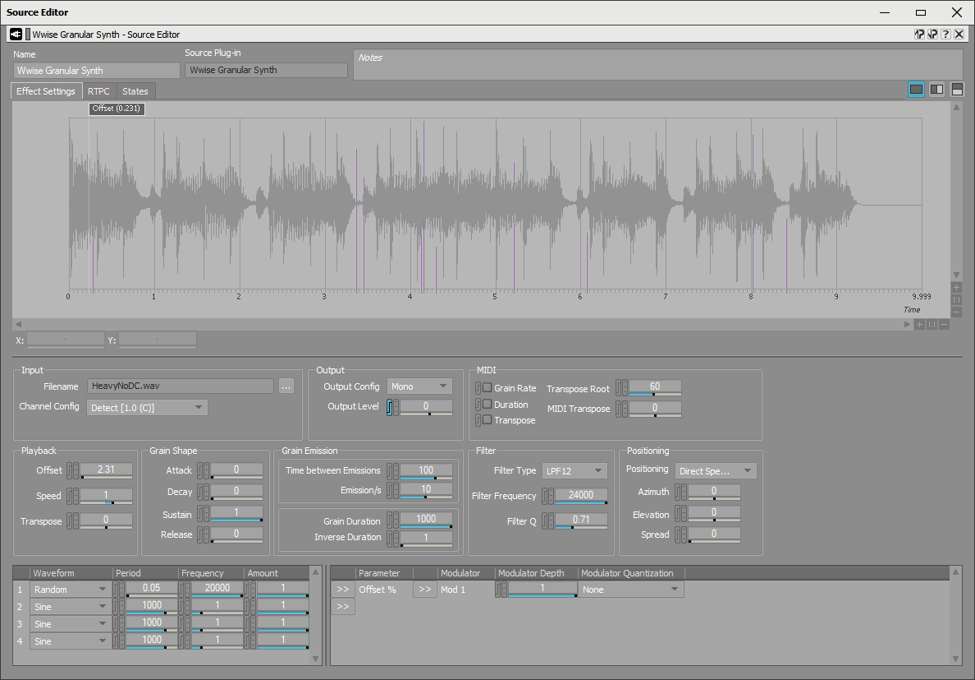

The first functional prototype (GDC)

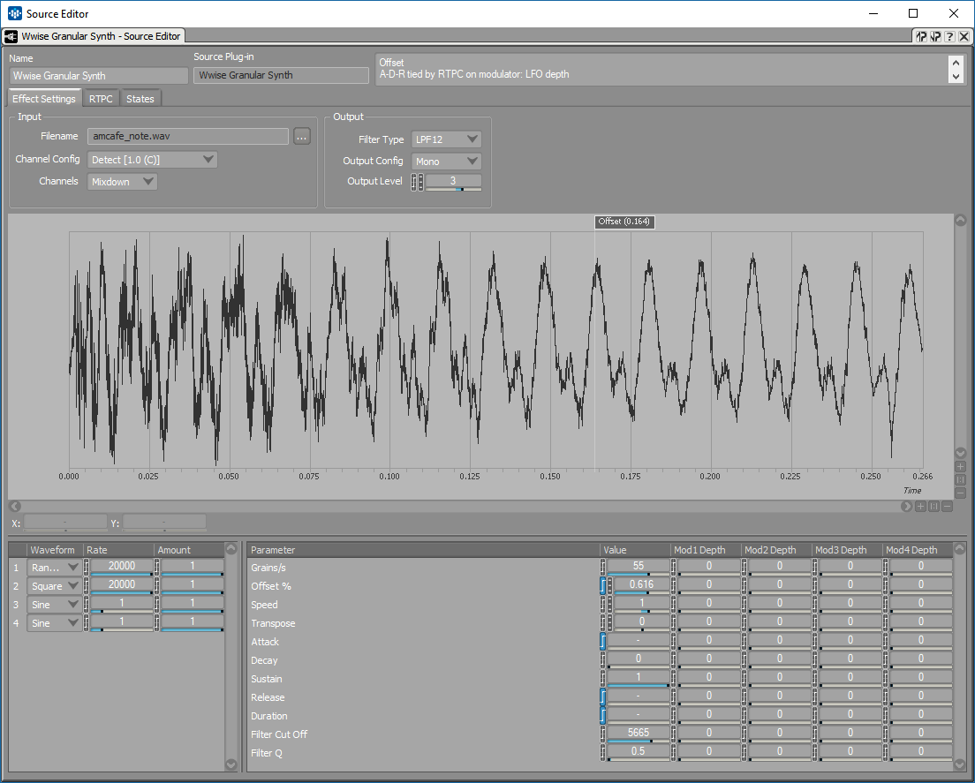

The prototype sent out to users.

I could go into detail about a gazillion things that came out of these interviews, but I’ll mention just one example to give you an idea of what the process is like (just in case you’d like to be involved next time!).

Example: Grain Window

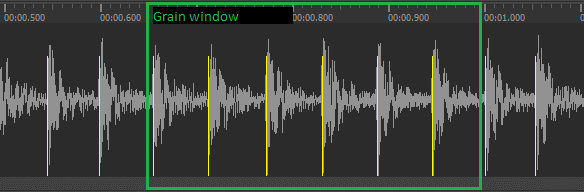

One user’s ambition was to design a car engine. They explained that they expected to see a “window” on top of the waveform, within which grain start positions would be picked at random. These grains should also correspond to the explosions in the original file, and the window itself should move with an RTPC tied to a game-driven RPM value.

Expressing allowed grain start positions with a window and markers.

A dedicated “grain window” feature for selecting grain start positions would suit this kind of application, but not others such as time-scale effects and wavetable synths. Equivalent behavior could be set up with random modulators on the Position cursor, but it would be difficult to visualize how the modulation translates into such a window, and impossible to make grains start at the explosions.

Alpha

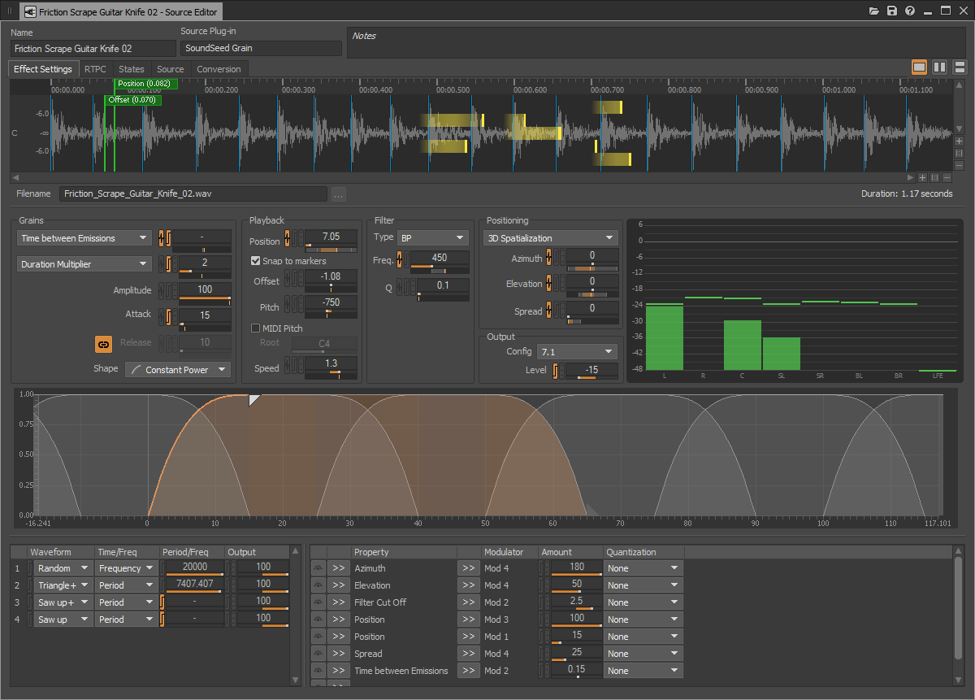

So, while we decided not to add a dedicated “Grain Window” feature, which would have been optimal for this car engine use case, the concept and the discussion around it inspired the Snap to Markers and automatic transient detection features, as well as Grain Visualization (overlaid on the waveform view). We think these features cater to a wider variety of use cases and, combined with modulation of Position, can be used to emulate a “grain window”.

Here is how you would set up the synthesizer to emulate a “grain window”:

- Assign the Position property to an RTPC, which you bind to an appropriate game parameter, say, RPM. This RTPC defines the left boundary of the macro window.
- Assign the Position property to a Random+ modulator with some Amount. The chosen Amount defines the width of the macro window, as a percentage of the file's duration.
- If the width of the window should change with RPM, bind the modulator's Amount to RPM using an RTPC.

You can force grains to always start at precise positions in the original sound, for example at each explosion, by adding markers and enabling the "Snap to markers" option. Automatic transient detection may save you time here.
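The setup above can be sketched as a small Python model of how a single grain start time would be chosen inside the emulated window. This is a hypothetical illustration, not SoundSeed Grain's actual code: the linear RPM-to-Position curve (`rpm_to_position`, 800 to 7000 RPM), the assumption that Random+ only adds a non-negative offset of up to Amount, and nearest-marker snapping are all assumptions made for the example.

```python
import bisect
import random


def rpm_to_position(rpm, rpm_min=800.0, rpm_max=7000.0):
    """Hypothetical linear RTPC curve: map RPM to a Position (0-100 %)
    that marks the left boundary of the grain window."""
    t = (rpm - rpm_min) / (rpm_max - rpm_min)
    return 100.0 * min(max(t, 0.0), 1.0)


def snap_to_marker(t, markers):
    """Return the marker time (seconds) closest to t; markers must be sorted."""
    i = bisect.bisect_left(markers, t)
    candidates = markers[max(i - 1, 0):i + 1]
    return min(candidates, key=lambda m: abs(m - t))


def grain_start(rpm, width_pct, duration_s, markers=None, rng=random):
    """Pick one grain start time (seconds) inside the emulated grain window."""
    left = rpm_to_position(rpm)
    # Assumed Random+ behavior: a random offset in [0, width] added on top
    # of the RTPC-driven base position, clamped to the end of the file.
    pos_pct = min(left + rng.uniform(0.0, width_pct), 100.0)
    t = pos_pct / 100.0 * duration_s
    if markers:
        # Assumed "Snap to markers" behavior: move to the nearest marker.
        t = snap_to_marker(t, markers)
    return t


if __name__ == "__main__":
    rng = random.Random(0)
    explosions = [0.10, 0.35, 0.62, 0.90]  # marker times in a 1.2 s file
    print([grain_start(3000, 20.0, 1.2, explosions, rng) for _ in range(5)])
```

Raising `rpm` slides the window toward the end of the file, widening `width_pct` spreads grains over more of it, and passing markers makes every grain land on an explosion, which is the behavior the user asked for.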

2018.1.4, showing the envelope visualizer, grain window, and live monitoring under the sliders in action.

To conclude, SoundSeed Grain was shaped by valuable, continuous user feedback throughout development. Other concrete examples of features we were able to refine as a result include:

-

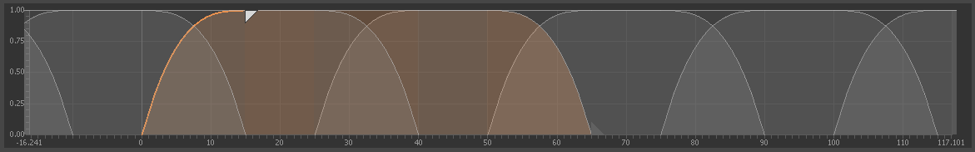

The Envelope Visualizer (center of the window), without which users would struggle to understand the interactions between grain duration, emission, and envelope shape.

-

The slider feedback (live monitoring under each slider), necessary to work with modulation.

The Envelope Visualizer

Slider with live feedback
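To see why an envelope visualizer helps, consider a generic granular model (an illustrative sketch, not how SoundSeed Grain computes its display): grains of fixed duration emitted at a fixed rate overlap, on average, duration × rate times, and their summed envelope depends on both that overlap and the envelope shape. The Hann envelope, the 1 kHz control rate, and the function names below are assumptions chosen for the example.

```python
import math


def hann(x):
    """Hann envelope evaluated at normalized grain time x in [0, 1)."""
    return 0.5 - 0.5 * math.cos(2.0 * math.pi * x)


def summed_envelope(grain_dur_s, rate_hz, total_s, sr=1000, window=hann):
    """Sum the envelopes of grains emitted at a fixed rate.

    Returns (gains, overlaps): the summed envelope amplitude and the number
    of active grains at each control-rate sample."""
    n = int(total_s * sr)
    gains = [0.0] * n
    overlaps = [0] * n
    period = 1.0 / rate_hz
    length = int(round(grain_dur_s * sr))  # grain length in samples
    k = 0
    while k * period < total_s:
        start = int(round(k * period * sr))  # onset of grain k
        for j in range(length):
            i = start + j
            if i >= n:
                break
            gains[i] += window(j / length)
            overlaps[i] += 1
        k += 1
    return gains, overlaps


if __name__ == "__main__":
    for rate in (20.0, 40.0):
        g, o = summed_envelope(0.1, rate, 1.0)
        print(f"rate={rate:.0f}/s overlap={o[500]} gain={g[500]:.3f}")
```

With 100 ms grains at 20 grains per second, two Hann grains overlap at every point and sum to a flat gain of 1. Doubling the emission rate to 40 grains per second doubles the overlap to 4 and raises the steady-state gain to 2, even though neither the duration nor the envelope changed: exactly the kind of interaction that is hard to predict from sliders alone.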

We will certainly pursue this user-oriented approach for other plug-ins, so if YOU would like to be part of the discussion about the next one, by all means, register here!

Acknowledgment

Audiokinetic would like to thank everyone who participated in the design process, our wonderful community who gave us feedback, and, in particular, Tom Bible, Nicholas Bonardi, Mike Caviezel, and Andrew Quinn for their contributions and immense help towards figuring out where we needed to go!

Before you go, here's a quick video on SoundSeed Grain!
