Imagine taking a Virtual Reality experience out of its virtuality and placing it in the real world, while keeping all of its virtual elements. It's a kind of mixed reality set in a room, where interactions happen as they would in a regular VR experience, except that people step into it without any goggles or headphones.

This was the challenge faced by the Riverside Studios/Lucha Libre Audio team in Berlin on the XR Room experience, developed by NEEEU for Factory Berlin. NEEEU is one of the most renowned design studios in town, and Factory Berlin is Europe's largest international innovation community and co-working space, gathering creative people and helping them thrive with their ideas and concepts, much in the spirit of Andy Warhol's Silver Factory in New York.

NEEEU developed a 3D experience, but instead of delivering it through regular VR goggles, they installed eight projectors in a large room so that people could interact with the experience in real life. Our first challenge was figuring out how to correctly add sound and music to such a presentation of VR elements. The solution was to build a 64-speaker array behind fake walls and implement all the audio in Ambisonics. The fake walls were built and the speakers installed by WeSound, a Hamburg-based company that delivers large-scale soundscapes for many projects around Europe. WeSound used the ICST Ambisonics tools from the Institute for Computer Music and Sound Technology (ICST) at the Zürcher Hochschule der Künste in Zurich.

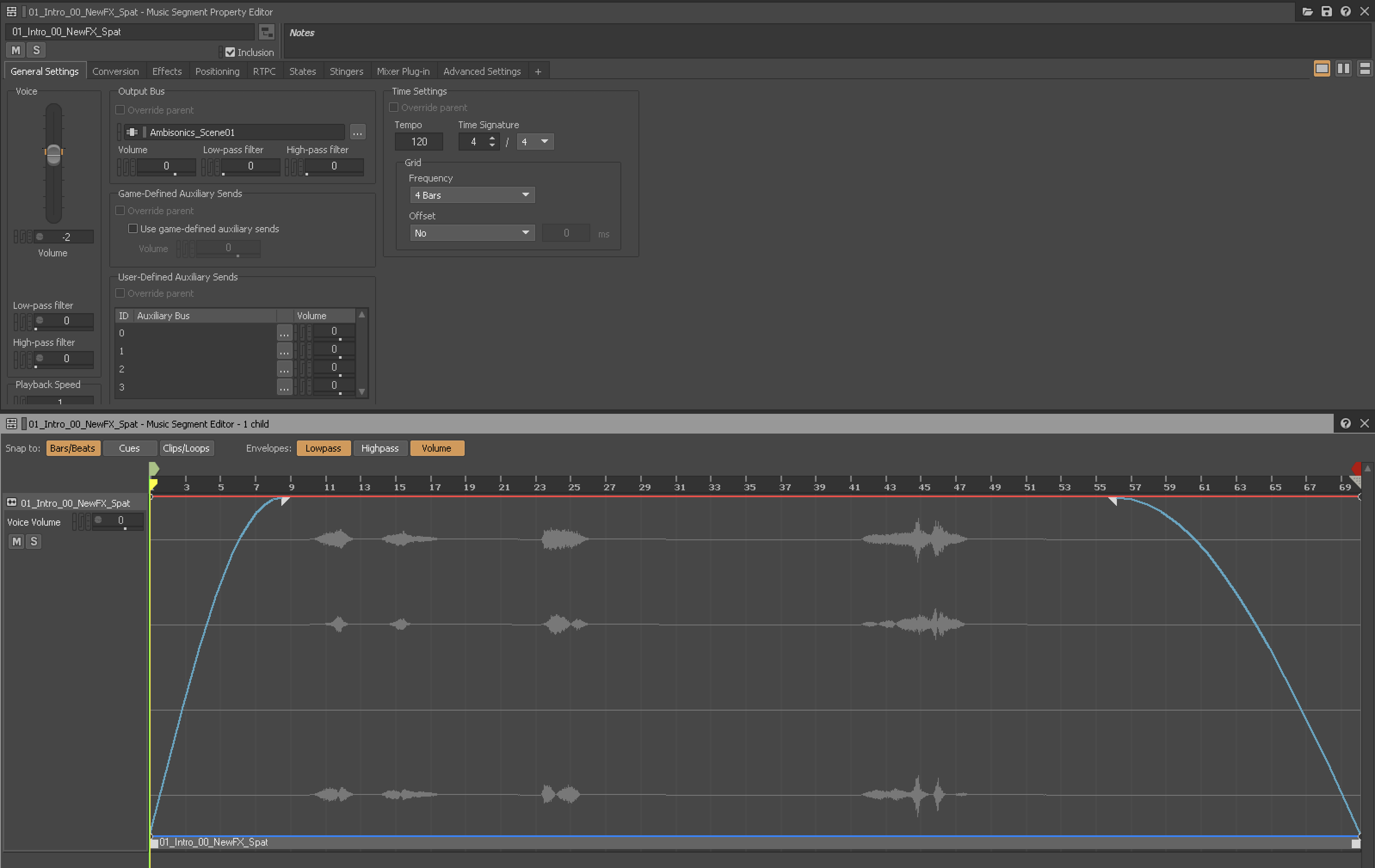

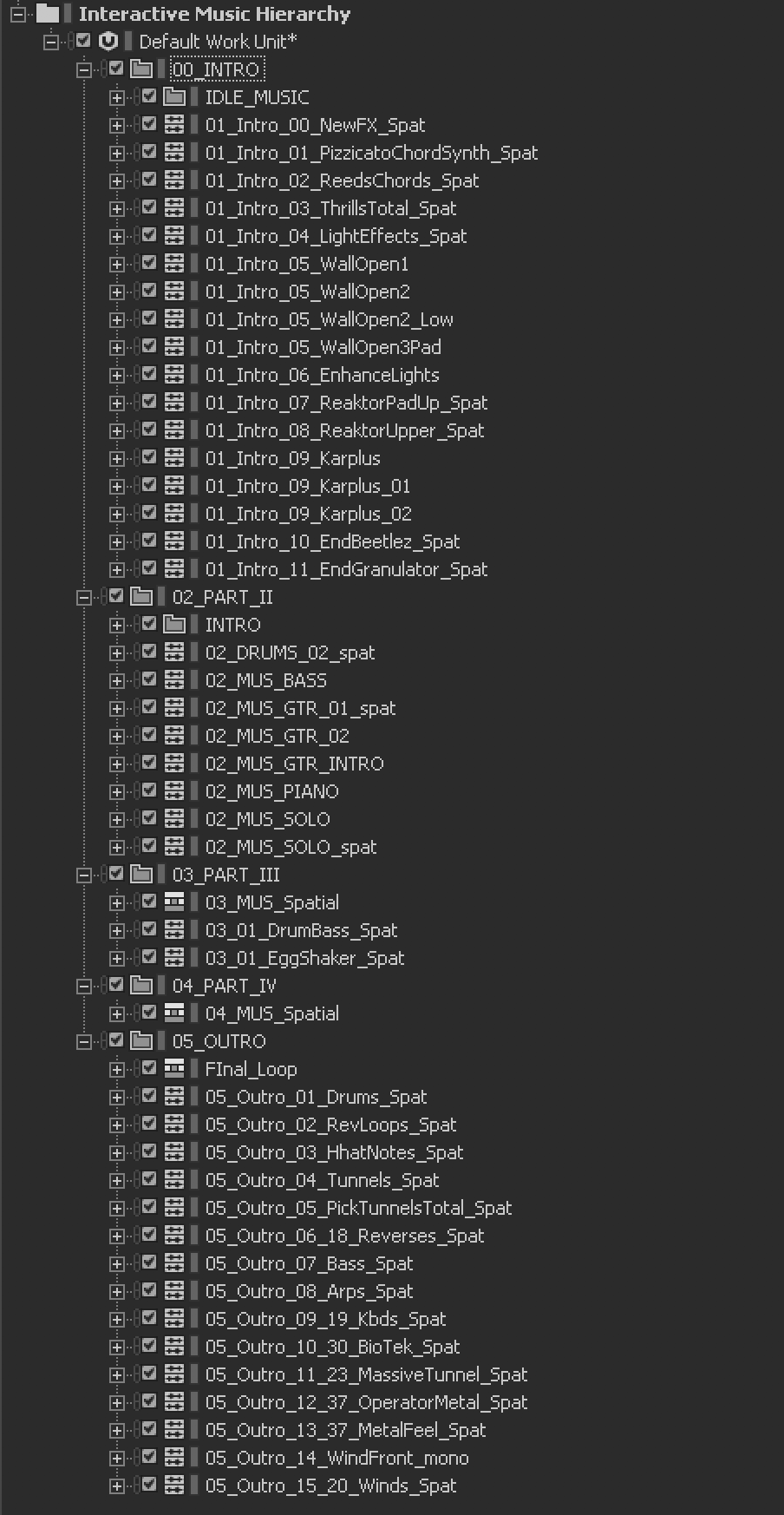

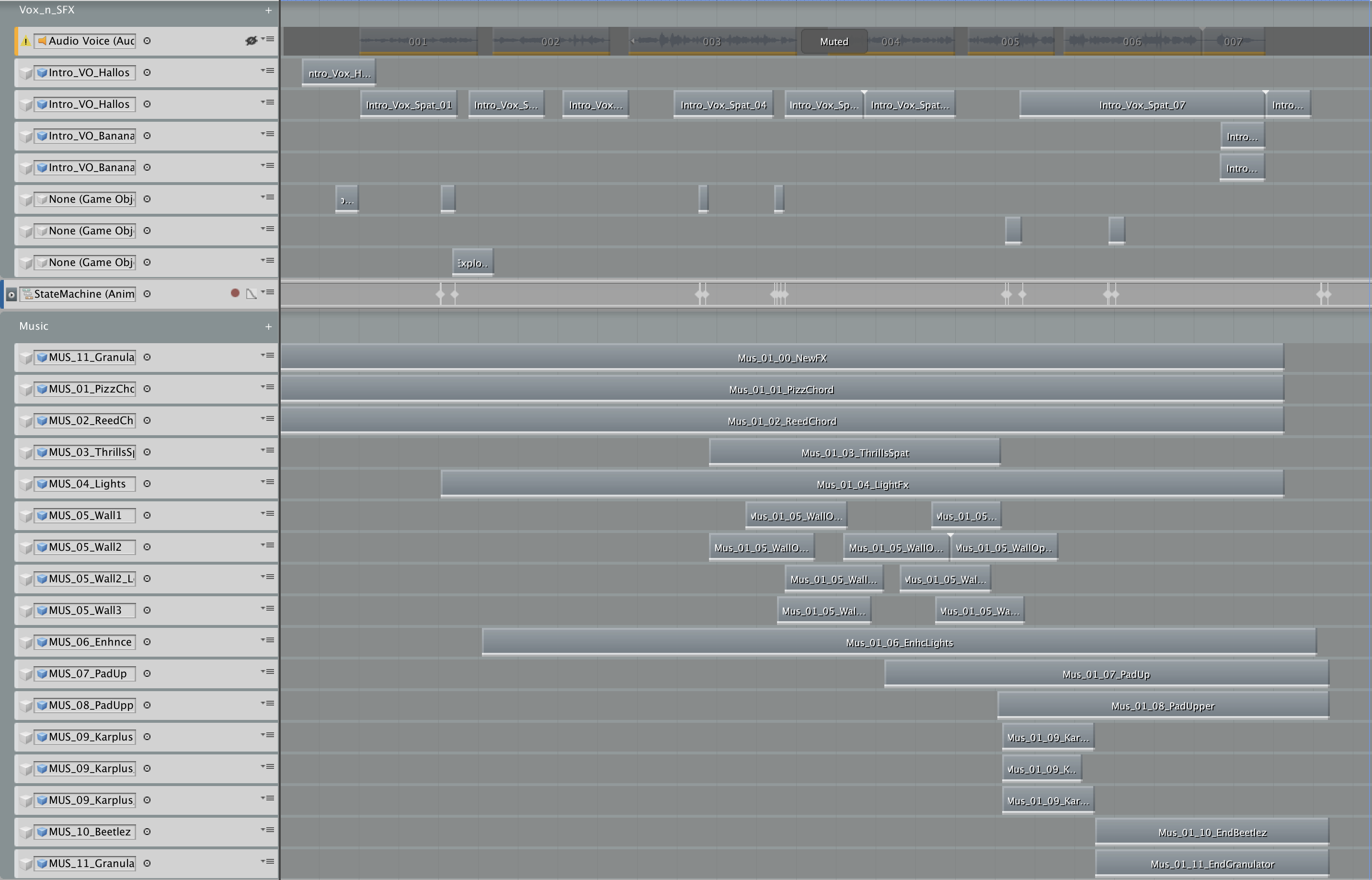

Once the hardware setup was complete, it was time to start adding music and sound effects to the project. The project was developed in Unity, and given its complexity, I decided to use Wwise for the audio. Wwise helped me organize all the music and sound effects for each of the "levels" of the experience. I wanted to use all 64 speakers in the best way possible, so I split the music for each level into stems, placed them spatially in Unity and Wwise according to the positions of the visuals, and added spatialized sound effects and voice-overs, performed by the amazing actress and voice talent Emily Behr.

Since the XR Room was reactive, meaning people could interact with the walls using HTC Vive controllers, all sound effects had to be placed precisely. I separated everything and created multiple attenuation settings, and Wwise helped a lot in delivering the right sound effects for each scene, since the scenes all differ in size, duration and intention.
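To illustrate what a distance attenuation setting does, here is a minimal sketch of a volume-versus-distance curve, assuming a simple linear-in-dB falloff between a hypothetical minimum and maximum distance. In Wwise these curves are drawn in the authoring tool rather than written in code, so this is not its implementation; the function and parameter names are hypothetical and only show why a small scene and a large scene would want different curves.

```python
def attenuation_db(distance, min_dist=1.0, max_dist=20.0, max_atten_db=-60.0):
    """Hypothetical distance attenuation curve.

    0 dB inside min_dist, a linear-in-dB falloff down to max_atten_db
    at max_dist, and clamped to max_atten_db beyond that.
    """
    if distance <= min_dist:
        return 0.0
    if distance >= max_dist:
        return max_atten_db
    t = (distance - min_dist) / (max_dist - min_dist)
    return t * max_atten_db


def db_to_gain(db):
    """Convert a level in dB to a linear amplitude gain."""
    return 10.0 ** (db / 20.0)


# Hypothetical settings: a compact scene with a short radius,
# a large scene with a long one.
small_scene = dict(min_dist=0.5, max_dist=6.0)
large_scene = dict(min_dist=2.0, max_dist=30.0)

for d in (1.0, 5.0, 10.0):
    print(d, round(attenuation_db(d, **small_scene), 1),
          round(attenuation_db(d, **large_scene), 1))
```

The same 5 m distance ends up almost inaudible with the small-scene curve but only slightly quieter with the large-scene curve, which is the kind of per-scene difference separate attenuation settings allow.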

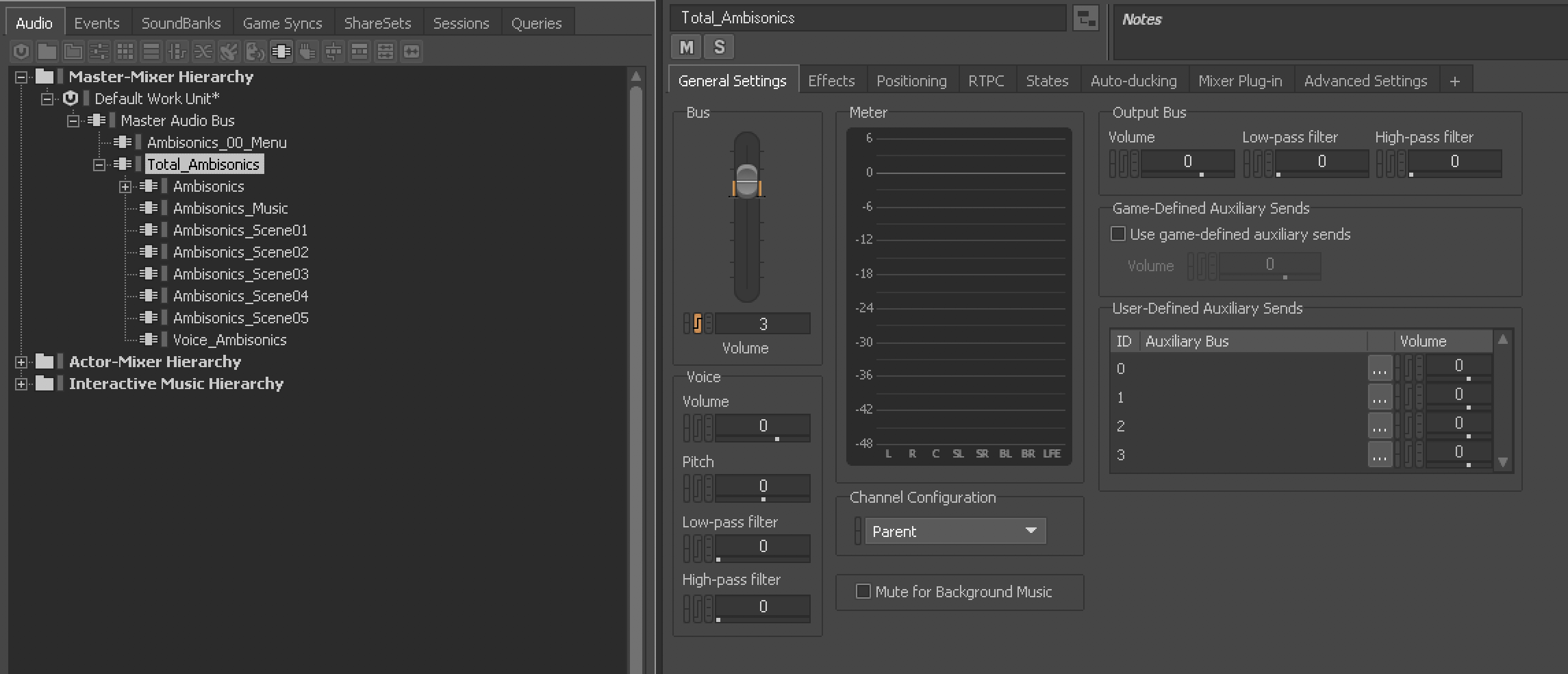

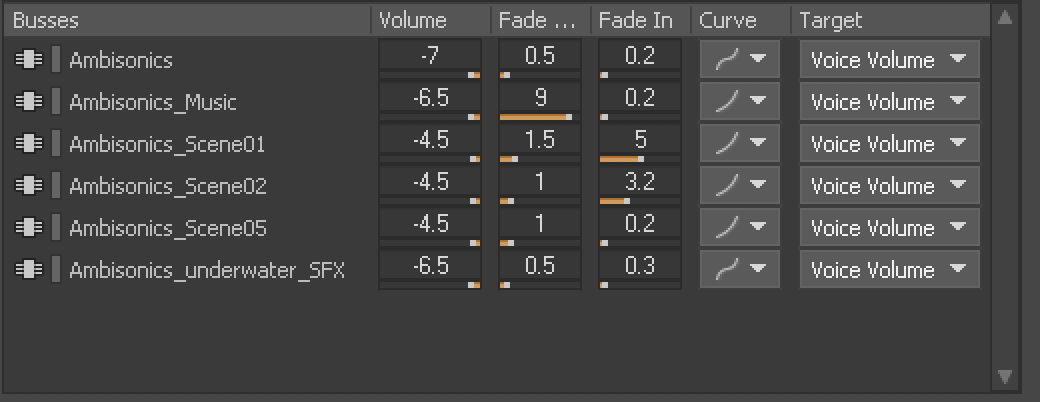

We were on a tight timeframe, and I couldn't ask too much of the developers, who were busy making everything work flawlessly on their end. The music and sound effects were different for each scene, with no repetition, so in Wwise I routed each scene to its own Audio Bus to avoid sounds bleeding from one scene into another. I kept the voice on its own separate Audio Bus, however, since it was the only constant across all levels, and implemented some auto-ducking for it.
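The idea behind auto-ducking is that whenever the voice bus is playing, the other buses are pulled down by a fixed amount and then fade back up once the voice stops. Wwise sets this up in the authoring tool rather than in code, so the following is only a minimal sketch of the concept, assuming a per-block evaluation with linear ramps in dB; the function name, the -9 dB depth and the block counts are hypothetical.

```python
def duck_gains(voice_active, duck_db=-9.0, attack_blocks=3, release_blocks=10):
    """Hypothetical ducking envelope, evaluated once per audio block.

    voice_active: sequence of booleans, True while the voice bus is playing.
    Returns a per-block gain in dB to apply to the other (music/SFX) buses.
    The gain ramps linearly toward duck_db over attack_blocks while the
    voice plays, and back toward 0 dB over release_blocks after it stops.
    """
    gains = []
    current = 0.0
    attack_step = duck_db / attack_blocks      # negative step per block
    release_step = -duck_db / release_blocks   # positive step per block
    for active in voice_active:
        if active:
            # Move down toward the ducked level, never below it.
            current = max(duck_db, current + attack_step)
        else:
            # Move back up toward unity, never above 0 dB.
            current = min(0.0, current + release_step)
        gains.append(current)
    return gains


# Voice plays for 5 blocks, then stays silent for 20.
envelope = duck_gains([True] * 5 + [False] * 20)
print([round(g, 1) for g in envelope])
```

A fast attack and slower release is a common choice so the music gets out of the way quickly when a line starts but does not jump back abruptly between sentences.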

For the music, I needed to create soundscapes for each scene and also attach sounds to objects in Unity. I mixed some instruments and stems in first-order AmbiX, which also helped a lot when prepping the room and mixing. While the base foundation was in AmbiX, specific instruments and sounds that needed precise positioning were delivered in mono and attached to the objects in each scene.
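AmbiX is the Ambisonics convention that uses ACN channel ordering (W, Y, Z, X) and SN3D normalization. For readers unfamiliar with first-order encoding, here is a minimal sketch of how a mono sample at a given direction maps onto the four AmbiX channels. It is the textbook first-order panning law, not the exact encoder used on this project, and the function name is hypothetical.

```python
import math


def encode_foa_ambix(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into first-order AmbiX (ACN: W, Y, Z, X; SN3D).

    Azimuth is measured counter-clockwise from straight ahead
    (0 deg = front, 90 deg = left); elevation is positive upward.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                  # ACN 0: omnidirectional, SN3D gain 1
    y = sample * math.sin(az) * math.cos(el)    # ACN 1: left/right
    z = sample * math.sin(el)                   # ACN 2: up/down
    x = sample * math.cos(az) * math.cos(el)    # ACN 3: front/back
    return (w, y, z, x)


# A source straight ahead puts its directional energy in X;
# moving it hard left shifts that energy into Y.
print(encode_foa_ambix(1.0, 0, 0))
print(encode_foa_ambix(1.0, 90, 0))
```

Because first order only captures this coarse directional information, sounds that need pinpoint placement are better kept as mono sources on objects, which matches the split between the AmbiX foundation and the mono elements described above.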

Another important task was syncing the audio precisely with the animations, which I did inside Unity's Timeline. I separated each instrument into stems and placed them in Unity exactly when and where things happened during the presentations.

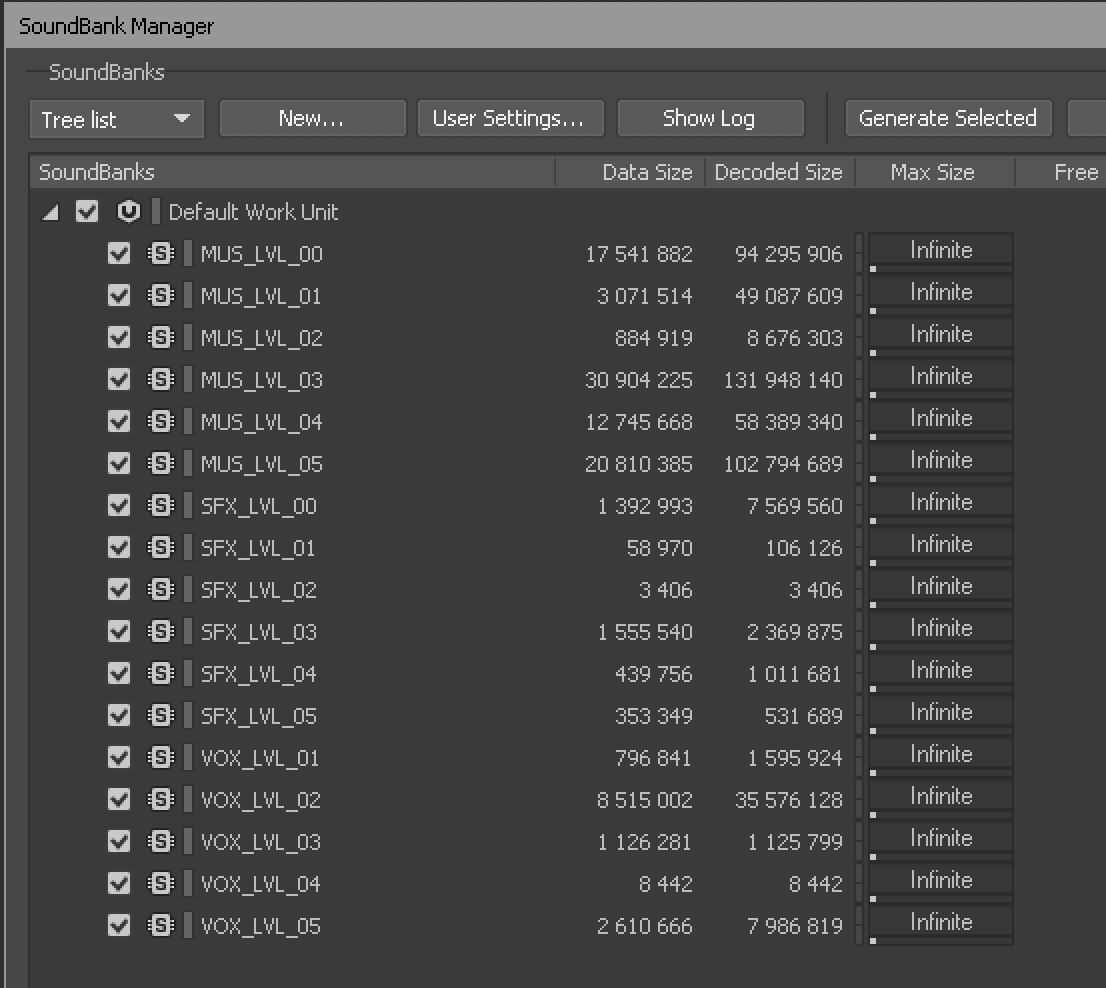

In the end, the SoundBanks were split into Music, SFX and Voice, with each of these further divided by level. This was the easiest way to keep track of everything and to keep the Unity developers from getting lost in the naming while I implemented everything. Although the developers didn't do anything sound-related (apart from some specific voice-overs we discussed, triggered by scores in Level 4), they were able to read and understand which sounds were where.

The whole experience was implemented only a few days before its opening. Even though the room was ready, I worked from my studio the entire time and had only one day to mix on-site before it opened. The Resonance Audio Renderer plug-in was my tool of choice for that: during the creative process it gave me an overall idea of how things would sound in the room over headphones, so only minor changes were needed during implementation.

Put on your headphones and check out these three scenes from the experience. These YouTube links only carry first-order Ambisonics, but if you have the chance to come by Factory Berlin to hear and experience this project for yourself, you'll be my guest. :)

Scene 1

Scene 2

Scene 5

- Client: Factory Berlin (Paul Bankewitz)
- Architect: Anna Caspar
- Construction: Ralf Norkeit
- Acoustics: Rummels Acoustics
- 3D visuals and concepts: NEEEU
- Speaker conception: WeSound
- Music composers: Oliver Laib, Billy Mello, Paulinho Corcione
- Sound effects and sound design: Billy Mello (Lucha Libre Audio)
- Voice-over: Emily Behr
- Project manager: Ima Johnen (Riverside Studios)
