After a few years of studying electroacoustic music at the University of Montreal, I couldn’t help but wonder what I would do with my degree. How would these teachings apply outside of academia? How could Pierre Schaeffer’s Étude aux chemins de fer have anything to do with foley? How could Åke Parmerud’s Grains of Voices be related in any way to mixing and editing in the “real world”? Or how could Karlheinz Stockhausen’s Kontakte have anything to teach me about sound design? While very little seemed to make sense, I focused purely on getting into game audio. In retrospect, it was arrogant of me to think that electroacoustic music studies were useless. I should have opened my mind to how electroacoustic music relates to game audio, especially when I had access to a faculty of accomplished professors in the field who were ready to share their wisdom on the subject.

Fast forward: while I was working at a Montreal studio called La Hacienda Creative, a contract came in from Capcom for a horror game, which ended up being Resident Evil 7: Biohazard. The mandate was to create a virtual instrument that would be used to compose the score. Our task was to make samples for their in-house music system, called R.E.M.M. Because our involvement was not directly linked to game content, we did not get much information with regard to what was actually developed on their side. So, needless to say, attending this year’s GDC talk The Sound of Horror - Resident Evil 7 Biohazard was very special for me, given my history at La Hacienda and, of course, because they covered how musique concrète was used in the game!

On location, recording bees for the R.E.M.M. library


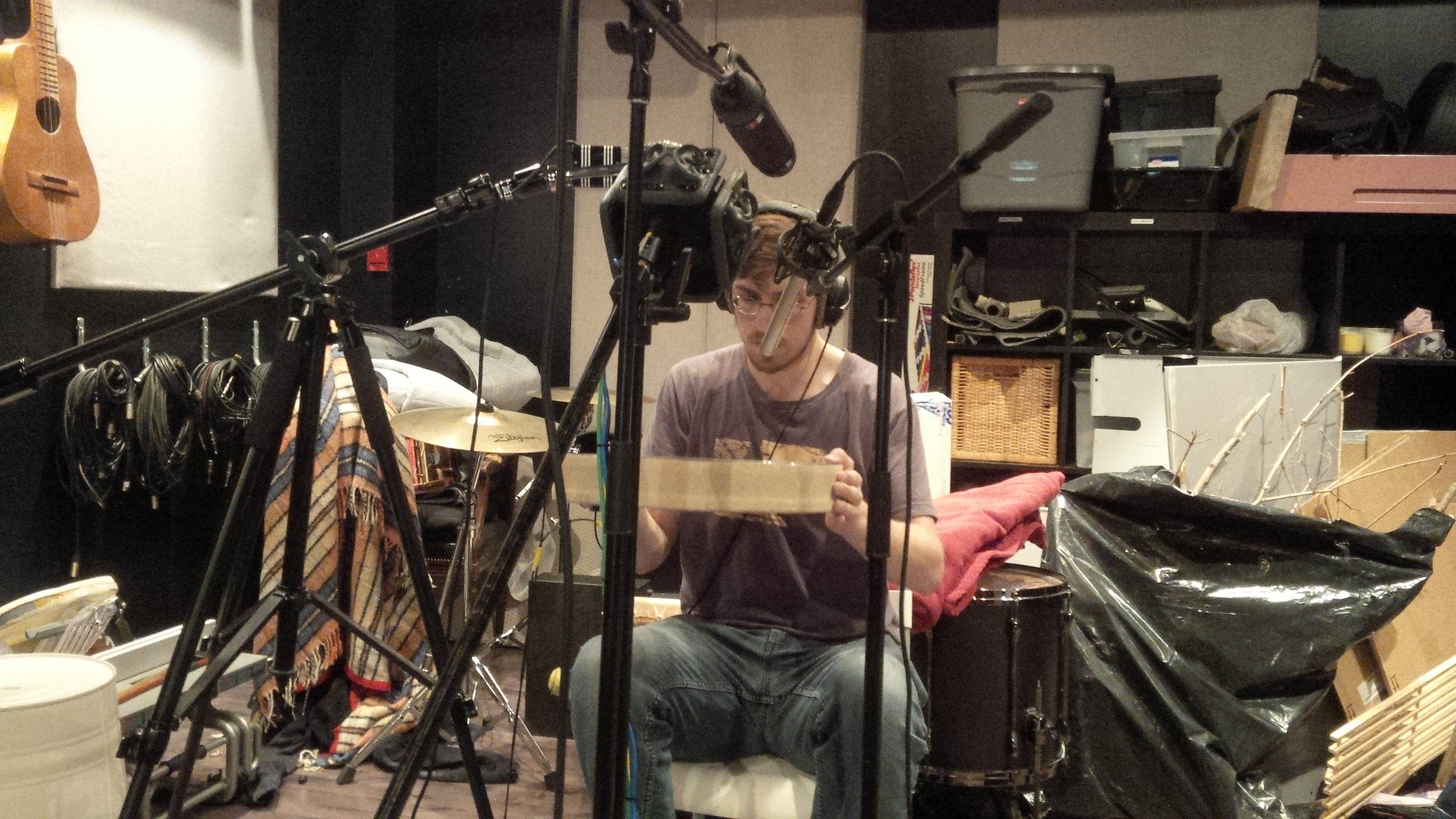

Pierre-Luc Sénécal, a sound designer on the project at La Hacienda Creative studios


For a quick refresher, the theories and aesthetic practices behind musique concrète were first established in France, in the 1940s, by Pierre Schaeffer. The concept came from audio recording, literally. This form of artistic expression allowed sound to be experienced differently, because it was broadcast on radio with no visuals. Much like non-diegetic music, the listener is detached from any visual representation of what is creating the sound. Listening to sonic material without being “influenced by what is behind it” remains the essence of acousmatic listening; it is a “veil” of sorts that leaves room for interpretation. For example, when you see a door being slammed in real life, you also hear the sound as it is. But when you hear that same sound through a speaker, do you hear a door being slammed, or do you hear a creaking texture followed by an impact and a resonating decay? We listen differently when sound is heard through speakers, especially when we actively concentrate on what the sound is and not on where it is coming from. Of course, with recorded sound, the latter would always be the speakers anyway!

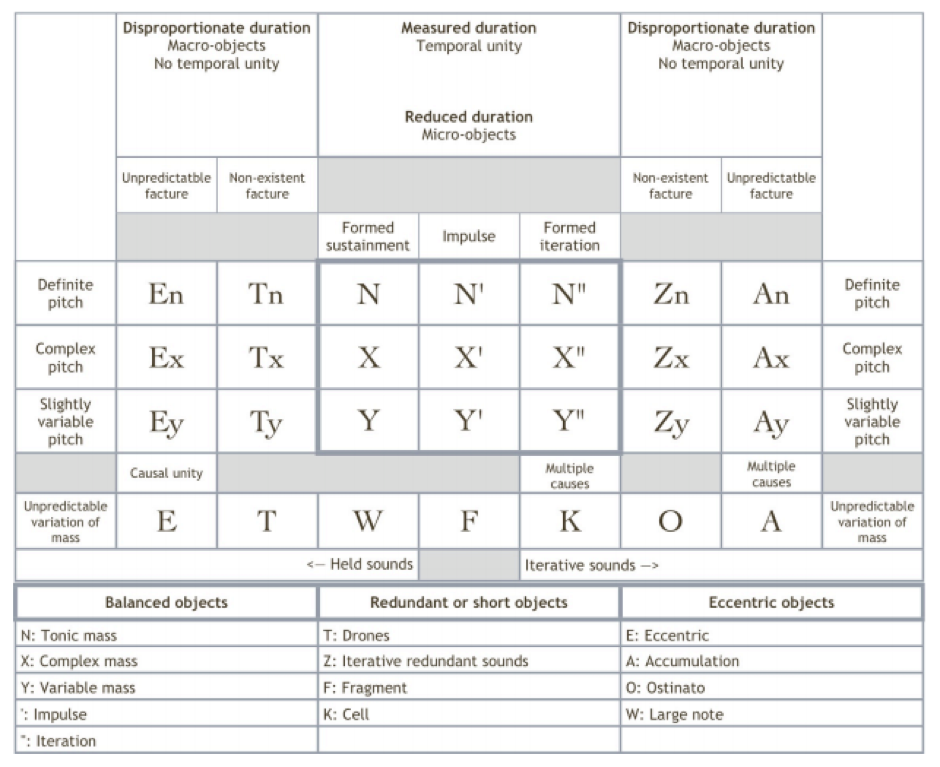

Later on, Pierre Schaeffer elaborated on a method to categorize sounds from musique concrète, which would be used for ear training and as a way to “score” a performance. The table was known as TARTYP (“TAbleau Récapitulatif de la TYPologie”). It was later revised by Robert Normandeau.

Normandeau’s revised proposal; the original Schaeffer TARTYP


In essence, Schaeffer’s TARTYP table proposes a vocabulary for sound design. It becomes much more effective to say “I’d like this spell-casting sound to have an X’ element followed by W” than “Could you make it more orange, please?”

In light of acousmatic listening, the obvious link with games lies in diegetic and non-diegetic sound. To me, this ties in directly to RE7’s soundtrack and soundscape. Spend any amount of time listening to musique concrète and you will notice something: a lot of the sounds, well… sound like sound effects or a montage of them. And in some cases, the lines are genuinely blurred.

One example comes from Bernard Parmegiani’s landmark piece De Natura Sonorum. If you listen to part IV: Étude élastique (from 13:27) and then part III: Géologie sonore (at about 11:00), you’ll hear moments in the piece where SFX and music are closer than one might think.

How does one go about using, in a broad sense, a musique concrète aesthetic within a game, while keeping a clear separation between the music and the sound effects? Capcom’s sound team did a great job of keeping the right balance between diegetic sounds and the non-diegetic music created by the game’s instrument palette, which also includes concrete sounds. They also excel at focusing the player’s attention with carefully chosen sound cues: sounds whose specific purpose is to direct the player’s attention so that the story keeps progressing.

Here is a clear example of a musique concrète approach being used in the game. Notice the moment when the character opens the door: door hinge sounds mix with a creaking, reverberated texture in the music. The piece was composed for the game, yet it also works as a standalone piece on the game soundtrack. You can find it here. This piece is titled Haunted House and was composed by Akiyuki Morimoto.

I can’t confirm that the decision to use musique concrète in this way was intentional. I can’t even say for sure that it crossed the minds of the folks at Capcom to look at musique concrète beyond a fixed aesthetic or palette while working on the score. However, it’s interesting to think that musique concrète may have strongly influenced the overall creative direction for this soundtrack.

For me, a great talk is one that sends my brain off on a tangent, and boy, with this one my brain lost itself when it realized, "I’ve never heard of musique concrète being used in this way!" It makes so much sense to look into applying the principles I’ve covered in this article to an interactive score.

From left to right: Dominic Hamelin-Johnston (Beekeeper), Maximilien Simard Poirier (Lead Sound Designer), Adamantio Klonaris (Audio Editor), Jera Cravo (Engineer and Studio Manager), Pierre Friquet (VR Filmmaker)


I would also like to take the time to acknowledge the work behind all the foley and SFX samples, and to thank Pierre-Luc Sénécal and Adamantia Klonaris for their valuable work on the library; without them, I could not have completed the task. I would also like to thank La Hacienda Creative for the opportunity to work on this project.

Comments

Lenny Ovo

April 04, 2017 at 03:59 pm

Great to see mention of acousmatics in the context of sound design for games etc. Now if only there were more jobs for all of us "composers" with degrees in this area ; )