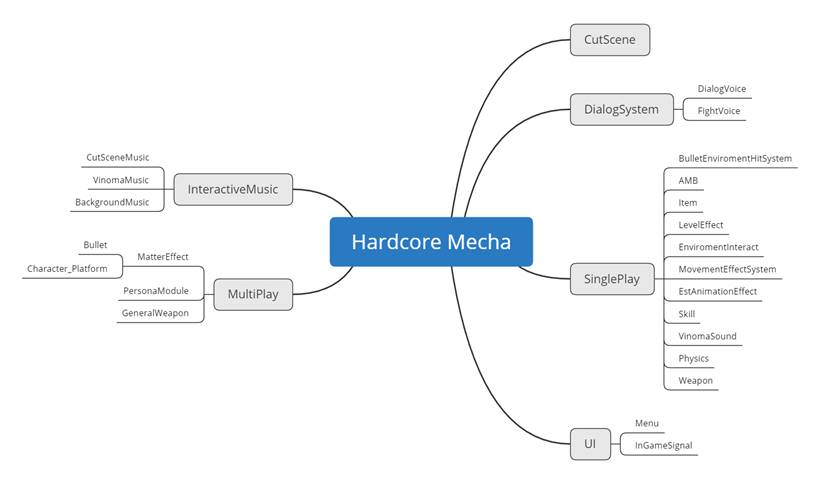

Hello everyone! I’m Jian’an Wang, a composer and audio designer at RocketPunch Games. On Hardcore Mecha, I was responsible for composing the music, creating the sound effects, and implementing the audio in Wwise. Today, I’d like to share how we designed the audio system for Hardcore Mecha.

About the Game

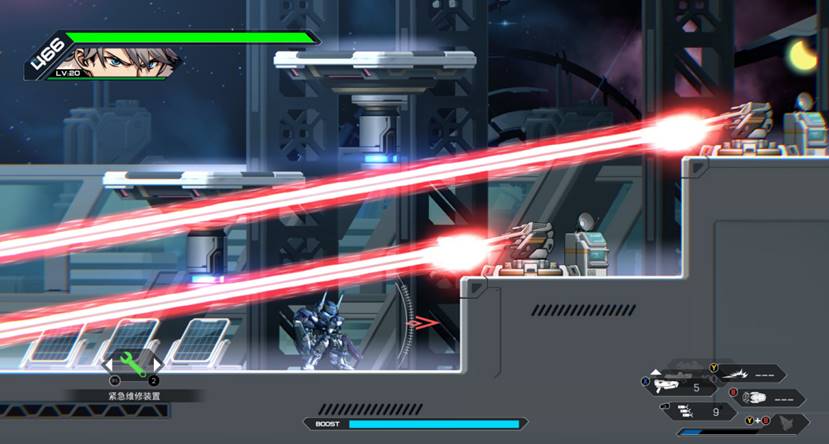

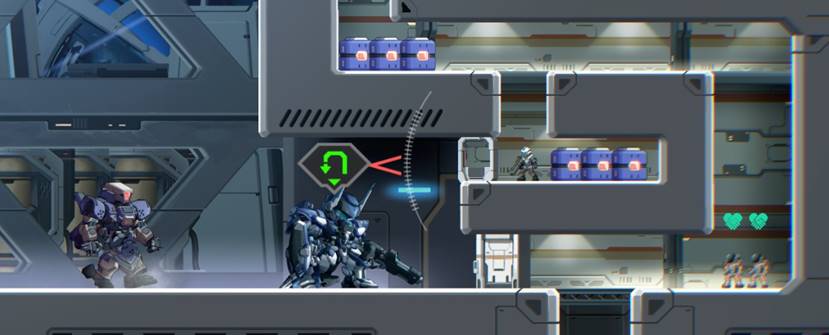

Hardcore Mecha is a 2D side-scrolling action game featuring fast-paced Mecha battles and stunning animations. In the game, you can choose your favorite Mecha and experience the competitive 'Battle of Steel'. Hardcore Mecha has two modes: Singleplayer Campaign and Multiplayer Battle. The Singleplayer Campaign mode includes 8 chapters and 18 levels, in which players encounter more than 50 types of enemy Mechas, each with a unique design, across in-game environments such as Mineshaft, Underwater, Urban, and Space. The Multiplayer Battle mode offers rich gameplay and seamless cut-scenes for an immersive experience. Apart from online PvP battles, players can also play against one another in local split-screen! The game is available on PS4 and Steam, and it has been highly rated by Famitsu (33/40) and IGN Japan (8.8/10).

Behind the Project

2D side-scrolling action games are usually short, quick, and simple. Hardcore Mecha is different: it has rich, in-depth content and storylines. The Singleplayer Campaign mode offers 8 hours of gameplay without repetition. In terms of development scale it isn't comparable to a AAA console game, but it's not a small project either. Dealing with all the development issues of a full game was quite challenging for a small indie team, but we pulled through successfully.

To ensure smooth development and on-time delivery, we used Unity 2017.4.27 and Wwise 2017.2.9 (everything in this post refers to these versions).

The Audio Design

Adapting Wwise 3D Positioning to Virtual Real-time Attenuation Design

When we introduced the Wwise pipeline in Hardcore Mecha, we ran into our first challenge: how should the sounds be positioned?

I researched other games and ran experiments to better understand what our game needed. I found that, in many games, as on-screen sound sources moved from left to right, their sounds were panned as if they were at the edges of the screen or even off-screen! This is not a natural way of positioning sound: it distracts the player and weakens the overall sense of immersion. Conversely, if off-screen sound sources are not panned precisely, the player's judgment of where sounds come from will be badly misled.

Mecha games differ from other games. Most games mainly contain Foley sounds and normal-sized sound sources, whereas most Mecha sound effects are attached to very large objects. If we set the stereo width based on source size the way other games do, these sounds would be rendered as simple point sources, and the Mecha battles would feel less exciting and immersive. I wanted the sound effects to convey stereo width without impairing the player's judgment of sound positions.

In Wwise 2017, there are two panning methods:

- 2D positioning, applying an RTPC with a 2D panner to each sound effect, and setting the RTPC to control the positioning during playback.

- 3D positioning, enabling Game-Defined, and defining the relationship between the listener and the emitter to calculate the distance for attenuation.

According to my research, the first method is simple and intuitive, but there is no way to treat stereo sound sources as points. To calculate the panning, I would need to pass an RTPC value for each sound effect and update it every frame; with a large number of sounds, this would inevitably affect game performance.

With the second method, we can simply use Unity's Transform component together with Wwise to handle the positioning calculations. However, it is harder to manage the listener position and the 2D screen boundaries.

After careful consideration, I decided to use the 3D positioning method anyway.

As the first step, I made a requirement list:

- I wanted to use the Wwise 3D positioning and attenuation features to apply seamless panning to movement sounds. In this way, we would get smooth panning results and low performance costs.

- I wanted to reflect the intense Battle of Steel gameplay naturally, therefore we needed to treat some sound sources as collection blocks for clarity.

- I wanted to enable the Player to identify off-screen sounds.

We used a customized ProCamera2D plug-in to manage the camera. This let us move the camera freely and optimize performance, but it also meant the camera could not be positioned on the Z-axis. As a result, all content is on the same plane: when the in-game view changes, the camera does not move along the Z-axis, only the camera size changes. ProCamera2D keeps an object snapped to the center point of the screen, so if the listener were attached to the camera, every sound would be panned hard to the far left or far right.

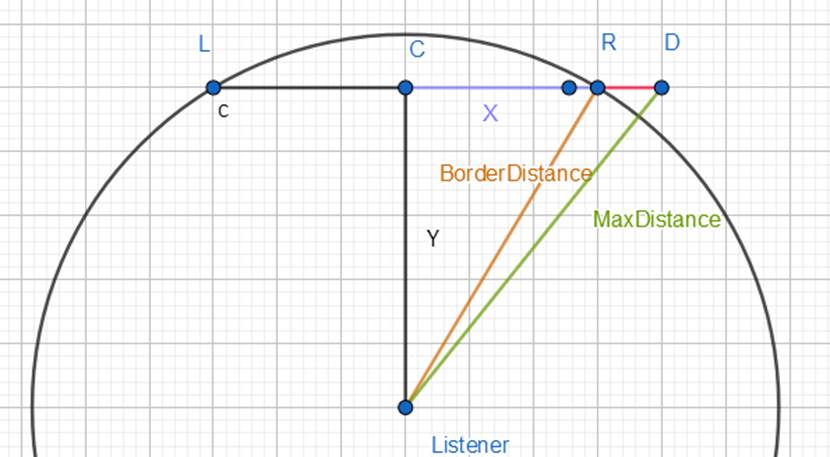

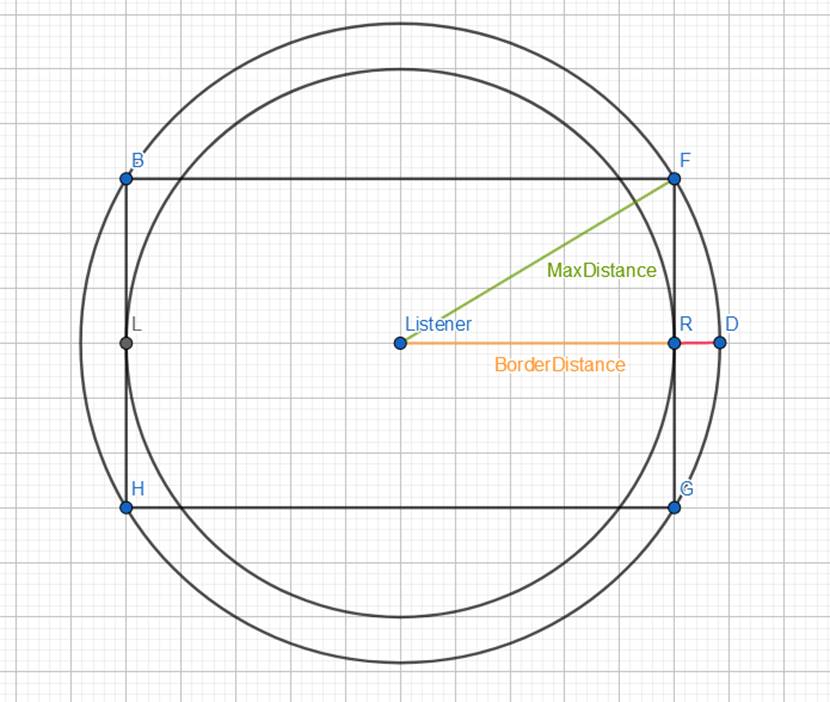

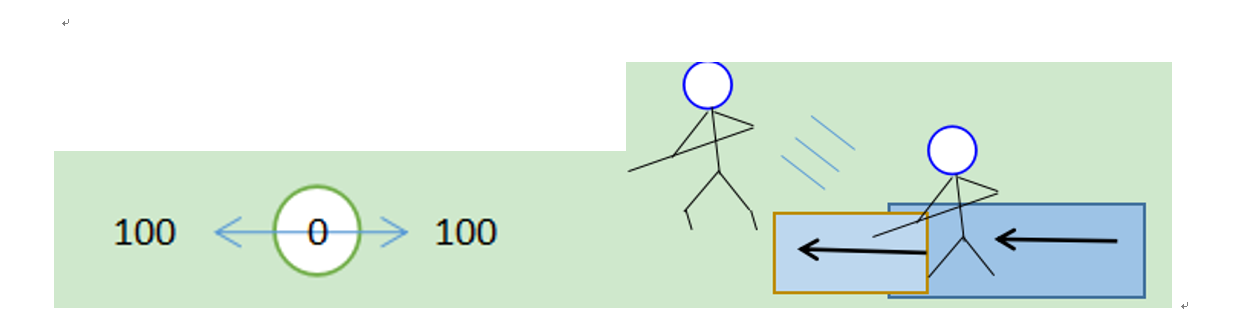

After further brainstorming, I created a dedicated game object to act as the listener and positioned it on the Z-axis, while it follows the X/Y coordinates of the screen's center point. As shown in the diagrams, the line from the listener to a sound source becomes the hypotenuse of a right triangle, so the attenuation distance no longer grows linearly with a source's horizontal offset. By tuning the Max Distance in the 3D attenuation settings, sound sources that go out of sight are reduced in volume. This solved the first problem.

However, this made designing and debugging the attenuation curves more complicated, so I came up with the following solution. Imagine viewing the scene from above (a top view), where L is the far-left point of the screen, R is the far-right point, C is the center point, and X is the distance from C to R. As the story develops and the player advances, the visible width of the screen changes, and so does X. I defined the distance from the listener to the far-left or far-right point as the BorderDistance, which equals √(X² + Y²), where Y is the distance from the listener to C. This is also the distance to the listener from any sound source located at the screen edge. Wwise cannot adjust the attenuation MaxDistance in real time through an RTPC, so we had to handle the overall attenuation scale via script. To do so, I targeted the BorderDistance value directly in the script and kept it constant. When the view zooms in, X gets smaller; to keep the attenuation correct in a side-scrolling game, all I had to do was derive Y through a reverse calculation, Y = √(BorderDistance² − X²), and position the actual listener at that distance.

In this way, no matter how the camera moved, the listener was always correctly positioned, because its position was calculated from the current view size and the BorderDistance. As long as the 3D attenuation MaxDistance equals the BorderDistance, we get smooth left-right panning. On this basis, I could then shape the attenuation curves for volume and frequency response.
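The reverse calculation above fits in a few lines. This is a minimal Python illustration, not the project's actual Unity script: `listener_depth`, `listener_position`, and `half_width_from_ortho` are hypothetical names, placing the listener at negative Z is an assumption, and deriving X from an orthographic camera (Unity's `orthographicSize` is half the view height, so half the width is that times the aspect ratio) is an assumption about how the visible width is measured.

```python
import math


def half_width_from_ortho(ortho_size: float, aspect: float) -> float:
    """Assumed: X (distance from C to R) for an orthographic camera.

    Unity's orthographicSize is half the view height, so half the width
    is orthographicSize * aspect (aspect = width / height).
    """
    return ortho_size * aspect


def listener_depth(half_width: float, border_distance: float) -> float:
    """Y such that a source at the screen edge is exactly BorderDistance away.

    BorderDistance = sqrt(X^2 + Y^2)  =>  Y = sqrt(BorderDistance^2 - X^2)
    """
    if not 0.0 < half_width < border_distance:
        raise ValueError("half_width must lie strictly between 0 and border_distance")
    return math.sqrt(border_distance ** 2 - half_width ** 2)


def listener_position(center_x: float, center_y: float,
                      half_width: float, border_distance: float):
    """Listener follows C in X/Y and sits Y units behind the play plane.

    Using negative Z (the usual side for a Unity camera) is an assumption.
    """
    return (center_x, center_y, -listener_depth(half_width, border_distance))


if __name__ == "__main__":
    BORDER = 50.0  # hypothetical BorderDistance in world units
    for x in (30.0, 14.0):  # zoomed out, then zoomed in
        y = listener_depth(x, BORDER)
        print(f"X={x:5.1f}  Y={y:5.1f}  edge distance={math.hypot(x, y):.1f}")
```

With these made-up numbers, zooming in from X = 30 to X = 14 pushes the listener back from Y = 40 to Y = 48, while a source at the screen edge stays exactly 50 units away, which is what keeps MaxDistance = BorderDistance valid at every zoom level.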

However, there were two other challenges...

First, projected onto the screen, the attenuation range forms an arc, so sound effects at the four corners of the screen would reach the Wwise attenuation limit.

Second, once sound sources move beyond the far-left or far-right point, they also reach the Wwise attenuation limit. Even though important events rarely happen at the corners, sounds played during battles inevitably pass through these areas!

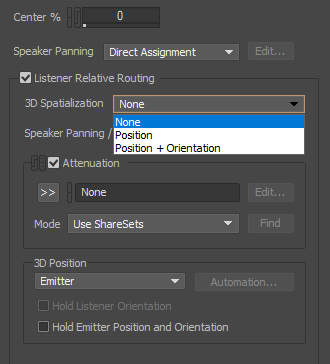

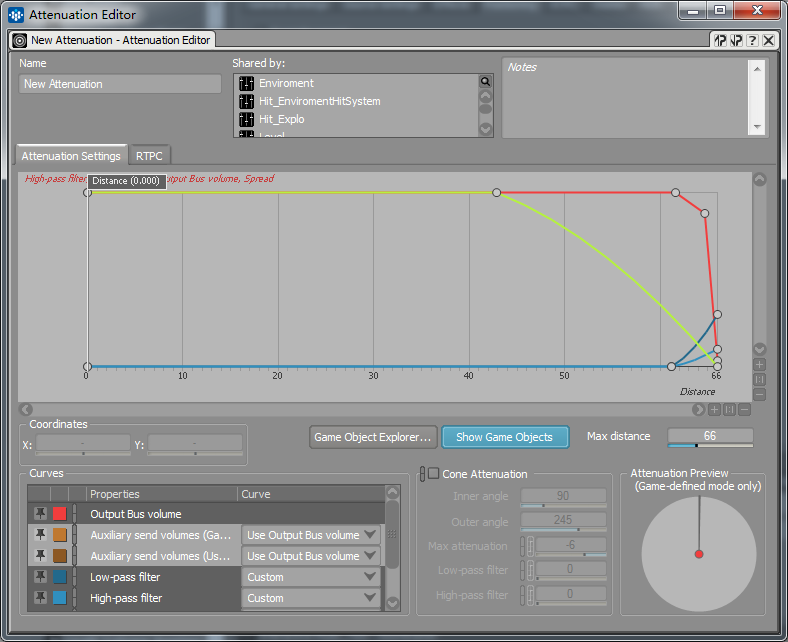

Therefore, I wanted sound sources at the four corners and just off-screen to keep attenuating over a controlled range. So, what did I do? I set the MaxDistance in Wwise slightly larger than the BorderDistance, then defined attenuation curves (Volume, LPF, and HPF) to shape the sounds that lie beyond the BorderDistance. I also added Spread curves: when on-screen sound sources have a large stereo width, this adds more layers to the sounds and makes them even more impressive.
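To see why the corners clip, we can compute their distance to the listener. The Python sketch below uses hypothetical names and numbers: it assumes a 16:9 view with X = 30 and Y = 40 (so BorderDistance = 50). A corner then lies about 52.8 units from the listener, so a MaxDistance equal to BorderDistance cuts it off, while one a little larger still covers it. `beyond_border` is an illustrative 0-to-1 value for the region between BorderDistance and MaxDistance, where the extra Volume/LPF/HPF curve segments would act.

```python
import math


def corner_distance(half_width: float, half_height: float, depth: float) -> float:
    """Distance from the listener to a point offset (half_width, half_height)
    from the screen center C, with the listener `depth` units behind C."""
    return math.sqrt(half_width ** 2 + half_height ** 2 + depth ** 2)


def min_max_distance(half_width: float, half_height: float, depth: float,
                     offscreen_margin: float = 0.0) -> float:
    """Smallest MaxDistance that still reaches a source at a screen corner,
    optionally pushed `offscreen_margin` units further out horizontally."""
    return corner_distance(half_width + offscreen_margin, half_height, depth)


def beyond_border(distance: float, border_distance: float, max_distance: float) -> float:
    """0 inside BorderDistance, rising linearly to 1 at MaxDistance (illustrative)."""
    if max_distance <= border_distance:
        raise ValueError("max_distance must exceed border_distance")
    t = (distance - border_distance) / (max_distance - border_distance)
    return min(max(t, 0.0), 1.0)


if __name__ == "__main__":
    x, y = 30.0, 40.0        # hypothetical half-width and listener depth
    h = x * 9.0 / 16.0       # half-height for an assumed 16:9 view
    print(f"edge:   {corner_distance(x, 0.0, y):.2f}")
    print(f"corner: {corner_distance(x, h, y):.2f}")
    print(f"MaxDistance for 10 units off-screen: {min_max_distance(x, h, y, 10.0):.2f}")
```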

In later versions, Wwise replaced the previous 2D/3D settings with the Listener Relative Routing option, which lets users choose position and/or orientation freely. This would have made a big difference to the project. However, for the reasons mentioned previously, I didn’t have the chance to use those versions.

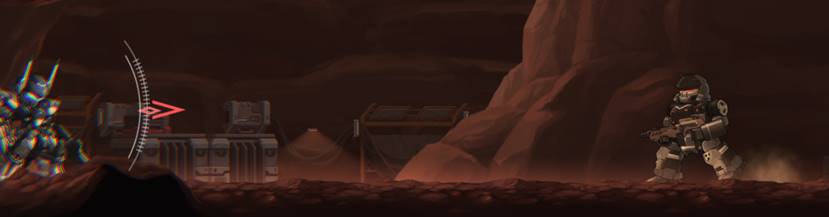

For general Mecha movement sounds, as well as various shooting, hitting, explosion, and other sounds, I specified several different Attenuation ShareSets. Take long-range beam weapons (see the image above): I used a MaxDistance 5 times that of a normal Mecha sound effect (i.e. 5 × BorderDistance), so that players would be aware of the danger even when the enemies were out of sight. For some elements, such as fire, I reduced the MaxDistance so they would not distract the player: their volume drops off rapidly as the sound sources move away from the center of the screen. I also applied different attenuation templates to different hit effects; beams normally have a longer attenuation distance than bullets. In general, the MaxDistance is larger than Y, the distance from the listener to the center point C, and it defines how far away a sound can be heard.
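Because each ShareSet is expressed relative to BorderDistance, it is easy to estimate how far from the screen center each category stays audible on the play plane: a source at horizontal offset d from C is √(d² + Y²) from the listener, so it remains in range up to d = √(MaxDistance² − Y²). The sketch below assumes the same hypothetical BorderDistance = 50 and Y = 40; only the 5× beam multiplier and the 1× Mecha baseline come from the text, and the fire value is made up for illustration.

```python
import math

# MaxDistance of each Attenuation ShareSet as a multiple of BorderDistance.
# "mecha" (1x) and "beam" (5x) follow the text; "fire" (0.9x) is hypothetical.
SHARESET_MULTIPLIER = {"mecha": 1.0, "beam": 5.0, "fire": 0.9}


def horizontal_reach(multiplier: float, border_distance: float, depth: float) -> float:
    """Largest horizontal offset from C at which a source is still inside MaxDistance."""
    max_distance = multiplier * border_distance
    if max_distance <= depth:
        return 0.0  # even a source at the screen center would be out of range
    return math.sqrt(max_distance ** 2 - depth ** 2)


if __name__ == "__main__":
    border, depth = 50.0, 40.0  # hypothetical values; screen half-width is then 30
    for name, k in SHARESET_MULTIPLIER.items():
        print(f"{name:6s} audible up to {horizontal_reach(k, border, depth):6.1f} units from C")
```

With these numbers, Mecha sounds reach exactly the screen edge (30 units), beams stay audible roughly 247 units from the center, and fire fades out about 21 units from the center, before it reaches the edge. Since Y changes with zoom, each ShareSet's off-screen reach shifts slightly as the view scales.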

Building the Matter Effect System

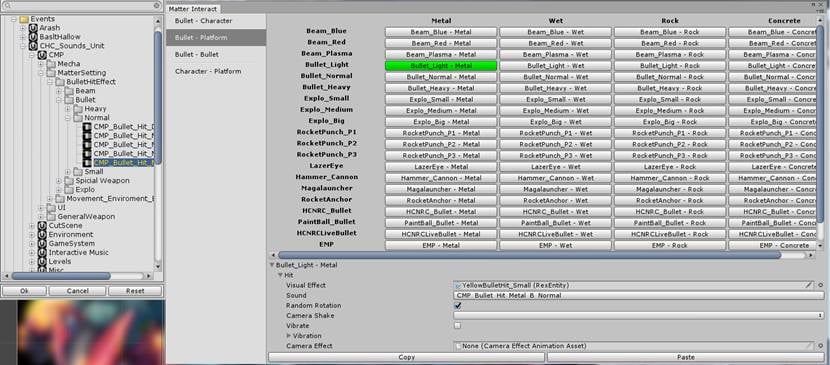

Hardcore Mecha has dozens of Mechas, weapons, and bullets. These involve a large number of materials, which produce many sounds and visual effects. With the Wwise Switch feature, we could easily manage these assets. To handle sound and visual effects in a single place, we built a Matter Effect System in Unity.

In the Matter Effect System, we divided materials into three types: Platform, Mecha, and Bullet. Based on the interactions between these types, I configured walking, running, landing, and hitting effects, and grouped them into four categories: Bullet/Character, Bullet/Bullet, Bullet/Platform, and Character/Platform. Then, I combined the sound effects and their Wwise type properties with visual effects, screen shake, gamepad vibration, and camera effects. To create large configurations quickly, I also improved some editor features, such as copy-paste and bulk editing. (I used a similar structure to organize the mixing in Wwise, which I'll cover later.)
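A minimal sketch of such a lookup, assuming an unordered pair of matter types plus a material name is enough to select one entry. The class and field names, the event name `Play_Bullet_Impact`, and the idea that the material string doubles as a Wwise Switch value are illustrative assumptions, not the project's actual Unity code. Using a `frozenset` key makes (Bullet, Platform) and (Platform, Bullet) resolve to the same category.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, FrozenSet, Optional, Tuple


class Matter(Enum):
    PLATFORM = "Platform"
    MECHA = "Mecha"
    BULLET = "Bullet"


# The four interaction categories. Keys are unordered pairs; a same-type pair
# such as (Bullet, Bullet) collapses to a one-element set.
CATEGORIES: Dict[FrozenSet[Matter], str] = {
    frozenset({Matter.BULLET, Matter.MECHA}): "Bullet/Character",
    frozenset({Matter.BULLET}): "Bullet/Bullet",
    frozenset({Matter.BULLET, Matter.PLATFORM}): "Bullet/Platform",
    frozenset({Matter.MECHA, Matter.PLATFORM}): "Character/Platform",
}


def category(a: Matter, b: Matter) -> Optional[str]:
    return CATEGORIES.get(frozenset({a, b}))


@dataclass(frozen=True)
class EffectEntry:
    wwise_event: str      # hypothetical Wwise Event name
    vfx: str              # hypothetical VFX prefab id
    screen_shake: float   # 0..1
    rumble: float         # gamepad vibration, 0..1


class MatterEffectTable:
    """One place to look up sound and visual feedback for an interaction."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[str, str], EffectEntry] = {}

    def register(self, a: Matter, b: Matter, material: str, entry: EffectEntry) -> None:
        cat = category(a, b)
        if cat is None:
            raise ValueError(f"no interaction category for {a.value}/{b.value}")
        # `material` would be passed to Wwise as a Switch value, e.g. "Sand".
        self._entries[(cat, material)] = entry

    def lookup(self, a: Matter, b: Matter, material: str) -> Optional[EffectEntry]:
        cat = category(a, b)
        return None if cat is None else self._entries.get((cat, material))


if __name__ == "__main__":
    table = MatterEffectTable()
    table.register(Matter.BULLET, Matter.PLATFORM, "Sand",
                   EffectEntry("Play_Bullet_Impact", "vfx_sand_puff", 0.1, 0.0))
    print(table.lookup(Matter.PLATFORM, Matter.BULLET, "Sand"))
```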

The interaction between a Mecha and the Platform is not limited to moving and jumping. To add more detail, I also created swirling dust sounds for when Mechas glide close to the ground, and categorized these sound effects as Tracking Moving Sounds. I attached an RTPC to the Blend Container holding the dust sounds: when a Mecha glides close to ground of a certain material (such as mud or sand), the sounds play with a sample loudness and thickness that match its gliding speed. I named this RTPC Speed; it is calculated according to a certain ratio and combined with the Matter Effect System to render the sound effects. The same parameter also drives characters that move by gliding instead of walking. For them, I created an engine sound for movement and used the X-offset of the player's joystick, or the dynamic movement value of the AI enemy, as the locomotion speed. I then mapped this value to the RTPC to control the intensity of the Mecha engine sound, and used the actual movement speed to control loudness via different tracks in the Blend Container.

During implementation and testing, I found that these values changed abruptly: players can move the joystick very quickly, and this shows up in the characters' movement during close combat. So I specified a slew rate for each RTPC involved, making their values change gradually over time. In this way, I simulated physical damping and got a more realistic result.
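The joystick-to-Speed mapping and the slew limiting can be sketched as follows. Wwise Game Parameters offer interpolation settings for exactly this kind of smoothing, so in practice it is usually configured in the authoring tool rather than coded; this Python version only illustrates the behavior. The linear 0 to 100 mapping, the separate up/down rates, and all names are assumptions.

```python
import math


def locomotion_speed(stick_x: float, scale: float = 100.0) -> float:
    """Map the joystick X offset (-1..1) to a 0..scale Speed RTPC value (assumed linear)."""
    return min(abs(stick_x), 1.0) * scale


class SlewLimitedRtpc:
    """Moves toward the target by at most rate * dt per update (units per second)."""

    def __init__(self, rate_up: float, rate_down: float, initial: float = 0.0) -> None:
        if rate_up <= 0 or rate_down <= 0:
            raise ValueError("rates must be positive")
        self.rate_up = rate_up
        self.rate_down = rate_down
        self.value = initial

    def update(self, target: float, dt: float) -> float:
        delta = target - self.value
        max_step = (self.rate_up if delta > 0 else self.rate_down) * dt
        if abs(delta) <= max_step:
            self.value = target
        else:
            self.value += math.copysign(max_step, delta)
        return self.value


if __name__ == "__main__":
    speed = SlewLimitedRtpc(rate_up=100.0, rate_down=40.0)
    # The stick is slammed fully right, then released; the RTPC lags behind.
    for stick in (1.0, 1.0, 0.0, 0.0):
        print(round(speed.update(locomotion_speed(stick), dt=0.5), 1))
```

This prints 50.0, 100.0, 80.0, and 60.0: the value ramps up quickly and decays more slowly, which reads as inertia rather than an instant jump in engine intensity.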

Creating the Immersive Details

The Single-player Campaign mode contains all kinds of scenes and levels, such as a mineshaft, a campsite, a secret factory, and a space platform. They are meant to create a strong sense of immersion through exciting battles and stunning animations. To make the experience even more immersive, we did our best to explore the audio elements in these levels, create real-time sound effects using Wwise, and add content variations.

Stealth, Underwater, and Space

In chapter 2, the player sneaks into an enemy camp on a rainy night. I wanted to introduce some elements common to stealth games. I created two pieces of music based on the player's action phase (exploring or exposed) and used a dynamic music system to switch between them. If the player is exposed to enemies, the music switches: sound effects are suppressed by a low-pass filter and an intense music segment plays. I also created a rain sound to add medium-high frequency detail, designed to match the visuals: for example, when the player hides in a bunker, all music and sound effects above 500 Hz are filtered out, enhancing the stealth experience.

Underwater and Space

In chapter 3, the player falls into a huge reservoir. Here, too, I added medium-high frequency details and filtered them out in a particular situation. I mapped the player's distance to the water surface to an RTPC and applied it to the audio bus: as the player dives deeper, more of the medium-high frequency detail is filtered out, and as the player swims up, those frequencies quickly recover. There is also a high-frequency splashing sound!
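The depth-to-filter mapping can be sketched as a clamped, normalized RTPC followed by a curve. In Wwise the curve would be drawn on the RTPC in the authoring tool, and the built-in low-pass property uses its own 0 to 100 scale rather than Hz, so the Hz values here describe the intended sound, not a value to send. The names, the 20 m maximum depth, and the 20 kHz to 500 Hz range are assumptions. Interpolating the cutoff logarithmically means each equal step in depth removes the same number of octaves, which tends to sound more even than a linear sweep in Hz.

```python
def depth_to_rtpc(depth: float, max_depth: float) -> float:
    """Distance below the surface -> RTPC 0 (at or above surface) .. 100 (max_depth or deeper)."""
    if max_depth <= 0.0:
        raise ValueError("max_depth must be positive")
    return 100.0 * min(max(depth / max_depth, 0.0), 1.0)


def rtpc_to_cutoff_hz(rtpc: float, open_hz: float = 20000.0, closed_hz: float = 500.0) -> float:
    """Log interpolation: equal RTPC steps remove equal numbers of octaves."""
    t = min(max(rtpc / 100.0, 0.0), 1.0)
    return open_hz * (closed_hz / open_hz) ** t


if __name__ == "__main__":
    for depth in (0.0, 5.0, 10.0, 20.0):  # hypothetical metres below the surface
        r = depth_to_rtpc(depth, max_depth=20.0)
        print(f"depth {depth:4.1f} -> RTPC {r:5.1f} -> ~{rtpc_to_cutoff_hz(r):7.0f} Hz")
```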

Lastly, in chapter 5, the player is on a space-station platform. We defined an RTPC range to track the player's movement within a trigger: inside this range, the player's X/Y coordinates are mapped to an RTPC value. Blending outdoor wind sounds, battlefield ambiences, and indoor ambiences in this way created a realistic environment.
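The position-to-blend idea can be sketched in Python. A Wwise Blend Container performs the crossfade natively once the RTPC is set, so this only shows the arithmetic: a coordinate inside the trigger bounds is normalized to 0 to 100, and neighbouring layers crossfade linearly. The layer names, their positions along the trigger, and the function names are hypothetical; the Y axis would be a second RTPC handled the same way.

```python
from typing import Dict


def normalize(value: float, lo: float, hi: float) -> float:
    """Map a coordinate inside the trigger bounds [lo, hi] to an RTPC value 0..100."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return 100.0 * min(max((value - lo) / (hi - lo), 0.0), 1.0)


def blend_weights(rtpc: float, points: Dict[str, float]) -> Dict[str, float]:
    """Linear crossfade between neighbouring layers, each peaking at its own point."""
    if len(set(points.values())) != len(points):
        raise ValueError("layer points must be distinct")
    names = sorted(points, key=points.get)
    weights = {name: 0.0 for name in names}
    if len(names) == 1:
        weights[names[0]] = 1.0
        return weights
    r = min(max(rtpc, points[names[0]]), points[names[-1]])
    for a, b in zip(names, names[1:]):
        pa, pb = points[a], points[b]
        if pa <= r <= pb:
            t = (r - pa) / (pb - pa)
            weights[a], weights[b] = 1.0 - t, t
            break
    return weights


# Hypothetical layout: indoor at one end of the trigger, open space at the other.
LAYERS = {"indoor": 0.0, "battlefield": 50.0, "outdoor_wind": 100.0}

if __name__ == "__main__":
    for x in (0.0, 5.0, 12.0, 20.0):  # player X inside a trigger spanning 0..20
        print(x, blend_weights(normalize(x, 0.0, 20.0), LAYERS))
```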

In the end, all these efforts helped enhance Hardcore Mecha's sound experience.

In Hardcore Mecha, there are real-time sounds and animation sounds. On the one hand, we wanted to add more details; on the other, we wanted to preserve some old-fashioned elements. We used various processing methods, including noise modulation, sample-based synthesis, and live recording.

The Audio Mixing

Building the Sound Structure

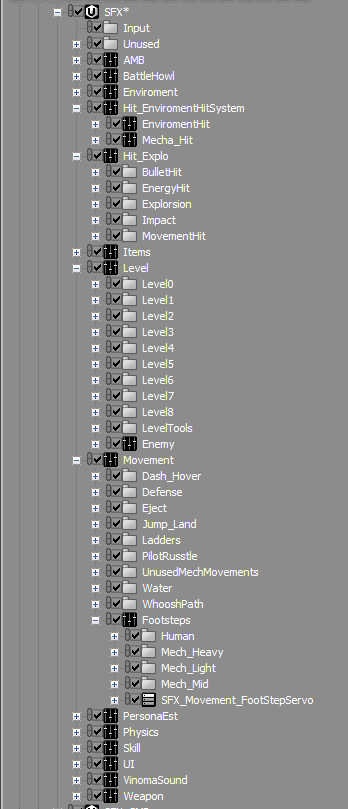

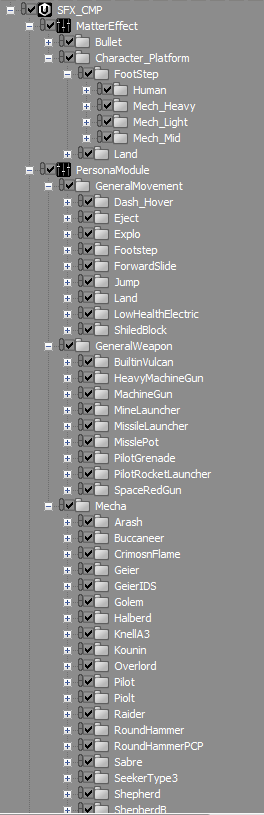

In the Single-player Campaign mode, the logic for player sounds differs from that for other sounds: player sounds focus on the character itself, while the rest covers many types of enemies and all kinds of commonly used RPG elements such as ambiences, music, and sound effects. Therefore, I managed samples based on how these elements are used.

In the Multiplayer Battle mode, players need to concentrate on the fast-paced battle and keep track of their teammates' status. Here, sounds should help players read their surroundings and make quick decisions, so they should be organized by character position.

With this in mind, I mixed the two gameplay modes separately based on auditory priority, combat status, and sample type, and created different Actor-Mixers to organize them.

To make implementation easier, I decided to build the sound structure in Unity. The Single-player Campaign mode has lots of weapons, objects, and other interactive elements, so I organized the assets around these elements and separated each Mecha's sounds by movement, animation sequences, platform interaction, weapons, bullet material, and target hits, mixing each of these categories differently. For the Multiplayer Battle mode, we categorized sounds by Mecha type, skills, and equipped weapons, and managed them based on attenuation mode and online/local configuration.

Dynamic Mixing and Focus Point

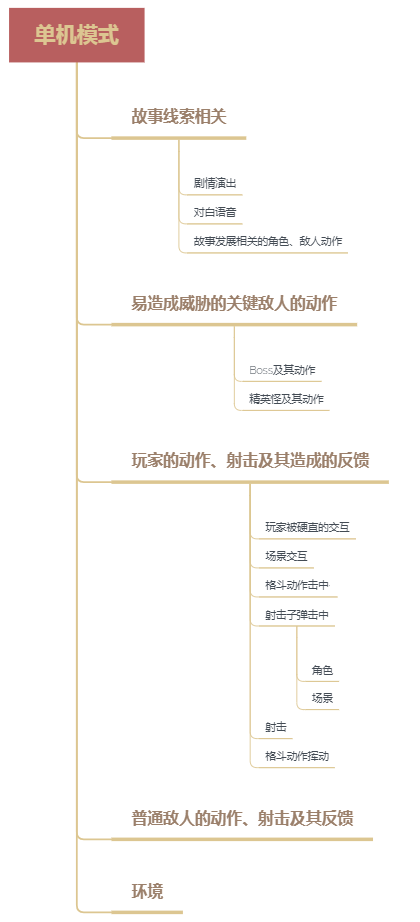

In the Single-player Campaign mode, players progress through the storyline. A sound designer should always pay attention to the player's point of interest and design the sound effects accordingly. Players will most likely focus first on narrative clues, then on key enemies (such as bosses and rare enemies), then on combat and shooting actions, and finally on ambiences.

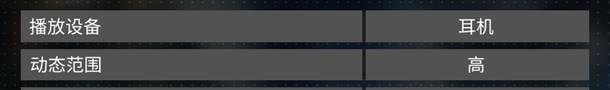

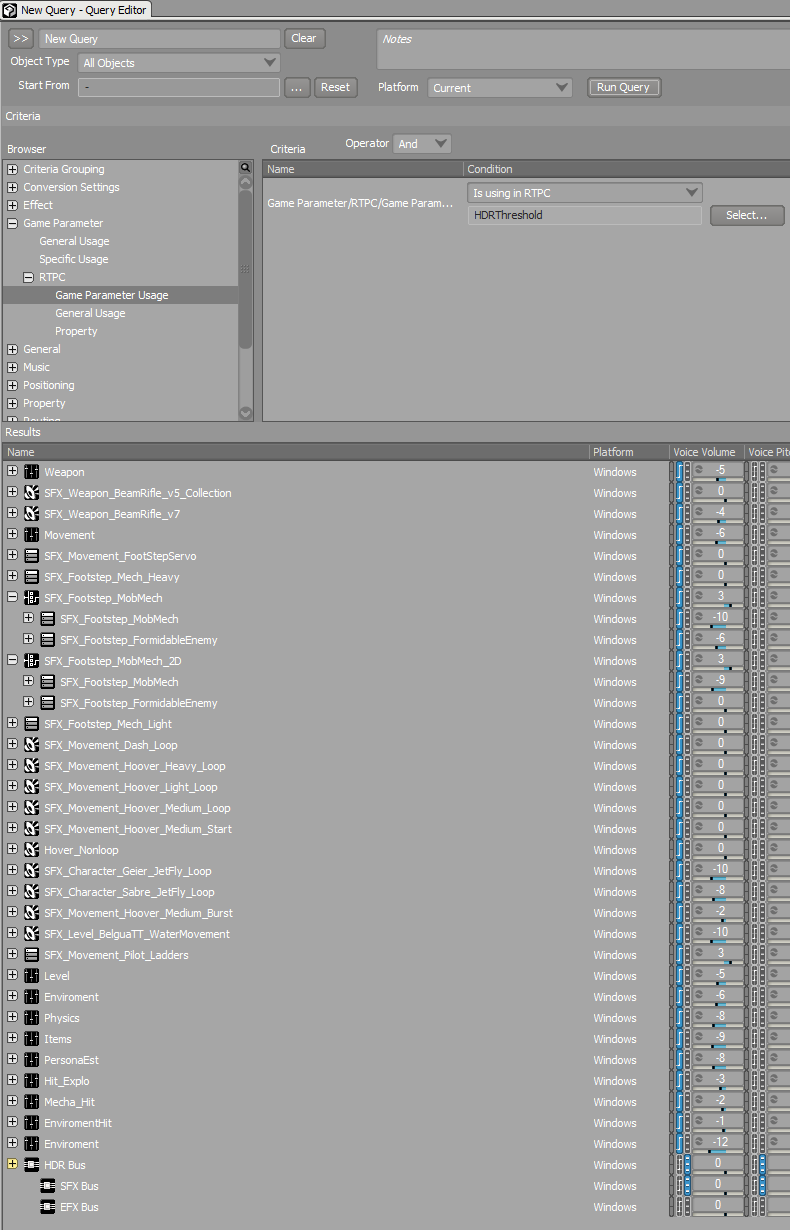

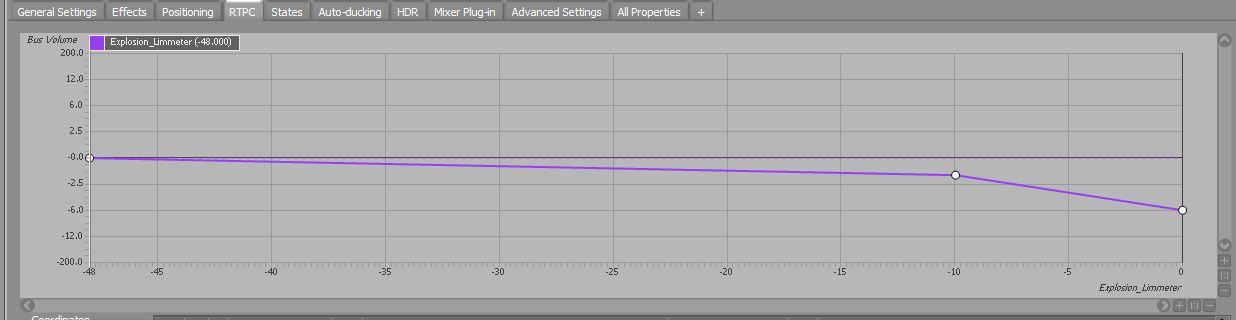

With these in-game priorities in mind, we could easily set the mix volumes and define the audio elements. Besides adjusting the volume of audio buses and Actor-Mixers directly in the project, I created some RTPCs to coordinate different kinds of sound effects. For example, I applied the Wwise Meter plug-in to some critical explosion and mass-movement sounds, side-chained the music to them, and used the HDR system to prioritize their volume. This is similar to ducking, but more flexible: when a higher-priority sound plays, other sounds are ducked. However, this didn’t make the sounds as impressive as I expected, so I created additional RTPCs and States to coordinate elements appearing on screen in real time and to highlight important content.

(Image, top to bottom, Single-player Mode priorities: narrative clues (diegetic animations, dialog voices, narrative-related character and enemy actions); high-threat key enemy actions (boss actions, rare enemy actions); player actions, shooting, and enemy reactions (interactions when the player is stunned, platform interactions, combat action hit, shooting bullet hit, character, platform, shooting, combat action swing); regular enemy actions, shooting, and player reactions; environment.)

For example, this is the first Mecha enemy in the level. He is no different from hundreds of other minions, but to emphasize that he is the very first enemy, I created a State that increases the volume of his movement and shooting sounds (I did the same for narrative-related characters in the game).

Chapter 6 contains two STG (shoot 'em up) levels, in which bullets and enemies are presented differently from previous levels. Earlier levels have only a few objects, weapons, and enemies, whereas here enemies appear in large numbers. So we combined States and Switch Containers to respond to the Matter Effect System. At the same time, we reduced the volume of some samples, applied a compressor, and limited the number of voices.

In some levels, players can even leave their Mechas and infiltrate behind enemy lines, so unequipped humans may end up fighting Mecha enemies. Mechas are of course much bigger than humans, and it would sound unnatural to put their voices on the same loudness scale. After some thought, we decided to invert the relationship: when a human is outside their Mecha, their voice is louder; otherwise, it is quieter.

Thoughts on Loudness, Dynamic Range and Output Devices

Hardcore Mecha was to be launched on both consoles and PC, which are typically played through different audio output devices. On console, players mainly use a home audio system or TV speakers, and sometimes a headset. On PC, there are many options, including stereo speakers, headsets, monitor speakers, and notebook speakers.

For PlayStation platforms, Sony provides the following loudness reference for the TRC check: the average loudness throughout a game should be around -24 LKFS, the same target used by common broadcast loudness standards. As required, I used the Wwise Loudness Meter and routed the recorded audio signal to a DAW for testing, then adjusted the volume of the audio buses and Actor-Mixers accordingly. For PC, however, this average loudness is too low, especially for an indie game: devices such as monitor speakers and notebook amplifiers usually have limited output capability, which impairs the listening experience. To overcome this, I used a different mixing strategy for the PC version, raising the average loudness to around -18 LUFS and slightly reducing the dynamic range.
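The arithmetic behind the two targets is simple, and a tiny helper makes it explicit. LKFS and LUFS are the same unit under different names, so the PC mix sits 6 dB above the console mix, roughly doubling the amplitude. The table and function names are hypothetical. A whole-mix gain is only a starting point: raising average loudness by 6 dB also raises peaks by 6 dB, which is one reason to reduce the dynamic range at the same time.

```python
# Target integrated loudness per platform, as described above (LKFS == LUFS).
TARGET_LOUDNESS = {"PS4": -24.0, "PC": -18.0}


def gain_offset_db(measured: float, target: float) -> float:
    """Gain (dB) that moves a measured integrated loudness onto the target."""
    return target - measured


def db_to_amplitude(db: float) -> float:
    """Convert a gain in dB to a linear amplitude factor."""
    return 10.0 ** (db / 20.0)


if __name__ == "__main__":
    offset = gain_offset_db(TARGET_LOUDNESS["PS4"], TARGET_LOUDNESS["PC"])
    print(f"PC mix vs console mix: {offset:+.1f} dB (x{db_to_amplitude(offset):.2f} amplitude)")
```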

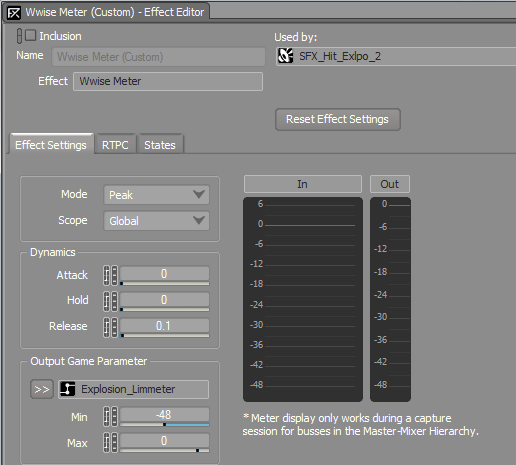

Dynamic range depends not only on the output device but also on the type of gameplay, so players can change this setting to suit their needs. With a high dynamic range, less important sounds, such as bullet fly-bys, Mecha joint movement, and background ambiences, are relatively quieter, while critical sounds, such as Mecha body movement and close combat, are louder; with a low dynamic range, these offsets are reversed. We used a Dynamic Range RTPC to control the overall threshold of the HDR system. However, an automatic HDR system alone is not flexible enough to identify exactly which sounds should be lowered or raised, so we also attached the RTPC to various Actor-Mixers to control their Voice Volume. In this way, we differentiated the audio elements according to the mixing strategies described earlier.

(From top-left clockwise, Playback Device, Headset, Dynamic Range, High)

In addition to the Dynamic Range parameter, we provided playback settings so players can select their playback device. Because background noise is relatively low when players use a headset, we increased the Dynamic Range for headset mode. Players can choose among three options, a common practice for console games with diverse audio outputs.
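One way to drive Actor-Mixer Voice Volume from a single Dynamic Range RTPC is to give each group an importance sign and scale its offset around a neutral midpoint. In Wwise this would be an RTPC curve per Actor-Mixer; the Python sketch below only shows the shape. The group names follow the examples in the text, but the ±1 importance values, the 6 dB maximum, the neutral point at 50, and the per-device defaults are all assumptions (only "headset gets a higher dynamic range" comes from the article).

```python
# Importance of each Actor-Mixer group: +1 critical, -1 less important (assumed values).
IMPORTANCE = {
    "mecha_body_movement": 1.0,
    "close_combat": 1.0,
    "bullet_flyby": -1.0,
    "mecha_joint_movement": -1.0,
    "background_ambience": -1.0,
}
MAX_OFFSET_DB = 6.0  # hypothetical largest Voice Volume change at either extreme

# Hypothetical default Dynamic Range RTPC (0..100) per playback device.
DEVICE_DEFAULT_DR = {"tv_speakers": 50.0, "headset": 80.0}


def voice_volume_offset_db(group: str, dynamic_range: float) -> float:
    """Voice Volume offset for a group: 50 is neutral, 100 widens, 0 reverses."""
    dr = min(max(dynamic_range, 0.0), 100.0)
    return IMPORTANCE.get(group, 0.0) * MAX_OFFSET_DB * (dr - 50.0) / 50.0


if __name__ == "__main__":
    for device, dr in DEVICE_DEFAULT_DR.items():
        offsets = {g: voice_volume_offset_db(g, dr) for g in ("close_combat", "bullet_flyby")}
        print(device, offsets)
```

Keeping the offsets symmetric around a neutral point means the baseline mix is untouched at the default setting, and the RTPC only ever widens or narrows the gap between the two importance groups.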

Another factor involved here is stereo width: the playback device setting also affects the Wwise channel configuration and panning rule (speaker or headphone angles).

Audio Elements Involved in Dynamic Range Adjustment


Ducking and Side-Chaining

In a chaotic battle, noisy fighting sounds can easily drown out critical audio elements such as Mecha explosions, enemy movements, and dialog. Blindly increasing the volume harms the sound balance, and it could even startle players or, worse, cause hearing damage!

To highlight critical sounds and voices, my first idea was ducking. Unfortunately, the producer didn’t like the results. I needed a more natural mix, so that players could concentrate on the fast-paced battle while staying aware of their surroundings without any discomfort, so I decided to use side-chaining instead. First, I used the Wwise Meter to map the volume of certain explosion and cut-scene sounds to an RTPC, then used that RTPC to adjust the SFX volume in turn. Dialog was more complicated. I roughly measured the fundamental frequencies of several key characters, including the player, their teammates, and old enemies. To attenuate those frequency bands, I used the Wwise Meter to map the volume of the relevant Actor-Mixers to an RTPC and used it to drive an EQ effect on the SFX bus.
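Each side-chain stage boils down to a curve from the Wwise Meter's output level to a gain change on the target bus. In Wwise this curve is drawn on the RTPC rather than coded; the Python sketch below, with a hypothetical threshold, ratio, and cap, shows one compressor-like shape: nothing happens below the threshold, and above it the cut grows with the excess level up to a maximum. The same output could drive a bus volume or the gain of an EQ band centered on a character's measured fundamental.

```python
def sidechain_cut_db(meter_db: float, threshold_db: float = -30.0,
                     ratio: float = 2.0, max_cut_db: float = 12.0) -> float:
    """Gain change (<= 0 dB) applied to the ducked bus for a given meter level."""
    if ratio < 1.0:
        raise ValueError("ratio must be >= 1")
    over = meter_db - threshold_db
    if over <= 0.0:
        return 0.0  # side-chain source too quiet: leave the SFX bus untouched
    return -min(over * (1.0 - 1.0 / ratio), max_cut_db)


if __name__ == "__main__":
    for level in (-40.0, -20.0, -6.0, 0.0):  # meter readings in dB
        print(f"meter {level:6.1f} dB -> SFX {sidechain_cut_db(level):+.1f} dB")
```

Unlike a fixed duck, the cut here scales with how loud the side-chain source actually is, which is closer to the "more natural" result described above; pairing it with RTPC smoothing avoids pumping.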

The Split-screen Issue

There was another tricky issue: local split-screen!

In local split-screen mode, up to four players share the screen. It may seem that you only need one camera following each player, but each split-screen view has its own listener and hears every player's sounds, so with four players the number of sounds could be multiplied by 16! I knew that simply setting a playback limit wouldn’t solve the problem. Instead, when there were at least two split-screen views, I disabled 3D attenuation for some elements (such as bullet and explosion sounds), then lowered the volume of the relevant Actor-Mixers based on the number of players: for each additional player, the volume was reduced by approximately 6 dB, depending on the elements involved. Overall, it worked!
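One way to arrive at the factor of 16 is to assume each of the four players' sounds is rendered once per view (4 × 4). The sketch below uses hypothetical names and encodes the two rules described above: 3D attenuation only for single-view play, and an Actor-Mixer offset of about 6 dB per additional player. The uniform 6 dB step is a simplification, since the actual reduction varied by element; a 6 dB cut is roughly half the amplitude.

```python
def sound_instances(players: int) -> int:
    """Each player's sounds rendered once per split-screen view (assumed model)."""
    return players * players


def use_3d_attenuation(players: int) -> bool:
    """3D attenuation stays on only for single-view play."""
    return players < 2


def split_screen_offset_db(players: int, step_db: float = 6.0) -> float:
    """Actor-Mixer volume offset: about -step_db per additional player."""
    if not 1 <= players <= 4:
        raise ValueError("players must be between 1 and 4")
    return step_db * (1 - players)


if __name__ == "__main__":
    for n in range(1, 5):
        print(n, sound_instances(n), use_3d_attenuation(n), split_screen_offset_db(n))
```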
