Jaime here again with the second part on Murderous Pursuits’ conversation and dialogue systems.

If you haven't read part one, click here to read it!

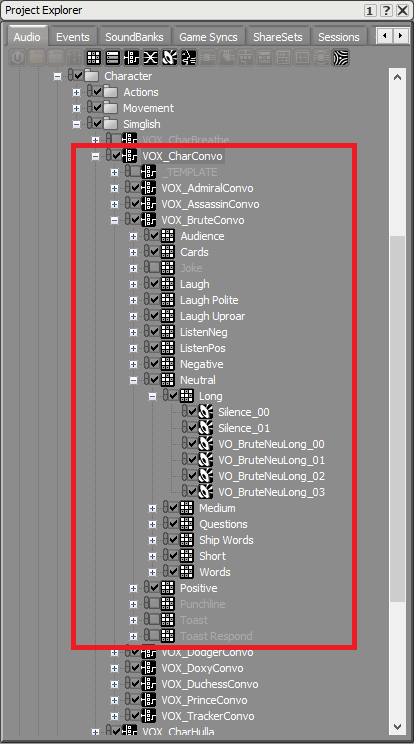

Last time we went over the thinking behind using Simglish in the game, as well as some of the editing and processing that went into it. Now let’s talk about how it works inside Wwise, the audio middleware we used. First, here’s the hierarchy for the conversation container, to give you a quick overview of what it contains.

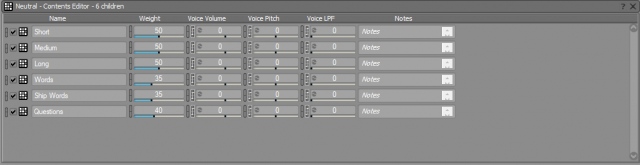

At the top is a Switch Container, VOX_CharConvo, which sets which character is talking. Below it, each character has its own named Switch Container that selects which type of Simlish or conversation audio should play. In this case, I’ve expanded the Brute’s: VOX_BruteConvo. Each conversation type is contained in a Random Container, which randomly selects an audio file from a group of sounds whenever an audio event is triggered.
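To make the nesting concrete, here is a minimal Python sketch that models the hierarchy as nested dictionaries: a character switch, then a conversation-type switch, then a Random Container that picks one clip. It simulates the selection logic only and is not Wwise's API. The conversation-type keys and clip names are hypothetical placeholders.

```python
import random

# Hypothetical model of the container hierarchy; not Wwise's API.
# Switch on character -> switch on conversation type -> Random Container.
VOX_CHAR_CONVO = {
    "Brute": {  # stands in for VOX_BruteConvo
        "Laugh": ["brute_laugh_01", "brute_laugh_02", "brute_laugh_03"],
        "Agree": ["brute_agree_01", "brute_agree_02"],
        "Scoff": ["brute_scoff_01"],
    },
}


def resolve_clip(character: str, convo_type: str, rng: random.Random) -> str:
    """Follow both switches, then let the Random Container pick one clip."""
    random_container = VOX_CHAR_CONVO[character][convo_type]
    return rng.choice(random_container)


rng = random.Random(42)
print(resolve_clip("Brute", "Agree", rng))
```

In the game, the character switch is set from code and the type switch is set by the Wwise event. Here both are plain function arguments.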

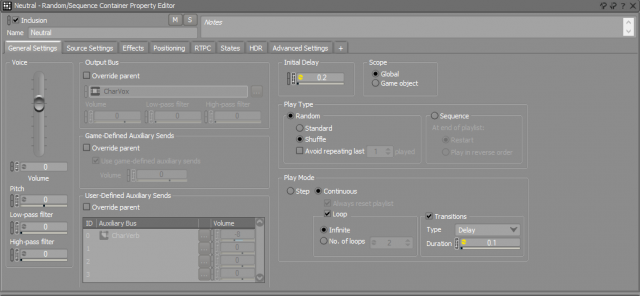

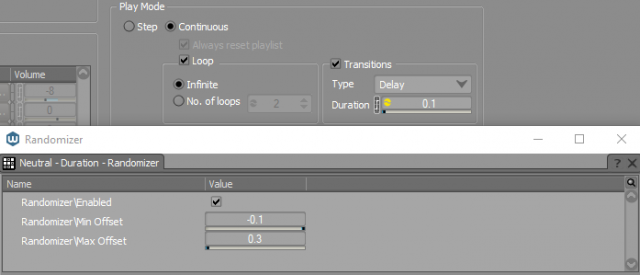

However, as you might have guessed from the references above, the Neutral, Positive and Negative containers are a little different. These need to do more than pick a file at random. They also have to choose between Simglish phrases of varying lengths and words while maintaining the flow of conversation. Picking a random phrase type or word is simple enough, since we just use more Random Containers. The actual flow took a little more refinement. Here’s the Property Editor of the Brute’s Neutral Convo container:

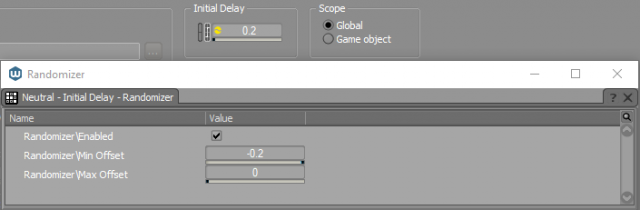

There are a few things going on here. First is the Initial Delay setting.

This is used as a basic starting offset for the looping animation, with the randomizer picking the actual value. Nothing fancy.

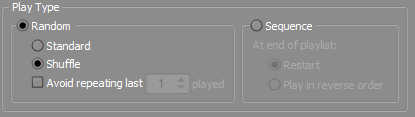

Next, the Play Type.

This is set to randomly pick one of the 6 possible options in the Neutral Container. In normal usage we’d want to avoid repeating previous audio clips that have been played to avoid the “machine gunning” repetition effect. Here though, we want to hit any of the sub-containers even if they’ve already been used. This helps mask the transitions between each type, and allows for more dynamic and varied conversations.
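The difference between the two approaches can be sketched in Python. One picker allows immediate repeats, as in this Neutral container. The other skips the last few picks, which is the usual anti-repetition setup. This is a simulation under stated assumptions: the six option names are hypothetical, and the "recent" window size of 2 is an arbitrary choice.

```python
import random
from collections import deque

# Six hypothetical sub-container names standing in for the Neutral options.
OPTIONS = [f"option_{i}" for i in range(1, 7)]


def pick_allow_repeats(options, rng):
    """Every pick is independent, so the same option can come up twice in a row."""
    return rng.choice(options)


def pick_avoid_recent(options, recent, rng):
    """Skip anything in `recent`, the usual way to avoid machine-gunning."""
    candidates = [o for o in options if o not in recent]
    choice = rng.choice(candidates)
    recent.append(choice)  # deque(maxlen=N) drops the oldest entry automatically
    return choice


rng = random.Random(7)
recent = deque(maxlen=2)  # must stay smaller than len(OPTIONS) or candidates runs out
no_repeats = [pick_avoid_recent(OPTIONS, recent, rng) for _ in range(20)]
with_repeats = [pick_allow_repeats(OPTIONS, rng) for _ in range(20)]
```

Allowing repeats makes the sequence less predictable. Here, that unpredictability helps blur the seams between sub-containers instead of making the output sound repetitive.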

Play Mode is set to Loop Continuously.

They’ll keep talking until we trigger an event telling them to stop, such as someone else piping up, or a reaction to someone being murdered nearby. We don’t want monologues, after all, even if the Admiral could easily spout one. Once the random offsets are accounted for, the Transition Delay ranges from 0 to 0.4 seconds. This gives the characters a little breather on top of any natural gaps and pauses in their phrases.
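Putting the Initial Delay, Loop Continuously and Transition Delay settings together, here is a rough Python sketch of the resulting timeline. It models the settings rather than reproducing Wwise's scheduler. The 0 to 0.4 second transition range comes from the text. The initial delay range and the clip lengths are hypothetical.

```python
import random

TRANSITION_DELAY_S = (0.0, 0.4)  # from the text, once random offsets are applied
INITIAL_DELAY_S = (0.0, 2.0)     # hypothetical range; the post doesn't give one


def schedule_loop(clip_lengths, stop_time, rng):
    """Return (start_time, clip_index) pairs for a continuously looping
    conversation until a stop event arrives at `stop_time` (seconds)."""
    t = rng.uniform(*INITIAL_DELAY_S)  # randomized starting offset
    starts = []
    while t < stop_time:
        idx = rng.randrange(len(clip_lengths))  # repeats allowed
        starts.append((t, idx))
        # The next clip begins when this one ends, plus a short random breather.
        t += clip_lengths[idx] + rng.uniform(*TRANSITION_DELAY_S)
    # A clip that starts just before stop_time may overrun it; in the game,
    # the stop event's fade-out would cut it short.
    return starts


clips = [1.2, 0.6, 2.5, 0.9]  # hypothetical clip durations in seconds
plan = schedule_loop(clips, stop_time=20.0, rng=random.Random(5))
```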

We initially tried crossfading between each clip, but that felt more like a continuous stream of audio and didn’t sound natural enough in practice. There’s also a little silence at the end of each Question snippet of Simglish. Combined with the actors’ inflections, it lets the question hang in the air a little, which helps their conversations flow more naturally.

Finally, the weightings.

Characters are slightly biased towards Simlish, as we wanted each word to pop and to minimize the chance of a string of words occurring, without removing it entirely.
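Weightings in a Random Container work proportionally: each child's chance of being picked is its weight divided by the sum of all weights. Here is a small Python sketch of that arithmetic. The post only says the bias is slight, so the 55/45 split and the child names are hypothetical.

```python
import random
from collections import Counter

# Hypothetical weights; the real values aren't given in the post.
WEIGHTS = {"simlish": 55, "other": 45}


def selection_probabilities(weights):
    """Each child is chosen with probability weight / total weight."""
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def pick_weighted(weights, rng):
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


rng = random.Random(11)
counts = Counter(pick_weighted(WEIGHTS, rng) for _ in range(10_000))
```

Over many picks, the counts settle close to the 55/45 ratio. Over a short stretch of conversation, though, either child can still come up several times in a row.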

Outside of the playback settings, each Random Container has two silent audio clips that use Wwise’s built-in Wwise Silence source. Their lengths vary depending on which container they’re in. They add extra pauses in speech to reduce listener fatigue and make the conversation flow more naturally. Multiple silence clips can also play in a row, but that’s okay. Not everyone has the stamina to talk for long.
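How often do back-to-back silences happen? Assume equal weights and independent picks. If a container holds s silence entries out of n children, the chance of k silences in a row is (s/n)^k. The Python sketch below checks that arithmetic against a simulated run. The four voice clips and all durations are hypothetical.

```python
import random

# Hypothetical Random Container: four voice clips plus two Wwise Silence
# entries of different lengths (durations in seconds are made up).
CONTAINER = [
    ("voice", 1.4), ("voice", 0.8), ("voice", 2.1), ("voice", 1.1),
    ("silence", 0.5), ("silence", 1.0),
]


def silence_run_probability(container, run_length):
    """Chance that `run_length` consecutive picks are all silence,
    assuming equal weights and independent picks (repeats allowed)."""
    p_silence = sum(1 for kind, _ in container if kind == "silence") / len(container)
    return p_silence ** run_length


def longest_silence_run(picks):
    """Length of the longest unbroken run of silence picks."""
    best = current = 0
    for kind, _ in picks:
        current = current + 1 if kind == "silence" else 0
        best = max(best, current)
    return best


rng = random.Random(3)
picks = [rng.choice(CONTAINER) for _ in range(500)]
```

With two silences out of six children, a single pick is silent one time in three, and two in a row happen about one time in nine.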

Here’s how the whole thing sounds for a single character in Wwise:

It’s not too bad on its own. But what about in game?

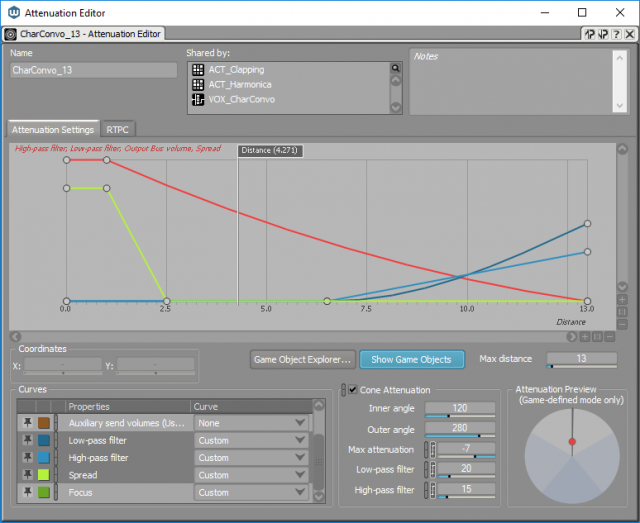

That’s a bit better. The little snippets of conversation you hear as you walk past groups of people help the world feel more populated and alive. We also used Wwise’s ShareSets to control how the sound changes over distance. An attenuation ShareSet is a reusable set of rolloff curves over distance. We can attach a whole group of sounds to it instead of setting those values on each sound individually. Here’s how the Conversation one looks:

The curves control the volume drop-off and introduce some high- and low-pass filtering at greater distances. The green curve is for Spread, which determines how much of the soundfield a sound occupies. At its minimum, the sound is a point source, so it’s easy to tell which direction it’s coming from. At its maximum, it fills the soundfield regardless of its position around you. I set it to around 75% when you’re next to someone. That still gives a sense of the speaker’s direction, while filling out the soundfield reinforces their proximity and makes you feel like part of the conversation.
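An attenuation ShareSet is essentially a set of curves from distance to value, shared by every sound attached to it. Here is a minimal Python sketch of evaluating such curves with piecewise-linear interpolation. Only the roughly 75% spread at close range comes from the text; every other point is hypothetical. Real Wwise curves can also use non-linear segment shapes, which this sketch ignores.

```python
from bisect import bisect_right

# Hypothetical curve points: (distance in game units, value).
VOLUME_DB = [(0.0, 0.0), (10.0, -6.0), (30.0, -24.0), (50.0, -96.0)]
SPREAD_PCT = [(0.0, 75.0), (15.0, 20.0), (40.0, 0.0)]


def eval_curve(points, distance):
    """Piecewise-linear lookup, clamped to the first and last points."""
    xs = [x for x, _ in points]
    if distance <= xs[0]:
        return points[0][1]
    if distance >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, distance)
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (distance - x0) / (x1 - x0)


# One shared set of curves, reused by every conversation sound (the ShareSet idea).
CONVERSATION_ATTENUATION = {"volume_db": VOLUME_DB, "spread_pct": SPREAD_PCT}
```

Because every conversation sound references the same curves, tweaking one point changes the falloff for all of them at once.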

I also used the cone attenuation feature, which attenuates the volume and introduces filtering based on which way a sound source is facing. Since sound projects from the mouth at the front of a person’s body, it made sense to make things a little quieter when you’re behind them. Here’s a quick video demo (watch the bottom right corner to see what’s changing):
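Conceptually, cone attenuation compares the speaker's facing direction with the direction to the listener and attenuates more as the listener moves behind them. The Python sketch below is a simplified model, not Wwise's exact formula. It assumes a linear blend between an inner and an outer cone, treats both angles as full cone widths, and omits the cone's filtering. The 90°/270° angles and the -6 dB maximum are hypothetical values.

```python
import math


def angle_to_listener_deg(facing, to_listener):
    """Angle between a speaker's facing vector and the direction to the listener (2D)."""
    dot = facing[0] * to_listener[0] + facing[1] * to_listener[1]
    norm = math.hypot(*facing) * math.hypot(*to_listener)
    cos_angle = max(-1.0, min(1.0, dot / norm))  # guard against rounding error
    return math.degrees(math.acos(cos_angle))


def cone_attenuation_db(angle_deg, inner_deg=90.0, outer_deg=270.0, max_atten_db=-6.0):
    """No change inside the inner cone, full attenuation outside the outer
    cone, and a linear blend in between. Angles are full cone widths,
    so each is halved before comparing."""
    half_inner, half_outer = inner_deg / 2, outer_deg / 2
    if angle_deg <= half_inner:
        return 0.0
    if angle_deg >= half_outer:
        return max_atten_db
    t = (angle_deg - half_inner) / (half_outer - half_inner)
    return t * max_atten_db
```

A listener directly in front hears no cone attenuation. One directly behind gets the full -6 dB. One standing to the side falls halfway between.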

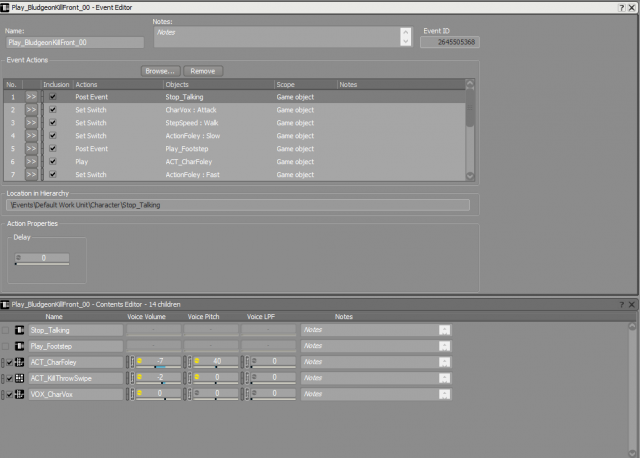

In-game playback is relatively simple too, at least on my end. The coders made a custom Anim Event in Unity that lets me attach Wwise events to an animation. Looping animations have a separate start-up animation, so they don’t constantly re-trigger the audio. The Wwise events contain a switch action to select the type of conversation and a play action for the VOX_CharConvo container, while character selection is handled in code. We also have a generic Stop event, with a very slight fade-out, for all dialogue types. It’s triggered when characters start moving or begin other animations (such as attacks). It’s not pretty, but it works.
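The flow above can be modelled as a tiny state machine. The start-up animation posts the conversation event once, loop cycles post nothing, and movement or other actions post the shared stop event. The Python sketch below illustrates that logic only; it is not the project's Unity code. `post_event` is a hypothetical stand-in for whatever actually posts the Wwise event, and the event names are hypothetical too.

```python
from typing import Callable, List


class ConversationAudio:
    """Hypothetical model of the animation-driven conversation flow."""

    STOP_EVENT = "Stop_AllDialogue"  # hypothetical name for the generic stop event

    def __init__(self, post_event: Callable[[str], None]):
        self.post_event = post_event
        self.talking = False

    def on_startup_anim_event(self, event_name: str) -> None:
        # Only the one-off start-up animation carries the audio event,
        # so the looping animation that follows never re-triggers it.
        self.post_event(event_name)
        self.talking = True

    def on_loop_cycle(self) -> None:
        # Looping cycles post nothing; Wwise's own looping keeps the chatter going.
        pass

    def on_move_or_other_action(self) -> None:
        if self.talking:
            self.post_event(self.STOP_EVENT)  # the short fade-out lives in Wwise
            self.talking = False


log: List[str] = []
npc = ConversationAudio(log.append)
npc.on_startup_anim_event("Play_Convo_Neutral")  # hypothetical event name
for _ in range(5):
    npc.on_loop_cycle()
npc.on_move_or_other_action()
```

However many times the loop plays, only one play event and one stop event are posted.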

The Anim Event takes a string argument, in this case the Wwise event name, and passes it to an AkSoundEngine.PostEvent call. We use this for every animation that needs audio outside of locomotion. All of the required actions are baked into a single Wwise event rather than spread across multiple Anim Events on the animation timeline. We found that the scattered approach led to some slowdown and misfires and was generally unreliable. The folks at Audiokinetic advised us to go down the single-event route, which worked a treat (thanks Max!). As a quick example, here’s the event for a Bludgeon Attack, which has 14 different Actions going on:
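Here is why the single-event route is simpler on the animation side. The animation makes one post call, and the event itself owns the ordering and delays of all its actions. The Python sketch below models an event as a list of timed actions. The action labels, target names and delays are hypothetical, and the three-action event is a cut-down stand-in for the real 14-action Bludgeon Attack.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Action:
    kind: str          # hypothetical labels, e.g. "Play"
    target: str
    delay_s: float = 0.0


@dataclass(frozen=True)
class SoundEvent:
    name: str
    actions: Tuple[Action, ...]


def post(event: SoundEvent) -> List[Tuple[float, str, str]]:
    """One call from the animation; the event itself owns the timing of every action."""
    return sorted(((a.delay_s, a.kind, a.target) for a in event.actions),
                  key=lambda entry: entry[0])


# A cut-down, hypothetical stand-in for the Bludgeon Attack event.
BLUDGEON = SoundEvent("Play_Bludgeon_Attack", (
    Action("Play", "SFX_Whoosh", 0.0),
    Action("Play", "VOX_Effort", 0.05),
    Action("Play", "SFX_Impact", 0.35),
))
timeline = post(BLUDGEON)
```

Keeping the timing inside the event means the animation timeline has a single trigger point instead of many.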

And let’s stop there. Audio in games can be a bit of a black box at times, so I hope this peek behind the curtain has given you some insight into part of the process and all the things we need to work out in order to get it to your ears! If you’re interested in Wwise itself, it’s free to download and comes with plenty of tutorials and resources. You can grab it here.

If you’re looking to try it with Unity, check out Berrak Nil Boya’s videos, which start with Unity’s own tutorials and then move on to more interesting things. Some of the layout may have changed since they were made, but everything still works the same way!

Also, a big thank you to our actors. Be sure to look them up:

Ally Murphy

Amelia Tyler

David McCallion

Jay Britton

Kenny Blyth

Kim Allan

Toni Frutin

Don’t forget to check out Murderous Pursuits on Steam too!

Thanks for reading,

Jaime
