Introduction

Hi there, I’m Matt, a sound designer at Rogue Waves. We typically work on sound effects libraries for game audio and post-production. Recently, however, we branched out and released Step Sixteen, a step sequencer app made with Unity and Wwise.

Over the years, I’ve made many step sequencer prototypes. Some of these were hardware sequencers made with Arduino, while others were made in Pulp, Processing and Unity. Step Sixteen is the culmination of those prototypes and combines all the things I learned along the way. It was also a great opportunity to improve my C#, Unity and Wwise skills.

Step Sixteen

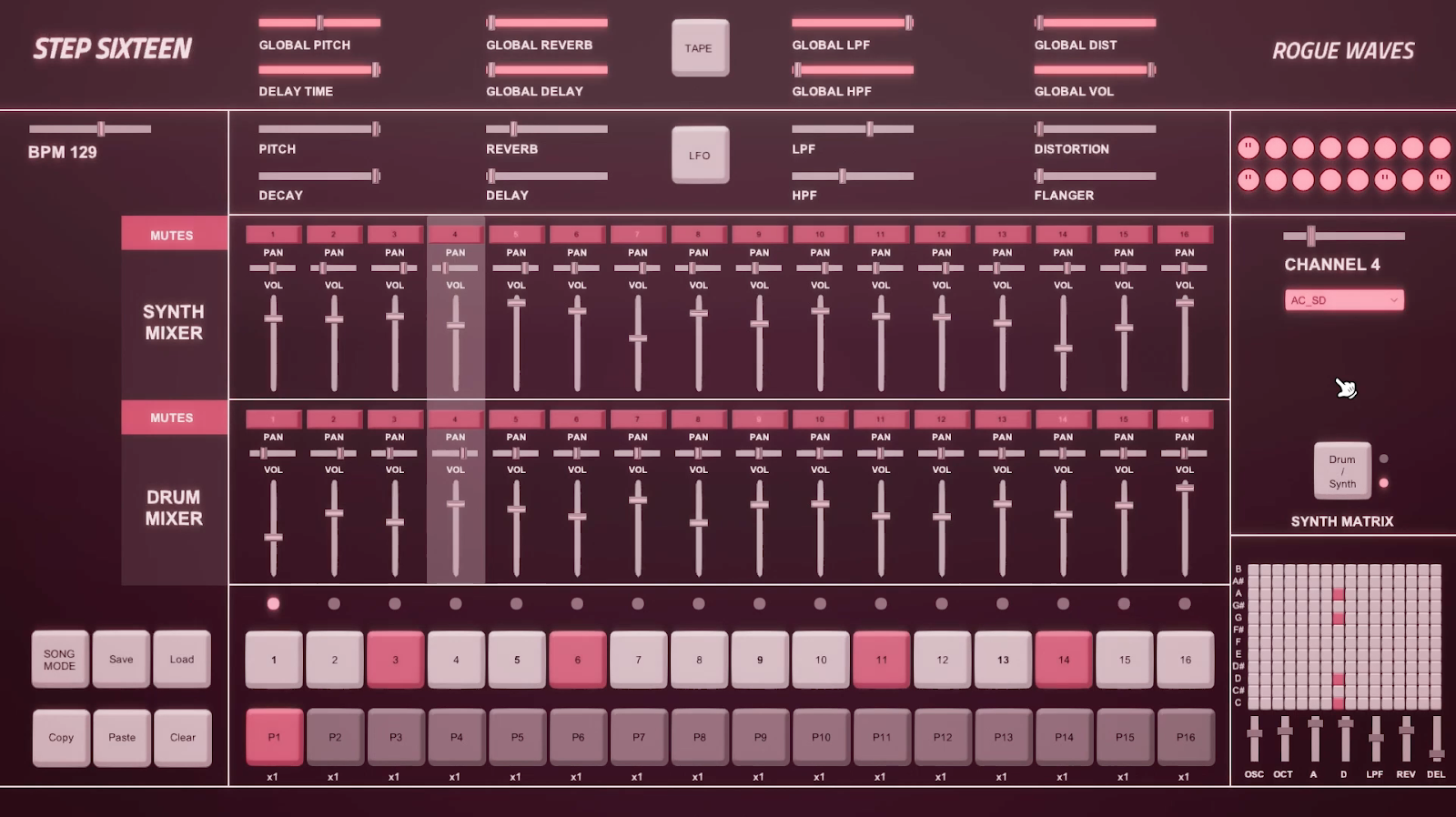

Step Sixteen is a music-making app built around a 16-step sequencer, inspired by classic hardware drum machines like the TR-909 and the Machinedrum.

In Step Sixteen, users can program their own beats just as they would on a drum machine, and we added synths that run alongside the drums to play bass lines, arpeggios, chords or melodies. Having everything in one app makes it easy to get an idea going quickly without having to learn a full DAW. Users can shape individual drum and synth sounds with the channel sliders, or use several global, performance-oriented controls to shape the whole mix.

Wwise

To support all the features we wanted in Step Sixteen, we needed a versatile audio engine, and Wwise was the perfect choice.

Wwise provided a great audio backbone for an instrument-based app. RTPCs, Modulator Envelopes, Switch Containers and the built-in effects were all integral to making the sequencer we wanted.

Clock

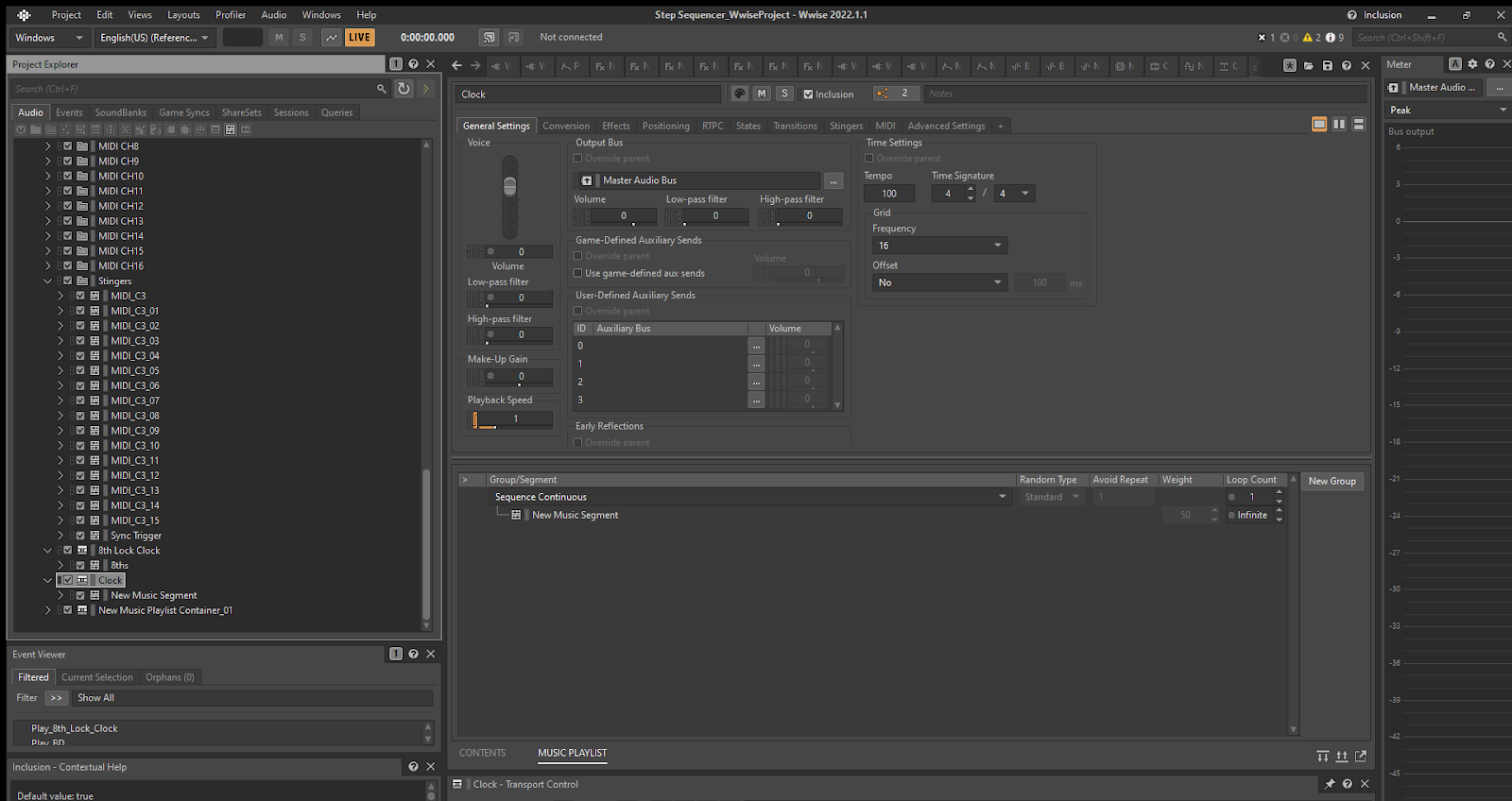

Early in the project, one of the main problems to solve was getting a solid clock to drive the sequencer. I tried various timing methods in Unity, but they proved unstable. Ultimately, I concluded that it would work better to let Wwise handle timing through its Interactive Music system.

In Wwise, I created a Music Playlist Container (with its grid set to 16th notes and its tempo set to 100 BPM) containing an empty dummy Music Segment. The Music Playlist Container let us loop this dummy Music Segment infinitely.

With Music Playlist Containers in Wwise, you unfortunately can’t control the BPM value with an RTPC. However, you can work around this by controlling Playback Speed with an RTPC instead. To start, we set the actual tempo of the Music Playlist Container to 100 BPM. Playback Speed in Wwise ranges from 0.25 to 4, and because the base tempo is 100 BPM, a playback speed of 0.5 is equivalent to 50 BPM.
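
Put as a formula, with s standing for Playback Speed and assuming the RTPC maps linearly onto it, the effective tempo and the range it allows work out as:

$$
\text{BPM}_{\text{eff}} = 100 \cdot s, \qquad 0.25 \le s \le 4 \;\Longrightarrow\; 25 \le \text{BPM}_{\text{eff}} \le 400
$$

So the tempo can in principle span 25 to 400 BPM, and a displayed 120 BPM corresponds to s = 1.2.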

In Unity, we created a bpmPercentageTime variable equal to the app’s BPM slider value divided by 100, and sent it to Wwise through the Playback_BPM RTPC. For example, a BPM of 120 displayed in the app sets Playback Speed in Wwise to 1.2.

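```csharp
// Displayed BPM / 100 = Playback Speed multiplier (the container's base tempo is 100 BPM)
bpmPercentageTime = bpmSlider.value / 100.0f;
AkSoundEngine.SetRTPCValue("Playback_BPM", bpmPercentageTime);
```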
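
The same conversion can be wrapped in a small helper that also keeps the value inside the 0.25 to 4 Playback Speed range. This is a minimal sketch in plain C# with no Unity or Wwise dependency. It assumes a 100 BPM base tempo and an RTPC that maps 1:1 onto Playback Speed. `TempoMapping` and its members are hypothetical names.

```csharp
using System;

// Sketch: converts the BPM shown in the app to the Playback Speed multiplier sent to Wwise.
// Assumptions: the Music Playlist Container's base tempo is 100 BPM and the RTPC
// maps 1:1 onto Playback Speed, whose range is 0.25 to 4.
public static class TempoMapping
{
    public const float BaseBpm = 100f;
    public const float MinSpeed = 0.25f;
    public const float MaxSpeed = 4f;

    // Displayed BPM -> Playback Speed, clamped to the range Wwise accepts.
    public static float BpmToPlaybackSpeed(float displayedBpm)
    {
        float speed = displayedBpm / BaseBpm;
        return Math.Max(MinSpeed, Math.Min(MaxSpeed, speed));
    }

    // Playback Speed -> the tempo the listener actually hears.
    public static float PlaybackSpeedToBpm(float speed) => speed * BaseBpm;
}
```

In Unity, the result of `TempoMapping.BpmToPlaybackSpeed(bpmSlider.value)` would be passed to `AkSoundEngine.SetRTPCValue("Playback_BPM", ...)` in place of the direct division. Clamping in code keeps the value sent to Wwise within the range Playback Speed actually supports.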

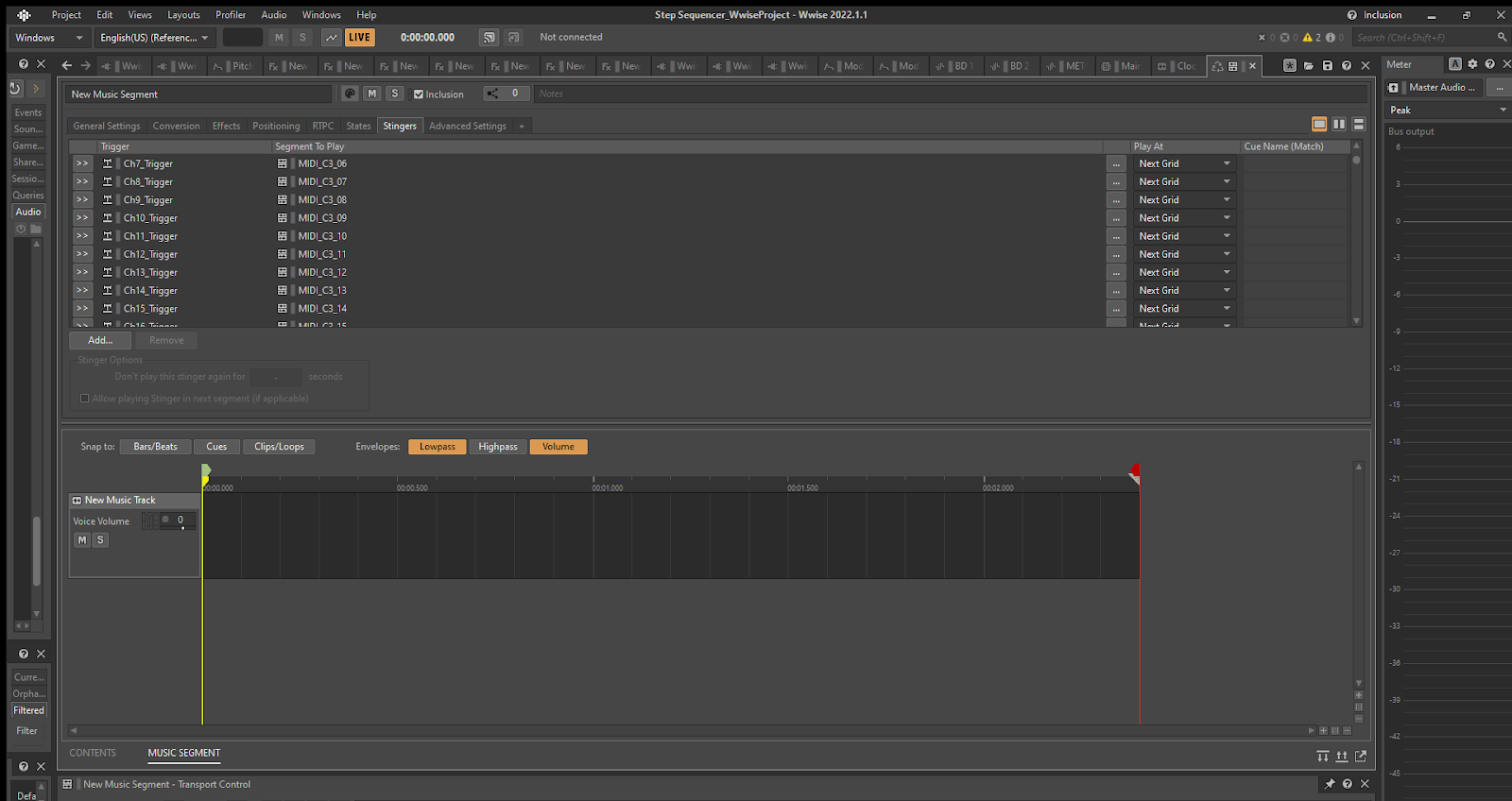

Callbacks

Because we were making a 16-step sequencer, we needed a callback on every 16th note. To get one, we used the MusicSyncGrid callback type, with our Music Playlist Container’s grid set to 16th notes. This let us tell Unity to do something on every 16th-note interval of the Music Playlist Container.
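
Each grid callback marks one step, so the time between callbacks follows directly from the tempo. Assuming a quarter-note beat (four 16th notes per beat), with s as the Playback Speed from the 100 BPM base:

$$
\Delta t_{\text{step}} = \frac{60}{4\,\text{BPM}_{\text{eff}}} = \frac{60}{4 \cdot 100\,s} = \frac{0.15}{s}\ \text{seconds}
$$

At the default s = 1 a step lasts 150 ms. At 120 BPM (s = 1.2) it lasts 125 ms, so a full 16-step bar lasts 16 × 0.125 = 2 seconds.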

In our callback function in Unity, we kept a step counter that reset after each bar. This let us track the current step of the sequence and decide which sounds should play on it.
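
A counter like this can be sketched as a small class. This is a minimal version, not Step Sixteen’s code. It assumes the first grid callback corresponds to step 0 and that patterns are stored as one `bool[16]` per channel. That layout and the `StepCounter` name are hypothetical. In Unity, `Advance()` would be called from the handler registered for `AkCallbackType.AK_MusicSyncGrid`.

```csharp
using System;
using System.Collections.Generic;

// Sketch of a per-bar step counter driven by grid callbacks.
// Hypothetical: the pattern layout (channels x 16 steps) is illustrative only.
public sealed class StepCounter
{
    public const int StepsPerBar = 16;

    private readonly bool[][] pattern;               // pattern[channel][step] is true if active
    public int CurrentStep { get; private set; } = -1; // -1 until the first grid callback

    public StepCounter(bool[][] pattern)
    {
        this.pattern = pattern ?? throw new ArgumentNullException(nameof(pattern));
    }

    // Called once per grid (16th-note) callback; wraps back to step 0 after each bar.
    public int Advance()
    {
        CurrentStep = (CurrentStep + 1) % StepsPerBar;
        return CurrentStep;
    }

    // Returns the indices of the channels whose pattern is active on the given step.
    public int[] ActiveChannels(int step)
    {
        var active = new List<int>();
        for (int ch = 0; ch < pattern.Length; ch++)
        {
            if (pattern[ch][step]) active.Add(ch);
        }
        return active.ToArray();
    }
}
```

The modulo keeps the counter in the range 0 to 15 without a separate reset branch, so the bar wraps automatically however long playback runs.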

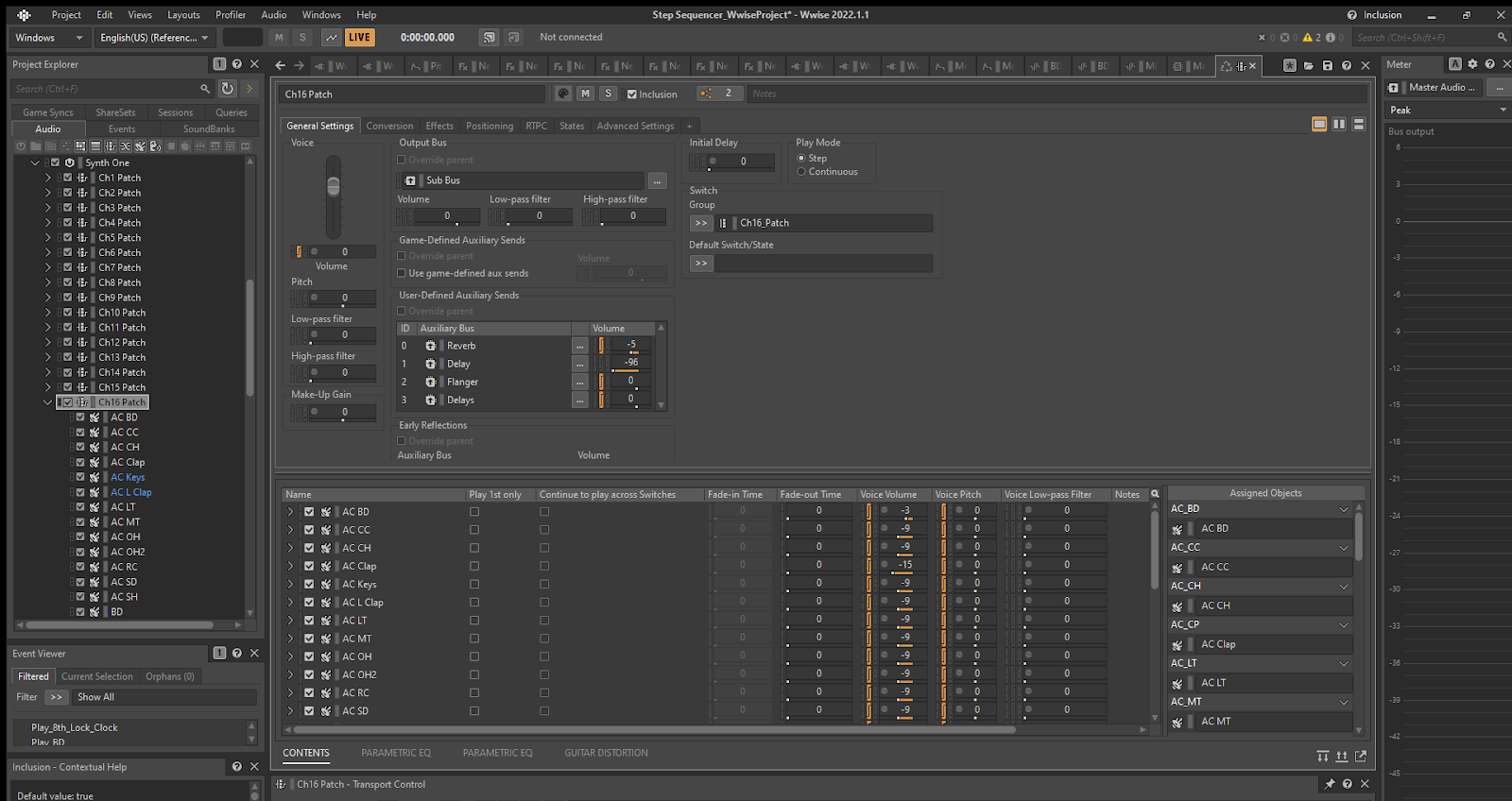

Initially, we tried posting our sounds immediately in the callback function. However, this caused timing issues, so we instead triggered stingers set to play at Next Grid. This meant our sounds were scheduled in advance and played back in time.
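
One consequence of Next Grid stingers is that a Trigger posted during the callback for step n should land on the following grid point. The callback would then queue the sounds for step n + 1. The source does not describe this detail, so the sketch below is an assumption. It shows only the one-step lookahead in plain C#. The `StingerScheduler` class and the `Trig_ChNN` Trigger names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// Sketch of one-step lookahead for stingers set to "Next Grid".
// Assumption: a Trigger posted during the grid callback for step n plays on step n + 1,
// so the callback collects the sounds that belong to the upcoming step.
public static class StingerScheduler
{
    public const int StepsPerBar = 16;

    // The step that a stinger posted now is expected to land on.
    public static int NextStep(int currentStep) => (currentStep + 1) % StepsPerBar;

    // Returns hypothetical Trigger names (e.g. "Trig_Ch03") for channels active on the next step.
    public static List<string> TriggersForNextStep(bool[][] pattern, int currentStep)
    {
        int target = NextStep(currentStep);
        var triggers = new List<string>();
        for (int ch = 0; ch < pattern.Length; ch++)
        {
            if (pattern[ch][target])
            {
                // In Unity, each name would then be posted to Wwise as a Trigger.
                triggers.Add($"Trig_Ch{ch:D2}");
            }
        }
        return triggers;
    }
}
```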

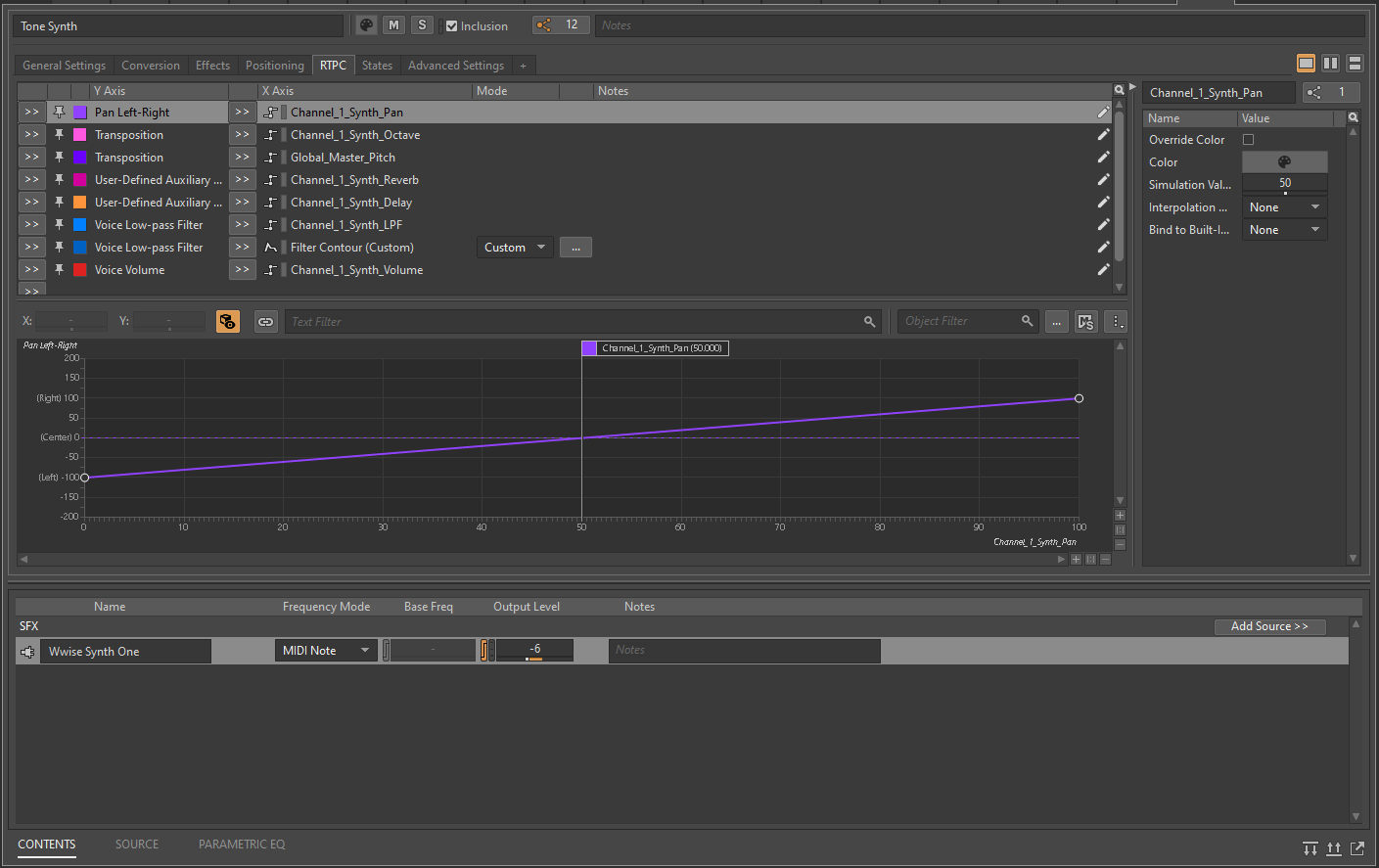

RTPCs

Step Sixteen needed to give the user a lot of control through the various sliders for shaping sounds, so I ended up creating a lot of RTPCs. The 16 channels alone required 19 RTPCs each, for a total of 304 RTPCs.
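
With 16 × 19 = 304 per-channel RTPCs, it can help to generate their names in code rather than hand-type strings. This is a minimal sketch. The `ChNN_Param` naming scheme and the 19 parameter names are hypothetical placeholders, not Step Sixteen’s actual RTPC list.

```csharp
using System;
using System.Collections.Generic;

// Sketch: generating per-channel RTPC names instead of hand-typing 304 strings.
// Hypothetical: the naming scheme and the 19 parameter names are placeholders.
public static class ChannelRtpcs
{
    public const int ChannelCount = 16;

    public static readonly string[] Params =
    {
        "Volume", "Pan", "Pitch", "Attack", "Decay", "LowPass", "LowPassRes", "HighPass",
        "HighPassRes", "Drive", "ReverbSend", "DelaySend", "FlangerMix", "EqLow", "EqMid",
        "EqHigh", "ModDepth", "ModRate", "Tone"
    };

    // Channel index is 0-based in code but 1-based in the name: (0, "Pan") -> "Ch01_Pan".
    public static string Name(int channel, string param) => $"Ch{channel + 1:D2}_{param}";

    // Every channel/parameter combination: ChannelCount x Params.Length names.
    public static List<string> AllNames()
    {
        var names = new List<string>(ChannelCount * Params.Length);
        for (int ch = 0; ch < ChannelCount; ch++)
        {
            foreach (string p in Params)
            {
                names.Add(Name(ch, p));
            }
        }
        return names;
    }
}
```

A slider handler could then call something like `AkSoundEngine.SetRTPCValue(ChannelRtpcs.Name(channel, "Volume"), value)`. That keeps the Unity side and the Game Parameter names in Wwise consistent by convention.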

On top of that, we had additional RTPCs for delay time, the global sliders, playback BPM and tape stop.

Effects

The built-in effects in Wwise offered a lot of versatility in shaping individual sounds and the whole mix. We made use of delays, reverb, flanger, distortion, parametric EQs and pitch shifters.

I’ve always really enjoyed the tape stop effect button on Teenage Engineering’s OP-1, so I wanted to create a similar effect button for Step Sixteen. I achieved this with the Wwise Pitch Shifter plug-in on the Master Audio Bus, ramping its pitch value down through an RTPC driven by a timer in Unity.
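
A timer-driven ramp like this could be computed as follows. This is a sketch, not the app’s actual curve. It models tape speed falling linearly and converts speed to cents (1200 × log2 of the speed ratio), so the pitch drops slowly at first and then plunges, like a real tape slowing down. It assumes the RTPC maps 1:1 onto the Pitch Shifter’s pitch in cents and uses −2400 cents as the floor. The `TapeStop` name is hypothetical.

```csharp
using System;

// Sketch of a tape-stop pitch curve evaluated from a timer (e.g. time accumulated from
// Time.deltaTime in Unity). Assumptions: the result drives an RTPC mapped 1:1 to the
// Pitch Shifter's pitch in cents, and -2400 cents (two octaves down) is the floor.
public static class TapeStop
{
    public const double FloorCents = -2400.0;

    // Models tape speed falling linearly from 1 to 0 over durationSeconds and converts
    // that speed ratio to cents: 1200 * log2(speed), so half speed = -1200 (one octave down).
    public static double PitchCents(double elapsedSeconds, double durationSeconds)
    {
        if (durationSeconds <= 0.0) return FloorCents;
        double t = Math.Min(1.0, Math.Max(0.0, elapsedSeconds / durationSeconds));
        double speed = 1.0 - t;
        double cents = 1200.0 * Math.Log(speed) / Math.Log(2.0);
        // Math.Log(0) is -Infinity at the end of the ramp; clamp to the floor.
        return Math.Max(FloorCents, cents);
    }
}
```

In an `Update()` loop, the elapsed time would grow by `Time.deltaTime` each frame, and the result would be sent to a pitch RTPC (name not given in the source). A linear ramp in cents would also work and is simpler, but it sounds less like a tape losing speed.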

The second effect button in Step Sixteen is the LFO button. With it, I wanted to create a kind of random, drunken pitch wobble. This was achieved with a random LFO assigned to pitch, plus a second random LFO modulating the frequency (rate) of the first.
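
To show how the two LFOs interact, here is an offline model in plain C#. It is only an illustration of the idea, not how Wwise’s Modulator LFO works internally. A sample-and-hold random LFO sets the pitch offset, and a second, slower random LFO re-picks the first one’s rate. The `DrunkenLfo` class and all parameter values are hypothetical.

```csharp
using System;

// Offline sketch of a "drunken" pitch wobble: a random (sample-and-hold) LFO on pitch whose
// rate is re-randomized by a second, slower random LFO. Illustrative only; not Wwise's
// Modulator LFO implementation, and all values are hypothetical.
public sealed class DrunkenLfo
{
    private readonly Random rng;
    private readonly double depthCents;           // maximum pitch deviation either way
    private readonly double minRateHz, maxRateHz; // range the rate LFO can pick from
    private readonly double rateLfoHz;            // how often the pitch LFO's rate changes

    private double pitchPhase, ratePhase;         // phases in cycles (0..1)
    private double pitchRateHz;                   // current, randomly modulated, pitch LFO rate
    private double pitchCents;                    // currently held pitch offset

    public DrunkenLfo(int seed, double depthCents, double minRateHz, double maxRateHz, double rateLfoHz)
    {
        rng = new Random(seed);
        this.depthCents = depthCents;
        this.minRateHz = minRateHz;
        this.maxRateHz = maxRateHz;
        this.rateLfoHz = rateLfoHz;
        pitchRateHz = minRateHz;
    }

    // Advances both LFOs by dt seconds and returns the current pitch offset in cents.
    public double Next(double dt)
    {
        // Second LFO: each time its cycle completes, pick a new random rate for the first LFO.
        ratePhase += rateLfoHz * dt;
        if (ratePhase >= 1.0)
        {
            ratePhase -= Math.Floor(ratePhase);
            pitchRateHz = minRateHz + rng.NextDouble() * (maxRateHz - minRateHz);
        }

        // First LFO: each time its cycle completes, hold a new random pitch offset.
        pitchPhase += pitchRateHz * dt;
        if (pitchPhase >= 1.0)
        {
            pitchPhase -= Math.Floor(pitchPhase);
            pitchCents = (rng.NextDouble() * 2.0 - 1.0) * depthCents;
        }
        return pitchCents;
    }
}
```

Because the pitch LFO’s rate keeps changing, the wobble never settles into a regular rhythm. That irregularity is what makes it feel "drunken" rather than like a steady vibrato.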

Parametric EQs

To get more musical filtering, we used parametric EQs. We set up two bands, one as a low-pass or high-pass and the other as a peaking band, and changed their values in tandem. This let us create low- and high-pass filters with a resonant boost at the cutoff point. You’ll often hear this sound in electronic music, and it gave us better results for shaping sounds than the built-in low- and high-pass filters. We used this method for the drums’ low- and high-pass filters, to shape individual sounds, and for the global low- and high-pass filters, to perform filter sweeps.
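
The source does not say how the two bands were kept in step. In Wwise, one option is to bind a single RTPC to the frequency of both bands. The sketch below shows the equivalent calculation on the Unity side: one normalized slider value drives the low-/high-pass band and the peaking band at the same frequency. It assumes an exponential 20 Hz to 20 kHz mapping and a 6 dB default peak gain. The `ResonantFilterSettings` name and both values are hypothetical.

```csharp
using System;

// Sketch: one cutoff control driving two parametric EQ bands in tandem: a low-pass (or
// high-pass) band plus a peaking band at the same frequency for the resonant bump.
// Assumptions: a normalized 0..1 slider, an exponential 20 Hz..20 kHz mapping, and a
// hypothetical 6 dB default peak gain.
public readonly struct ResonantFilterSettings
{
    public double CutoffHz { get; }   // frequency of the low-/high-pass band
    public double PeakHz { get; }     // frequency of the peaking band (tracks the cutoff)
    public double PeakGainDb { get; } // boost from the peaking band

    public ResonantFilterSettings(double cutoffHz, double peakGainDb)
    {
        CutoffHz = cutoffHz;
        PeakHz = cutoffHz;
        PeakGainDb = peakGainDb;
    }

    public static ResonantFilterSettings FromSlider(double slider01, double peakGainDb = 6.0)
    {
        const double MinHz = 20.0, MaxHz = 20000.0;
        double s = Math.Min(1.0, Math.Max(0.0, slider01));
        // Exponential mapping: equal slider moves cover equal frequency ratios (octaves),
        // which matches how filter sweeps are heard.
        double hz = MinHz * Math.Pow(MaxHz / MinHz, s);
        return new ResonantFilterSettings(hz, peakGainDb);
    }
}
```

With this mapping, the slider midpoint lands at about 632 Hz rather than 10 kHz, so most of the sweep’s travel is spent in the range where the ear hears the biggest changes.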

Delay Time Workaround

One limitation of Wwise is that its Delay plug-in doesn’t let you assign an RTPC to delay time. There are ways around this by modifying the plug-in’s XML file, but doing so causes pops and clicks when the delay time changes, so that method was out. With more time, it would be possible to create a custom fractional delay plug-in that doesn’t produce artifacts when the delay time changes, but this was outside the scope of this project.

Instead, we let the user choose between delay times by fading between four separate delays, each with a different fixed delay time. This wasn’t the ideal solution, but it gave Step Sixteen a degree of variable delay time without pops and clicks.
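
Fading between four fixed delays from one control can be done in several ways. The source doesn’t say which curve was used, so this sketch assumes an equal-power (sine/cosine) crossfade between the two neighbouring delays. A single position value from 0 to 3 selects the blend. The `DelayCrossfade` name is hypothetical.

```csharp
using System;

// Sketch: crossfading between four fixed-time delays from one continuous control.
// Assumption: an equal-power (sine/cosine) crossfade between neighbouring delays;
// the source only says the delays were faded between, not which curve was used.
public static class DelayCrossfade
{
    public const int DelayCount = 4;

    // position ranges from 0 to DelayCount - 1 (1.5 = halfway between delays 1 and 2);
    // returns one linear gain per delay.
    public static double[] Gains(double position)
    {
        var gains = new double[DelayCount];
        double p = Math.Min(DelayCount - 1, Math.Max(0.0, position));
        int lower = (int)Math.Floor(p);
        if (lower == DelayCount - 1)
        {
            gains[lower] = 1.0; // at the top end only the last delay is heard
            return gains;
        }
        double frac = p - lower;
        gains[lower] = Math.Cos(frac * Math.PI / 2);
        gains[lower + 1] = Math.Sin(frac * Math.PI / 2);
        return gains;
    }
}
```

Each gain would then be converted to decibels (20 × log10 of the gain) for a volume RTPC on the corresponding delay. An equal-power curve keeps the summed level roughly constant mid-fade, because the two delayed signals are largely uncorrelated. A linear fade would dip by about 3 dB at the midpoint.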

Conclusion

Step Sixteen was a challenge but a lot of fun to work on. Wwise allowed us to achieve the solid clock we needed through callbacks, stingers and the Interactive Music system. The built-in effects, RTPCs and Modulator Envelopes made it possible to give the user full control over shaping sounds. We love making music with it and are excited to hear what you’ll make with Step Sixteen.

Step Sixteen is now available on Steam: https://store.steampowered.com/app/2407480/Step_Sixteen/
