In today’s post, we will take a deep dive into an interesting acoustic phenomenon called “phasing,” which can arise when modeling the acoustics of an environment. We will first briefly examine some of the underlying physics of phasing, then showcase the new tools Reflect offers in Wwise 23.1 to help minimize its undesirable qualities.

What is Phasing?

Phasing is commonly described as sounding like audio played through a pipe. Other descriptions include a jet airplane flying overhead, or a sweeping, hollow quality. In the examples below, we take an unmodified drum beat clip (top) and apply phasing to it (bottom):

Notice the hollow quality of the clip with phasing! Phasing* is often used as a creative effect in music, but the effect itself is not limited to audio plugins or pedals. Phasing can be observed in real life too! In the video below, we can clearly hear phasing in the noise of the fountain:

Basic Phasing Physics

First, let’s talk about wave interference, which is fundamental to understanding phasing. When two waves meet, they interfere. This interference can be constructive (the resulting wave’s amplitude is boosted) or destructive (the amplitude is reduced). The type and magnitude of the interference depend on the waves’ amplitudes, frequencies, and phases.

In the animation below, the blue wave meets the orange wave. When the orange wave is lined up with the blue wave, the interference is constructive and the amplitude of the resulting (red) wave is boosted. As the orange wave moves to the right (increasing its time delay, and therefore its phase offset), the resulting wave’s amplitude shrinks to zero before growing again.

Simple Wave Interference Demonstration
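The behavior in the animation can be reproduced with phasor addition. The short Python sketch below (function names are our own, for illustration only) computes the peak amplitude of two sine waves of the same frequency when one is delayed, and checks the formula against a brute-force sample of the summed wave. With equal amplitudes at 100 Hz (a 10 ms period), the sum doubles at 0 ms of delay, cancels completely at 5 ms (half a period), and returns to full strength at 10 ms.

```python
import math

def resulting_amplitude(a1: float, a2: float, freq_hz: float, delay_s: float) -> float:
    """Peak amplitude of a1*sin(wt) + a2*sin(w*(t - delay_s)), via phasor addition."""
    phase = 2 * math.pi * freq_hz * delay_s  # phase offset caused by the delay
    # |a1 + a2*e^(-j*phase)|^2 = a1^2 + a2^2 + 2*a1*a2*cos(phase)
    power = a1**2 + a2**2 + 2 * a1 * a2 * math.cos(phase)
    return math.sqrt(max(power, 0.0))  # clamp any tiny negative rounding error

def measured_amplitude(a1: float, a2: float, freq_hz: float, delay_s: float,
                       sample_rate: int = 48000) -> float:
    """Brute-force check: sample one period of the summed wave and take its peak."""
    w = 2 * math.pi * freq_hz
    samples = (a1 * math.sin(w * n / sample_rate)
               + a2 * math.sin(w * (n / sample_rate - delay_s))
               for n in range(int(sample_rate / freq_hz)))
    return max(abs(v) for v in samples)

# Equal-amplitude 100 Hz waves: the period is 10 ms.
for delay_ms in (0.0, 2.5, 5.0, 7.5, 10.0):
    print(f"delay {delay_ms:4.1f} ms -> amplitude "
          f"{resulting_amplitude(1.0, 1.0, 100.0, delay_ms / 1000):.3f}")
```

If the two amplitudes differ, the cancellation is only partial: with amplitudes 1.0 and 0.5, the sum never drops below 0.5.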

This simple demonstration shows the interference of two signals at a single frequency, but most real-world audio signals are complex and composed of many different frequencies. It is therefore more useful to examine a signal’s spectrum.

The animation below recreates the fountain scenario. The sound waves take two paths to reach the listener: a direct path, and a path that reflects off the wall. The top-right graph shows the resulting spectrum of the mixed waves, while the bottom-right graph shows the time of arrival (ToA) of each wave:

Simulation of the Phasing Fountain

As the listener moves closer to the wall, the difference between the two waves’ ToAs becomes smaller and the spectrum develops a prominent pattern of peaks and notches. This pattern tells us which frequency components are boosted (peaks) or reduced (notches); in other words, the spectrum shows the kind of interference each frequency component experiences. This comb-like pattern** is what we perceive as phasing!

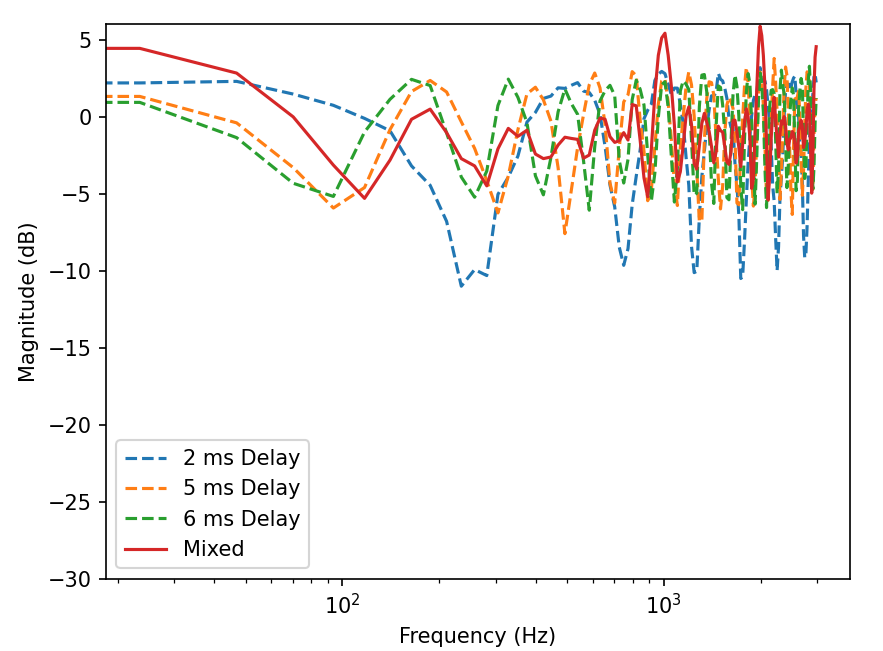
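For the two-path case described above, the comb pattern can be written down directly. Mixing a signal with a copy delayed by a ToA difference Δ gives the magnitude response |1 + g·e^(-j2πfΔ)|, where g is the reflection's gain relative to the direct path. Notches fall at odd multiples of 1/(2Δ) and are spaced 1/Δ apart, so as Δ shrinks the notches spread further apart. The Python sketch below assumes a speed of sound of 343 m/s and ignores air absorption and any frequency-dependent wall filtering; the helper names are hypothetical.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at room temperature

def toa_difference(direct_m: float, reflected_m: float) -> float:
    """ToA difference (seconds) between a reflected path and the direct path."""
    return (reflected_m - direct_m) / SPEED_OF_SOUND

def comb_magnitude(freq_hz: float, delta_s: float, gain: float = 1.0) -> float:
    """|H(f)| for y(t) = x(t) + gain * x(t - delta_s), a feed-forward comb filter."""
    return abs(1 + gain * cmath.exp(-2j * math.pi * freq_hz * delta_s))

def notch_frequencies(delta_s: float, max_hz: float) -> list[float]:
    """Notch positions: odd multiples of 1 / (2 * delta_s), spaced 1 / delta_s apart."""
    notches = []
    k = 0
    while (f := (2 * k + 1) / (2 * delta_s)) <= max_hz:
        notches.append(f)
        k += 1
    return notches

delta = toa_difference(direct_m=10.0, reflected_m=10.343)  # about 1 ms
print([round(f) for f in notch_frequencies(delta, 3000.0)])  # [500, 1500, 2500]
print(round(comb_magnitude(1000.0, delta), 3))               # 2.0 (peak between notches)
```

A reflection that loses energy at the wall still produces notches at the same frequencies, but they are shallower: comb_magnitude(500.0, 0.001, gain=0.5) is 0.5 rather than 0. At the assumed 343 m/s, a 20 ms ToA difference corresponds to about 6.9 m of extra path length.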

There are a couple of things to note here. First, phasing becomes very prominent when the ToA difference between sound waves is short (within 20 ms). Second, the model presented here uses only two sound paths, but in reality there are many, many more (infinitely many, though we can only render so many). Each path has a different ToA, so the spectral peaks produced by one path can overlap with the notches produced by another, canceling each other out. The graph below shows the effect of mixing signals with three different ToAs. Notice how the resulting spectrum becomes flatter:

Effect of combining spectra of multiple reflections

The takeaway is that while phasing does occur in real life, it is probably rare, because the interaction of many, many reflections subdues the effect. In acoustic modeling, however, we need to limit the number of reflections to reduce computational cost. As a consequence, phasing is more likely to occur.

Bringing it to Reflect

The Reflect plugin is an early reflections renderer that gives the listener a sense of space for sounds in an environment. As sound waves travel from their source, they are reflected off different surfaces before reaching the listener. The first few reflections that reach the listener contribute greatly to our perception of the space, and these are what the Reflect plugin renders.

Since Reflect works with reflections, you may be asking: does that mean that Reflect can render audio with phasing? The answer is YES! The demo below uses the Reflect plugin to simulate the fountain’s environment shown earlier:

As the listener moves closer to the wall and away from the fountain, we hear a distinct phasing effect very similar to the one in the fountain video! It’s great that Reflect can replicate what we observed in real life, but in gameplay the effect can be quite distracting. Luckily, Reflect offers tools to help reduce these phasing effects.

Mitigation Tools

Reflect in Wwise 23.1 introduces two new tools to reduce phasing.

Clustering

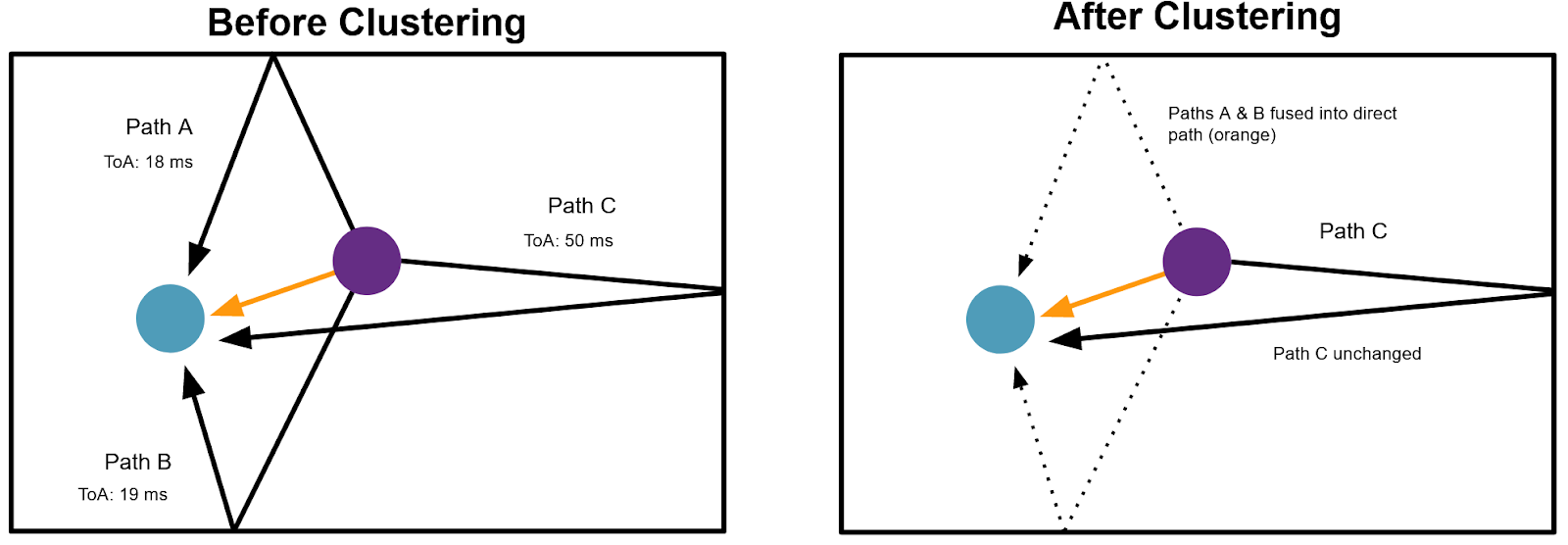

Since phasing can occur when a group of reflections has ToA differences within 20 ms, we can “cluster” these reflections into one effective reflection:

Clustering Concept

In the diagram above, three reflection paths arrive at the listener. Paths A and B have ToA differences within 20 ms (ToA difference = 19 ms - 18 ms = 1 ms), so they are clustered and “fused” with the direct path (orange). Path C, however, has a ToA difference greater than 20 ms and is left unchanged.

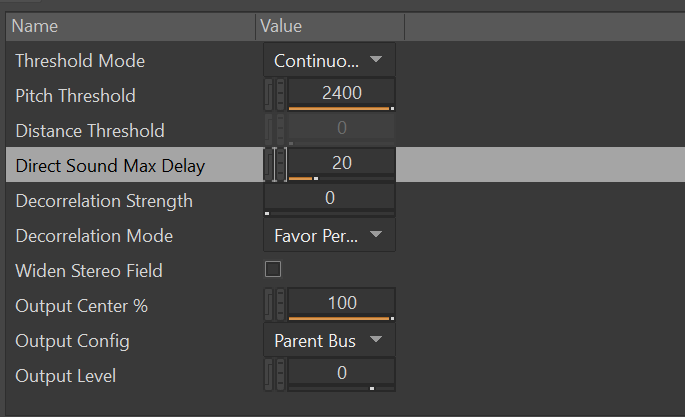

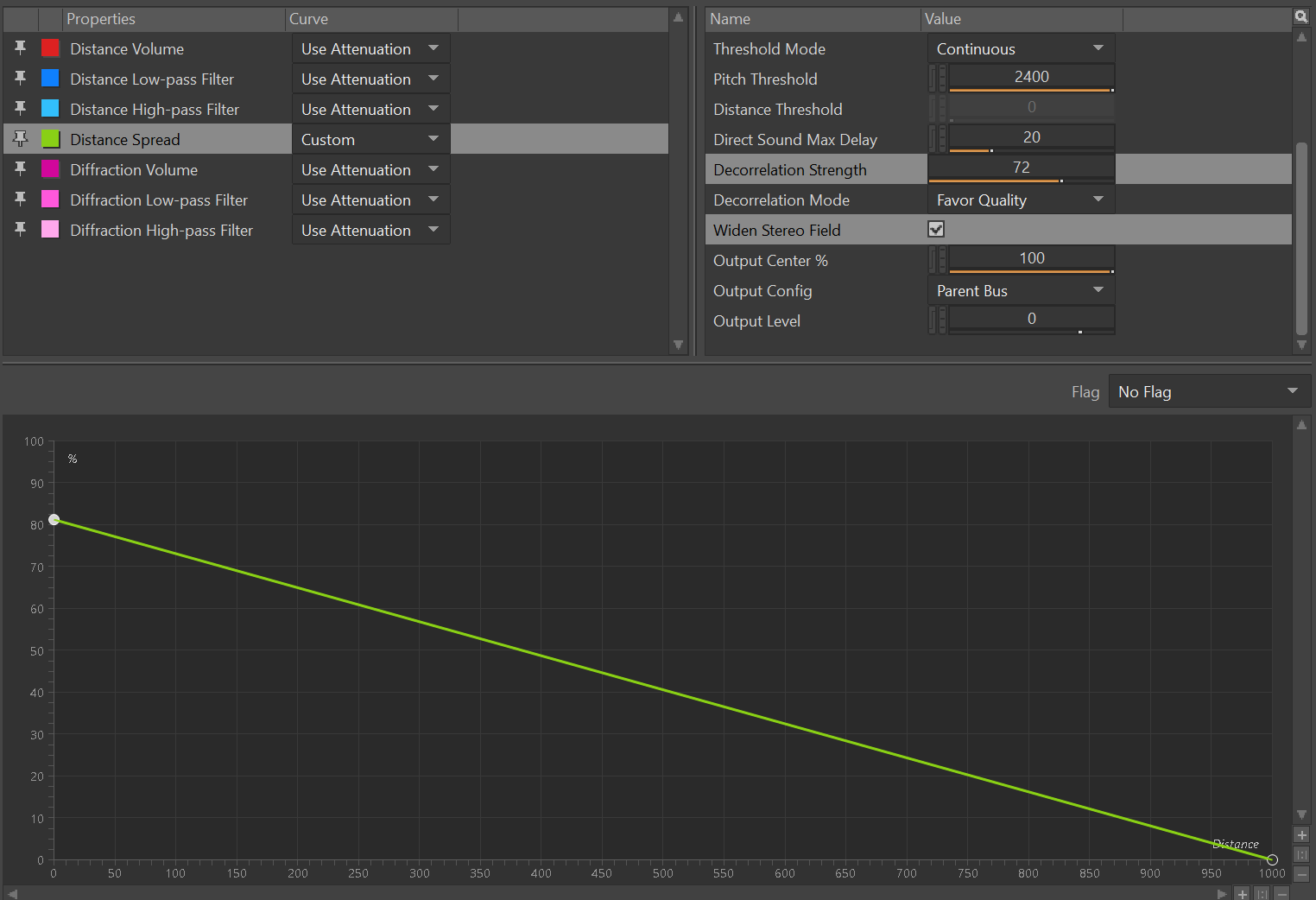

Clustering is enabled by setting the Direct Sound Max Delay property in the Reflect UI to a value other than 0. This property represents the maximum ToA difference in milliseconds. All reflections with ToA differences equal to or less than this value are clustered.

Direct Sound Max Delay Controls
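The clustering rule can be sketched as a simple partition of the reflection list. The Python below is a hypothetical illustration, not Reflect's implementation: it assumes ToA differences are measured relative to the direct path (as the Direct Sound Max Delay name suggests), and it assumes that “fusing” adds each clustered reflection's energy to the direct path. The SoundPath class, the cluster_with_direct function, and the gain values in the usage lines are all made up for this example.

```python
from dataclasses import dataclass

@dataclass
class SoundPath:
    """One sound path to the listener (hypothetical data model)."""
    name: str
    toa_ms: float  # time of arrival at the listener, in milliseconds
    gain: float    # linear amplitude gain of the path

def cluster_with_direct(direct: SoundPath, reflections: list[SoundPath],
                        max_delay_ms: float) -> tuple[SoundPath, list[SoundPath]]:
    """Fuse reflections arriving within max_delay_ms of the direct path into it.

    Returns (new_direct_path, reflections_left_unchanged). A max_delay_ms of 0
    disables clustering, mirroring the Direct Sound Max Delay description.
    """
    if max_delay_ms == 0:
        return direct, list(reflections)
    fused: list[SoundPath] = []
    kept: list[SoundPath] = []
    for r in reflections:
        # "Equal to or less than" the threshold counts as clustered.
        if r.toa_ms - direct.toa_ms <= max_delay_ms:
            fused.append(r)
        else:
            kept.append(r)
    # Assumption: fusing adds each clustered reflection's energy to the direct path.
    energy = direct.gain ** 2 + sum(r.gain ** 2 for r in fused)
    return SoundPath(direct.name, direct.toa_ms, energy ** 0.5), kept

direct = SoundPath("direct", toa_ms=18.0, gain=1.0)
paths = [SoundPath("A", 19.0, 0.5), SoundPath("B", 20.0, 0.5), SoundPath("C", 45.0, 0.3)]
new_direct, rendered = cluster_with_direct(direct, paths, max_delay_ms=20.0)
print(round(new_direct.gain, 3), [p.name for p in rendered])  # 1.225 ['C']
```

Only one reflection is left to render in this example, which illustrates why clustering also saves computation.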

Clustering is demonstrated in the video below:

Clustering is a simple but effective approach with the added benefit of a lower computational load, since there are fewer reflections to render. The downside is that reflections are only clustered with the direct path: groups of reflections that arrive close together at some later ToA cannot be clustered.

Decorrelation

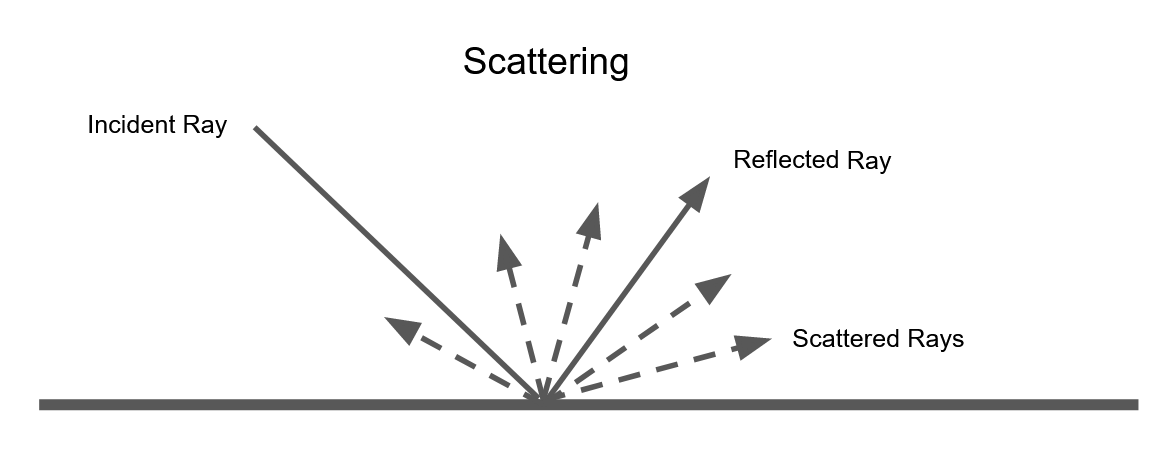

In real life, most surfaces not only reflect sound waves but also scatter them. In the previous section, we saw how mixing more reflections with varying ToAs resulted in a flatter spectrum. We can think of the scattered waves as mini reflections that combine with the main reflections.

Wave Scattering

Scattering is modeled with a filter that belongs to a larger family of filters called decorrelation filters.
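To make the idea concrete, here is a generic decorrelation filter sketched in Python. It is a velvet-noise filter (a sparse FIR with one random-sign impulse per segment, scaled to unit energy), a well-known decorrelator but not necessarily what either Reflect mode uses; all names are our own. The sketch compares the peak normalized cross-correlation between a white-noise input and two processed copies: a plain delay stays almost perfectly correlated with the input (the situation that produces comb filtering), while the filtered copy spreads its energy over several small taps, so its correlation with the input at any single lag is much weaker (for white noise, about 1/sqrt(8) per tap here).

```python
import math
import random

def velvet_noise_filter(num_taps: int, length: int, seed: int = 0) -> list[float]:
    """Velvet-noise FIR: one random-sign impulse per segment, scaled to unit energy."""
    rng = random.Random(seed)
    seg = length // num_taps
    h = [0.0] * length
    for k in range(num_taps):
        h[k * seg + rng.randrange(seg)] = rng.choice((-1.0, 1.0)) / math.sqrt(num_taps)
    return h

def fir_filter(x: list[float], h: list[float]) -> list[float]:
    """Direct-form FIR filtering (output truncated to len(x)), skipping zero taps."""
    taps = [(i, c) for i, c in enumerate(h) if c != 0.0]
    return [sum(c * x[n - i] for i, c in taps if n >= i) for n in range(len(x))]

def peak_xcorr(x: list[float], y: list[float], max_lag: int) -> float:
    """Largest |normalized cross-correlation| of y against x over lags 0..max_lag."""
    norm = math.sqrt(sum(v * v for v in x) * sum(v * v for v in y))
    return max(abs(sum(x[n] * y[n + lag] for n in range(len(x) - lag))) / norm
               for lag in range(max_lag + 1))

rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(4000)]          # white-noise test signal
delayed = fir_filter(x, [0.0] * 50 + [1.0])             # plain 50-sample delay
scattered = fir_filter(x, velvet_noise_filter(8, 160, seed=2))
print(f"pure delay:   {peak_xcorr(x, delayed, 200):.2f}")    # close to 1
print(f"velvet noise: {peak_xcorr(x, scattered, 200):.2f}")  # much lower
```

Using more taps lowers the correlation further but costs more multiplications per sample, a trade-off similar in spirit to the Favor Performance vs. Favor Quality choice described next.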

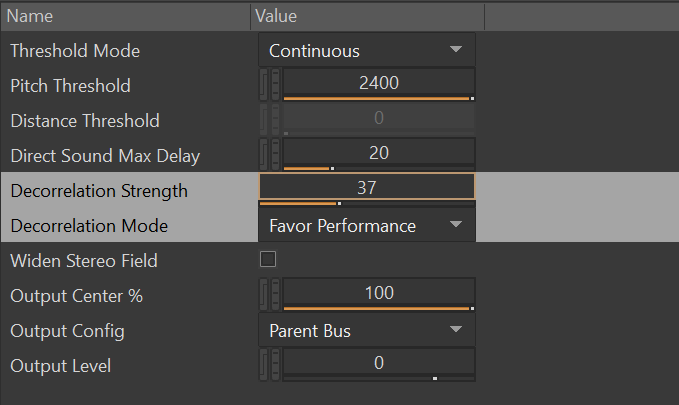

Decorrelation filters are enabled by setting the Decorrelation Strength property in the Reflect UI to a value greater than 0. There are two decorrelation filter types in the Decorrelation Mode dropdown box:

Favor Performance uses decorrelation filters based on a physical model of acoustic scattering.

This option is computationally cheaper but less effective at reducing phasing compared to Favor Quality. High decorrelation strengths can impart some spectral coloration: a boost or reduction in some frequency components (similar to phasing).

Decorrelation Filter (Favor Performance) Controls

The video below demonstrates the effects of the decorrelation filter using Favor Performance:

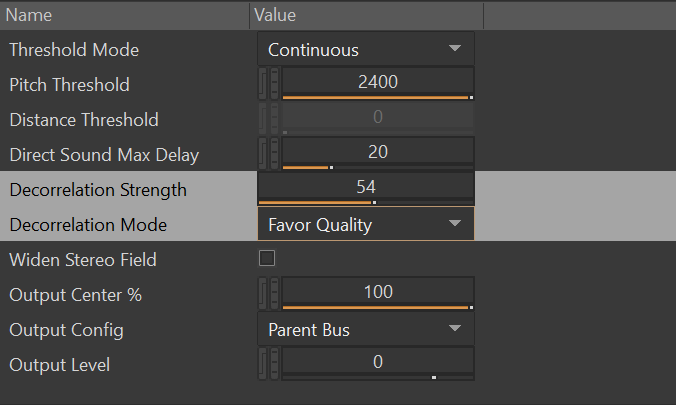

Favor Quality uses a different type of decorrelation filter, based on an algorithmic design. It is quite effective at reducing phasing while minimizing spectral coloration. However, it comes at a higher computational cost.

Decorrelation Filter (Favor Quality) Controls

The video below demonstrates the effects of the decorrelation filter using Favor Quality:

Performance vs Quality

You may have noticed from the previous examples that Favor Quality was generally more effective than Favor Performance at reducing phasing without imparting spectral coloration. However, this comes at a cost in CPU resources. It is worth noting that Favor Performance can still deliver good results with fewer resources, especially with other kinds of sounds. In the example below, the sound source is a drum beat instead of pink noise, and phasing is significantly reduced with minimal spectral coloration when the decorrelation strength is set to a low value of 15!

Feel free to experiment with different decorrelation modes and different sounds. You may find that some sounds are better suited to certain decorrelation modes!

The table below summarizes phasing reduction approaches, their advantages and disadvantages:

| Mitigation Type | Concept | Advantages | Disadvantages |
|---|---|---|---|
| Clustering | Fuse all reflections with ToA differences within some value into the direct path | Computationally cheaper than decorrelation filters; simple to operate | Reflections are clustered and fused only to the direct path; some loss in spatial perception may occur |
| Decorrelation: Favor Performance | Pass each reflection’s audio signal through a decorrelation filter designed from an acoustic scattering model | Computationally cheaper than the Favor Quality decorrelation filter (computation costs increase with decorrelation strength) | Not as effective at reducing phasing, and can add spectral coloring |
| Decorrelation: Favor Quality | Pass each reflection’s audio signal through a decorrelation filter designed by an algorithmic process | More effective at reducing phasing, with less spectral coloring | More computationally expensive than the Favor Performance filter |

Stereo Widening

Decorrelation filters have other uses. One of them, available in Reflect, is widening the stereo image. To enable it, select the Widen Stereo Field checkbox, make sure the Distance Spread curve has a non-zero value at the relevant distance, and set Decorrelation Strength to a value greater than 0. Increasing the Decorrelation Strength widens the stereo field further.

Stereo Widening Controls
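One common way to widen a signal with a decorrelation filter is to add a decorrelated “side” component: left = x + s·D(x) and right = x - s·D(x). If D(x) has the same energy as x and is uncorrelated with it, the interchannel correlation is (1 - s²)/(1 + s²): 1 (mono) at s = 0, 0.6 at s = 0.5, and about 0 at s = 1. The Python sketch below assumes, purely for illustration, that a strength parameter s in [0, 1] plays a role similar to Decorrelation Strength; Reflect's actual widening algorithm and parameter mapping are not described here, and all names are hypothetical.

```python
import math
import random

def decorrelate(x: list[float], num_taps: int = 8, length: int = 160,
                seed: int = 0) -> list[float]:
    """Velvet-noise-style FIR decorrelator: one random-sign impulse per segment,
    unit energy, and no tap at lag 0 (so for white input it is, on average,
    uncorrelated with x at lag 0)."""
    rng = random.Random(seed)
    seg = length // num_taps
    taps = [(k * seg + rng.randrange(1, seg), rng.choice((-1.0, 1.0)) / math.sqrt(num_taps))
            for k in range(num_taps)]
    return [sum(c * x[n - d] for d, c in taps if n >= d) for n in range(len(x))]

def widen(x: list[float], s: float) -> tuple[list[float], list[float]]:
    """Hypothetical widener: left = x + s*D(x), right = x - s*D(x), with 0 <= s <= 1."""
    side = decorrelate(x)
    return ([a + s * b for a, b in zip(x, side)],
            [a - s * b for a, b in zip(x, side)])

def interchannel_correlation(left: list[float], right: list[float]) -> float:
    """Zero-lag normalized correlation: 1 = mono (narrow), near 0 = wide."""
    num = sum(l * r for l, r in zip(left, right))
    return num / math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))

rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(8000)]  # white-noise test signal
for s in (0.0, 0.5, 1.0):
    left, right = widen(x, s)
    print(s, round(interchannel_correlation(left, right), 2))
```

Values of s above 1 would push the correlation negative (the channels start to sound out of phase), which is why the sketch limits s to [0, 1].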

Summary

As acoustic reflections with small ToA differences are mixed, the resulting spectrum can have a comb-like pattern and we perceive this as phasing. Since Reflect renders early reflections, phasing can occur in certain situations. In Wwise 23.1, Reflect offers several tools to reduce the more distracting aspects of the effect.

Footnotes

* The effect of similar waves creating interference patterns is more typically referred to as “flanging” in the world of music production, but we use “phasing” and “flanging” interchangeably here.

** This comb-like pattern gives rise to a term that may be familiar: “comb filtering.”
