Translator's Note

This is the second chapter of the Loudness Processing Best Practice for Games article series by the Chinese game audio designer Digimonk, originally published on midifan.com. China topped the world gaming revenue charts in 2016, and the Wwise Tour 2016 China stop not only featured popular Chinese games using Wwise (50 million daily active users) but also drew over 200 attendees from the local game audio community. We were therefore intrigued to take a deeper look into game audio practices in China. By translating blog articles by one of the most influential audio designers in the Chinese gaming industry, we aim to help readers better understand the audio community and culture of this vast territory. To the best of our knowledge, this series is the first-ever effort to translate Chinese audio tech blogs into English.

Translation from Chinese to English by: BEINAN LI, Product Expert, Developer Relations - Greater China at Audiokinetic

The 'loudness' we talk about here is not the same as, for example, the urban noise loudness we measure, or the readings on sound pressure level (SPL) meters. SPL meters measure sound pressure, which is the small fluctuation of air pressure around the ambient atmospheric pressure caused by a sound wave. That is a completely different measurement from the 'loudness' discussed below. Loudness, although measurable from an electrical power standpoint, cannot fully represent our perception of loudness. In practice, loudness is always subjective, i.e., a matter of psychoacoustics. However, apart from emotional and psychological factors, there are patterns that determine whether a sound is perceived as more or less 'loud' or 'quiet'. They are mainly:
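For contrast, here is the purely physical quantity an SPL meter reports. This is a minimal Python sketch, assuming RMS pressure in pascals and the standard 20 µPa reference for air; the function names are hypothetical. Nothing in the formula models perception, which is exactly the point made above.

```python
import math

P_REF_PA = 20e-6  # standard 0 dB SPL reference pressure in air: 20 micropascals


def rms(samples: list[float]) -> float:
    """Root-mean-square of a list of pressure samples (pascals)."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def spl_db(p_rms_pa: float) -> float:
    """Sound pressure level in dB SPL for an RMS sound pressure in pascals."""
    if p_rms_pa <= 0.0:
        raise ValueError("RMS pressure must be positive")
    return 20.0 * math.log10(p_rms_pa / P_REF_PA)


if __name__ == "__main__":
    # A wave alternating between +1 Pa and -1 Pa has an RMS of 1 Pa.
    print(f"{spl_db(rms([1.0, -1.0, 1.0, -1.0])):.1f} dB SPL")  # 94.0 dB SPL
```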

- ADSR

- Duration and reverberation

- Frequency and frequency response

- Rhythm and movement

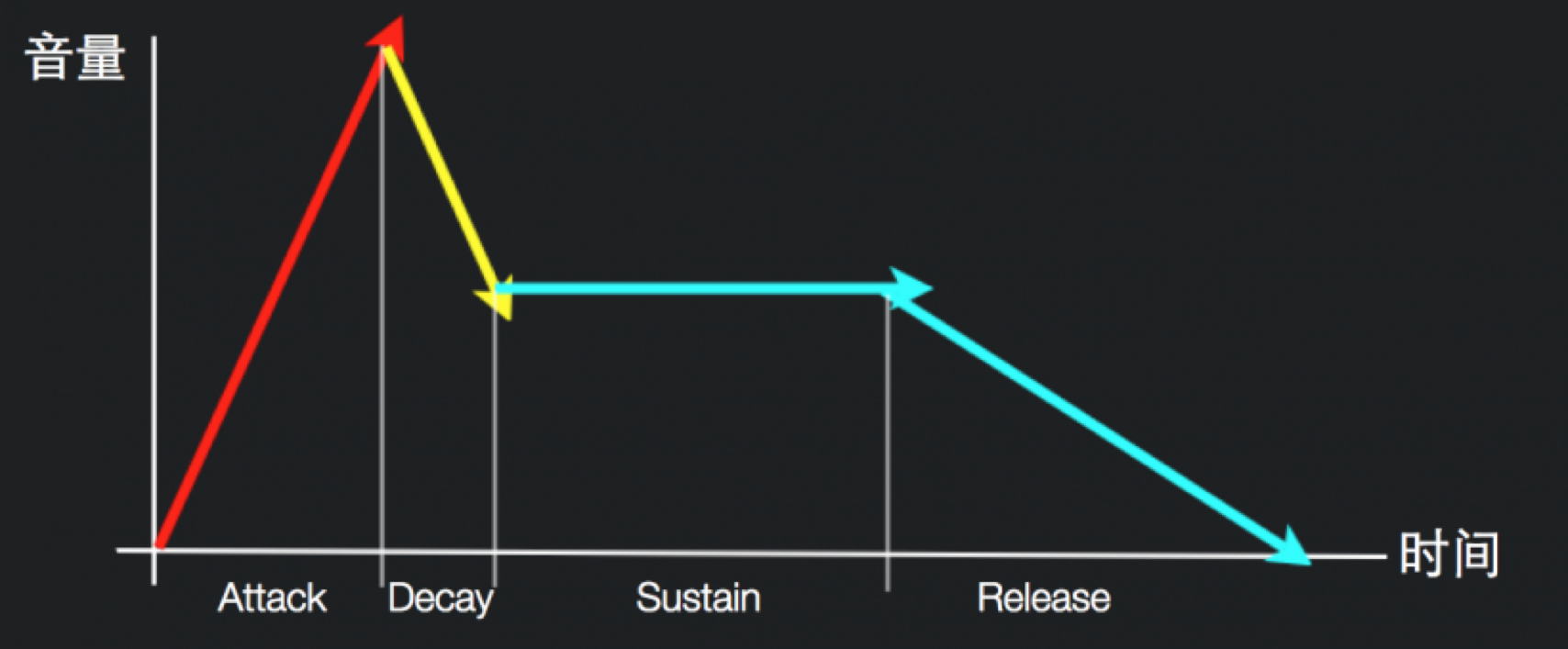

ADSR = Attack, Decay, Sustain, Release

ADSR is also called the Envelope. It describes the lifecycle of a natural sound from its birth to its death. All four phases are expressed in time, usually in milliseconds (ms). The shorter the Attack, the greater the loudness. For example, the attack of percussion instruments can be as short as 15ms. When mixed with string instruments (whose attack is usually above 50ms), they will sound louder at the same volume level, sometimes much louder. A gunshot, with an attack shorter than 10ms, will sound even louder than percussion. So, loudness problems can sometimes be fixed just by tweaking the asset's attack.
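To make the phases concrete, here is a minimal Python sketch of a piecewise-linear ADSR gain envelope. The linear segments and the names `adsr_envelope` and `time_to_peak_ms` are assumptions for illustration (natural sounds have curved envelopes, and Sustain is modelled here as a level held for a given time); the 15ms and 50ms attack values come from the examples above.

```python
def adsr_envelope(attack_ms: float, decay_ms: float, sustain_level: float,
                  sustain_ms: float, release_ms: float,
                  sample_rate: int = 48000) -> list[float]:
    """Piecewise-linear ADSR gain envelope with one value (0.0..1.0) per sample."""

    def to_samples(ms: float) -> int:
        return int(round(ms * sample_rate / 1000.0))

    env: list[float] = []
    a = to_samples(attack_ms)
    env.extend((i + 1) / a for i in range(a))  # Attack: ramp 0 -> 1
    d = to_samples(decay_ms)
    env.extend(1.0 - (1.0 - sustain_level) * (i + 1) / d for i in range(d))  # Decay: 1 -> sustain
    env.extend([sustain_level] * to_samples(sustain_ms))  # Sustain: hold the level
    r = to_samples(release_ms)
    env.extend(sustain_level * (1.0 - (i + 1) / r) for i in range(r))  # Release: sustain -> 0
    return env


def time_to_peak_ms(env: list[float], sample_rate: int = 48000) -> float:
    """Milliseconds until the envelope first reaches its maximum."""
    peak_index = max(range(len(env)), key=env.__getitem__)
    return 1000.0 * (peak_index + 1) / sample_rate


if __name__ == "__main__":
    drum = adsr_envelope(15, 100, 0.3, 50, 200)
    strings = adsr_envelope(50, 100, 0.7, 500, 300)
    print(time_to_peak_ms(drum), time_to_peak_ms(strings))  # 15.0 50.0
```

The drum-like envelope reaches full level in a third of the time the string-like one needs, which is the "shorter Attack, greater loudness" effect described above.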

Duration and Reverberation

The duration of a sound is usually determined by its Decay, Sustain, and Release times. The longer the Decay, the longer the louder portion of the asset lasts, so it will sound louder. Similarly, a longer Sustain will make a sound seem louder. But the effect of this duration is not fixed! That is mainly due to 'aural adaptability', a human instinct. Think about this scenario: when you walk into your office and notice the air conditioning, you may find it very loud; but after a few minutes, or once your attention is diverted, you get used to it and stop noticing it (until the next time you feel bored). This sort of aural adaptability is part of 'selective listening'. Simply put, humans need to be fed sustained novelty and change to keep their aural attention engaged! As a sound designer, though, you sometimes have to fight this instinct.

The Release here is not a 'delay', but the time it takes for a sound to keep propagating and gradually dissipate. It often has much to do with reverberation. Release is interesting in that we tend to ignore how it affects the loudness of a sound effect; we may not even be able to locate the so-called Release section. Take an explosion: its body is actually its Release, because the explosion itself ends within 10ms. In fact, when we say "I want this sound to be crisp and punchy", the Release is the key to achieving it! More often than not, we want a sound to be punchy but not so loud that it masks other sounds. In that case, we should first consider tweaking the duration of its Release rather than letting it die away 'in a natural way'. This is especially true when working on a soundtrack. Letting cloth sounds gently fade out during fast-paced combat is usually an amateur approach, unless you want a particular special effect. Such a Release makes the sound feel dragged out.

Think about consecutive explosions and big impacts built with a layering approach. Although the Release levels of the individual layers may look low, they quickly build up (each doubling of equal-level, uncorrelated layers adds about 3dB), resulting in an inseparable mess. The same applies to gunshots. Yet certain types of sounds aesthetically need 'long-enough tails'. In those cases, consider making the Decay faster so that the sound reaches its Sustain level sooner; then even a longer Release can still be easily masked by other sounds. Because layering multiple sounds requires keeping the level differences between them balanced, we can prevent sounds from 'stomping' on each other by keeping the Release at an appropriately low level.
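The build-up of layered tails can be checked with a few lines of arithmetic. This Python sketch assumes the layers are uncorrelated (noise-like tails), so their powers add; identical, in-phase layers would instead add up to 6dB per doubling. The helper name and the -30 dBFS tail level are hypothetical.

```python
import math


def combined_level_db(levels_db: list[float]) -> float:
    """Level of several uncorrelated layers played together: add powers, not decibels."""
    total_power = sum(10.0 ** (level / 10.0) for level in levels_db)
    return 10.0 * math.log10(total_power)


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        # n explosion tails, each sitting at -30 dBFS on its own
        print(n, round(combined_level_db([-30.0] * n), 1))
    # prints, one per line: 1 -30.0, 2 -27.0, 4 -24.0, 8 -21.0
```

Eight tails that each look harmless at -30 dBFS end up around -21 dBFS together, while a single layer 10dB lower barely moves the total, which is why keeping each Release low pays off.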

On the other hand, reverberation can make the tail of a sound longer, and early reflections heard on top of the dry sound can make the entire sound louder. Neither is necessarily a bad thing! Both can be properly exploited to control loudness. For instance, UI sounds in typical Japanese games, especially the PS3 and PS4 system sounds, are pretty quiet, yet you can clearly hear them. Why? Two things come into play. First, their reverb is usually long, between 1 and 1.5s, which extends those extremely short sounds (they seem quiet because they are so short), so your perceived loudness increases. Second, an appropriate reverb type (relative to the reverb applied to the BGM) can place the sounds in a novel or 'unnatural' sound field. The unnaturalness or novelty draws your active listening, making them seem louder.
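The first mechanism, a tail stretching a very short sound, can be sketched in Python. This is a crude stand-in for a real reverb: a single feedback comb filter whose feedback gain is derived from a target RT60 (the time for the tail to fall by 60dB). The 37ms delay, the 50% wet mix, the -40dB floor used as a proxy for "still audible", and all names are illustrative assumptions.

```python
import math


def comb_reverb(dry: list[float], sample_rate: int, rt60_s: float,
                delay_ms: float = 37.0, wet: float = 0.5) -> list[float]:
    """Crude reverb: one feedback comb filter, y[n] = x[n] + g * y[n - D].

    g is chosen so the recirculating signal decays by 60 dB after rt60_s seconds.
    """
    d = max(1, int(round(delay_ms * sample_rate / 1000.0)))
    g = 10.0 ** (-3.0 * d / (rt60_s * sample_rate))
    x = list(dry) + [0.0] * int(rt60_s * sample_rate)  # room for the tail
    y = [0.0] * len(x)
    for n in range(len(x)):
        y[n] = x[n] + (g * y[n - d] if n >= d else 0.0)
    # y holds the dry signal plus its echoes; mix only the echoes back in at `wet`.
    return [xi + wet * (yi - xi) for xi, yi in zip(x, y)]


def duration_above_floor_ms(signal: list[float], sample_rate: int,
                            floor_db: float = -40.0) -> float:
    """Time until the last sample that is within floor_db of the peak."""
    peak = max(abs(s) for s in signal)
    threshold = peak * 10.0 ** (floor_db / 20.0)
    last = max(i for i, s in enumerate(signal) if abs(s) >= threshold)
    return 1000.0 * (last + 1) / sample_rate


if __name__ == "__main__":
    fs = 8000
    click = [1.0] * 40  # a 5 ms UI blip
    print(duration_above_floor_ms(click, fs))  # 5.0
    print(duration_above_floor_ms(comb_reverb(click, fs, rt60_s=1.2), fs))  # 671.0
```

The peak stays the same, but the time the sound spends above the floor grows by more than a hundredfold.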

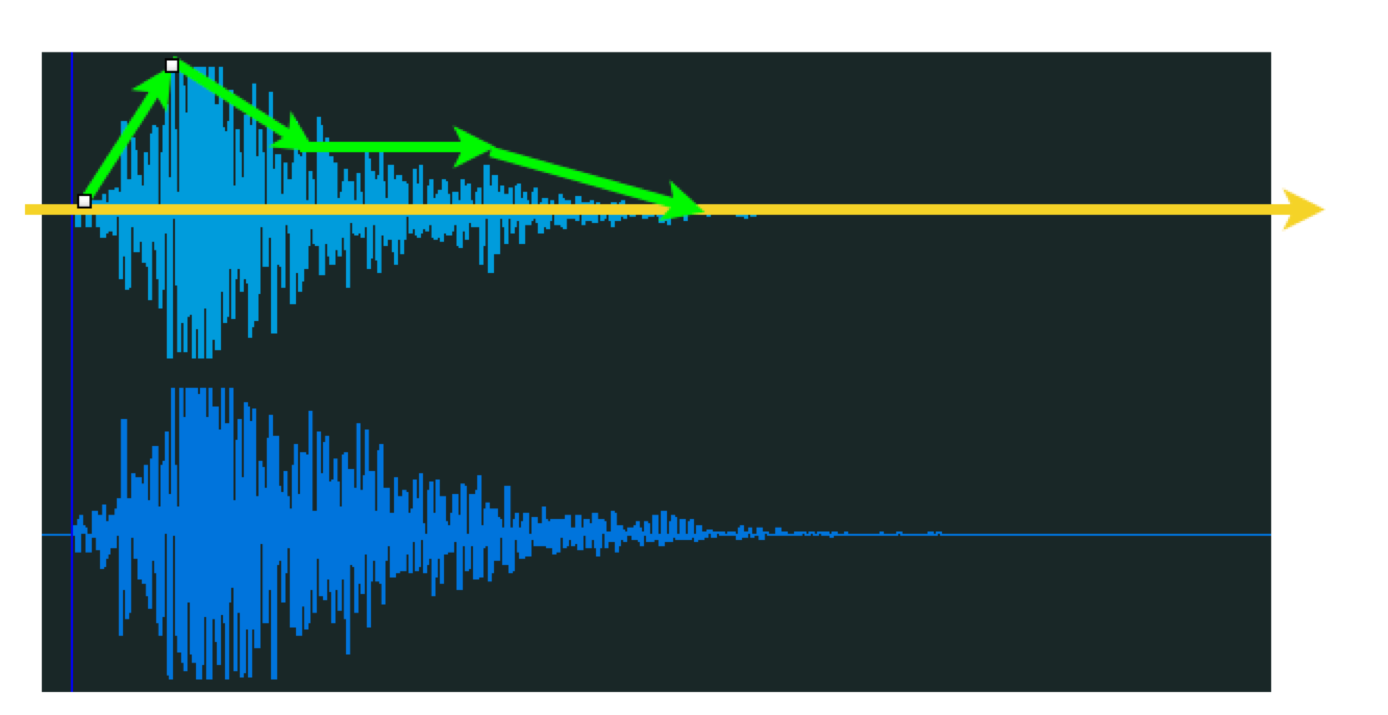

If a sound lasts a long time, e.g., a music segment longer than 60 seconds, ADSR is no longer an appropriate way to describe it. So a new term is introduced: Shape. A Shape is the outer profile of the peak sequence of a sound wave. By changing this profile, we can change how the loudness of the sound evolves. This approach is called 'shaping', and it is also widely used in synthesis and effects (more on this in the future). Shaping is an approach I frequently use for controlling the rhythm and loudness of sounds, especially during the final touch-up. Shaping does not change the RMS; it changes the evolution of the peaks, letting a sustained sound dip at insignificant moments and rise a bit when you need it to. This steers the listener's attention so that the desired loudness variation is achieved.
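Here is a minimal Python sketch of the idea, under stated assumptions: the Shape is approximated as the per-block peak of the waveform, gain changes are applied per block, and the result is rescaled so the overall RMS stays the same while the peak profile changes. Block size, gains, and all names are hypothetical. Hard gain steps at block boundaries would click, so a real implementation would ramp between them, and lifting peaks while holding RMS can eat into headroom.

```python
import math


def rms(signal: list[float]) -> float:
    return math.sqrt(sum(s * s for s in signal) / len(signal))


def peak_profile(signal: list[float], block: int) -> list[float]:
    """The 'Shape': peak absolute value of each consecutive block of samples."""
    return [max(abs(s) for s in signal[i:i + block]) for i in range(0, len(signal), block)]


def reshape(signal: list[float], block: int, block_gains: list[float]) -> list[float]:
    """Apply one gain per block, then rescale so the overall RMS is unchanged."""
    shaped = [s * block_gains[i // block] for i, s in enumerate(signal)]
    scale = rms(signal) / rms(shaped)
    return [s * scale for s in shaped]


if __name__ == "__main__":
    pad = [math.sin(2 * math.pi * i / 100) for i in range(400)]  # sustained tone, 4 blocks
    out = reshape(pad, 100, [1.0, 0.5, 1.0, 1.5])  # dip in block 2, lift in block 4
    print([round(p, 2) for p in peak_profile(out, 100)])  # [0.94, 0.47, 0.94, 1.41]
```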

For more loudness reference material, you can read a book I co-authored with Professor AnDong when I was young: 数字音频基础 (Fundamentals of Digital Audio).

The book mentions a series of numerical laws regarding loudness for your reference.

Frequencies

We are all familiar with the equal-loudness contours, so let's skip a discussion of their significance. The curves reveal a basic phenomenon: frequencies, like Attack, affect our perception of loudness. Yet, in my observation and experience, controlling loudness through frequencies is easily overlooked. For example, in mobile games, frequency content below 350Hz largely disappears when played through cellphone speakers, and most gamers play without headphones. In this case, raising the low frequencies of an explosion leads to nothing but clipping and masking of other sounds, and the overall sound will seem muffled. In fact, we all share this impression: the bigger the explosion, the more muffled it may seem. Aren't there any high frequencies? Yup, there are. It's just that the low frequencies mask them! Therefore, if you want the sound to stay bright, you must bring up the high frequencies and bring down the unnecessary lows at the same time. This is a typical scenario when mixing bass in music. To make a bass sound thick and sturdy, bringing up the low frequencies is useless. A better approach is to tweak the highs (above 4kHz). However, this will raise the overall volume as well. How do we fix that? Attenuate the mid and low-mid frequencies, of course! The mids (1-2kHz) often make a sound seem close and loud, which to a degree masks the high and low frequencies. For certain musical genres, the low-mids mentioned here can be replaced with the lows, depending on your needs. So, the most effective treatment for volume and loudness isn't playing with volume levels, but cutting some of the mids (1-2kHz) or low-mids (around 500Hz). This way, the highs and lows of your bass can show their muscles without you having to boost the low and high frequencies much. At this point, you can even consider attenuating the bass below 52Hz and leaving that range to other instruments. Oh well... this is already about mixing. Yes! But it's a skill that almost every sound designer must study and master. Mixing is not about pushing faders; it's mainly about solving the loudness, depth, and spatial issues of every instrument by using EQ. And you have to try different EQs for different tasks, since there can never be too many types of EQ processors.
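The "cut the mids instead of boosting everything else" move can be sketched with a peaking EQ. The coefficients below follow Robert Bristow-Johnson's Audio EQ Cookbook, a widely used public formula set swapped in here for illustration rather than the author's own tool; the 1.5kHz center, -6dB gain, and Q of 1.0 are assumed values for the 1-2kHz region, and the function names are hypothetical.

```python
import cmath
import math


def peaking_eq(f0: float, gain_db: float, q: float, fs: float) -> tuple[float, ...]:
    """Peaking-EQ biquad (RBJ Audio EQ Cookbook), normalized so a0 = 1.

    Returns (b0, b1, b2, a1, a2).
    """
    a = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha / a
    return ((1.0 + alpha * a) / a0, (-2.0 * cos_w0) / a0, (1.0 - alpha * a) / a0,
            (-2.0 * cos_w0) / a0, (1.0 - alpha / a) / a0)


def magnitude_db(coeffs: tuple[float, ...], f: float, fs: float) -> float:
    """Filter gain in dB at frequency f, evaluated on the unit circle."""
    b0, b1, b2, a1, a2 = coeffs
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1
    h = (b0 + b1 * z1 + b2 * z1 * z1) / (1.0 + a1 * z1 + a2 * z1 * z1)
    return 20.0 * math.log10(abs(h))


def biquad(x: list[float], coeffs: tuple[float, ...]) -> list[float]:
    """Filter a list of samples (Direct Form I)."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for s in x:
        y = b0 * s + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, s
        y2, y1 = y1, y
        out.append(y)
    return out


if __name__ == "__main__":
    fs = 48000
    mid_cut = peaking_eq(1500.0, -6.0, 1.0, fs)  # cut the 1-2 kHz region by 6 dB
    for f in (100.0, 1500.0, 8000.0):
        print(f, round(magnitude_db(mid_cut, f, fs), 2))
```

The cut is exactly -6dB at the center and fades toward 0dB far from it, so the lows and highs are left alone while the masking mids come down.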

Let me share another example, a story about 1kHz and 500Hz. The intensity of most sounds is concentrated around 500-1000Hz, and around 250Hz for more powerful sounds. If you want a sound to seem loud but not too in-your-face, try cutting around 1000Hz. First, do a quick test: pull that band all the way down to see whether the approach works for this particular sound, then slowly bring it back up if it proves effective. Avoid bit-by-bit attenuation at all costs. Such gradual changes tend to dull your own attention, leaving you clueless in the end! If you want a sound to be punchy (low frequencies) yet tough (mids around 1000Hz) without masking quiet sounds, aim directly at 500Hz, usually somewhere between 250 and 500Hz.

You need to realize that every sound has a major frequency band in the power-spectrum sense. Once you touch this band, the overall loudness of the sound changes. In other words, this band is what matters, and everything else can be cleaned up as needed! For example, 500Hz is crucial to the timbre of an acoustic-electric guitar, but what if the guitar is used just for rhythm? Cutting 500Hz wouldn't affect its musical function, so why not cut it? In fact, in a lot of music it's common practice to cut everything below 500Hz from a rhythm acoustic-electric guitar. An important message comes out of this story: every asset serves as part of the mix. An asset's unique trait is why the asset exists (otherwise, why keep it?). We can choose to keep the trait or modify it, but either way, we should keep the part of the sound that contributes the most to the whole, and treat everything else as less significant and, if needed, disposable. If you want to keep something, first ask yourself: must I? Then consider: can the less significant frequency bands serve as a valid contrast?
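To find an asset's major frequency band in the power-spectrum sense, you can sum spectral power into bands and pick the strongest one. This Python sketch assumes nominal octave bands and uses a naive DFT so it stays self-contained (it is O(N²); a real tool would use an FFT). Defining the "major band" as the octave with the most power is our simplifying assumption, and the names are hypothetical.

```python
import cmath
import math

OCTAVE_CENTERS_HZ = [63, 125, 250, 500, 1000, 2000, 4000, 8000, 16000]


def band_powers(frame: list[float], sample_rate: int) -> dict[int, float]:
    """Sum DFT power into octave bands spanning center/sqrt(2) .. center*sqrt(2)."""
    n = len(frame)
    powers = {c: 0.0 for c in OCTAVE_CENTERS_HZ}
    for k in range(1, n // 2):  # skip DC, stop below Nyquist
        freq = k * sample_rate / n
        for c in OCTAVE_CENTERS_HZ:
            if c / math.sqrt(2) <= freq < c * math.sqrt(2):
                x_k = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
                powers[c] += abs(x_k) ** 2
                break
    return powers


def major_band_hz(frame: list[float], sample_rate: int) -> int:
    """Center frequency of the octave band holding the most power."""
    powers = band_powers(frame, sample_rate)
    return max(powers, key=powers.get)


if __name__ == "__main__":
    fs, n = 8000, 512
    frame = [math.sin(2 * math.pi * 500 * t / fs) + 0.3 * math.sin(2 * math.pi * 2000 * t / fs)
             for t in range(n)]
    print(major_band_hz(frame, fs))  # 500
```

Once the major band is known, everything outside it becomes a candidate for cleanup, as with the rhythm guitar above.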

Here is another fun story. Many adults' hearing range cannot actually reach 20kHz. In my case, it has topped out at around 18.5kHz in recent years, yet a sine wave at 18kHz already gives my ears a needle-sharp sensation. Also, the sound of fingernails scratching a whiteboard drives most people crazy, just as a very loud sound would, even though an SPL meter would show that its level is not that high. It can have high transients, but its RMS is low.
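The gap between transient peaks and RMS is easy to quantify as a crest factor. In this Python sketch, levels are in dBFS assuming float samples with full scale at 1.0, and the "scratch" signal is a synthetic stand-in (sparse sharp spikes), not a real recording; the function name is hypothetical.

```python
import math


def peak_rms_crest_db(signal: list[float]) -> tuple[float, float, float]:
    """Peak level and RMS level (both dBFS, full scale = 1.0) and crest factor (dB)."""
    peak = max(abs(s) for s in signal)
    rms = math.sqrt(sum(s * s for s in signal) / len(signal))
    return 20.0 * math.log10(peak), 20.0 * math.log10(rms), 20.0 * math.log10(peak / rms)


if __name__ == "__main__":
    tone = [math.sin(2 * math.pi * i / 100) for i in range(1000)]
    scratch = [1.0 if i % 100 == 0 else 0.0 for i in range(1000)]  # sparse, sharp transients
    print([round(v, 1) for v in peak_rms_crest_db(tone)])  # [0.0, -3.0, 3.0]
    print([round(v, 1) for v in peak_rms_crest_db(scratch)])  # [0.0, -20.0, 20.0]
```

Both signals hit the same peak, but an RMS-based reading puts the spiky one 17dB lower, even though its transients are what the ear reacts to.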

How you look at frequency responses largely depends on your mixing skills and experience. When mixing, we care about the overall presentation of the works first, with all the tweaks serving as a part of the big picture. Whether it's for a game, film, TV show, or a piece of music, the role of each element determines where the element should be placed and how prominent it needs to be made.

Rhythm and Movement

Most sounds we experience are complex, and they move. Their rhythms and movements can affect how we perceive their loudness. For instance, in many films there is a short gap of 20-80ms before a spectacular explosion (usually in a panoramic shot); even the music and dialogue stop abruptly. You then find the subsequent explosion extremely loud. But if you extracted the assets and measured their volume level or even RMS, you would find them much lower than the perceived loudness suggests.
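The film trick can be sketched on the bed rather than on the explosion. In this Python sketch, the bed (music, dialogue, ambience) and the hit are assumed to be separate buffers, and `carve_gap` silences the bed for `gap_ms` right before the hit; the 5ms declick fade, the resume-at-the-hit behaviour, and all names are hypothetical choices, with the 20-80ms range taken from the text.

```python
def carve_gap(bed: list[float], hit_index: int, gap_ms: float, sample_rate: int,
              fade_ms: float = 5.0) -> list[float]:
    """Silence the bed for gap_ms right before hit_index.

    A short linear fade-out precedes the gap to avoid a click; the bed resumes at hit_index.
    """
    gap = int(round(gap_ms * sample_rate / 1000.0))
    fade = int(round(fade_ms * sample_rate / 1000.0))
    gap_start = max(0, hit_index - gap)
    fade_start = max(0, gap_start - fade)
    out = list(bed)
    for i in range(fade_start, gap_start):
        out[i] *= 1.0 - (i - fade_start + 1) / (gap_start - fade_start)
    for i in range(gap_start, hit_index):
        out[i] = 0.0
    return out


if __name__ == "__main__":
    fs = 1000  # low rate keeps the numbers readable
    out = carve_gap([1.0] * 200, hit_index=150, gap_ms=50, sample_rate=fs)
    print(out[94], out[95], out[120], out[150])  # 1.0 0.8 0.0 1.0
```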

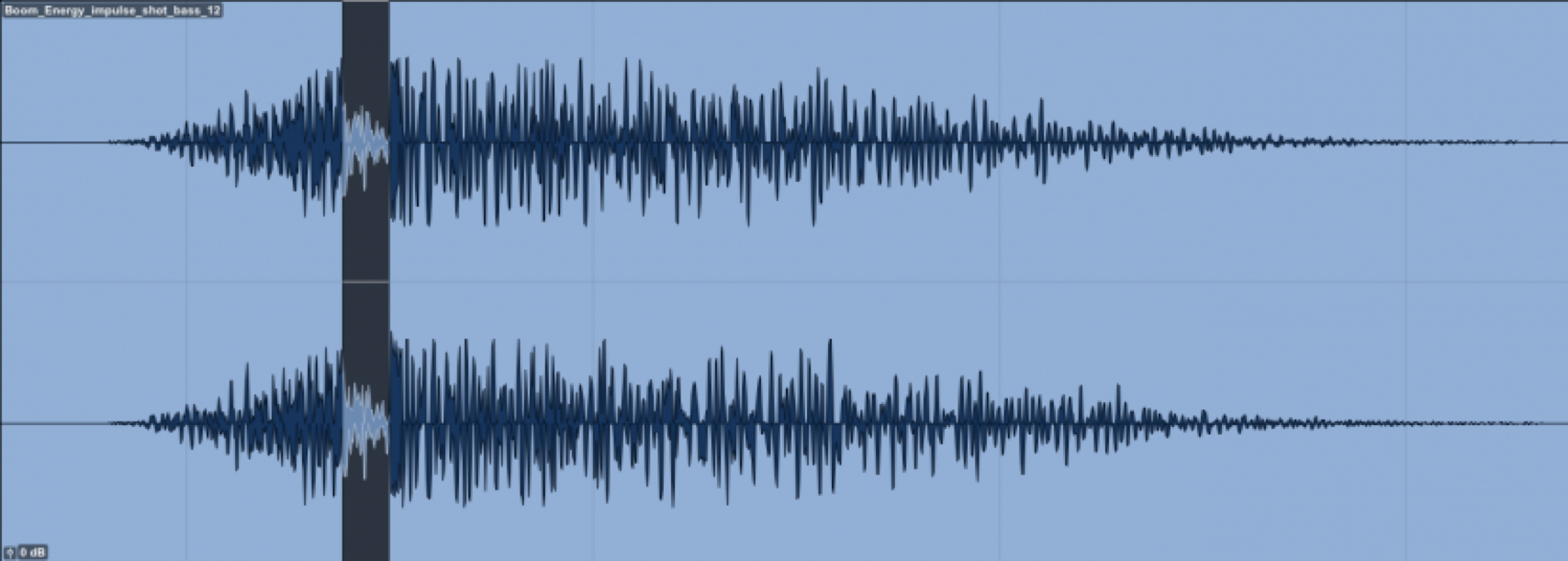

The principle behind this effect is very simple: dynamics. In acoustics, dynamics is defined as the difference in volume. You could simplify that to 'the difference between peak levels', but as loudness measurement has matured, we now use Loudness Range (LRA) as the loudness-difference metric that describes dynamics. This approach is widely used, especially for huge explosions, for stingers with a reversed or growing sound, and for keeping the intensity of subsequent impacts under control. Sometimes the peaks of the subsequent sounds don't need to be high to create an illusory sense of loudness. The trick lies in controlling the peak difference between neighbouring segments and the duration of the gap. In many Hollywood movies, the gap can be as long as 1-2 seconds. Such a dramatic pause can have a great aural impact.
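LRA is defined in EBU Tech 3342. The Python sketch below assumes you already have the programme's short-term loudness values (3s windows, measured per ITU-R BS.1770, which is not implemented here) and follows the spec's outline: an absolute gate at -70 LUFS, a relative gate 20 LU below the power average of what remains, then the spread between the 10th and 95th percentiles. The percentile interpolation is our own choice and may differ slightly from reference meters; the names are hypothetical.

```python
import math


def percentile(sorted_vals: list[float], p: float) -> float:
    """Linearly interpolated p-th percentile (0-100) of an ascending list."""
    pos = (len(sorted_vals) - 1) * p / 100.0
    lo = math.floor(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)


def loudness_range_lu(short_term_lufs: list[float]) -> float:
    """Simplified Loudness Range (LRA) from short-term loudness values in LUFS."""
    gated = [v for v in short_term_lufs if v > -70.0]  # absolute gate: -70 LUFS
    if not gated:
        return 0.0
    mean_power = sum(10.0 ** (v / 10.0) for v in gated) / len(gated)
    relative_gate = 10.0 * math.log10(mean_power) - 20.0  # relative gate: 20 LU below
    kept = sorted(v for v in gated if v > relative_gate)
    return percentile(kept, 95.0) - percentile(kept, 10.0)


if __name__ == "__main__":
    # -30..-20 LUFS of programme, plus digital silence and a near-silent moment
    scene = [float(v) for v in range(-30, -19)] + [-80.0, -60.0]
    print(loudness_range_lu(scene))  # 8.5
```

The gates keep the silent gap itself from inflating the figure, so LRA describes the contrast between the loud segments rather than the pause.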

A difference between the major frequency bands of consecutive sounds can create a very noticeable impact. Think about drum beats: the basic constituents are a bass drum and a snare drum, whose major frequency bands differ greatly. Even at the same peak or RMS level, a sensible sequence of the two can still sound loud enough. Drum 'n' Bass and Hip Hop are classic examples of this. Here are two samples: the second half of each is the same stinger, but the reversed first half uses a very different major frequency band. We can easily feel the difference in the results:

In the image above, the highlighted dark part marks the gap, about 100ms.

Feel the loudness difference with the stinger alone:

Then, with the stinger preceded by an intro and a gap:
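Assembling such a test sample is simple. This Python sketch assumes the intro and stinger are already-loaded sample lists; the `intro_gain_db` offset is our addition for controlling the peak difference between the neighbouring segments, and the 100ms default gap matches the figure mentioned above. All names are hypothetical.

```python
def build_stinger_sequence(intro: list[float], stinger: list[float], sample_rate: int,
                           gap_ms: float = 100.0, intro_gain_db: float = 0.0) -> list[float]:
    """Reversed (and optionally attenuated) intro, then gap_ms of silence, then the stinger."""
    gain = 10.0 ** (intro_gain_db / 20.0)
    reversed_intro = [s * gain for s in reversed(intro)]
    gap = [0.0] * int(round(gap_ms * sample_rate / 1000.0))
    return reversed_intro + gap + list(stinger)


if __name__ == "__main__":
    seq = build_stinger_sequence([0.1, 0.2, 0.3], [1.0, -1.0], sample_rate=1000)
    print(len(seq), seq[:3], seq[-2:])  # 105 [0.3, 0.2, 0.1] [1.0, -1.0]
```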

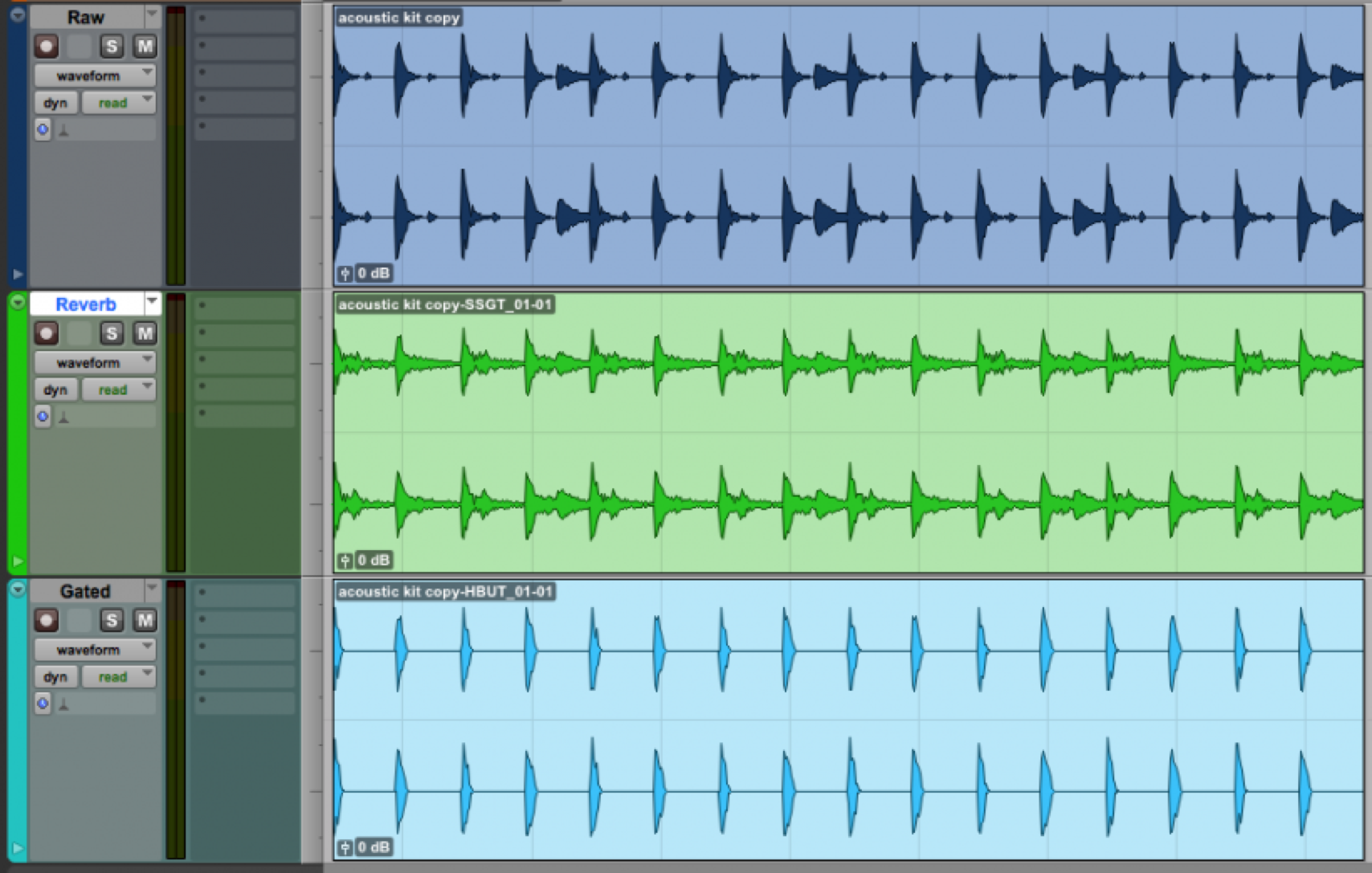

Let's take a look at these beats: [The first row is the original sound]

A. Row #2: with a long reverb, the timbres are obviously glued together:

B. Row #3: without reverb, there is no obvious glue, and they are even separated using gating:
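Here is a minimal hard gate in Python, to show how gating separates hits. A real gate adds attack and release ramps and usually follows a smoothed envelope rather than raw samples; the threshold, the hold length, and the name `noise_gate` are assumptions.

```python
def noise_gate(signal: list[float], threshold: float, hold_samples: int = 0) -> list[float]:
    """Hard gate: pass samples while the input is at or above threshold, keep passing
    for hold_samples afterwards, and output silence otherwise."""
    out: list[float] = []
    since_open = hold_samples + 1  # start with the gate closed
    for s in signal:
        if abs(s) >= threshold:
            since_open = 0
        else:
            since_open += 1
        out.append(s if since_open <= hold_samples else 0.0)
    return out


if __name__ == "__main__":
    hits_with_tails = [0.5, 0.01, 0.01, 0.01, 0.6, 0.02]
    print(noise_gate(hits_with_tails, 0.1, hold_samples=1))  # [0.5, 0.01, 0.0, 0.0, 0.6, 0.02]
```

Cutting the low-level tails between hits is what removes the "glue" heard in Row #2.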

Most of us know how reverb affects loudness, but we need to clearly understand that it not only affects the phases of ADSR but can also amplify certain frequency bands, which changes the listening experience. This is a compound effect. For sound designers, these factors can be analyzed and exploited. Especially when sounds are separated in time, they break aural continuity, making it difficult for the listener to judge loudness precisely or to rely on listening experience.

Although these are in essence musical beats, percussion instruments are no different from most sound effects, which usually belong to the 'noise' category. So the approach to performing percussion, creating its timbres, and mixing it is similar to creating sound effects. But with percussion, the perceived dynamics generated by rhythm, movement, and frequency differences are more noticeable than with generic 'sound effects'. Let's consider another experience: if a drummer plays at an unstable tempo, or even with unstable dynamics, the hits feel abrupt, and you may even find the wrong notes extremely loud. This phenomenon proves that loudness is very subjective, empirical, and selective.

There are many other situations and approaches that I am not covering here. In fact, the approaches you choose will depend on how you understand and imagine sounds, and on your courage to experiment. There is no right or wrong, only good and better. I suggest that you practice mixing, whether multitrack mixing for music or for film soundtracks. In the long run, you will acquire a lot of skills and experience from it.

All the above is for further discussion at your discretion. Please feel free to correct me where I'm wrong and it will be greatly appreciated!
