atmoky Ears is the one-stop solution for rendering hyper-realistic spatial audio experiences to headphones. It provides an unparalleled combination of perceptual quality and efficiency, whilst getting the best out of every spatial audio mix. atmoky Ears puts the listener first and offers a patented perceptual optimization. For those who want to squeeze the very last drop of performance out of their devices, atmoky Ears delivers the boost that makes the difference.

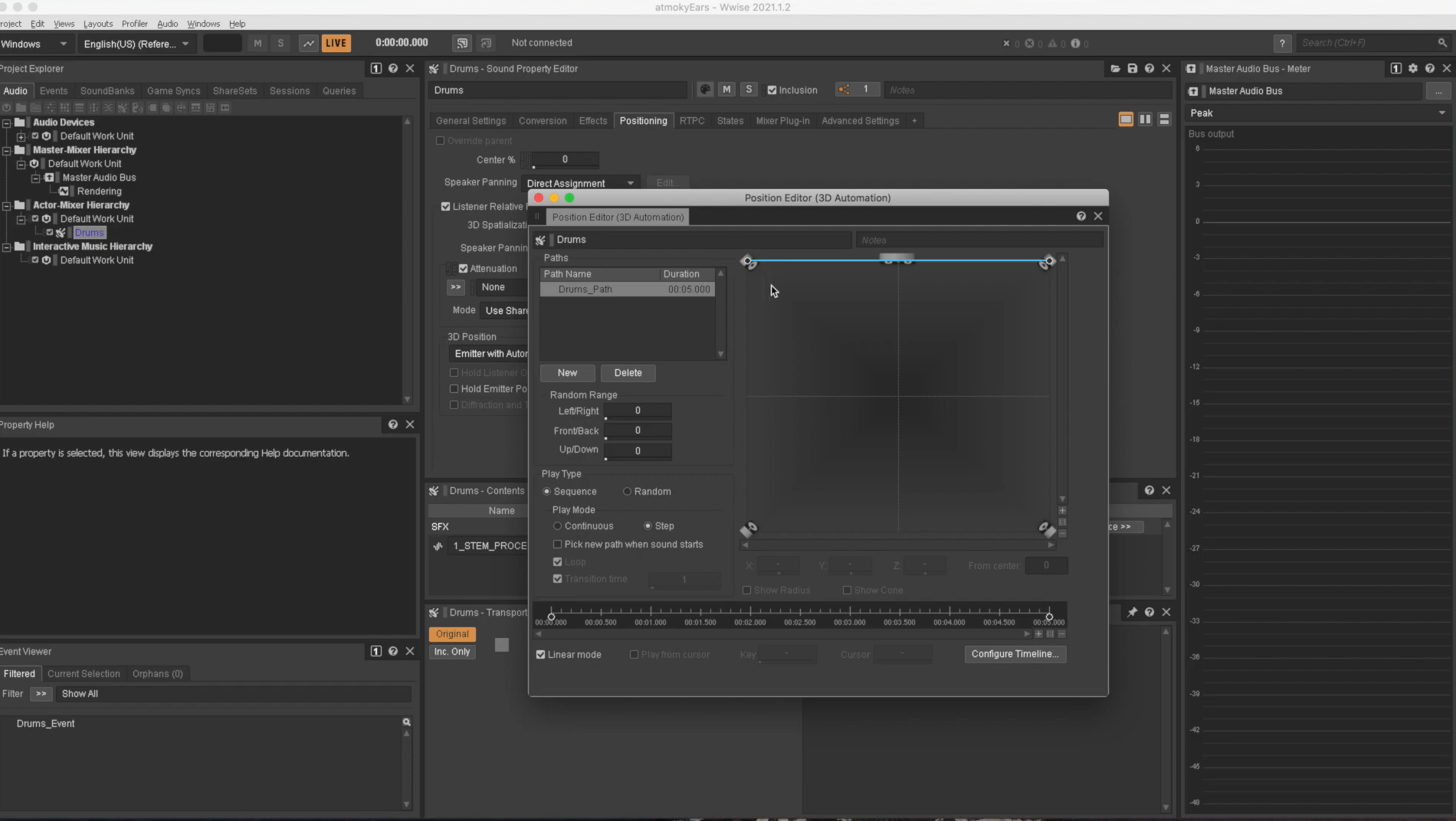

atmoky Ears | Authoring Tool

atmoky Ears | Why We Created This Plug-in!

In real life, we can distinguish between up to 1000 directions from all around us. This information lets us learn a great deal about the space we are in and the ‘acoustic scene’ around us, even as we move freely through our environment. We hear three-dimensionally.

To match this depth of auditory understanding in a virtual environment and create a hyper-realistic spatial audio experience, we need to provide high, convincing spatial resolution and plausible room characteristics, while incorporating head movements into the rendering. In short: dynamic rendering for the win!

With this dream in our pockets, we set out to create a single plugin that renders all flavours of spatial audio to headphones and fulfills all our wishes.

Throughout the creation journey, we crossed a lot of different paths, listened with a lot of different ears, and realized that it is quite challenging to come up with a solution that meets all of our expectations, especially when computational power is limited. Spoiler: in the end we nailed it :)!

Here is what we have learned on our journey!

Dynamic Rendering Is Essential

Have you ever listened to a loudspeaker in a room and while you were moving your head the loudspeaker moved with you? No? Or it moved with you and then moved back to its original position? Also no? Or somehow the timbre of the sound source changed significantly?

Neither have we! But over the years we have had all of those experiences with head-tracked binaural rendering.

If the sound source moves along as you rotate your head, the rendering is obviously not dynamic. In our experience, dynamic rendering is essential for interactive spatial audio because it gives the feeling of being immersed in a scene. To be clear, we believe dynamic rendering should be used whenever possible. Luckily, Wwise provides exactly this functionality.
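To make the difference between static and dynamic rendering concrete, here is a minimal sketch of the head-rotation compensation at its core. It is not atmoky's or Wwise's implementation; it assumes only horizontal head rotation (yaw) is tracked, and the function names and the angle convention (degrees, 0 = straight ahead, positive = to the listener's left) are our own illustrative choices.

```python
# Minimal sketch of head-rotation compensation (yaw only).
# Assumed convention: azimuths in degrees, 0 = straight ahead,
# positive = counterclockwise (to the listener's left).


def wrap_degrees(angle: float) -> float:
    """Wrap an angle to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def static_azimuth(source_az_world: float, head_yaw: float) -> float:
    """Static rendering: head movement is ignored, so the source keeps
    the same angle relative to the head, i.e. it 'moves along'."""
    return wrap_degrees(source_az_world)


def dynamic_azimuth(source_az_world: float, head_yaw: float) -> float:
    """Dynamic rendering: subtract the head yaw so the source stays
    fixed in the world while the head rotates."""
    return wrap_degrees(source_az_world - head_yaw)


if __name__ == "__main__":
    source = 0.0  # a loudspeaker straight ahead in the room
    for yaw in (0.0, 30.0, 90.0):
        print(f"head yaw {yaw:5.1f} deg -> "
              f"static {static_azimuth(source, yaw):6.1f}, "
              f"dynamic {dynamic_azimuth(source, yaw):6.1f}")
```

With dynamic rendering, turning your head 30° to the left moves the loudspeaker to −30°, i.e. 30° to your right, just as a real loudspeaker would; the static version keeps it at 0°, glued to your nose. In a full renderer, the compensated direction would then be used to pick the HRTF pair or to rotate a scene-based mix before binauralization.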

A common problem with dynamic rendering is the latency of the head trackers themselves: welcome to the uncanny valley. Unfortunately, we are not hardware manufacturers and have no control over wireless transmission protocols, so we can’t solve that problem, but we are looking forward to 5G.

What atmoky Ears does solve, thanks to its perceptually optimized rendering, is direction-dependent coloration. The timbre of a sound source is not unnaturally altered as we rotate our heads or as the source moves around us, so trajectories and directions are perceived as intended, just as they would be in reality.

Getting an "out-of-the-head" Experience

Have you listened to a loudspeaker in an anechoic chamber or a non-reflective environment and closed your eyes?

We have, and while we could locate the loudspeaker we couldn’t really grasp how far away it was.

What does this have to do with spatial audio or immersive audio?

Without room reflections, our ears lose an important cue for judging distance, which is exactly what happens in an anechoic chamber. Externalizing sounds therefore requires placing their sources in a virtual room, a simplified room, or something similar. This is why we chose to include an externalizer in atmoky Ears that is optimized for an “out-of-the-head” feeling while preserving the characteristics of the mix and the sound quality.

An Experience for All Generations

Have you ever asked a child where they think the audio source is when listening to a virtual audio scene?

We have. Unsurprisingly, we found that hearing differs considerably across age groups. People have diverse preferences and strengths, and they come in different shapes and sizes. This individuality matters when it comes to spatial audio, so we created our own atmoky HRTF sets, optimized for crisp, high-quality audio and adjusted to the listening preferences of different age groups. The atmoky Ears personalization allows you to deliver customized mixes for children, teens, and adults.

We bet that there are games and projects out there that are intended for specific age groups. Educational virtual reality games and anxiety therapies, in particular, can benefit from our personalization presets.

Efficiency Is Key

Have you ever tried to tell an immersive spatial audio story with nothing but a pair of headphones and a smartphone?

Neither have we! But we know someone who did.

We incorporated feedback from a project created by Thomas Aichinger (his story follows below) and came up with a unique optimization that, by design, lets you trade perceptual quality against computational effort. For especially demanding setups with limited hardware capabilities and stricter battery requirements, we added a performance mode that reduces the plug-in’s memory and CPU requirements by up to 50%.

There Are Many Flavours In The Ice Cream Store

There are so many spatial audio formats and conventions, so many flavours, and we like them all. Accordingly, atmoky Ears renders scene-based formats as well as standard channel-based layouts, from mono up to 13.1 configurations.

Although we like all spatial audio flavours, our hearts beat for the variant that supports rendering sources from all directions (including above and below you) while being layout-agnostic.

There Are Many Platforms Out There

atmoky Ears is available on macOS, Windows, Linux, iOS, and Android, and we plan to extend support to other platforms soon. If a platform for your project is missing, please drop us an email and we will take care of it as soon as possible.

atmoky Ears - For Location-Based Augmented Audio Experiences

Have you ever tried to tell an immersive spatial audio story with nothing but a pair of headphones and a smartphone?

We haven’t, but we know someone who did. Here’s the full story.

By Thomas Aichinger, scopeaudio

Time travelling in a DeLorean is iconic, but imagine you could time travel acoustically with nothing more than a pair of headphones and a smartphone. Quite awesome, right?

We at scopeaudio thought so as well and, together with hoeragentur, came up with an immersive augmented audio experience called Sonic Traces. The stage is Heldenplatz in Vienna, where we left audible marks that lead users along various paths through history, allowing them to dive into it or experience new acoustic worlds.

Creating alternate virtual acoustic realities with sounds and voices that you can walk through (6DoF) is key to our work. Technology is used to support our new way of storytelling – not the other way around! However, we came across various technological challenges and constraints during the process. Some of them relate to tracking users on location; others relate directly to rendering the spatial audio content.

Obviously, there is always a trade-off between perceptual quality and computational effort. But on a smartphone, with its limited computational resources, we had to adjust and tune very carefully to achieve satisfying results.

We shared our experiences with the atmoky team, and they incorporated our feedback into the atmoky Ears plugin. We tested it and were thoroughly convinced. It offers the optimal trade-off between perceptual quality and computational effort, which makes atmoky Ears the go-to solution for all our applications and games that aim to offer the highest spatial audio quality, on mobile devices and powerhouses alike.

But the plugin doesn’t only stand out in terms of efficiency. We also highly recommend using the externalizer as a kind of ground layer to achieve the out-of-the-head experience. As a preset, we suggest setting the amount to 44 and the character to 71!

Testimonial by Michael Iber

“Externalization is an essential attribute for a realistic projection of 3D audio to human ears via headphones. Based on the binauralization algorithms of the well-known IEM plugins, atmoky Ears is a unique tool that provides an adjustable degree of externalization depending on the scene and the attributes of the sound sources used. Thus, a player’s movements become acoustically highly realistic, enhancing the overall impression of auditory envelopment and immersion. I have tested the easy-to-implement plugin in different environments and am quite stunned by the results.”

By Michael Iber
