Part 1: Distance Modeling and Early Reflections

Part 2: Diffraction

Part 3: Beyond Early Reflections

A lot has happened in Spatial Audio in the lead-up to the release of 2019.2. One key development was the revamp of our ray casting engine. Drawing inspiration from lighting techniques, some of which were presented by our teammate Christophe Tornieri at AES NY last fall [1], we made it scale much better with respect to the number of triangles, emitters, and reflection order. Another key change with 2019.2 is that Spatial Audio is now better integrated in Wwise, and easier to set up for both programmers and designers, offering more ways to be creative. You may have seen this presented at the WWWOE by our teammate Thalie Keklikian [2].

Some time before the release, the Spatial Audio Research team at Audiokinetic sat down with Simon Ashby, Head of Product, to learn about his experience re-mixing the Wwise Audio Lab using the new features. To our delight, he was quite enthusiastic and said, “It provides the listener with a world that is dynamic and contrasted. A world that is alive! That is what spatial audio promises.” Interestingly, as he described his workflow, he also said that he was “in control of the mixing rules, but the system has the final word.”

This sparked a reflection on our approach to spatial audio and the apparent dichotomy of sound design versus physical accuracy. Indeed, Wwise Spatial Audio is resolutely “Wwise” because it gives a lot of control to the sound designer. But sound propagation obeys immutable laws of physics. So, why do we care about giving the user control anyway? Isn’t it just supposed to work? In this blog series, I will use practical examples to share some of the thinking behind the direction we decided to take with Wwise Spatial Audio.

The Case of Distance Modeling

A common and basic example of where designers stray from the laws of physics in order for their game to sound good and plausible is how they model distance. Every sound engine for games provides a way to determine how distance affects a sound. However, the physics of sound attenuation is well known, and is the same for all sounds. A point source’s attenuation follows an inverse square law: its intensity is proportional to 1/r², so its amplitude is proportional to 1/r, or in other words, it drops by 6 dB every time the distance doubles. This is because the energy is spread out over a growing area as it is radiated outwards [3]. At larger distances the energy spread is less significant, and attenuation due to air absorption takes over [4].
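To make the 6 dB figure concrete, here is a minimal Python sketch of the spreading loss of an ideal point source in free field. The function name and the 1 m reference distance are assumptions made for illustration, and air absorption is deliberately left out.

```python
import math

def spreading_loss_db(distance_m: float, reference_m: float = 1.0) -> float:
    """Level change (dB) at distance_m relative to reference_m.

    Assumes an ideal point source in free field: amplitude ~ 1/r.
    Air absorption is not modeled.
    """
    if distance_m <= 0 or reference_m <= 0:
        raise ValueError("distances must be positive")
    return 20.0 * math.log10(reference_m / distance_m)

# Each doubling of the distance lowers the level by about 6 dB.
for d in (1, 2, 4, 8, 16):
    print(f"{d:>3} m: {spreading_loss_db(d):6.1f} dB")
```

A curve like this is the physical baseline against which a designer's hand-drawn attenuation curve can be compared.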

Yet, designers use different curves for different sounds, and these curves are often not physically accurate. In fact, distance attenuation is an important sound design tool, and we can speculate on a few reasons as to why that is.

Game Play

Game play is more important than physical accuracy. For example, dialog curves often have little (or flat) attenuation, so that they are intelligible from afar.

Limited Dynamic Range

Distance curves also help mitigate the constraints of limited dynamic range. Real-world sounds have a very large dynamic range (from 0 to 190 dB SPL), but virtual worlds are put in a box where audio is rendered through your speakers or headphones. Designers will more than likely edit distance-based volume curves differently depending on whether sounds are very loud or quiet.

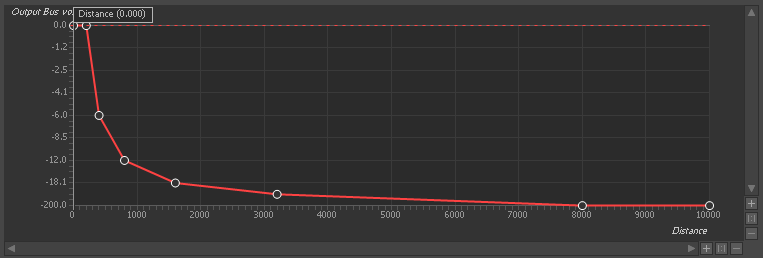

Figure 1 - Example curve of a loud sound. While the curve (a) would be more accurate, a designer may choose to dial a curve more like (b), in order to “squash” the very loud part into a dynamic range that is more appropriate for rendering in their players’ sound systems.

World Scale

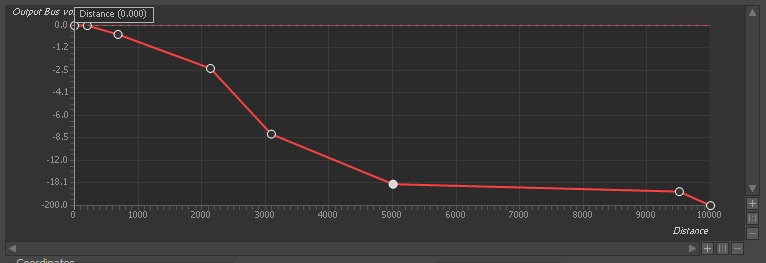

The scale of the 3D world is optimized to look good, not sound good. For example, in the Wwise Audio Lab [5], what looks like a regular living room is about 12 meters deep. Pretty large. Also, the player walks at 7.5 m/s and runs at 18 m/s!

Figure 2 - This living room from the Wwise Audio Lab is 12 meters deep, according to the geometry’s coordinates.

Recorded Sounds

Recorded sounds have some of their environment “baked in”. When authoring a sound, we make it sound like we hear it, or at least how we would like to hear it, and it is sometimes hard to dissociate the part that is strictly due to the physical process from the part that is due to propagation and the environment. It is well known that the amount of reverberation present in a sound, measured by the direct-to-reverberant ratio (DRR), is an important cue for perceiving distance [6]. Indeed, as a listener moves away from a source, they typically perceive the source more via its reflections than via its direct wave. If you have ever been in an anechoic room, you will have noticed that your typical sonic landmarks are absent there.

We would rarely import sounds recorded in an anechoic chamber, but game systems will generally add reverberation based on the environment. This would have repercussions on the distance attenuation curves that are used for the direct sound and/or the dry-wet ratio (that is, the “signal flow equivalent” of DRR).
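To see why the DRR works as a distance cue, consider a textbook idealization of a room: the direct sound follows the inverse square law, while the reverberant field has the same energy everywhere. The sketch below rests on that assumption only; it is not how Wwise computes anything, and the 3 m critical distance (the distance at which direct and reverberant energy are equal) is a made-up value.

```python
import math

def drr_db(distance_m: float, critical_distance_m: float) -> float:
    """Direct-to-reverberant ratio (dB) under a diffuse-field idealization."""
    if distance_m <= 0 or critical_distance_m <= 0:
        raise ValueError("distances must be positive")
    direct_energy = 1.0 / distance_m ** 2            # inverse square law
    reverb_energy = 1.0 / critical_distance_m ** 2   # assumed constant in the room
    return 10.0 * math.log10(direct_energy / reverb_energy)

CRITICAL_DISTANCE_M = 3.0  # hypothetical room, for illustration only

for d in (0.75, 1.5, 3.0, 6.0, 12.0):
    print(f"{d:5.2f} m: DRR = {drr_db(d, CRITICAL_DISTANCE_M):+5.1f} dB")
```

In this simplified model the DRR is positive close to the source and turns negative beyond the critical distance, which is the behavior a distance-driven dry-wet ratio tries to evoke.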

A related question to consider is why people frequently use high-pass filtering in their distance model. From a mixing perspective, as more signal is sent to the reverb, removing the low-end prevents muddying the mix [7]. However, textbooks in acoustics make no mention of dissipation of energy due to distance that is specific to the low frequency range [8].

Perhaps some of you have other ideas on how we use distance attenuation creatively in games? We would be happy to hear your thoughts.

If distance attenuation is so distinct from reality in practice, how could more complex or subtle phenomena related to acoustics possibly be physically accurate?

Early Reflections

We have touched on this subject in the previous section. As a wavefront extends from an emitter and eventually reaches an obstacle, part of its energy will be absorbed by (that is, it will continue travelling through) the material while another part will be reflected. The delay between the time of arrival of the reflection compared to the direct sound, and its direction of arrival, provide the listener with a lot of information about the shape of their environment. The first few reflections, called early reflections, thus play a critical role in our perception of the environment, and contribute greatly to immersion and externalization [9].
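The delay of a single reflection can be estimated with the classic image-source construction: mirror the emitter across the wall and measure the distance from that mirror image to the listener. The Python sketch below assumes one infinite flat wall and a speed of sound of 343 m/s. All names and positions are hypothetical, and it is only an illustration of the geometry, not the algorithm Wwise Spatial Audio runs.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at roughly 20 °C)

def mirror_across_plane(point, plane_point, plane_normal):
    """Mirror a 3D point across an infinite plane (normal need not be unit length)."""
    n_len = math.sqrt(sum(c * c for c in plane_normal))
    n = tuple(c / n_len for c in plane_normal)
    signed_dist = sum((p - q) * c for p, q, c in zip(point, plane_point, n))
    return tuple(p - 2.0 * signed_dist * c for p, c in zip(point, n))

def first_order_reflection(emitter, listener, plane_point, plane_normal):
    """Return (image source, reflected path length in m, delay vs. direct in ms)."""
    image = mirror_across_plane(emitter, plane_point, plane_normal)
    direct_m = math.dist(emitter, listener)
    reflected_m = math.dist(image, listener)
    delay_ms = (reflected_m - direct_m) / SPEED_OF_SOUND * 1000.0
    return image, reflected_m, delay_ms

# Emitter and listener 3 m apart, with a wall along the plane y = 2.
emitter = (1.0, 0.0, 0.0)
listener = (4.0, 0.0, 0.0)
image, path_m, delay_ms = first_order_reflection(
    emitter, listener, plane_point=(0.0, 2.0, 0.0), plane_normal=(0.0, 1.0, 0.0)
)
print(f"image source at {image}, path {path_m:.2f} m, delay {delay_ms:.2f} ms")
```

The direction from the listener to the image source is also the direction from which the reflection arrives, so one mirrored point gives both the delay and the direction of arrival. A real system would additionally check that the reflection point falls on the finite wall and is not occluded.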

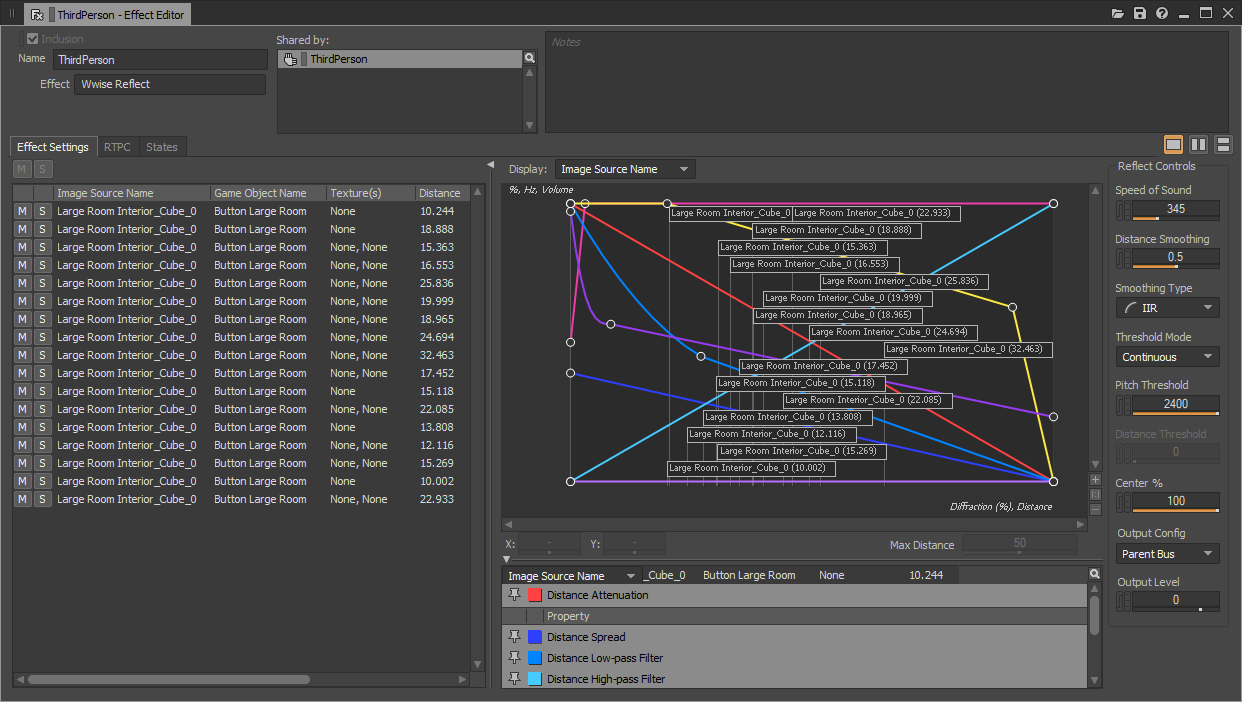

Driven by Wwise Spatial Audio, the Wwise Reflect plug-in [10,11] models these early reflections. In the words of Simon, they “provide depth and instant feedback to your position and orientation in the world by reflecting back to you the room characteristics.” Our approach with Wwise Reflect so far has been to provide the feeling of dynamism and immersion of early reflections, at a relatively low cost, computationally speaking, compared to entirely geometry-driven reverberation.

Early reflections undergo attenuation due to distance and energy loss at the interface between air and the material. It is interesting to consider how and why sound designers would also “cheat” on the accuracy of the modeling of reflections.

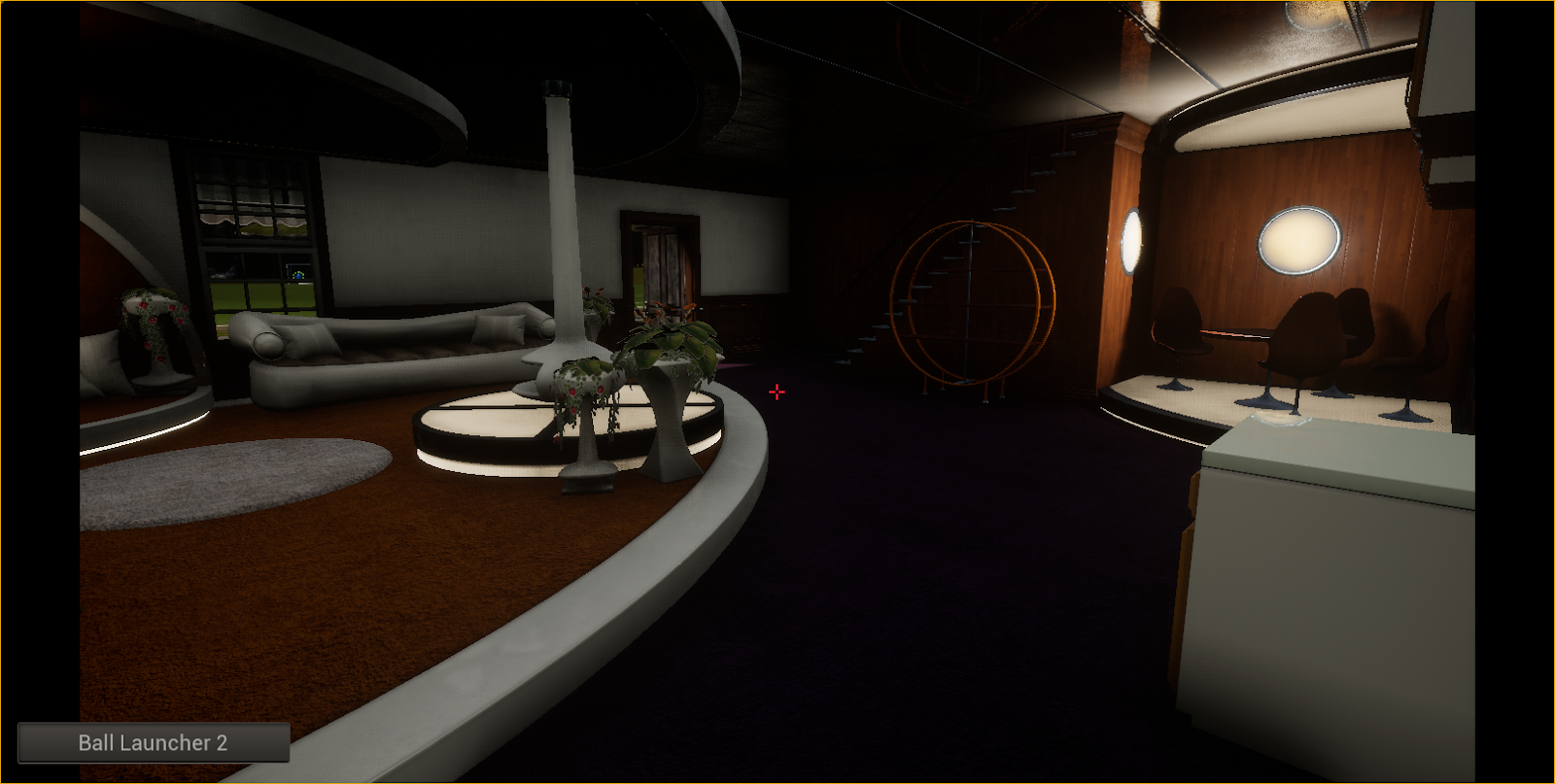

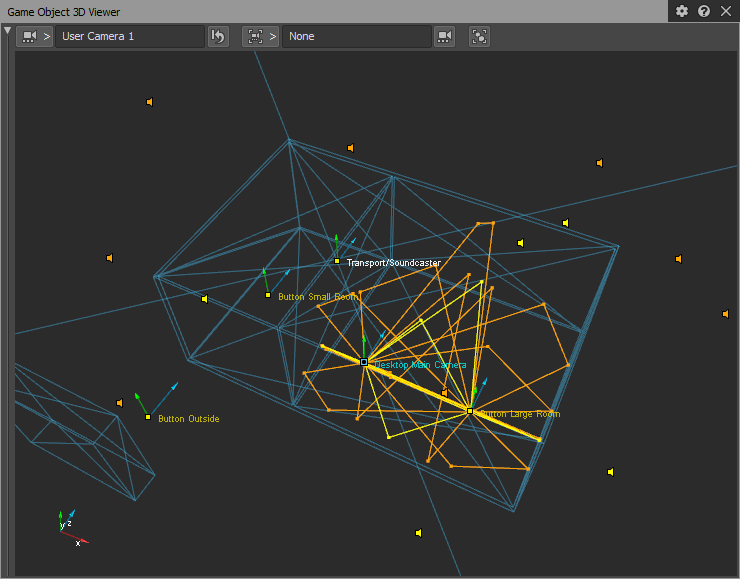

Figure 3 - Wwise Reflect renders early reflections from data calculated by Wwise Spatial Audio. (a) Early reflection paths, calculated by Wwise Spatial Audio and displayed in the 3D Game Object View; (b) Wwise Reflect UI, with corresponding image sources displayed in real-time.

Distance Attenuation

The distance attenuation of reflections is customized for the same reasons as that of direct sounds. Wwise Reflect offers its own set of curves for this. One reason is structural: it is difficult for a plug-in existing on a bus to peek at the Attenuation ShareSet of its source, and this is complicated by the fact that it can have multiple sources with different customized distance attenuations. However, with the work done in Wwise 2019.2, we are getting closer to working around this problem. The other reason is that designers don’t necessarily want these curves to be the same as those of the direct sound.

It is not obvious why audio creators often feel the need to author the distance attenuation of early reflections differently than they would author that of the dry sound, or how the former relates to the latter. I conjecture that in some cases the DRR may have something to do with it. This study [12] suggests that the first few reflections play a dominant role in the perception of distance, and the signal produced by Wwise Reflect definitely contributes to the “reverberant side” of the DRR. However, Reflect is exempt from the traditional “distance-driven send level” with auxiliary busses, since this simplified model does not apply to Reflect, where each reflection has its own distance. Therefore, sound designers need to compensate somewhat for the lack of a global DRR as they design the early reflections’ behavior with distance.

When close to the source, designers often make reflections quieter than they should be in order to emphasize the feeling of proximity. Conversely, when far away, designers make them louder so that they convey some sense of distance. I think that the reason for this exaggeration boils down to doing more with less. Indeed, the number of early reflections rendered by Wwise Reflect is much smaller than in reality, because of the idealization of the geometry and performance constraints. In any case, simplifying Wwise Reflect’s distance model is an open question, and the first thing to do is to understand how it diverges from the distance model of the dry sound. Consequently, we are very interested in knowing what motivates your design decisions!

Wall Absorption

Another case of doing more with less is illustrated with wall absorption. Absorption ratios per frequency band are readily available in the literature for everyday materials, and you will find that they are fairly low (or put another way, materials are highly reflective). That being said, in a room, a wave would reflect on the walls several hundred times, each time losing a bit of its energy due to material absorption. So, absorbing a few decibels per hit within a frequency band may have a significant impact on the total decay time of the reverberation and the perceived timbre of the room.

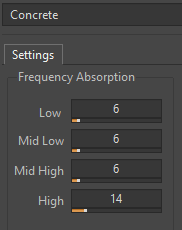

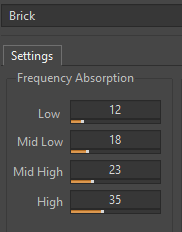

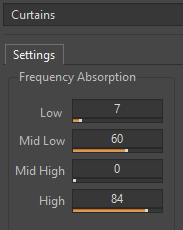

By contrast, Wwise Spatial Audio only simulates up to four reflections per wave path; before 2019.2 it would barely make it beyond one! In Wwise, material absorption is simulated with Acoustic Textures. Using Acoustic Textures, designers can exaggerate the absorption profile compared to real materials in order to emphasize the acoustic properties of the environment and thereby truly imprint the walls’ materials into the audio produced.

Absorption coefficients by frequency (Hz)

| Materials | 125 (~Low) | 500 (~Mid Low) | 2,000 (~Mid High) |
|---|---|---|---|
| Painted concrete | .10 | .06 | .09 |
| Brick | .03 | .03 | .05 |
| Heavy curtains | .15 | .55 | .70 |

Figure 4 - Curtains and Brick materials in the Factory Reflect Acoustic Textures versus real values taken from this Wikipedia page [13]. Note that coefficients in Wwise are in percentage. Concrete is fairly close, while Brick has a similar spectral shape but is generally more absorbent. As for Curtains, our artist has really gone wild!
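To get a feel for why a handful of simulated reflections cannot convey what hundreds of real ones do, the literature values listed above can be turned into a loss per reflection. The Python sketch below assumes that an absorption coefficient is the fraction of incident energy absorbed at each hit, so the reflected fraction is 1 minus that coefficient. The function name is hypothetical, and 200 hits is an arbitrary stand-in for “several hundred”.

```python
import math

def reflection_loss_db(absorption: float) -> float:
    """Energy lost (dB) in one reflection, given an absorption coefficient in [0, 1)."""
    if not 0.0 <= absorption < 1.0:
        raise ValueError("absorption must be in [0, 1)")
    return -10.0 * math.log10(1.0 - absorption)

# 500 Hz literature coefficients from the table above.
COEFFS_500_HZ = {"Painted concrete": 0.06, "Brick": 0.03, "Heavy curtains": 0.55}

for material, alpha in COEFFS_500_HZ.items():
    per_hit = reflection_loss_db(alpha)
    print(
        f"{material:17s} {per_hit:5.2f} dB per hit, "
        f"{4 * per_hit:6.2f} dB after 4 hits, "
        f"{200 * per_hit:7.2f} dB after 200 hits"
    )
```

With realistic coefficients, brick and concrete lose only a fraction of a decibel per hit, so four reflections barely color the sound. This is one way to understand why exaggerated Acoustic Textures can be a sensible design choice.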

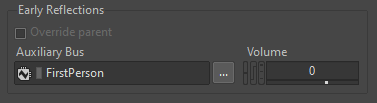

Reflection Send

New in Wwise 2019.2 are the Reflection Send properties on sounds. These let you select the desired Wwise Reflect ShareSet (and/or any other effects) for a given sound, and also dial in how much of the sound you want to feed it. But why would you want that? Physical sounds always reflect with the same strength regardless of their nature, don’t they? Well, you may want to make some sounds “reflect” more because it makes sense in terms of gameplay or narrative (think of footsteps in an empty corridor). Or, you may need to tame a sound’s reflections because it already has a lot of reverberation baked into it. You may also wish to tweak a sound’s reflections with additional signal processing. I hope this inspires you to use the inherent flexibility of the system in novel ways.

Figure 5 - Early Reflection Send in the Wwise authoring tool.

In the next blog from this series, we will continue to reflect on the direction we have been taking with Wwise Spatial Audio, and discuss practical use cases of an important acoustic phenomenon: Diffraction.
